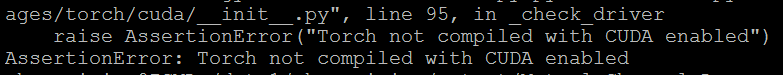

Torch Not Compiled With Cuda Enabled

Torch can use CUDA to run computations on NVIDIA GPUs. However, some installations of Torch are not compiled with CUDA enabled. This can happen for several reasons, and understanding them is crucial for users who want CUDA acceleration.

There are several reasons why Torch may not be compiled with CUDA enabled. The first is the installation itself. PyTorch is distributed in separate CPU-only and CUDA-enabled builds. If the CPU-only build was installed, for example because the install command did not select a CUDA variant, CUDA support is absent regardless of what else is installed on the system.

Another reason could be the absence of compatible GPU hardware. CUDA requires an NVIDIA GPU. If the system has no compatible GPU, or the GPU is not recognized by the driver, Torch cannot use CUDA even when a CUDA-enabled build is installed.

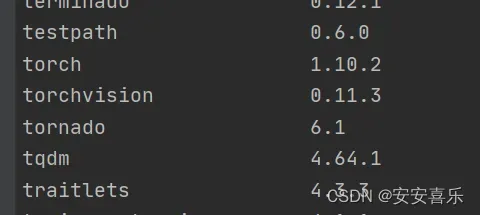

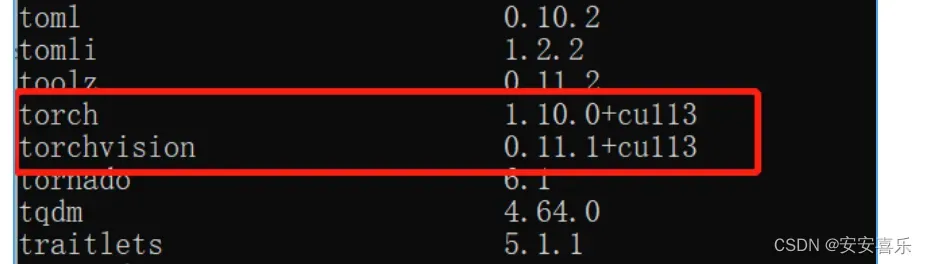

Additionally, a version mismatch between Torch and CUDA can be the cause. If the installed build of Torch is not compatible with the CUDA driver on the system, Torch falls back to CPU execution without CUDA acceleration.
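One quick hint about which kind of build is installed is the local version tag that many PyTorch wheels carry, such as `+cu118` or `+cpu`. The helper below is a minimal sketch: `describe_build` is a hypothetical name, and the assumption that a tag is present does not hold for every wheel (some report a bare version), so treat the result as a hint rather than proof.

```python
import re


def describe_build(version: str) -> str:
    """Classify a PyTorch version string by its local build tag (heuristic)."""
    # Local version tags follow a '+', e.g. "2.1.0+cu118" or "2.1.0+cpu".
    _, _, tag = version.partition("+")
    if tag == "cpu":
        return "CPU-only build"
    match = re.fullmatch(r"cu(\d+)", tag)
    if match:
        digits = match.group(1)
        # "118" -> "11.8", "121" -> "12.1": the last digit is the minor version.
        return f"CUDA build (CUDA {digits[:-1]}.{digits[-1]})"
    return "unknown (no recognizable build tag)"


for v in ("2.1.0+cu118", "2.1.0+cpu", "2.1.0"):
    print(v, "->", describe_build(v))
```

In practice you would pass `str(torch.__version__)` to the helper instead of the sample strings.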

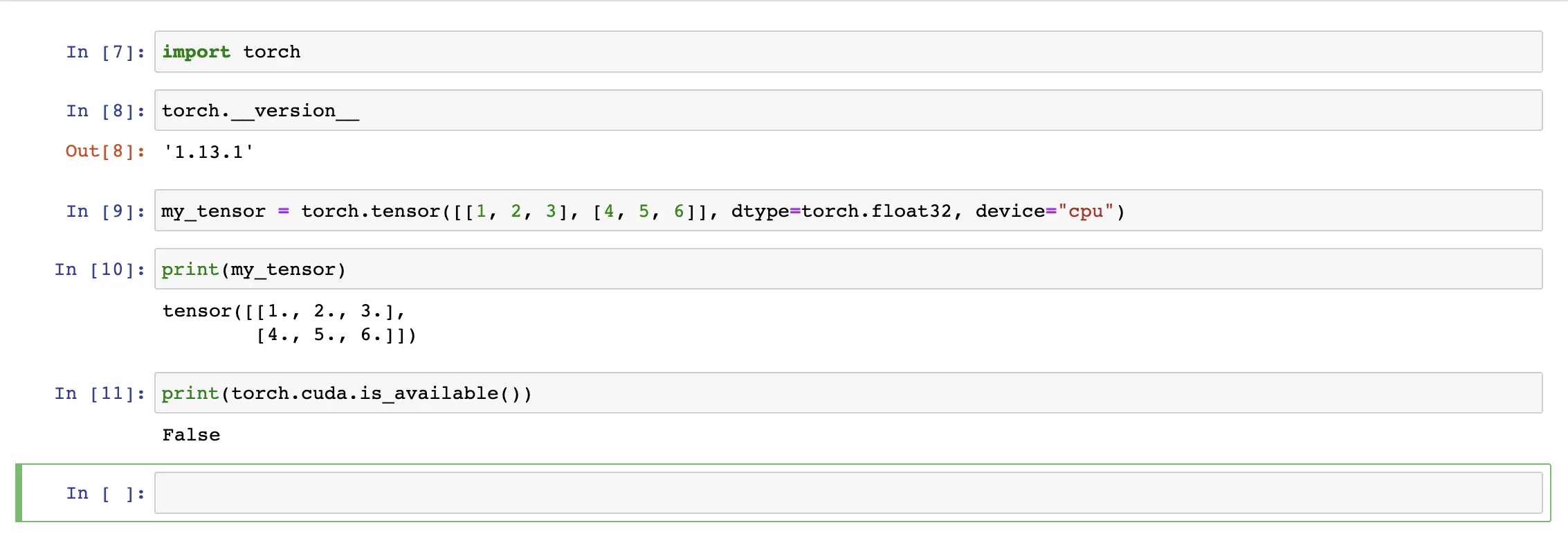

To check if Torch is compiled with CUDA enabled, you can use the following method:

```python
import torch

print(torch.cuda.is_available())
```

If the output of this code is `False`, Torch cannot use CUDA: either the installed build was not compiled with CUDA enabled, or it was but no usable GPU and driver were found.
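A `False` result alone does not say which of those cases applies. The sketch below narrows it down, assuming an NVIDIA setup; `diagnose_cuda` is a hypothetical helper name, while `torch.version.cuda`, `torch.cuda.is_available()`, and `torch.cuda.device_count()` are existing PyTorch attributes and functions.

```python
import importlib.util


def diagnose_cuda() -> str:
    """Give a one-line explanation of the current CUDA status."""
    if importlib.util.find_spec("torch") is None:
        return "PyTorch is not installed in this interpreter."
    import torch

    if torch.cuda.is_available():
        count = torch.cuda.device_count()
        return f"GPU acceleration is usable on {count} device(s)."
    # torch.version.cuda is None when the build was compiled without CUDA,
    # and a string such as "11.8" when it was compiled against CUDA.
    if torch.version.cuda is None:
        return "This build was compiled without CUDA: install a CUDA-enabled package."
    return (f"Built for CUDA {torch.version.cuda}, but no usable GPU was found: "
            "check the NVIDIA driver and the hardware.")


print(diagnose_cuda())
```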

To get Torch with CUDA support, you need to follow a few steps. First, ensure that an up-to-date NVIDIA GPU driver is installed. Then install a CUDA-enabled PyTorch package, choosing the CUDA variant in the install command from the official PyTorch website. The prebuilt CUDA packages ship the CUDA runtime libraries they need, so a separate CUDA Toolkit is generally only required when building from source.

However, users can face some common issues, particularly when building PyTorch from source. One is a mismatch between CUDA and cuDNN versions. Ensure that you have compatible versions of both installed; the official PyTorch installation instructions list the CUDA versions each release supports.

Another issue could be the absence of the necessary dependencies during the compilation process. Make sure that you have all the required libraries and dependencies installed before attempting to compile Torch with CUDA support.

If you encounter any problems during the compilation process, it is recommended to refer to the official documentation and community forums for specific troubleshooting steps.

Using Torch with CUDA enabled provides several benefits. Firstly, GPU acceleration significantly speeds up the training and inference process, reducing the time required to train complex neural networks. This is especially important for large-scale projects or tasks involving massive datasets.

Furthermore, CUDA enables Torch to exploit the massive parallel processing capabilities of GPUs, which are specifically designed for high-performance computing tasks. This results in improved performance and efficiency compared to CPU-only execution.

Users of Torch with CUDA enabled can leverage the capabilities of tools like PyCharm, an integrated development environment (IDE), to harness the potential of deep learning. PyCharm provides a seamless coding experience and debugging tools that can greatly enhance the productivity of developers working with Torch.

In conclusion, while Torch’s integration with CUDA provides accelerated computation, some installations are not compiled with CUDA enabled. This can be due to installation issues, incompatible hardware, or a version mismatch between Torch and CUDA. Calling `torch.cuda.is_available()` shows whether Torch can currently use CUDA. To enable CUDA support, ensure that compatible versions of CUDA, cuDNN, and Torch are installed correctly. When problems persist, work through the common issues above and consult the official documentation and community forums. Using Torch with CUDA acceleration offers faster training and improved performance, making it a valuable tool for deep learning practitioners.

—

FAQs:

Q: Why is Torch not compiled with CUDA enabled?

A: Torch may not be compiled with CUDA enabled due to various reasons such as incorrect installation or configuration, incompatible GPU hardware, or version mismatch between Torch and CUDA.

Q: How can I check if Torch is compiled with CUDA enabled?

A: You can use the `torch.cuda.is_available()` function. If the output is `False`, either Torch is not compiled with CUDA enabled or no usable GPU and driver were found.

Q: How can I compile Torch with CUDA support?

A: Ensure that the appropriate NVIDIA GPU driver is installed, then install a CUDA-enabled PyTorch package using the install command from the official PyTorch website. A CPU-only package does not gain CUDA support later, so it must be replaced with a CUDA-enabled one.

Q: What are the benefits of using Torch with CUDA enabled?

A: Using Torch with CUDA enabled allows for GPU acceleration, resulting in faster training and improved performance. It leverages the parallel processing capabilities of GPUs, leading to enhanced efficiency compared to CPU-only execution.

Q: Can I use Torch with CUDA enabled in PyCharm?

A: Yes, you can use Torch with CUDA enabled in PyCharm. PyCharm provides an integrated development environment (IDE) that facilitates coding and debugging deep learning applications using Torch with CUDA support.

Q: Why does `torch.cuda.is_available()` return false even with CUDA installed?

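A: If `torch.cuda.is_available()` returns `False` despite having CUDA installed, the most common cause is that the installed PyTorch package is a CPU-only build; installing the CUDA Toolkit on the system does not change that. Other causes include incompatible hardware, an outdated GPU driver, or a version mismatch between Torch and CUDA.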

Q: How to enable CUDA in PyTorch?

A: There is no switch to turn on. A CUDA-enabled build of PyTorch uses CUDA automatically when a compatible GPU and driver are present. Ensure that you have installed a CUDA-enabled version of PyTorch that is compatible with your driver.


How To Install Torch With Cuda Enabled?

If you are interested in deep learning and want to utilize the power of your NVIDIA GPU, enabling CUDA support for Torch can be a game-changer. CUDA allows you to harness the massive parallel computing capabilities of your GPU, resulting in faster and more efficient deep learning computations. In this article, we will guide you through the process of installing Torch with CUDA enabled on your system.

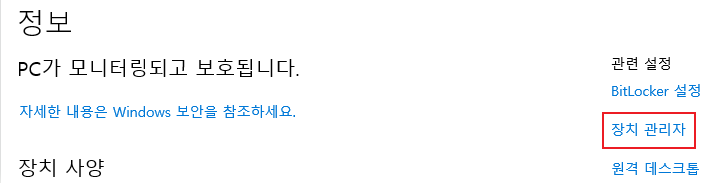

Step 1: Verify Your GPU Compatibility

Before installing torch with CUDA, it’s crucial to ensure that your GPU is compatible with CUDA. NVIDIA GPUs after 2006 should generally support CUDA, but it’s always a good idea to double-check. Visit the NVIDIA CUDA website (https://developer.nvidia.com/cuda-gpus) to confirm your GPU’s compatibility.

Step 2: Install CUDA Toolkit

To enable CUDA support, we need to install the CUDA toolkit on our system. Visit the NVIDIA CUDA Toolkit download page (https://developer.nvidia.com/cuda-downloads) and choose the appropriate installer for your operating system. The CUDA toolkit includes the necessary libraries, compilers, and tools required to utilize CUDA.

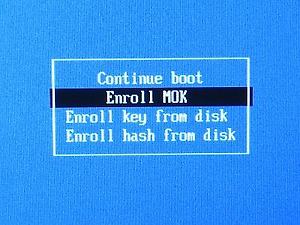

Once the download is complete, run the installer and follow the on-screen instructions. Make sure to select the appropriate options during installation. CUDA requires the GPU driver to be compatible, so if it prompts you to install an updated driver, go ahead and install it.

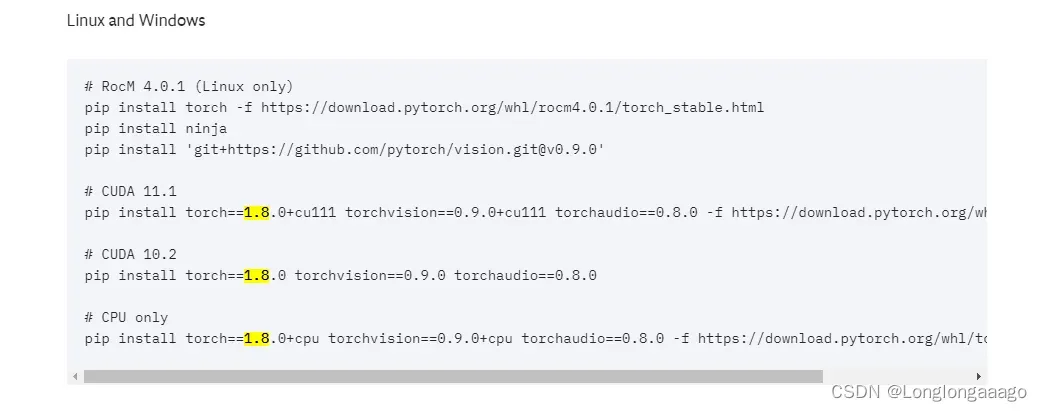

Step 3: Install Torch with CUDA

Now that we have CUDA installed on our system, it’s time to install Torch with CUDA support. Open a terminal or command prompt and execute the following commands:

$ git clone https://github.com/torch/distro.git ~/torch --recursive

$ cd ~/torch

$ bash install-deps

$ ./install.sh --with-cuda

The first command clones the Torch repository from GitHub, while the second navigates to the cloned directory. The third command installs the required dependencies for Torch, and the final command starts the Torch installation with CUDA support.

Be patient during the installation process, as it may take some time to compile the required components. Once the installation is complete, you will see a success message.

Step 4: Verify CUDA and Torch Installation

To ensure that both CUDA and Torch have been installed correctly, let’s verify them. In the terminal, execute the following commands:

$ luarocks --version

$ th

The first command should display the version of luarocks, the package manager for Torch. The second command opens a Torch interactive shell, indicating that Torch has been installed successfully. If you encounter any errors, double-check the installation process or consult the Torch documentation for troubleshooting steps.

Frequently Asked Questions (FAQs):

Q1: What if I already have Torch installed without CUDA support?

A: If you have Torch installed without CUDA support, you need to uninstall it completely before proceeding with the steps mentioned above. You can use the following command to uninstall Torch:

$ rm -rf ~/torch

Q2: Is CUDA necessary for Torch?

A: No, CUDA is not necessary for Torch. Torch can be used for deep learning without CUDA or GPU support. CUDA provides acceleration using your GPU’s computing power, enabling faster computations for deep learning tasks.

Q3: How can I verify that CUDA is working with Torch?

A: To verify CUDA support, launch a Torch shell and execute the following command:

th> require 'cutorch'

th> torch.CudaTensor(1)

If CUDA is working correctly, no error should be displayed. However, if an error is shown, it means that CUDA is not properly configured or your GPU does not support CUDA.

Q4: Can I use multiple GPUs with Torch and CUDA?

A: Yes, Torch supports using multiple GPUs with CUDA. You can set the desired GPU device using the following command:

$ CUDA_VISIBLE_DEVICES=

Replace

Q5: How do I install additional packages and libraries for Torch with CUDA?

A: Torch provides luarocks, a package manager, to install additional packages. To install a package with CUDA support, use the following command:

$ luarocks install --cuda

Ensure that the package you want to install has CUDA support.

In conclusion, installing Torch with CUDA support opens up new avenues for deep learning enthusiasts by utilizing the power of NVIDIA GPUs. By following the outlined steps and carefully verifying the installation, you can seamlessly run deep learning computations with improved speed and efficiency.

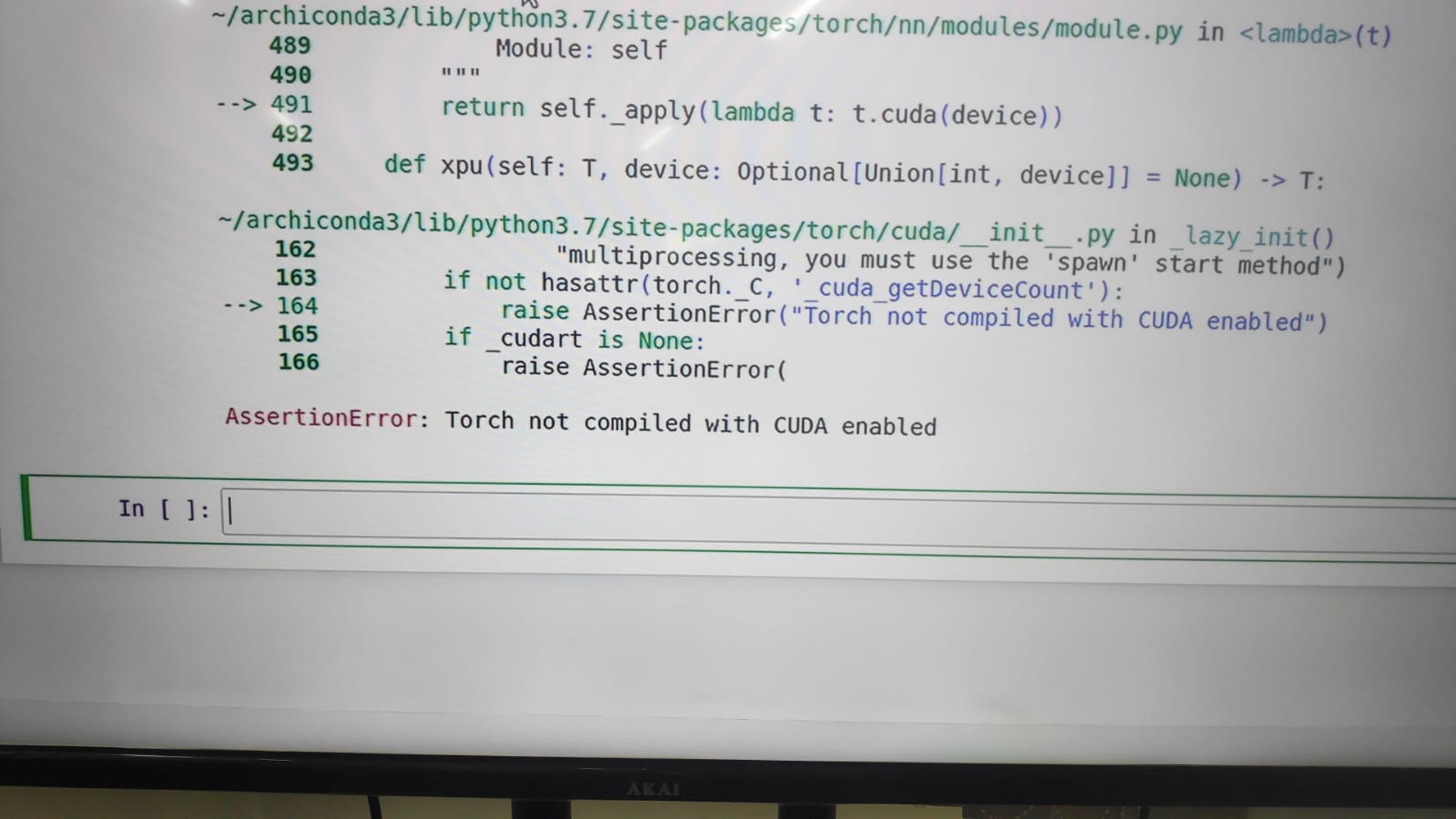

What Is Assertionerror Torch Not Compiled With Cuda Enabled In Windows?

When working with deep learning models and frameworks, such as PyTorch, CUDA plays a crucial role in accelerating computations by leveraging the power of GPUs. However, sometimes you may encounter an AssertionError stating “torch not compiled with CUDA enabled” on your Windows machine. This error message typically indicates that your PyTorch installation does not have CUDA support enabled or is not configured correctly.

PyTorch is an open-source machine learning framework that provides efficient tensor computations on both CPUs and GPUs. By utilizing CUDA, PyTorch can take advantage of the highly parallel architecture of GPUs, significantly speeding up the training and inference processes. Therefore, having CUDA-enabled PyTorch is beneficial, especially for complex deep learning models that require heavy computations.

Understanding the ‘torch not compiled with CUDA enabled’ Assertion Error:

So, why does this error occur, and what can you do to fix it? There are a few potential reasons for encountering this AssertionError in Windows:

1. Missing or incompatible CUDA Toolkit: If you build PyTorch from source, the CUDA Toolkit is a prerequisite, and a missing or incompatible version produces a build without CUDA support. Prebuilt PyTorch packages bundle the CUDA runtime they need, so for those the important choice is the CUDA variant of the package itself. In either case, make sure the CUDA version matches what your PyTorch installation expects.

2. Incorrect PyTorch installation: If the PyTorch package you installed is not compiled with CUDA support, either due to an incomplete installation or compatibility issues, you will encounter the ‘torch not compiled with CUDA enabled’ error. Ensure that you install a CUDA-enabled version of PyTorch using the official PyTorch website or package manager.

3. Mismatch between CUDA and GPU driver versions: It is crucial to maintain compatibility between the CUDA version and the GPU driver installed on your Windows machine. If the versions are not matching, the CUDA-enabled PyTorch may fail to operate correctly, resulting in the AssertionError. Always double-check and update both the CUDA Toolkit and GPU driver to compatible versions.

4. GPU-related configuration issues: Misconfigurations in the GPU settings of your Windows machine can also prevent PyTorch from using CUDA. Note that insufficient GPU memory is a different problem: it raises a CUDA out-of-memory error rather than this AssertionError.

Solutions to resolve the ‘torch not compiled with CUDA enabled’ Assertion Error:

Now that we understand the potential causes, let’s explore some solutions to resolve the ‘torch not compiled with CUDA enabled’ error:

1. Verify CUDA Toolkit installation: Check whether you have correctly installed the CUDA Toolkit by running the `nvcc --version` command in the command prompt. If the command is not recognized, reinstall the CUDA Toolkit following the official documentation and ensure it is added to the system PATH.

2. Reinstall PyTorch with CUDA support: If you encounter the error after installing PyTorch, it is advisable to reinstall it using the appropriate CUDA-enabled version. Uninstall the existing PyTorch package and perform a fresh installation using the recommended command or package manager. This should ensure that PyTorch is compiled with CUDA support.

3. Update CUDA Toolkit and GPU driver: Ensure that both your CUDA Toolkit and GPU driver are up-to-date and compatible. Check the NVIDIA website or the official documentation for instructions on updating these components. Keeping them in sync will help avoid compatibility issues and AssertionErrors.

4. Rule out GPU memory issues: Insufficient GPU memory does not produce this AssertionError; it surfaces as a separate CUDA out-of-memory error once CUDA is working. If you see that error after fixing the installation, reduce the batch size or model complexity to lower the memory requirements.
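The toolkit and driver checks from the steps above can be scripted. The following is a minimal sketch, not a complete diagnostic: `tool_report` is a hypothetical helper, and it only reports whether `nvcc` and `nvidia-smi` are on the PATH and what they print.

```python
import shutil
import subprocess


def tool_report(name: str, *args: str) -> str:
    """Return what a command-line tool prints, or a note that it is missing."""
    path = shutil.which(name)
    if path is None:
        return f"{name}: not found on PATH"
    try:
        result = subprocess.run([path, *args], capture_output=True,
                                text=True, timeout=30)
    except (OSError, subprocess.TimeoutExpired) as exc:
        return f"{name}: could not be run ({exc})"
    output = (result.stdout or result.stderr).strip()
    return f"{name}: {output or '(no output)'}"


# The CUDA compiler is present only when the CUDA Toolkit is installed.
print(tool_report("nvcc", "--version"))
# nvidia-smi ships with the NVIDIA driver; this query prints GPU name and driver version.
print(tool_report("nvidia-smi", "--query-gpu=name,driver_version",
                  "--format=csv,noheader"))
```

A missing `nvcc` is not necessarily a problem when you use a prebuilt PyTorch package, whereas a missing or failing `nvidia-smi` usually points to a driver issue.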

FAQs:

1. How can I check if PyTorch is compiled with CUDA support?

To verify if your PyTorch installation is CUDA-enabled, you can use the following code snippet:

```python
import torch

print(torch.cuda.is_available())
```

This prints True if PyTorch was compiled with CUDA support and a usable GPU and driver are present; otherwise, it prints False.

2. Can I use PyTorch without CUDA support?

Yes, PyTorch can still be used without CUDA support. It will fall back to CPU computations, which are generally slower but can still execute most tasks. However, leveraging GPUs through CUDA can significantly speed up computations, especially for deep learning workloads.
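A common way to write code that works in both situations is to choose the device once and pass it everywhere. This is a minimal sketch; `pick_device` is a hypothetical helper, and the `try`/`except` around the import is only there so the snippet also runs where PyTorch is missing.

```python
try:
    import torch
except ImportError:  # keeps the sketch runnable without PyTorch
    torch = None


def pick_device() -> str:
    """Return "cuda" when PyTorch can use a GPU, otherwise "cpu"."""
    if torch is not None and torch.cuda.is_available():
        return "cuda"
    return "cpu"


device = pick_device()
print(f"Using device: {device}")

if torch is not None:
    # Create the model and the data on the chosen device instead of calling .cuda().
    model = torch.nn.Linear(4, 2).to(device)
    batch = torch.randn(8, 4, device=device)
    print(model(batch).shape)
```

Because nothing calls `.cuda()` directly, the same script does not trigger the AssertionError on a CPU-only installation.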

3. Can I enable CUDA support after installing PyTorch without it?

Unfortunately, enabling CUDA support requires a version of PyTorch that is specifically compiled with it. If you have installed a PyTorch version without CUDA support, you will need to reinstall it with the correct version that includes CUDA support.
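The reinstall can be scripted so that it targets the interpreter you are actually using. The sketch below only prints the commands and does not run them. The `cu121` tag is an assumption chosen for illustration: pick the tag that the install selector on the official PyTorch website shows for your setup. `pip_install_command` is a hypothetical helper.

```python
import sys
from typing import List


def pip_install_command(cuda_tag: str = "cu121") -> List[str]:
    """Build a pip command that installs torch from a CUDA-specific wheel index."""
    # Assumption: cuda_tag must match a variant published for your PyTorch version.
    index_url = f"https://download.pytorch.org/whl/{cuda_tag}"
    return [sys.executable, "-m", "pip", "install", "torch",
            "--index-url", index_url]


# Remove the CPU-only package first, then install the CUDA-enabled one.
uninstall = [sys.executable, "-m", "pip", "uninstall", "-y", "torch"]
print(" ".join(uninstall))
print(" ".join(pip_install_command()))
```

Using `sys.executable -m pip` rather than a bare `pip` avoids installing into a different Python environment than the one that raised the error.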

In conclusion, encountering the ‘torch not compiled with CUDA enabled’ error in Windows can be frustrating when working on deep learning models with PyTorch. However, understanding the causes and applying the appropriate solutions, such as verifying the CUDA Toolkit, reinstalling PyTorch with CUDA support, updating CUDA Toolkit and GPU driver, and adjusting GPU memory allocation, can resolve this error and allow you to take advantage of accelerated computations offered by CUDA.


Torch Not Compiled With Cuda Enabled Pycharm

Why is Torch not compiled with CUDA enabled in PyCharm?

There can be multiple reasons why Torch may not be compiled with CUDA enabled in PyCharm. Let’s discuss some of the possible causes:

1. PyTorch installation issues: PyTorch, a popular library built on top of Torch, provides seamless integration with CUDA. If you have installed PyTorch without enabling CUDA support, it can result in Torch not being compiled with CUDA enabled in PyCharm.

2. CUDA installation issues: CUDA requires the correct installation of NVIDIA drivers, the CUDA toolkit, and cuDNN (CUDA Deep Neural Network library) to function properly. If any of these components are missing or are not installed correctly, Torch may not be able to utilize CUDA in PyCharm.

3. GPU compatibility: Torch with CUDA support requires a compatible GPU. If your system does not have a supported NVIDIA GPU, or if the GPU drivers are not up to date, you may encounter issues with Torch not being compiled with CUDA enabled.

Solutions for enabling CUDA support in Torch with PyCharm:

If you are facing issues with Torch not being compiled with CUDA enabled in PyCharm, here are some potential solutions to address the problem:

1. Check PyTorch installation: Ensure that you have the correct version of PyTorch installed. If you haven’t explicitly installed the CUDA-enabled version of PyTorch, you can do so by following the official PyTorch installation guide. This will ensure that PyTorch has access to CUDA.

2. Verify CUDA installation: Confirm that CUDA and its dependencies are installed correctly on your system. Visit the NVIDIA CUDA Toolkit download page to download the appropriate version for your OS, and follow the installation instructions provided by NVIDIA. Make sure to check the compatibility of your GPU with the CUDA version you are installing.

3. Update GPU drivers: Outdated or incompatible GPU drivers can prevent Torch from utilizing CUDA. Visit the NVIDIA drivers download page to download and install the latest drivers compatible with your GPU. Restart your system after updating the GPU drivers for the changes to take effect.

4. Verify PyCharm configuration: Ensure that your PyCharm project is set up correctly to use the CUDA-enabled version of Torch. To do this, go to “File” > “Settings” (or “Preferences” on macOS) in PyCharm and navigate to the “Project: [your project name]” > “Python Interpreter” settings. Make sure that the correct PyTorch installation with CUDA support is selected as the interpreter.
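To confirm which interpreter and which Torch installation a PyCharm run configuration really uses, you can run a short script inside the project. This is a minimal sketch; `interpreter_report` is a hypothetical helper that relies only on the standard library.

```python
import importlib.util
import sys


def interpreter_report() -> dict:
    """Report the running interpreter and where the torch package is found."""
    report = {"interpreter": sys.executable, "torch_location": None}
    spec = importlib.util.find_spec("torch")
    if spec is not None:
        # Path of the torch package that this interpreter would import.
        report["torch_location"] = spec.origin
    return report


for key, value in interpreter_report().items():
    print(f"{key}: {value}")
```

If the interpreter path differs from the environment where you installed the CUDA-enabled package, PyCharm is pointing at another environment.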

FAQs:

Q1. Can I use Torch without CUDA support?

A1. Yes, you can still use Torch without CUDA support. However, it might result in slower computations, especially for complex machine learning models or large datasets, as it won’t be leveraging the parallel processing capabilities of GPUs.

Q2. How can I check if Torch is compiled with CUDA support?

A2. You can check if Torch is compiled with CUDA support by running the following command in your PyCharm environment: `import torch; print(torch.cuda.is_available())`. If it returns `True`, then Torch has CUDA support; otherwise, it doesn’t.

Q3. I have followed all the steps correctly, but Torch still doesn’t have CUDA support. What do I do?

A3. In such cases, it is advisable to seek help from the PyTorch or PyCharm community forums, where experts can provide you with more specific guidance tailored to your setup and environment.

In conclusion, Torch not being compiled with CUDA enabled in PyCharm can be caused by issues with PyTorch installation, CUDA installation, or GPU compatibility. By following the suggested solutions, you can enable CUDA support in Torch and leverage the power of GPUs for faster and more efficient computations. Remember to verify your installations, update GPU drivers, and ensure correct PyCharm configuration, as these steps are crucial in resolving the issue.

Pytorch

Introduction to PyTorch

PyTorch, developed by Facebook’s AI research lab, is a widely popular open-source machine learning library. Launched in 2016, it has quickly gained recognition for its dynamic computational graph and ease of use. PyTorch provides a simple yet powerful framework for creating and training both simple and complex neural networks, making it a favorite among researchers and practitioners in the field of deep learning.

Key Features of PyTorch

1. Dynamic Computational Graph: One of the standout features of PyTorch is its dynamic computation graph. Unlike static-graph frameworks such as TensorFlow 1.x, PyTorch builds the graph as the code runs, so users can define and modify it on the fly. This flexibility makes it highly suitable for exploratory research, prototyping, and handling inputs of varying sizes. It also lets developers inspect and debug models with ordinary Python tools.

2. Pythonic Interface: PyTorch is built as an extension of Python, making it smooth and easy to use for Python developers. Its API provides high-level abstractions and simplifies the process of defining, training, and deploying deep learning models.

3. GPU Support: PyTorch natively supports the use of GPUs, enabling accelerated computation for training and inference. This feature allows for faster experimentation and training on large-scale datasets, resulting in substantial speed-ups when compared to running on CPUs alone.

4. Extensive Community and Ecosystem: PyTorch boasts a large and active community. This community has contributed a wide range of pre-trained models, online tutorials, and third-party libraries, thus growing the ecosystem around PyTorch. Researchers and developers can leverage these resources to speed up their projects and get a head start on solving complex tasks.

5. Multi-platform Compatibility: PyTorch supports multiple platforms, including Linux, macOS, and Windows, making it accessible to developers across different systems. Additionally, it offers support for deployment on cloud platforms like Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure.

Building Blocks of PyTorch

1. Tensors: Tensors are PyTorch’s base data structure, which are similar to NumPy’s ndarrays. Tensors can be created on both CPUs and GPUs, and they store and manipulate data efficiently for numerical computations.

2. Autograd: PyTorch’s built-in automatic differentiation system is known as autograd. During the forward pass it records the operations performed on tensors that require gradients, and during the backward pass it uses that record to compute the gradients automatically. This is what makes backpropagation and the training of deep neural networks possible without hand-written derivatives.

3. Neural Networks: The torch.nn package provides a high-level and modular way to build neural networks in PyTorch. It includes a wide range of layers and loss functions, while optimization algorithms are provided by the companion torch.optim package. PyTorch’s neural networks can be customized easily, giving developers the flexibility to design models according to their specific requirements.

4. Data Loading and Transformation: PyTorch provides tools to load and preprocess data efficiently for training purposes. The torch.utils.data module facilitates the creation of custom datasets, while the torch.utils.data.DataLoader allows for efficient batch processing and parallel loading of data.
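The four building blocks above fit together in only a few lines. The following is a minimal sketch that fits y = 2x with a single linear layer; the data, the hyperparameters, and the `train_one_epoch` name are illustrative assumptions, and the guarded import only keeps the snippet runnable where PyTorch is missing.

```python
try:
    import torch
    from torch import nn
    from torch.utils.data import DataLoader, TensorDataset
except ImportError:  # keeps the sketch runnable without PyTorch
    torch = None


def train_one_epoch() -> float:
    """Fit y = 2x with one linear layer and return the last batch loss."""
    torch.manual_seed(0)
    # 1. Tensors: 64 samples with one feature each.
    x = torch.linspace(-1, 1, 64).unsqueeze(1)
    y = 2 * x
    # 4. Data loading: iterate over the samples in shuffled batches of 16.
    loader = DataLoader(TensorDataset(x, y), batch_size=16, shuffle=True)
    # 3. Neural network and loss from torch.nn, optimizer from torch.optim.
    model = nn.Linear(1, 1)
    loss_fn = nn.MSELoss()
    optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
    loss = torch.tensor(0.0)
    for xb, yb in loader:
        optimizer.zero_grad()
        loss = loss_fn(model(xb), yb)
        # 2. Autograd: backward() computes gradients from the recorded operations.
        loss.backward()
        optimizer.step()
    return loss.item()


if torch is not None:
    print(f"last batch loss: {train_one_epoch():.4f}")
```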

Frequently Asked Questions (FAQs):

Q1. Is PyTorch only suitable for research purposes, or can it be used for production-level deployments?

A: PyTorch is versatile and can be used for both research and production deployments. Its dynamic computational graph makes it particularly suitable for research and development, allowing quick experimentation with models. Moreover, PyTorch provides tools like TorchScript and TorchServe that enable efficient model deployment and productionizing of PyTorch models.

Q2. How does PyTorch compare to other popular deep learning frameworks like TensorFlow?

A: PyTorch and TensorFlow are two widely used deep learning frameworks, each with its own strengths. PyTorch’s dynamic computational graph makes it more flexible for experimentation and easier to debug. TensorFlow, on the other hand, offers better optimization and distributed training capabilities. Choosing between the two often boils down to personal preferences and specific project requirements.

Q3. Does PyTorch offer pre-trained models?

A: Yes, PyTorch has a growing ecosystem of pre-trained models, available through companion libraries such as TorchVision and TorchText. These models cover a wide range of tasks, such as image classification, object detection, machine translation, and natural language processing. Pre-trained models can be easily loaded and fine-tuned for specific applications, saving significant time and effort.

Q4. Can PyTorch utilize distributed computing?

A: Yes, PyTorch offers distributed training capabilities through its DistributedDataParallel (DDP) module. DDP enables efficient training on multi-GPU setups or even across multiple machines. By leveraging distributed computing, users can train models on large-scale datasets more effectively, reducing training time.

Q5. Can PyTorch be integrated with other popular libraries and frameworks?

A: PyTorch integrates well with widely used libraries such as NumPy and SciPy, and tensors convert to and from NumPy arrays directly. Models and data can also be exchanged with other frameworks, such as TensorFlow, through interchange formats like ONNX and DLPack. This compatibility allows for easy data interchange and lets you combine the strengths of different tools.
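As a small illustration of the NumPy interchange, the sketch below converts an array to a tensor and back. `round_trip` is a hypothetical helper; `torch.from_numpy` and `Tensor.numpy` are existing PyTorch functions, and for CPU data the tensor created by `from_numpy` shares memory with the array.

```python
try:
    import numpy as np
    import torch
except ImportError:  # keeps the sketch runnable without NumPy or PyTorch
    np = torch = None


def round_trip():
    """Convert a NumPy array to a tensor and back."""
    array = np.arange(3, dtype=np.float32)   # [0., 1., 2.]
    tensor = torch.from_numpy(array)          # shares memory with the array
    array[0] = 10.0                           # the change is visible in the tensor
    doubled = (tensor * 2).numpy()            # a new tensor, converted back to NumPy
    return tensor.tolist(), doubled.tolist()


if torch is not None:
    print(round_trip())
```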

Conclusion

PyTorch has rapidly gained popularity within the machine learning and deep learning communities. Its dynamic computational graph, ease of use, and extensive community support make it an excellent choice for researchers, students, and practitioners. By leveraging PyTorch’s powerful building blocks, developers can build and train complex neural networks with relative ease. With its growing ecosystem and continuous development, PyTorch is expected to influence the future of deep learning and artificial intelligence.
