Torch Is Not Able To Use GPU

1. Technical Limitations of Torch

a. Differences in GPU Architecture: GPUs from different manufacturers, such as NVIDIA and AMD, have distinct architectures and are programmed through different software stacks. A Torch build compiled for one stack cannot drive a GPU that belongs to another, so a mismatch between the installed build and the hardware leaves the GPU unused.

b. Dependence on CUDA: Torch's GPU backend is built on CUDA, NVIDIA's proprietary parallel computing platform. This tight integration limits compatibility with non-NVIDIA GPUs. In PyTorch, AMD GPUs are supported through separate ROCm builds rather than through the CUDA build.

c. Incompatibility with GPU Drivers: The compatibility between Torch and the GPU driver plays a vital role in successful GPU utilization. If the installed driver is too old for the CUDA version that Torch was built against, Torch cannot initialize the GPU and reports it as unavailable.
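The three limitations above can be checked from Python. The following is a minimal sketch for PyTorch; the helper name `gpu_report` is hypothetical, and it only collects values that PyTorch already exposes.

```python
import torch


def gpu_report():
    """Collect the facts that decide whether this PyTorch build can use a GPU."""
    return {
        "torch_version": torch.__version__,
        # CUDA version the binary was compiled against; None for CPU-only builds.
        "built_with_cuda": torch.version.cuda,
        # True only if a compatible driver and at least one device were found.
        "cuda_available": torch.cuda.is_available(),
        "device_count": torch.cuda.device_count(),
    }


for key, value in gpu_report().items():
    print(f"{key}: {value}")
```

If `built_with_cuda` is `None`, the build itself has no CUDA support. If it shows a version but `cuda_available` is `False`, the driver or the hardware is the more likely cause.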

2. Limited GPU Support in Torch

a. Unavailability of CUDA Support: CUDA is the go-to API for GPU programming, and Torch is heavily reliant on it. Without a CUDA-enabled build and a working driver, Torch cannot use the GPU at all, and every computation runs on the CPU.

b. Missing cuDNN Bindings: The CUDA Deep Neural Network library (cuDNN) provides highly optimized implementations of various deep learning operations on GPUs. Lua Torch reaches cuDNN through a separate binding package (`cudnn.torch`), while the official CUDA builds of PyTorch bundle cuDNN. If cuDNN is missing or disabled, GPU operations can still run, but without these optimized kernels.

c. Fewer GPU-optimized Operations: Torch offers a wide variety of functions and operations for machine learning tasks. However, the framework may lack optimized GPU implementations for certain operations, resulting in inefficient GPU utilization or even fallbacks to CPU processing.
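Whether cuDNN is present in a given PyTorch installation can be inspected directly. This is a small sketch under the assumption that PyTorch is installed; `cudnn_status` is a hypothetical helper name.

```python
import torch


def cudnn_status():
    """Summarize cuDNN support in the installed PyTorch build."""
    backend = torch.backends.cudnn
    available = backend.is_available()  # True when the build includes cuDNN
    return {
        "available": available,
        "enabled": backend.enabled,  # global switch that lets PyTorch use cuDNN
        "version": backend.version() if available else None,
    }


print(cudnn_status())
```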

3. Alternative Frameworks for GPU Usage

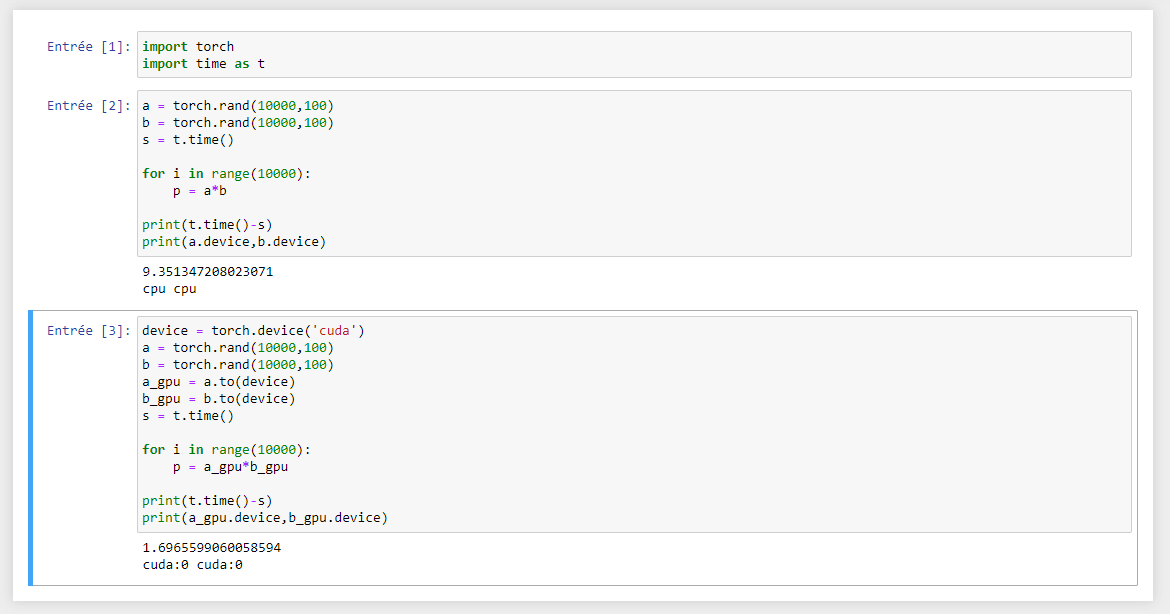

a. PyTorch: Considered the successor of Torch, PyTorch brings Torch's tensor library to Python. It provides extensive support for GPU computation using CUDA, enabling efficient GPU utilization for deep learning tasks.

b. TensorFlow: TensorFlow, a popular machine learning framework developed by Google, has robust GPU support. It uses CUDA and cuDNN to run on NVIDIA GPUs; older releases shipped GPU support as a separate `tensorflow-gpu` package.

c. Theano: Theano is an older framework that emphasized GPU usage for deep learning. It provides a high-level interface to express mathematical operations, which are then compiled and executed on GPUs. Its original developers announced the end of major development in 2017, so it is mainly of historical interest today.

4. Workarounds and Solutions

a. Leveraging Torch’s CPU Capabilities: When GPU usage is not feasible, Torch can run entirely on the CPU. This is slower for large models, but it can still deliver satisfactory results for small models, prototyping, and debugging.

b. Utilizing TensorBoard for Visualization: PyTorch can write logs for TensorBoard, a visualization tool for machine learning, through its `torch.utils.tensorboard` module. With TensorBoard, users can monitor and visualize training progress regardless of GPU availability.

c. Porting to a GPU-compatible Framework: If GPU acceleration is crucial for a particular project and the existing Torch setup cannot provide it, one viable solution is to port the model to an actively maintained framework with GPU support, such as PyTorch or TensorFlow. Porting requires rewriting the model code, so weigh that effort against the expected speed-up.

5. Community Efforts and Contributions

a. CUDA Integration Improvements: Work on CUDA integration now happens mainly in PyTorch, since Lua Torch is no longer under active development. That work covers compatibility fixes, support for newer GPUs, and optimization of CUDA-based functions.

b. Development of GPU-accelerated Libraries: Community-driven efforts produced GPU packages designed to work with Torch, such as `cutorch` and `cunn`, which provide CUDA tensors and CUDA implementations of neural network layers. These packages supply optimized GPU implementations for essential operations.

c. Collaboration with Other Frameworks: Inter-framework collaborations between Torch and other established GPU-capable frameworks, such as PyTorch and TensorFlow, can lead to the development of unified solutions that combine the strengths of each framework, mitigating the limitations of individual frameworks.

6. Overcoming Limitations and Future Possibilities

a. Research and Development for GPU Support: GPU support continues to improve in PyTorch, where active development now takes place. Future releases may extend GPU utilization further, while Lua Torch itself is unlikely to receive new GPU features.

b. Integration with Advanced GPU Technologies: As advanced GPU technologies emerge, such as NVIDIA’s RTX series with dedicated hardware for machine learning operations, Torch may explore integration possibilities to fully capitalize on these advancements.

c. Evolution of Torch’s GPU Compatibility: Torch’s community and development team are committed to ensuring improved GPU compatibility by addressing technical limitations, extending support for alternative GPU APIs, and refining GPU-accelerated operations within the framework.

In conclusion, when Torch cannot use the GPU, the usual causes are a build without CUDA support, an incompatible driver, or unsupported hardware. Frameworks such as PyTorch and TensorFlow provide comprehensive GPU support for deep learning tasks. Workarounds such as running on the CPU, monitoring training with TensorBoard, and porting Torch models to an actively maintained framework can also be employed.


Why Is Torch Not Able To Use GPU?

Torch, a widely used open-source machine learning library, offers a range of powerful tools for developing and training deep learning models. However, a Torch installation is not always able to use GPU (graphics processing unit) acceleration, which frustrates practitioners who want to speed up training. In this article, we explore why a Torch installation may lack GPU support and answer some frequently asked questions on the topic.

GPU Acceleration in Deep Learning:

To understand why Torch cannot use GPU, it is important to first grasp the significance of GPU acceleration in deep learning. GPUs are specialized processors designed for handling complex graphical computations. However, due to their parallel architecture and ability to perform multiple tasks simultaneously, GPUs have become a game-changer in the field of deep learning.

Deep learning algorithms involve heavy matrix calculations, which can be executed much more efficiently on GPUs compared to traditional central processing units (CPUs). GPUs contain thousands of small, efficient cores that can process large matrices in parallel, resulting in substantial speed-ups during training. These speed-ups are particularly evident when dealing with large datasets or complex neural network architectures.
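The speed-up described above can be measured with a simple matrix multiplication. This is a rough sketch rather than a rigorous benchmark: the helper name `time_matmul` and the matrix size are illustrative assumptions, and the GPU timing only runs when PyTorch reports a usable CUDA device.

```python
import time

import torch


def time_matmul(device, n=1024, repeats=3):
    """Average time in seconds for an n x n matrix product on the given device."""
    a = torch.randn(n, n, device=device)
    b = torch.randn(n, n, device=device)
    if device == "cuda":
        torch.cuda.synchronize()  # finish pending GPU work before timing
    start = time.perf_counter()
    for _ in range(repeats):
        _ = a @ b
    if device == "cuda":
        torch.cuda.synchronize()  # GPU kernels run asynchronously; wait for them
    return (time.perf_counter() - start) / repeats


print(f"cpu:  {time_matmul('cpu'):.4f} s")
if torch.cuda.is_available():
    print(f"cuda: {time_matmul('cuda'):.4f} s")
```

The first GPU call includes one-time initialization, so larger `repeats` values give a fairer comparison.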

Why Torch May Not Support GPU Out of the Box:

1. Torch’s Background and Legacy:

Torch7 was developed as the successor to earlier releases such as Torch5 and is scripted in Lua, a lightweight language known for its simplicity and ease of integration. Torch inherited Lua’s ease of use, making it an attractive option for deep learning researchers and practitioners. Lua itself has no native support for GPU acceleration, so GPU support in Torch comes from separately installed packages such as `cutorch` and `cunn` rather than from the core library. A Torch installation without them runs on the CPU only.

2. LuaJIT and Torch7:

Torch relies on LuaJIT, a Just-In-Time (JIT) compiler for Lua, for performance. LuaJIT compiles Lua code efficiently, but it does not generate GPU code. GPU work is instead done by C and CUDA libraries that Torch calls from Lua. Without those libraries, Torch is limited to CPU execution.

3. Torch vs. PyTorch:

As Torch gained popularity, a successor library called PyTorch was developed. PyTorch uses CUDA (Compute Unified Device Architecture), a parallel computing platform and programming model designed for NVIDIA GPUs, and ships CUDA support in its official GPU builds. PyTorch became the go-to solution for deep learning practitioners who require GPU acceleration, while active development of Lua Torch has stopped.

Frequently Asked Questions:

Q: Can I use Torch on a system with GPU?

A: Yes. The core of Lua Torch runs on the CPU, but add-on packages provide GPU support: `cutorch` and `cunn` for CUDA, and community packages for OpenCL. With these packages installed, you can incorporate GPU acceleration in your Torch workflows.

Q: Is there any plan to add GPU support to Torch in the future?

A: Torch has largely been succeeded by PyTorch, which already offers robust GPU support. Lua Torch is no longer actively developed, so new GPU features should not be expected there. GPU-related work continues in PyTorch.

Q: Can I achieve comparable performance without GPU acceleration in Torch?

A: While GPU acceleration can significantly speed up deep learning training times, it doesn’t mean that you cannot achieve good performance without it. Depending on the complexity of your models and the size of your datasets, using efficient implementations, parallelism, and optimization techniques can still yield satisfactory results. Additionally, for small-scale projects, the absence of GPU acceleration may not have a substantial impact on overall performance.

Q: Should I switch to PyTorch if I want to utilize GPU acceleration?

A: If your primary focus is on leveraging GPU acceleration for deep learning tasks, it is advisable to transition to PyTorch. PyTorch provides seamless GPU integration through its support for CUDA, allowing you to take full advantage of GPU performance. However, it is important to consider the learning curve and potential code adaptations required when migrating from Torch to PyTorch.

In conclusion, while Torch is a powerful machine learning library, it does not use the GPU out of the box: in Lua Torch, GPU acceleration depends on add-on CUDA packages, and without them computation stays on the CPU. PyTorch offers GPU support in its official CUDA builds and is the practical choice today. Understanding the strengths and limitations of different frameworks allows machine learning practitioners to make informed decisions that align with their specific requirements and project constraints.

Why Is My GPU Not Recognized by PyTorch?

PyTorch is a popular open-source machine learning framework that is widely used for building deep learning models. It offers a range of functionalities and supports parallel processing using GPUs for faster computation. However, users sometimes encounter issues where their GPU is not recognized by PyTorch, preventing them from leveraging the full power of their graphics card. In this article, we explore some common reasons behind this problem and provide solutions to help you troubleshoot the issue effectively.

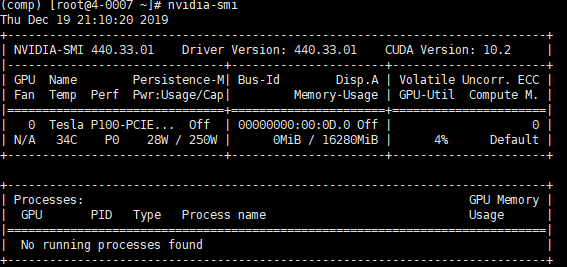

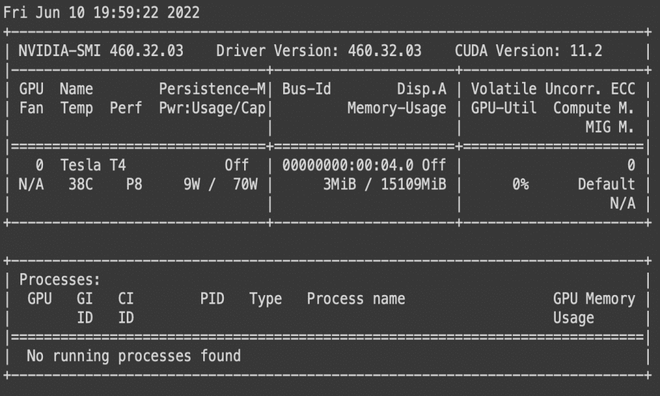

1. Outdated GPU Drivers: One of the main reasons why PyTorch may not recognize your GPU is outdated graphics card drivers. GPU manufacturers regularly release driver updates that enhance compatibility with various software, including machine learning frameworks like PyTorch. To resolve this issue, visit the website of your GPU manufacturer and check for the latest driver version. Download and install it, restart your computer, and then check if PyTorch recognizes your GPU.

2. Unsupported GPU: PyTorch requires a compatible GPU to utilize its parallel processing capabilities. Ensure that you have a CUDA-enabled GPU as PyTorch relies on CUDA, a parallel computing platform and programming model developed by NVIDIA. Additionally, verify the minimum CUDA version required by your PyTorch version. If your GPU does not meet the requirements, you may need to upgrade your hardware to utilize PyTorch effectively.

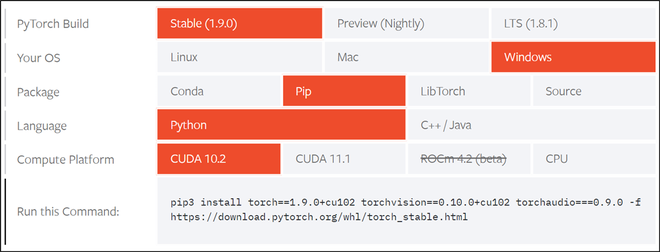

3. Incorrect Installation: PyTorch may also fail to recognize the GPU because of a faulty or incomplete installation. A common case is a CPU-only build, which cannot use the GPU regardless of the hardware. When installing PyTorch, select the build that matches your operating system and GPU, and use the pip or conda command that the PyTorch website provides for that combination. If the wrong build was installed, uninstall PyTorch and reinstall the correct one.

4. CUDA Version Mismatch: The official pip and conda packages of PyTorch bundle the CUDA runtime they need, so a separately installed CUDA Toolkit is usually not required to run PyTorch. What must match is the NVIDIA driver: it has to be recent enough for the CUDA version that your PyTorch build was compiled against. A local CUDA Toolkit, with its bin directory on the PATH environment variable, matters mainly when you build PyTorch or custom CUDA extensions from source. After updating the driver, restart your computer and verify that PyTorch recognizes your GPU.

5. Insufficient VRAM: Insufficient video random-access memory (VRAM) does not stop PyTorch from recognizing the GPU, but it can stop your code from running on it. Deep learning models often require a significant amount of memory to store and process large tensors during training and inference. If the GPU does not have enough VRAM, PyTorch raises a CUDA out-of-memory error; it does not silently switch to the CPU. Reduce the batch size to fit the available VRAM, or consider a GPU with more memory.

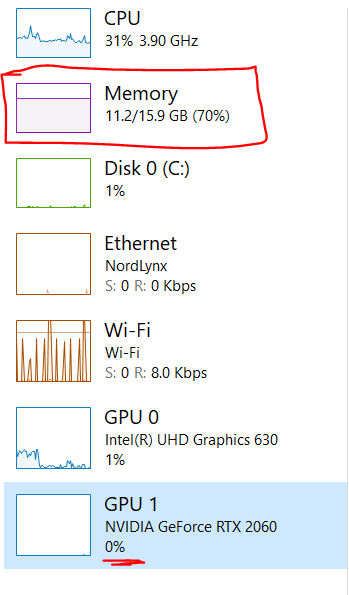

6. Multiple GPU Setup: If you have multiple GPUs installed on your system, PyTorch may fail to recognize them if they are not configured correctly. Ensure that your GPU drivers are up to date and that each GPU is properly connected to the power supply and motherboard. Additionally, verify that your system BIOS recognizes all installed GPUs. PyTorch provides an API to manually select the GPU device to be used, so ensure you are specifying the correct GPU index in your code.

7. Conflict with Other Libraries: Occasionally, conflicts may arise between PyTorch and other installed libraries that utilize GPUs or interfere with GPU recognition. Check if any other machine learning or GPU-focused libraries are installed and try disabling them temporarily to determine if they are causing the issue. Updating these libraries to the latest versions may also resolve any compatibility conflicts.
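Several of the causes above can be told apart from inside Python. The sketch below is a heuristic, not an exhaustive diagnosis; the function name `diagnose` and its messages are hypothetical, and `torch.version.hip` is read defensively because it is only populated on ROCm builds.

```python
import torch


def diagnose():
    """Return a one-line guess at why the GPU is or is not usable."""
    if torch.cuda.is_available():
        names = [torch.cuda.get_device_name(i) for i in range(torch.cuda.device_count())]
        return "GPU usable: " + ", ".join(names)
    hip = getattr(torch.version, "hip", None)
    if torch.version.cuda is None and hip is None:
        # The binary was compiled without any GPU backend.
        return "CPU-only build: reinstall a GPU build of PyTorch"
    # A GPU build is installed, so the driver or the hardware is the likely cause.
    return "GPU build found, but no usable device: check the driver and the hardware"


print(diagnose())
```

On a multi-GPU system, the printed device names also show the index order that PyTorch uses when you select a device such as `cuda:0` or `cuda:1`.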

FAQs:

1. How can I check if my GPU is recognized by PyTorch?

You can check if PyTorch recognizes your GPU by running the following code snippet:

```python
import torch

# Report whether PyTorch can see a CUDA-capable GPU.
if torch.cuda.is_available():
    print("GPU is available!")
else:
    print("GPU is not available!")
```

If the output displays “GPU is available!” then PyTorch has successfully recognized your GPU.

2. What are the minimum requirements for GPU support in PyTorch?

PyTorch requires a CUDA-enabled GPU with CUDA Toolkit support. Check the PyTorch documentation or the official NVIDIA CUDA website to determine the specific CUDA version required for your PyTorch version.

3. Can I use PyTorch without a GPU?

Yes, PyTorch can be used without a GPU. Tensors and models are created on the CPU by default and move to the GPU only when your code requests it, so code that never asks for a CUDA device runs on the CPU. However, note that utilizing a GPU significantly speeds up computations in deep learning tasks.
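A common way to write code that runs with or without a GPU is to pick the device once and create everything on it. This is a minimal sketch; the layer sizes are arbitrary.

```python
import torch

# Use the GPU when PyTorch can reach one, otherwise stay on the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = torch.nn.Linear(4, 2).to(device)  # move the parameters to the chosen device
x = torch.randn(8, 4, device=device)      # create the input on the same device
y = model(x)

print(y.shape, y.device)
```

Keeping the model and its inputs on the same device avoids device-mismatch errors.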

4. I have followed all the troubleshooting steps, yet my GPU is still not recognized. What should I do?

If you have followed the troubleshooting steps outlined above and are still unable to resolve the issue, consider seeking help from the PyTorch community or relevant forums. Provide detailed information about your GPU, operating system, PyTorch version, and any error messages you encounter to facilitate effective troubleshooting assistance.

In conclusion, resolving GPU recognition issues in PyTorch requires a systematic approach that involves ensuring up-to-date GPU drivers, proper installation and configuration of PyTorch and the CUDA toolkit, sufficient VRAM, and checking for conflicts with other libraries. Following the troubleshooting steps outlined in this article will help you identify and resolve the issue, allowing you to harness the full power of your GPU for accelerated deep learning with PyTorch.


Torch Is Not Able To Use GPU Stable Diffusion

Stable Diffusion is a text-to-image diffusion model that runs on PyTorch. The message “Torch is not able to use GPU” is typically shown by the Stable Diffusion WebUI at startup: the launcher checks whether PyTorch can reach a CUDA device and stops if it cannot. In this context, “Torch” means PyTorch, not the older Lua-based library.

The message does not mean that PyTorch lacks GPU support. It means that this particular installation could not find a usable GPU. The usual causes are a CPU-only build of PyTorch in the Python environment that the WebUI uses, an NVIDIA driver that is too old for the installed build, or a GPU that CUDA does not support, such as an AMD or integrated GPU.

The full message suggests adding `--skip-torch-cuda-test` to the COMMANDLINE_ARGS variable. That flag only disables the check; it does not enable the GPU. Image generation then runs on the CPU, which is far slower.

If you have a supported NVIDIA GPU, a better fix is to update the driver and reinstall a CUDA build of PyTorch inside the environment that the WebUI uses. AMD users generally need a different backend, such as a ROCm build of PyTorch on Linux.

To address further queries about this error, we have put together a list of frequently asked questions:

1. Can I still run Stable Diffusion without a GPU?

Yes, by skipping the CUDA test and running on the CPU, but expect generation to be much slower. Half-precision operations often fail on the CPU, so full precision is usually needed as well.

2. Does the error mean my GPU is broken?

Not necessarily. In most cases the hardware is fine and the problem lies in the software: the wrong PyTorch build, an outdated driver, or an unsupported GPU vendor.

3. Does adding `--skip-torch-cuda-test` fix the problem?

No. It hides the check and lets the WebUI start, but PyTorch still cannot use the GPU until the underlying cause is fixed.

4. How do I confirm that the fix worked?

Run `torch.cuda.is_available()` in the same Python environment that the WebUI uses. If it returns True, the WebUI should start without the error.

In conclusion, “Torch is not able to use GPU” in Stable Diffusion is an installation problem rather than a limitation of PyTorch. Installing a GPU build of PyTorch that matches your hardware and driver resolves it in most cases, and skipping the CUDA test should be reserved for machines that have no supported GPU.
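The startup message “Torch is not able to use GPU”, as reported by Stable Diffusion WebUI users, comes down to a single PyTorch call. The sketch below approximates that kind of check; it is not the launcher's actual code, and the function name `torch_cuda_test` is hypothetical.

```python
import torch


def torch_cuda_test():
    """Approximate a launcher-style check: can PyTorch reach a CUDA device?"""
    ok = torch.cuda.is_available()
    message = "Torch can use the GPU" if ok else "Torch is not able to use GPU"
    return ok, message


ok, message = torch_cuda_test()
print(message)
```

Run it with the same Python interpreter that the WebUI uses, because a different environment may contain a different PyTorch build.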

RuntimeError: “LayerNormKernelImpl” Not Implemented For ‘Half’

If you’re a programmer or someone involved in machine learning, you might have encountered the dreaded “RuntimeError: layernormkernelimpl not implemented for ‘half’.” This error message can be frustrating, especially when you are in the midst of an important project or trying to debug your code. In this article, we will delve into the details of this error, understand its causes, and explore potential solutions to get you back on track.

Understanding the Error:

The error message “RuntimeError: LayerNormKernelImpl not implemented for ‘Half’” originates from PyTorch, a popular open-source machine learning framework. It typically occurs when layer normalization, a technique used to standardize the inputs of a neural network layer, is applied to a tensor of type ‘half’ on the CPU. In the affected PyTorch versions, the CPU implementation of this operation has no kernel for that data type.

The tensor type ‘half’ is a floating-point number representation that occupies only 16 bits, whereas ‘float’ and ‘double’ take up 32 and 64 bits respectively. The ‘half’ type sacrifices precision for efficiency and is commonly used to reduce memory usage or accelerate computations on hardware with limited resources, such as GPUs.

Causes of the Error:

1. Half Precision on the CPU: The most common cause of this error is running a half-precision model on the CPU, for example when a program written for the GPU ends up on the CPU because no GPU was found. The GPU kernels support ‘half’, but the CPU kernel for layer normalization in the affected versions does not.

2. Incomplete Installation or Outdated Version: Another potential cause could be incomplete installation of PyTorch or using an outdated version of the library. Ensure that you have the latest version installed and all required dependencies are properly configured.

Solutions:

1. Data Type Conversion or Device Change: Either run the model on the GPU, where ‘half’ is supported, or convert the model and its inputs from ‘half’ to ‘float’ with `.float()`. In the Stable Diffusion WebUI, the `--no-half` and `--precision full` options request full precision. Keep in mind that full precision increases memory usage and may affect performance.

2. Update PyTorch: Ensure that you are using the latest version of PyTorch. Newer versions often include bug fixes, optimizations, and additional support for various data types. Check the PyTorch official website or GitHub repository for any updates or bug reports related to this error.

3. Verify Input Data Type: Double-check that you are applying layer normalization to the correct tensor and its data type is indeed ‘half.’ If you mistakenly assigned the wrong type or performed prior operations that transformed the tensor into ‘half,’ you may encounter this error. Review your code for any inconsistencies.

4. Check Layer Normalization Implementation: If you are using a custom implementation of layer normalization or utilizing a third-party library, verify their compatibility with ‘half’ tensors. Explore the documentation or source code to ensure they support the ‘half’ type. Consider either modifying the implementation or using an alternative library that is compatible with ‘half’ tensors.
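One way to apply the solutions above is to choose the data type from the device, so that half precision is used only on the GPU. This is a minimal sketch with an arbitrary layer size, not the only possible policy.

```python
import torch

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
# Half precision only on the GPU; fall back to float32 on the CPU.
dtype = torch.float16 if device.type == "cuda" else torch.float32

layer_norm = torch.nn.LayerNorm(16).to(device=device, dtype=dtype)
x = torch.randn(2, 16, device=device, dtype=dtype)
out = layer_norm(x)

print(out.dtype, out.device)
```

For an existing half-precision model that must run on the CPU, calling `.float()` on both the model and its inputs has the same effect.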

FAQs:

Q: Can I ignore this error and proceed with my code execution?

A: It is not recommended to ignore this error as it indicates an unsupported operation on the ‘half’ tensor type. Ignoring the error may lead to inconsistent or erroneous results. It is best to address the issue by following the suggested solutions.

Q: Why am I using ‘half’ tensors in the first place?

A: ‘half’ tensors are commonly used to reduce memory consumption or accelerate computations on GPUs. They are especially useful when working with large datasets or models that demand significant computational resources.

Q: Will converting ‘half’ tensors to ‘float’ or ‘double’ impact performance?

A: Yes, converting to higher precision data types can increase memory usage and potentially slow down computations. However, the impact on performance depends on the specific use case and hardware being utilized. It’s advised to benchmark and experiment with different data types to determine the most optimal configuration for your application.

Q: Are there any plans to add support for ‘half’ tensors in future PyTorch versions?

A: PyTorch frequently releases updates and enhancements. It is possible that support for ‘half’ tensors in different operations or implementations could be added in future versions. Stay informed about the latest updates from the PyTorch community to benefit from potential improvements in this area.

In conclusion, the “RuntimeError: LayerNormKernelImpl not implemented for ‘Half’” error can be resolved by running the model on the GPU, converting the tensors to ‘float’, or updating your PyTorch version. Understanding the causes and potential solutions to this error will help you overcome this challenge and continue your machine learning projects smoothly.

PyTorch Not Using GPU

Introduction:

PyTorch has become increasingly popular among developers and researchers due to its flexibility and ease of use in implementing deep learning models. One notable feature is its ability to efficiently utilize the power of GPUs (Graphics Processing Units) for accelerated computations. However, there are cases where users encounter issues with PyTorch not utilizing the GPU. In this article, we will dive into the reasons behind this behavior, explore possible solutions, and answer frequently asked questions to provide a comprehensive understanding of this topic.

Why is PyTorch Not Using GPU?

1. Incompatible Hardware: The most common reason for PyTorch not using the GPU is incompatible hardware. The CUDA builds of PyTorch require an NVIDIA GPU with CUDA support. If your hardware doesn’t meet these requirements, `torch.cuda.is_available()` returns False, and code that selects its device based on that check runs on the CPU.

2. Inadequate Drivers: Outdated or incompatible GPU drivers can also prevent PyTorch from utilizing the GPU. Ensure that you have the latest GPU drivers installed on your machine to leverage the GPU’s computational power effectively.

3. CUDA Version Mismatch: The prebuilt PyTorch packages bundle their own CUDA runtime. If the installed NVIDIA driver is too old for that CUDA version, or if a CPU-only build was installed, GPU acceleration is unavailable. Check the PyTorch documentation for the builds that match your driver.

4. Unavailability of GPU Memory: Limited GPU memory does not make PyTorch switch to the CPU; it raises an out-of-memory error instead. Deep learning models with many parameters or high-resolution images can quickly consume all available GPU memory. Reducing the batch size or opting for lower-resolution inputs can help mitigate memory limitations.

5. Improper Model Transfer: PyTorch creates models and tensors on the CPU unless told otherwise. If the model or its inputs were never moved to the GPU, or were moved back with the `.to('cpu')` method, PyTorch uses the CPU for computations. Double-check your code to ensure you haven’t inadvertently changed the device target for your model.
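When GPU memory is the limiting factor, a simple strategy is to retry with a smaller batch after an out-of-memory error. The sketch below shows the idea with hypothetical helper names (`run_with_backoff`, `fake_step`); the fake step only simulates the error, and it assumes that PyTorch's CUDA out-of-memory errors are `RuntimeError`s whose message contains "out of memory".

```python
def run_with_backoff(step, batch_size, min_batch_size=1):
    """Call step(batch_size), halving the batch size after each out-of-memory error."""
    while True:
        try:
            return batch_size, step(batch_size)
        except RuntimeError as err:
            # Re-raise anything that is not an out-of-memory error,
            # and give up once the batch cannot shrink any further.
            if "out of memory" not in str(err) or batch_size <= min_batch_size:
                raise
            batch_size = max(min_batch_size, batch_size // 2)


def fake_step(batch_size):
    # Stand-in for a real training step: pretends only 16 samples fit in memory.
    if batch_size > 16:
        raise RuntimeError("CUDA out of memory (simulated)")
    return f"ok with {batch_size}"


print(run_with_backoff(fake_step, 128))  # prints (16, 'ok with 16')
```

In real training code, it may also help to call `torch.cuda.empty_cache()` before each retry.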

Solutions to PyTorch Not Using GPU:

1. Verify Compatible Hardware: Check the specifications of your GPU to ensure it is CUDA-enabled and meets PyTorch’s requirements. If necessary, consider upgrading your hardware to leverage the full potential of PyTorch’s GPU acceleration.

2. Update GPU Drivers: Visit the official website of your GPU manufacturer and download the latest GPU drivers suitable for your hardware. Keeping your drivers up to date helps ensure compatibility with PyTorch and other GPU-accelerated applications.

3. Install a Matching PyTorch Build: Follow the PyTorch documentation to identify the build that matches your driver and GPU, then reinstall PyTorch with the install command given for that build. A separately installed CUDA toolkit usually does not need to be removed, because the prebuilt packages bundle their own CUDA runtime.

4. Optimize GPU Memory Usage: If you frequently encounter out-of-memory errors, reduce the batch size during training or inference. Mixed-precision training, where lower-precision data types are used for computations, can also help reduce memory usage.

5. Verify Model Transfer: Double-check that you haven’t inadvertently transferred your model to the CPU using `.to('cpu')` or a similar method. Ensure that both your model and its input tensors are moved to the GPU with the `.to('cuda')` or `.cuda()` method to enable GPU computations.
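Whether a model actually sits on the GPU can be verified by looking at its parameters. This is a minimal sketch; `module_device` is a hypothetical helper that assumes all parameters of the module share one device.

```python
import torch


def module_device(module):
    """Device of the module's first parameter (assumes all parameters share it)."""
    return next(module.parameters()).device


device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = torch.nn.Sequential(
    torch.nn.Linear(8, 8),
    torch.nn.ReLU(),
    torch.nn.Linear(8, 1),
)
print("before:", module_device(model))  # models are created on the CPU by default

model = model.to(device)                # move the parameters
batch = torch.randn(4, 8).to(device)    # move the inputs to the same device
print("after:", module_device(model), "| input on:", batch.device)
```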

FAQs:

Q1. Can I use PyTorch without a GPU?

A1. Absolutely! PyTorch is perfectly capable of running on CPUs, albeit with slower computations compared to GPU utilization. It remains a viable option for small-scale models or development purposes.

Q2. How can I check if PyTorch is using the GPU?

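A2. The `torch.cuda.is_available()` function tells you whether PyTorch can access a compatible GPU. To check whether a given model or tensor actually lives on the GPU, inspect its `.device` attribute, for example `next(model.parameters()).device`.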

Q3. What are the benefits of using PyTorch with a GPU?

A3. PyTorch with GPU acceleration provides significant speedup for deep learning tasks, especially for large and complex models. It enables faster training and inference times, leading to more efficient experimentation and prototyping.

Q4. Why does my PyTorch code fall back to the CPU?

A4. PyTorch itself does not move work between devices automatically. The fallback comes from the common pattern of selecting the device with `torch.cuda.is_available()`: when no usable GPU is found, that code selects the CPU so the program still runs.

Q5. Can I switch between GPU and CPU during training?

A5. Yes, you can switch between GPU and CPU during training by transferring the model and data between devices using the `.to()` method. However, frequent transfers can introduce additional overhead and may impact performance.

Conclusion:

PyTorch’s ability to utilize GPUs for accelerated computations is a powerful feature that significantly boosts deep learning tasks. However, there are several reasons why PyTorch may not automatically use the GPU. By understanding the limitations and potential solutions discussed in this article, users can proactively address these issues and leverage PyTorch’s full capabilities for GPU acceleration in their machine learning workflows.
