Spark Filter Multiple Conditions

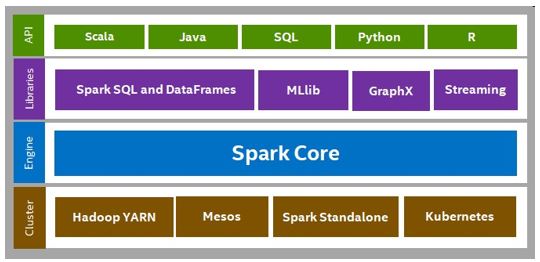

The Spark Filter function is a widely used functionality in Apache Spark that allows users to filter datasets based on specific conditions. It is a powerful tool that helps in extracting the required data from a large dataset efficiently. In this article, we will explore the Spark Filter function in detail, including its definition, purpose, operation on datasets, and advantages.

Definition and Purpose of the Spark Filter Function

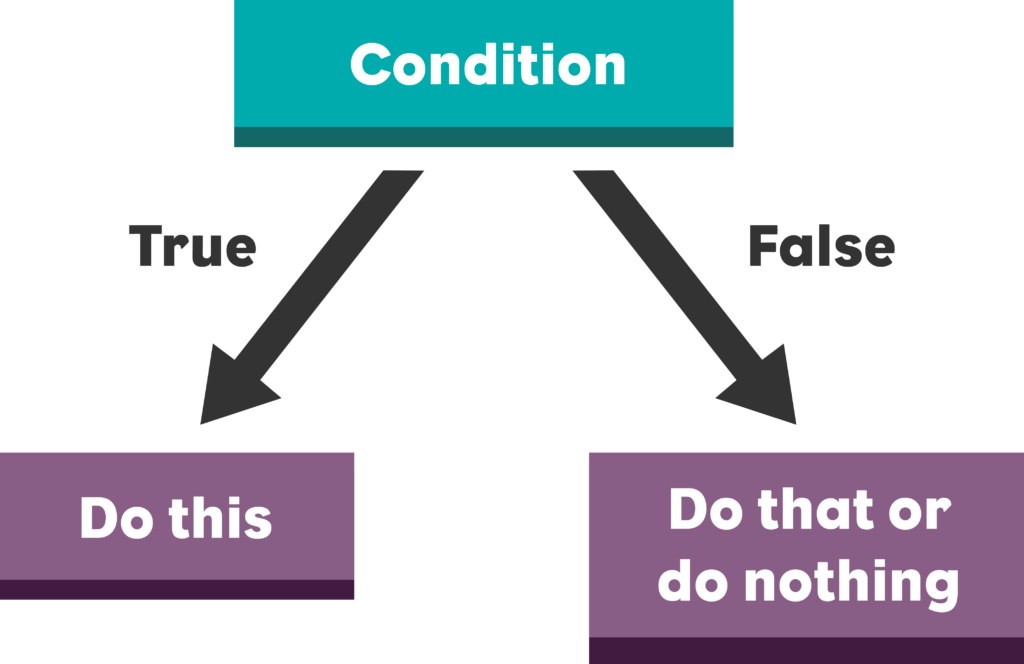

The Spark Filter function is a transformation that returns a new dataset containing only the rows that satisfy a given condition. It takes a Boolean expression as input and keeps the rows for which that expression evaluates to true. All other rows are dropped. This makes it central to data preprocessing and cleaning, because it lets users eliminate irrelevant or invalid records before performing further operations.

How Filter Function Operates on a Dataset in Spark

When the Filter function is applied to a dataset in Spark, the Boolean expression is evaluated for each row. If it evaluates to true, the row is included in the new dataset. If it evaluates to false or to null (for example, when a compared column is null), the row is excluded. Each partition is filtered independently and in parallel, without shuffling data between executors, which is why filtering scales well to large datasets. Like other transformations, filter is lazy: it only runs when an action such as count() or collect() is called.

Advantages and Use Cases of Spark Filter Function

The Spark Filter function offers several advantages that make it a preferred choice for data filtering in Spark applications. Some of the key advantages and use cases are:

1. Improved Performance: The Filter function optimizes the data filtering process by evaluating the Boolean expressions on each row in parallel. This parallel processing capability ensures efficient utilization of resources and reduces the time taken for filtering operations.

2. Simplified Data Manipulation: Using the Filter function, users can easily extract subsets of data from a dataset based on their requirements. This simplifies the data manipulation process and allows for seamless data exploration and analysis.

3. Enhanced Data Quality: By leveraging the Filter function, users can eliminate erroneous or irrelevant data from their datasets, resulting in improved data quality. This is particularly beneficial when dealing with noisy or incomplete datasets.

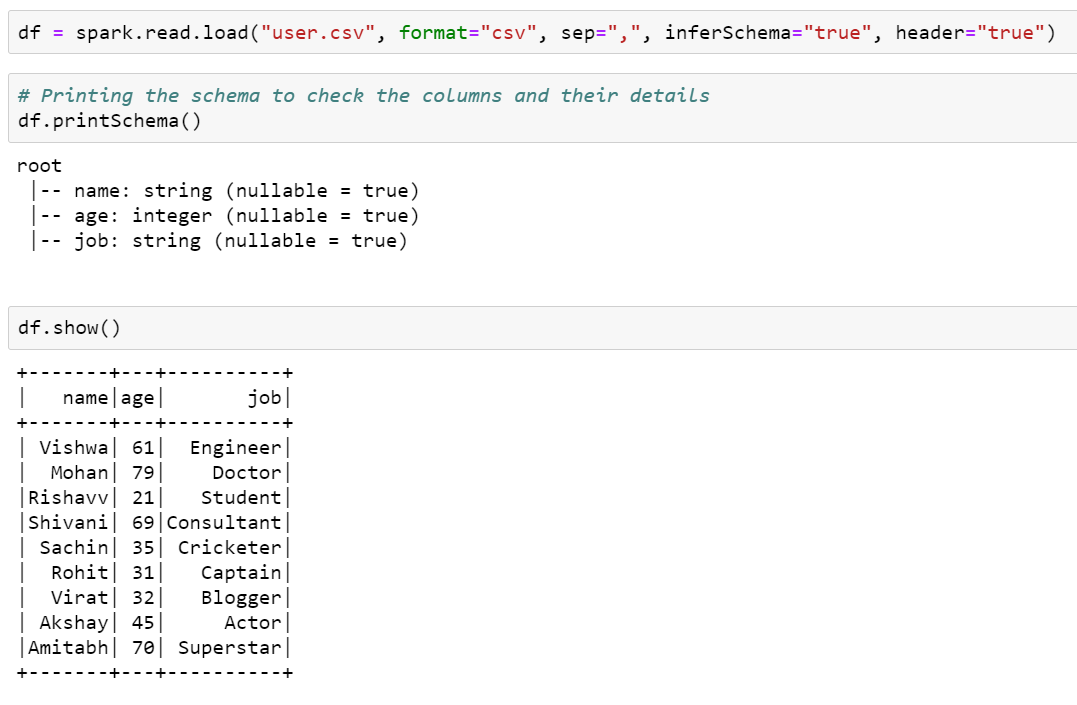

Filtering Data Based on a Single Condition using Spark Filter

Syntax and Usage of Spark Filter Function with a Single Condition

To filter data based on a single condition using the Spark Filter function, the syntax is as follows:

filtered_data = input_data.filter(condition_expr)

where “filtered_data” is the new dataset containing the filtered rows, “input_data” is the original dataset, and “condition_expr” is the Boolean expression specifying the condition.

Examples of Filtering Data based on Equality, Comparison, and String Conditions

Let’s consider some examples to illustrate how the Spark Filter function can be used to filter data based on different types of conditions.

1. Filtering Data based on Equality:

filtered_data = input_data.filter(input_data['age'] == 25)

This example filters the “input_data” dataset and selects only the rows where the “age” column is equal to 25.

2. Filtering Data based on Comparison:

filtered_data = input_data.filter((input_data['age'] > 30) & (input_data['salary'] <= 50000))

In this example, the "filtered_data" dataset is created by filtering the "input_data" dataset and selecting only the rows where the "age" column is greater than 30 and the "salary" column is less than or equal to 50000.

3. Filtering Data based on String Conditions:

filtered_data = input_data.filter(input_data['category'].startswith('A'))

Here, the "filtered_data" dataset is obtained by filtering the "input_data" dataset and selecting only the rows where the "category" column starts with the letter 'A'.

Handling Null Values and Complex Conditions in Spark Filtering

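When dealing with null values in Spark filtering, it is essential to handle them explicitly to avoid unexpected results. A comparison involving null evaluates to null rather than to true or false, so rows containing nulls are silently dropped by conditions such as category != 'B'. Use isNull() and isNotNull() to test for missing values, and eqNullSafe() (the SQL <=> operator) for null-safe equality. Comparing a column with None using "!=" does not work: the result is null for every row, so such a filter returns no rows at all.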

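To handle complex conditions, users can combine multiple conditions with the logical operators AND, OR, and NOT. In PySpark column expressions these are written as & (AND), | (OR), and ~ (NOT); inside a SQL expression string they are the keywords AND, OR, and NOT. We will explore this in more detail in the next section.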

Filtering Data using Multiple Conditions in Spark Filter

Overview of Filtering Multiple Conditions in Spark Filter

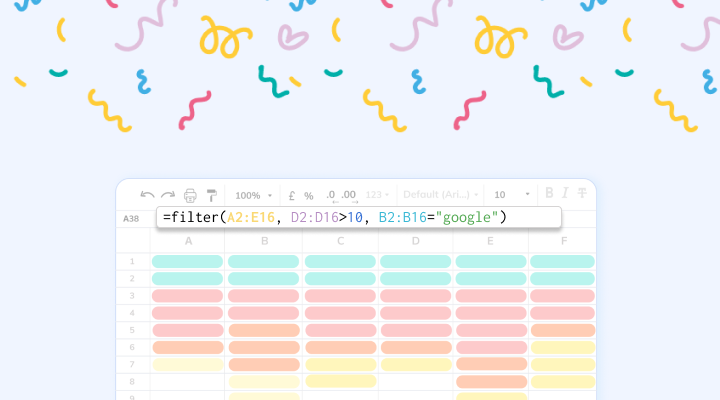

Sometimes, filtering based on a single condition may not be sufficient, and users may need to apply multiple conditions to achieve the desired result. The Spark Filter function supports filtering with multiple conditions, allowing users to combine different criteria for data selection.

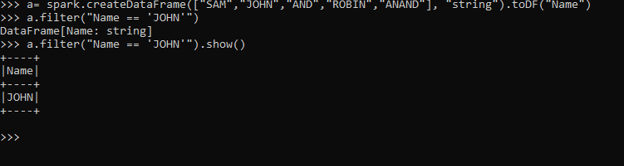

Applying Logical Operators (AND, OR, NOT) on Conditions with Spark Filter

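To filter data based on multiple conditions, users can apply the logical operators AND, OR, and NOT in their filter expressions. Because Python's own and, or, and not keywords cannot be overloaded, PySpark column expressions use &, |, and ~ instead. Here are some examples: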

1. Filtering using AND:

filtered_data = input_data.filter((input_data['age'] > 30) & (input_data['salary'] <= 50000))

2. Filtering using OR:

filtered_data = input_data.filter((input_data['age'] >= 40) | (input_data['salary'] > 80000))

3. Filtering using NOT:

filtered_data = input_data.filter(~(input_data['category'].startswith('A')))

Examples of Filtering Data with Multiple Conditions using Spark Filter

Let’s consider a few examples to better understand how to filter data using multiple conditions in Spark Filter.

1. Filtering Data with AND Operator:

filtered_data = input_data.filter((input_data['age'] > 30) & (input_data['salary'] <= 50000) & (input_data['category'] != 'B'))

This example filters the "input_data" dataset based on three conditions: age greater than 30, salary less than or equal to 50000, and category not equal to 'B'.

2. Filtering Data with OR Operator:

filtered_data = input_data.filter((input_data['age'] >= 40) | (input_data['salary'] > 80000))

Here, the “filtered_data” dataset is created by filtering the “input_data” dataset and selecting rows where the age is greater than or equal to 40 OR the salary is greater than 80000.

Understanding the Order of Operations in Spark Filter Multiple Conditions

Precedence of Logical Operators in Spark Filter Multiple Conditions

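When filtering data in Spark using multiple conditions, it is essential to understand operator precedence. In SQL expression strings the order, from highest to lowest, is NOT, AND, OR, and PySpark's ~, &, and | follow the same relative order. However, in Python & and | bind more tightly than comparison operators such as > and ==, so an expression like df.age > 30 & df.salary < 50000 is not parsed as intended and raises an error. Always wrap each comparison in its own parentheses, and add further parentheses to group conditions explicitly.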

How to Use Parentheses for Grouping Conditions in Spark Filter

To ensure the correct evaluation of multiple conditions, users can use parentheses to group conditions explicitly. Here’s an example:

filtered_data = input_data.filter((input_data['age'] >= 40) & ((input_data['salary'] > 80000) | (input_data['category'] == 'A')))

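In this example, the OR inside the inner parentheses is evaluated first, and its result is then combined with the age condition using AND. Without the inner parentheses, & would bind first and the expression would mean (age >= 40 AND salary > 80000) OR category = 'A', which selects a different set of rows.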

Considering Evaluation Order and Performance Impact in Spark Filter

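When filtering data with multiple conditions in Spark, where the filter sits in the query usually matters more than the order of the conditions inside it. For DataFrames, the Catalyst optimizer combines adjacent filters into one, and Spark SQL does not guarantee the order in which subexpressions are evaluated, so reordering conditions within a single filter rarely has a reliable effect. Applying filters as early as possible, before joins and aggregations, and filtering on columns that can be pushed down to the data source reduces the amount of data processed in later stages.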

Advanced Techniques for Spark Filter Multiple Conditions

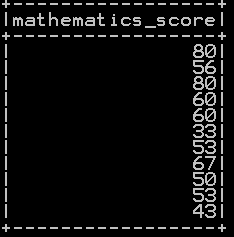

Applying Functions and Expressions in Spark Filter Conditions

The Spark Filter function allows users to apply various functions and expressions within the filter conditions to perform advanced filtering operations. Users can leverage built-in functions like “substring”, “date_format”, “to_date”, etc., or even define their own user-defined functions (UDFs) to filter data based on complex conditions.

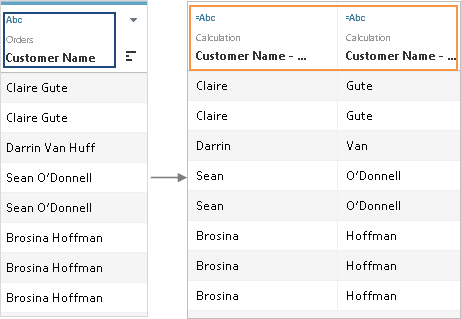

Using Columns and Column Expressions for Complex Filtering in Spark

To filter data based on complex conditions, users can use column names and column expressions in the Spark Filter function. Column expressions provide a rich set of operations and transformations that can be used to create custom filtering conditions. For example:

from pyspark.sql.functions import col

filtered_data = input_data.filter((col('age') * 2) > col('salary'))

Here, the “filtered_data” dataset is obtained by filtering the “input_data” dataset based on the condition “age * 2 > salary”.

Incorporating User-defined Functions (UDFs) in Spark Filter Multiple Conditions

Spark enables users to define their own User-Defined Functions (UDFs) and apply them in the Spark Filter function. UDFs allow users to create custom functions for filtering based on specific requirements. For example:

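from pyspark.sql.functions import udf
from pyspark.sql.types import BooleanType

def filter_condition(value):
    # Custom filtering logic; Spark passes None for NULL values
    return value is not None and value > 100

# filter() needs a boolean column, but udf() defaults to StringType,
# so declare BooleanType explicitly
udf_filter_condition = udf(filter_condition, BooleanType())

filtered_data = input_data.filter(udf_filter_condition(input_data['age']))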

Here, the “filtered_data” dataset is created by applying the user-defined function “udf_filter_condition” to the “age” column of the “input_data” dataset.

Optimization Tips and Best Practices for Spark Filter Multiple Conditions

Leveraging Partition Pruning and Predicate Pushdown in Spark Filter

To optimize Spark Filter operations involving multiple conditions, users can take advantage of partition pruning and predicate pushdown. Partition pruning applies to data stored in a partitioned layout (for example, Parquet files written with partitionBy): when a filter references the partition column, Spark skips the directories whose partition values cannot match. Predicate pushdown passes filter conditions to the data source itself, for example to the Parquet or ORC reader, which can skip row groups using column statistics, or to a JDBC database as a WHERE clause. Either way, less data is read and transferred in the first place.

Considering Data Skewness and Join Filters for Enhanced Performance

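Filtering itself is a narrow transformation that runs independently on each partition, so data skew mainly matters when filtered data feeds a shuffle such as a join or an aggregation. In those scenarios, users should be aware of uneven key distributions and consider techniques like repartitioning, bucketing, or broadcast joins (using the "broadcast" hint) to mitigate the impact of skew. A highly selective filter can also leave many nearly empty partitions, which repartition() or coalesce() can consolidate.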

Additionally, when joining multiple datasets, apply filters to each input before the join so that fewer rows are shuffled. Catalyst can often push filters below a join automatically, but filtering early keeps the intent explicit and also shrinks the side you might broadcast.

Caching and Reusing Filtered Data for Improved Efficiency in Spark

To avoid recomputing the filtered dataset every time it is reused, users can cache() or persist() it in memory or on disk. Caching is lazy: the data is stored when the first action runs, and subsequent actions read it from the cache instead of re-reading and re-filtering the source. Calling unpersist() releases the storage once the data is no longer needed.

FAQs

Q1. What is the syntax for using the Spark Filter function?

A1. The syntax for using the Spark Filter function is:

filtered_data = input_data.filter(condition_expr)

Q2. How can I handle null values when filtering data in Spark?

A2. Spark provides isNull() and isNotNull() to test for null values in filters, and eqNullSafe() for null-safe equality. Comparing a column with None using "!=" does not work: any comparison with null evaluates to null, so such a filter returns no rows.

Q3. How can I filter data based on multiple conditions using the Spark Filter function?

A3. Combine the conditions with logical operators: & (AND), | (OR), and ~ (NOT) in PySpark column expressions, or the keywords AND, OR, and NOT inside a SQL expression string. Wrap each individual condition in parentheses.

Q4. Can I use functions and expressions within the Spark Filter conditions?

A4. Yes, Spark allows users to apply various functions and expressions within the filter conditions, including built-in functions and user-defined functions (UDFs).

Q5. What are some optimization tips for Spark Filter operations involving multiple conditions?

A5. Users can optimize Spark Filter operations by leveraging techniques like partition pruning, predicate pushdown, considering data skewness, and applying join filters. Additionally, caching or persisting filtered data can enhance efficiency.

In conclusion, the Spark Filter function in Apache Spark provides a versatile and efficient approach to filter datasets based on specific conditions. By understanding its capabilities, syntax, and optimization techniques, users can leverage the power of Spark Filter to preprocess, clean, and extract relevant data from large datasets efficiently.

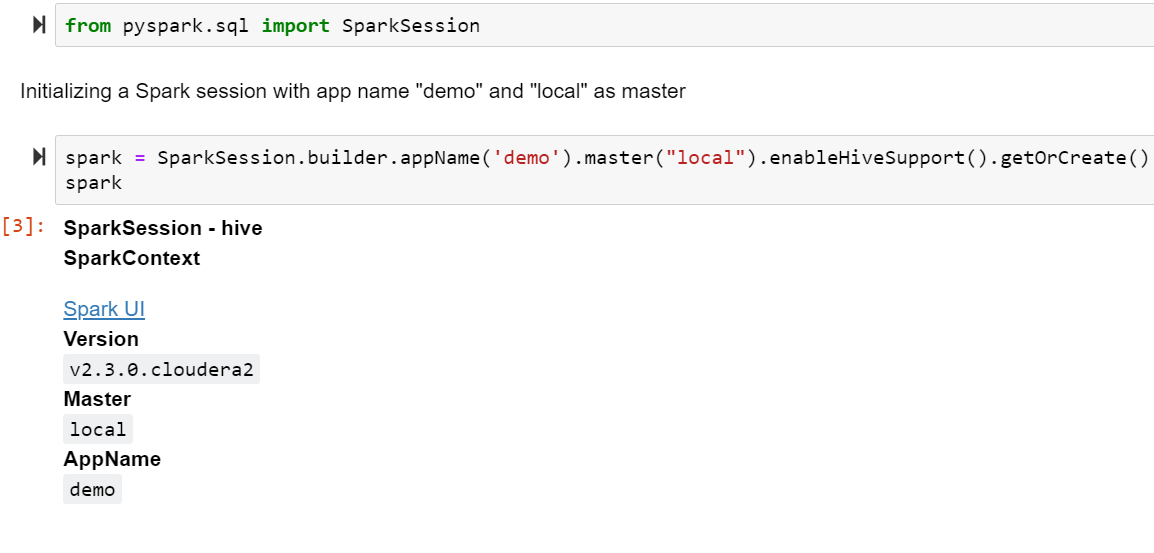


Pyspark Filter

Apache Spark, an open-source data processing framework, has gained significant popularity for its ability to process large-scale data sets rapidly. In Spark, PySpark is the Python API that facilitates programming in Spark. One of the most powerful operations in PySpark is the filter function, which enables data analysts and data scientists to perform SQL-like filtering on distributed data with exceptional ease and efficiency.

What is PySpark Filter?

PySpark filter is a transformation that extracts a subset of data from a DataFrame or RDD (Resilient Distributed Dataset) based on specified conditions. On an RDD it accepts a Python function, often a lambda, that returns True for the elements to keep. On a DataFrame it accepts a Column expression or a SQL expression string, so complex filtering can be written in a concise and familiar manner.

PySpark filter operates similar to the SQL WHERE clause, allowing users to implement filtering conditions on specific columns or fields of the DataFrame. It evaluates each record against the specified condition and retains only those records that satisfy the condition.

Using PySpark Filter:

To utilize the power of PySpark filter, one must have a basic understanding of PySpark RDDs and DataFrames.

1. Filtering Using RDD:

In PySpark, an RDD represents a distributed collection of elements that can be processed in parallel. To filter an RDD, we pass a function, typically a lambda, that returns True for the elements we want to keep. For instance, consider an RDD named 'numbers' containing integer values:

numbers = sc.parallelize([1, 2, 3, 4, 5, 6, 7, 8, 9, 10])

To filter the RDD and retain only the even numbers, we can simply use the filter function as follows:

filtered_numbers = numbers.filter(lambda x: x % 2 == 0)

This will create a new RDD containing only the even numbers {2, 4, 6, 8, 10}.

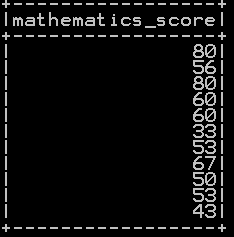

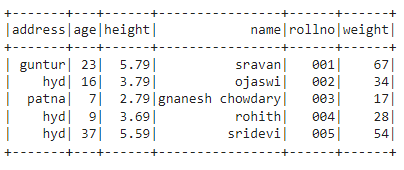

2. Filtering Using DataFrames:

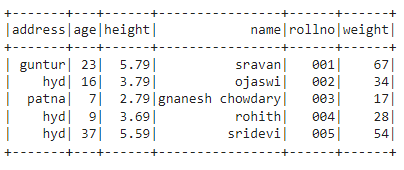

DataFrames are the preferred data structure in modern Spark (the DataFrame API was introduced in Spark 1.3 and unified with Datasets in Spark 2.0). They provide a higher-level API and are optimized by the Catalyst query optimizer. To filter a DataFrame, we use its filter() method (or the equivalent where()). Consider a DataFrame named 'employees' with the columns 'name', 'age', and 'salary':

| Name | Age | Salary |
|--------|-----|--------|
| John | 25 | 5000 |
| Alice | 30 | 6000 |
| Bob | 40 | 8000 |
| Claire | 35 | 5500 |
| David | 22 | 4500 |

To filter the DataFrame and retain only the employees with age greater than 30, we can use the filter operation as demonstrated below:

filtered_employees = employees.filter(employees.age > 30)

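This will create a new DataFrame that contains the following records. Alice, who is exactly 30, is not included because the condition is strictly greater than 30; use >= 30 to include her.

| Name | Age | Salary |
|--------|-----|--------|
| Bob | 40 | 8000 |
| Claire | 35 | 5500 |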

Advanced Filtering in PySpark:

PySpark filter supports a wide range of filtering conditions, including complex logical operations, null checks, and comparisons. Here are a few examples:

1. Logical operations: PySpark filter supports the logical operators AND, OR, and NOT (written &, |, and ~). For example, to filter for employees older than 30 and younger than 40 with a salary greater than 5000, we use the following filter expression:

filtered_employees = employees.filter((employees.age > 30) & (employees.age < 40) & (employees.salary > 5000))

2. Null checks: PySpark filter can handle null values effortlessly. For instance, to filter out records with null values in the ‘salary’ column, we can use the following filter expression:

filtered_employees = employees.filter(employees.salary.isNotNull())

3. String filtering: PySpark filter also works effectively with string data. For instance, to filter employees with names starting with the letter ‘J’, we use the following filter expression:

filtered_employees = employees.filter(employees.name.startswith('J'))

FAQs:

1. Is PySpark filter lazy evaluated?

Yes, similar to other PySpark transformations, filter is a lazy operation. It returns a new RDD or DataFrame without immediately executing the filtering operation. The actual filtering happens only when an action is performed on the filtered data.

2. Can I use multiple conditions while filtering?

Yes, PySpark filter allows users to apply multiple conditions using logical operators like AND, OR, and NOT. Multiple conditions can be combined using parentheses for complex filtering operations.

3. Can I filter based on a column value present within a list?

Yes, PySpark filter supports filtering based on column values present within a list. For example, to filter employees whose age is either 25 or 30, we can use the following filter expression:

filtered_employees = employees.filter(employees.age.isin([25, 30]))

4. Is PySpark filter case-sensitive?

By default, PySpark filter is case-sensitive while filtering string data. To perform case-insensitive filtering, users need to utilize the lower() or upper() function in combination with filter.

5. Does PySpark filter support custom filtering functions?

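Yes. For RDDs, filter() takes any Python function, including lambdas. For DataFrames, custom logic can be wrapped in a UDF (User Defined Function) declared with a BooleanType return type, although built-in column functions are usually faster because they avoid moving data between the JVM and Python.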

In conclusion, PySpark filter provides an efficient and user-friendly way to filter and extract subset data from large-scale datasets. The ability to perform complex filtering operations in a SQL-like manner simplifies data manipulation and allows data analysts and data scientists to focus on extracting meaningful insights from distributed data efficiently.

Pyspark Filter Timestamp Greater Than

Introduction:

Apache Spark has emerged as a popular big data processing engine due to its ability to handle large-scale datasets efficiently. PySpark, the Python interface to Spark, provides a powerful API that allows users to perform various data processing operations, including filtering, transforming, and aggregating data. When working with temporal data, it is often necessary to filter records based on specific time-related conditions, such as selecting all records that have timestamps greater than a certain point in time. In this article, we will delve into the mechanics of filtering temporal data in Spark using the PySpark filter() operation and demonstrate how to filter timestamps greater than a given value.

Filtering Temporal Data in PySpark:

PySpark provides multiple ways to filter data, with the filter() operation being the most commonly used method. This operation allows users to create a Boolean condition to specify the records they want to keep in a dataset. When working with temporal data, filtering timestamps greater than a certain value can be achieved by comparing the timestamp column with a specific date or time.

Assuming we have a DataFrame named `df` containing a timestamp column named `timestamp_col`, we can filter records with a timestamp greater than a given value using the following PySpark code:

```python
from pyspark.sql.functions import col

filtered_df = df.filter(col("timestamp_col") > "2022-01-01 00:00:00")
```

In the above code snippet, we import the `col()` function from the `pyspark.sql.functions` module, which lets us reference a DataFrame column by name. We then call `filter()` on the DataFrame `df` with a condition that compares `timestamp_col` with the string `"2022-01-01 00:00:00"` using the greater-than operator (`>`), so only records with timestamps later than that value are retained in the resulting `filtered_df`.

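It's worth noting that the comparison value should be compatible with the column's type. Here `timestamp_col` is a timestamp while the literal is a string; Spark implicitly casts the string to a timestamp, so the comparison works, but an unparseable string can become null and match nothing (or raise an error under ANSI mode). To be explicit, convert the literal with `to_timestamp(lit("2022-01-01 00:00:00"))` or compare against a Python `datetime` object.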

Frequently Asked Questions (FAQs):

Q1: Can I filter timestamps greater than a dynamically specified value?

A1: Absolutely! The comparison value used in the filter operation does not have to be hard-coded. You can use variables or expressions to specify the comparison value dynamically. For example:

```python
from pyspark.sql.functions import col

threshold_timestamp = "2022-01-01 00:00:00"

filtered_df = df.filter(col("timestamp_col") > threshold_timestamp)
```

By using a variable (`threshold_timestamp`) to store the desired timestamp value, you can easily modify the filtering condition without changing the code logic.

Q2: Can I filter timestamps greater than a specific time of the day?

A2: Yes, you can filter based on specific time ranges within a day. To achieve this, you can extract the time component from the timestamp column and compare it with the desired time. For example, if you want to filter records with timestamps greater than 10:30 AM, you can use the following code:

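```python
from pyspark.sql.functions import col, hour, minute

ts_hour = hour(col("timestamp_col"))
ts_minute = minute(col("timestamp_col"))

# Later than 10:30 (minute granularity): any hour after 10, or hour 10 past minute 30
filtered_df = df.filter((ts_hour > 10) | ((ts_hour == 10) & (ts_minute > 30)))
```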

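In this code snippet, we use the `hour()` and `minute()` functions from PySpark to extract the hour and minute components from `timestamp_col`. Requiring both `hour > 10` and `minute > 30` would be wrong, because it rejects times such as 11:15. Instead, a record qualifies if its hour is after 10, or if its hour is exactly 10 and its minute is after 30. Note that `hour()` and `minute()` interpret timestamps in the session time zone.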

Q3: What happens if the DataFrame contains null values in the timestamp column?

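A3: Comparisons with null evaluate to null, and `filter()` keeps only rows where the condition is true. As a result, records with null values in the timestamp column are excluded from the resulting DataFrame without raising an error. If you need to keep them, add an explicit `| col("timestamp_col").isNull()` condition.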

Q4: Can I filter timestamps greater than the current system time?

A4: Yes, you can compare timestamps to the current system time by using the `current_timestamp()` function from PySpark. Here’s an example:

```python
from pyspark.sql.functions import col, current_timestamp

filtered_df = df.filter(col("timestamp_col") > current_timestamp())
```

By comparing the `timestamp_col` with `current_timestamp()`, you can filter records with timestamps greater than the current time when executing the code.

Conclusion:

Filtering temporal data using PySpark’s filter() operation is a straightforward and efficient way to extract records based on conditions involving timestamps. By comparing the timestamp column with a specified value, you can easily filter timestamps greater than a particular point in time. PySpark’s expressive syntax and powerful API make it an ideal choice for processing large-scale temporal datasets.

Spark Filter Not In List

When it comes to big data processing, Apache Spark has emerged as a leading technology in the field. With its powerful capabilities for distributed computing, Spark enables businesses to perform complex analytics tasks at lightning speed. One of the most essential components of any Spark application is the filtering algorithm, which allows users to extract relevant information from large datasets. In this article, we will explore the concept of Spark filter not in list, its applications, and common FAQs associated with its usage.

Understanding Spark Filter Not in List

Spark filter not in list is the negation of an IN condition: it keeps only the records whose value in a given column does not appear in a specified list, and removes the rest. In Spark SQL this is written column NOT IN (...); in the DataFrame API it is built by negating isin(). It is commonly used when the user wants to exclude a set of specific values from a dataset, facilitating easier data analysis and manipulation.

Syntax and Usage

The syntax for the Spark filter not in list operation is as follows:

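```python
from pyspark.sql.functions import col

# PySpark has no isNotInCollection(); negate isin() with ~ instead
filtered_df = df.filter(~col("column_name").isin(list_of_values))
```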

In this syntax, `column_name` is the column to test and `list_of_values` is a Python list of the values to exclude. When this condition is applied to a Spark DataFrame, it removes every record whose value is in the list and keeps the rest. In Scala, the equivalent is `df.filter(!col("column_name").isInCollection(listOfValues))`.

Applications of Spark Filter Not in List

1. Data Cleansing: When working with large datasets, it is common to encounter outliers or inconsistent data. The Spark filter not in list operation facilitates the removal of unimportant or irrelevant data values, improving the quality and accuracy of the dataset.

2. Customer Segmentation: In marketing analytics, understanding customer behavior is crucial. By using Spark filter not in list, marketers can segment their customers based on exclusion criteria, such as excluding customers whose IDs appear on a known list (for example, accounts that have opted out of marketing).

3. Anomaly Detection: For anomaly detection purposes, excluding known patterns or normal behaviors is important. Using Spark filter not in list, users can eliminate the expected behavior from the dataset and focus only on the abnormal patterns.

4. Fraud Detection: In financial fraud detection, identifying fraudulent transactions among a large volume of data is challenging. Spark filter not in list enables users to exclude legitimate transactions or known non-fraudulent patterns, allowing for easier identification of potential fraud instances.

Frequently Asked Questions

Q1: What is the difference between Spark filter not in list and filter?

A1: Not in list is not a separate operation. It is a particular condition, the negation of `isin()`, passed to `filter()`. `filter()` accepts any Boolean condition, whereas not in list specifically excludes rows whose value matches an entry in a list.

Q2: Can I use Spark filter not in list operation on multiple columns simultaneously?

A2: Yes. Either chain one `filter()` call per column, or combine the conditions in a single filter with `&`, for example `df.filter(~col("a").isin(list_a) & ~col("b").isin(list_b))`.

Q3: Are there any performance implications of using Spark filter not in list?

A3: For a short list, the condition becomes a simple NOT IN predicate evaluated row by row, which is cheap and needs no shuffle. Very large exclusion lists are embedded as literals in the query plan and can make planning slow; in that case, loading the excluded values into a DataFrame and using a left anti join usually scales better. As always, measure the impact on realistic data.

Q4: How do I handle cases where the list of exclusion values is dynamically generated?

A4: Spark filter not in list operation supports dynamically generated exclusion lists. You can use Spark programming features to generate the list programmatically or retrieve it from an external data source, and then apply the exclusion logic on the DataFrame.

Q5: Is Spark filter not in list case-sensitive?

A5: By default, Spark filter not in list is case-sensitive. However, you can use additional Spark functions like `upper()` or `lower()` to perform case-insensitive filtering as per your requirements.

Conclusion

Spark filter not in list is a powerful tool for data filtering in Apache Spark, allowing users to exclude specific values from a dataset efficiently. Its applications span across various domains, including data cleansing, customer segmentation, anomaly detection, and fraud detection. By utilizing this capability, analysts and data scientists can focus on the desired aspects of their datasets, improving their insights and decision-making processes.
