Python Max Time Complexity

Overview of time complexity in Python

Time complexity refers to the measure of the amount of time an algorithm takes to run as a function of the input size. It is a critical aspect of algorithm analysis and is key in determining the efficiency and scalability of a program. In Python, time complexity is influenced by various factors, such as the size of the input, the operations performed, and the data structures used.

Understanding the concept of maximum time complexity

Maximum time complexity is the running time of an algorithm in the worst-case scenario. It is usually expressed in Big O notation, which gives an upper bound on how the running time grows with the input size and so characterizes the scalability of the algorithm.

Big O notation and its significance in measuring time complexity

Big O notation is a mathematical notation used to describe the behavior of an algorithm as the input size approaches infinity. It provides an upper bound by disregarding constant factors and lower-order terms. For example, if an algorithm has a worst-case time complexity of O(n^2), its running time grows at most quadratically with the input size.

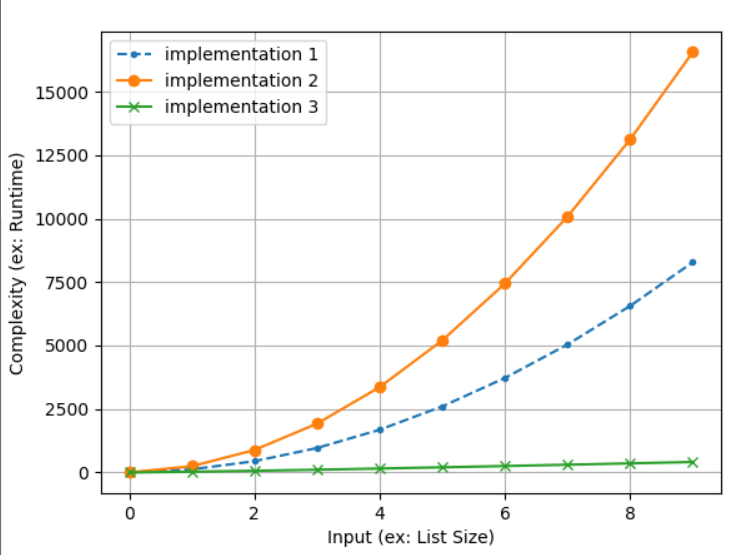

Exploring different time complexity classes and their implications in Python

In Python, several time complexity classes commonly arise. Some notable examples include:

1. O(1) constant time complexity: This implies that the algorithm’s running time remains constant, regardless of the input size. An example of this is accessing an element in a list by its index.

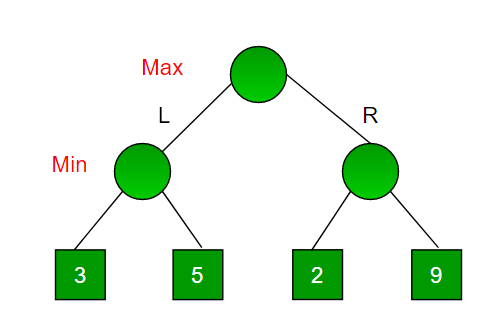

2. O(log n) logarithmic time complexity: This indicates that the running time grows logarithmically with the input size. It frequently occurs in algorithms such as binary search, where the input is halved in each step.

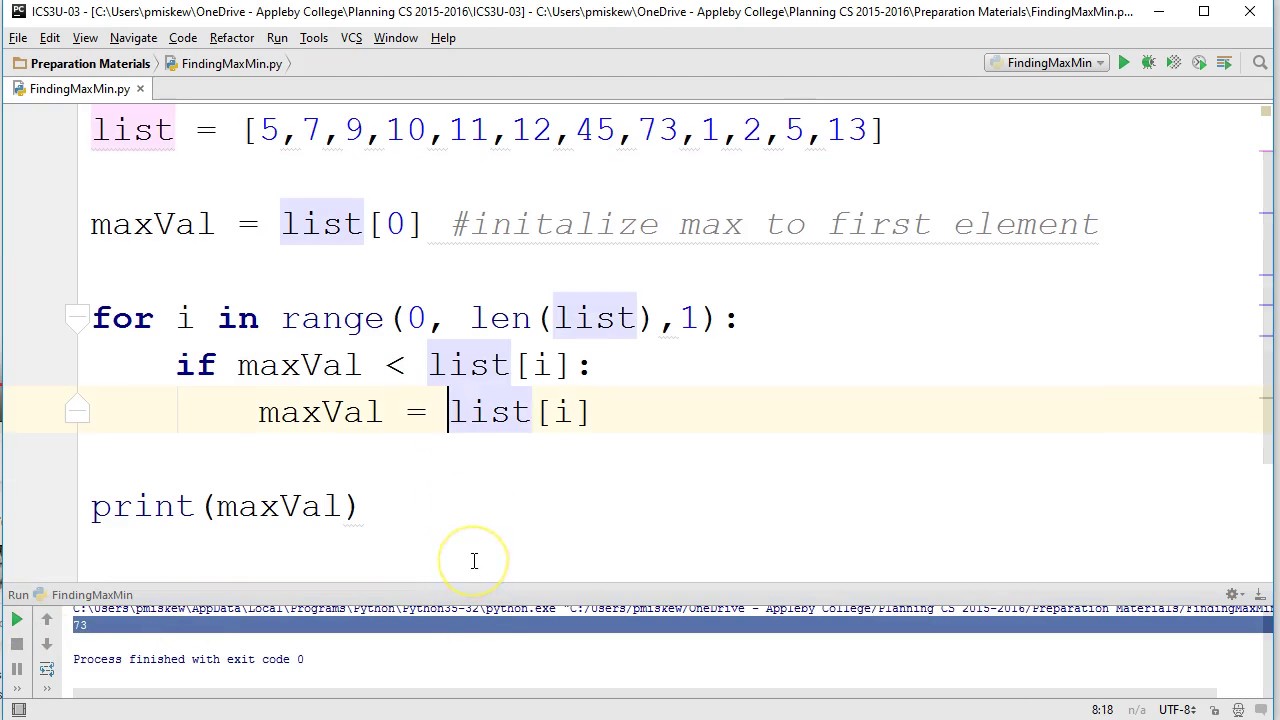

3. O(n) linear time complexity: This implies that the running time scales linearly with the input size. Many algorithms, such as traversing a list or array, fall into this category.

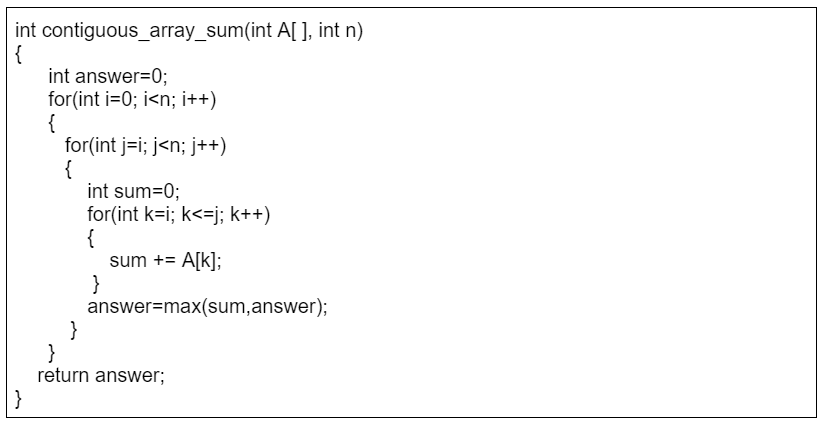

4. O(n^2) quadratic time complexity: This class represents algorithms in which the running time grows quadratically with the input size. It commonly arises in nested loops.
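The four classes above can be made concrete by counting steps rather than measuring time. The following is a minimal sketch; the function names are hypothetical and exist only for illustration.

```python
def constant_steps(items):
    """O(1): one index access, regardless of len(items)."""
    _ = items[0]
    return 1


def logarithmic_steps(n):
    """O(log n): halve n until it reaches 1, counting the halvings."""
    steps = 0
    while n > 1:
        n //= 2
        steps += 1
    return steps


def linear_steps(items):
    """O(n): visit every element once."""
    steps = 0
    for _ in items:
        steps += 1
    return steps


def quadratic_steps(items):
    """O(n^2): visit every ordered pair of elements."""
    steps = 0
    for _ in items:
        for _ in items:
            steps += 1
    return steps


data = list(range(16))
print(constant_steps(data), logarithmic_steps(len(data)),
      linear_steps(data), quadratic_steps(data))  # 1 4 16 256
```

Doubling the input to 32 elements leaves the first count unchanged, adds one step to the second, doubles the third, and quadruples the fourth.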

The relationship between time complexity and algorithm efficiency

Time complexity is directly related to algorithm efficiency. An algorithm with a lower time complexity will execute faster for large input sizes compared to an algorithm with a higher time complexity. Therefore, it is crucial to optimize algorithms to reduce time complexity and improve efficiency.

Analyzing the worst-case scenario and its impact on time complexity

The worst-case scenario represents the input that would result in the maximum time complexity. It is an essential consideration in algorithm analysis as it provides insight into the algorithm’s behavior under unfavorable conditions. By analyzing the worst-case scenario, developers can identify potential performance bottlenecks and devise suitable optimizations.

Techniques to optimize time complexity in Python

There are several techniques to optimize time complexity in Python:

1. Choosing suitable data structures: By selecting appropriate data structures like dictionaries or sets, you can improve the efficiency of various operations, such as searching or retrieving elements, resulting in better time complexity.

2. Implementing efficient algorithms: By utilizing efficient algorithms specifically designed for a problem, you can achieve better time complexity compared to brute-force or inefficient approaches.

3. Utilizing built-in functions and libraries: Python provides numerous built-in functions and libraries that are highly optimized. Utilizing them can significantly reduce time complexity.
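As an illustration of the first two techniques, here is a sketch of duplicate detection written twice: once by brute force and once with a set. Both function names are made up for this example, and the set-based version assumes the items are hashable.

```python
def has_duplicates_quadratic(items):
    """Compare every pair: O(n^2) comparisons in the worst case."""
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j]:
                return True
    return False


def has_duplicates_linear(items):
    """Track seen values in a set: O(n) on average for hashable items."""
    seen = set()
    for item in items:
        if item in seen:
            return True
        seen.add(item)
    return False


print(has_duplicates_quadratic([1, 2, 3, 2]), has_duplicates_linear([1, 2, 3, 2]))
```

Both functions return the same answers; only the amount of work differs as the list grows.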

Common Python operations and their time complexities

Python offers a variety of operations, each with its own time complexity:

1. Indexing an array or list: O(1)

2. Inserting or deleting an element at the beginning of an array: O(n)

3. Appending an element to an array or list: O(1) (amortized time complexity)

4. Searching for an element in a list: O(n)

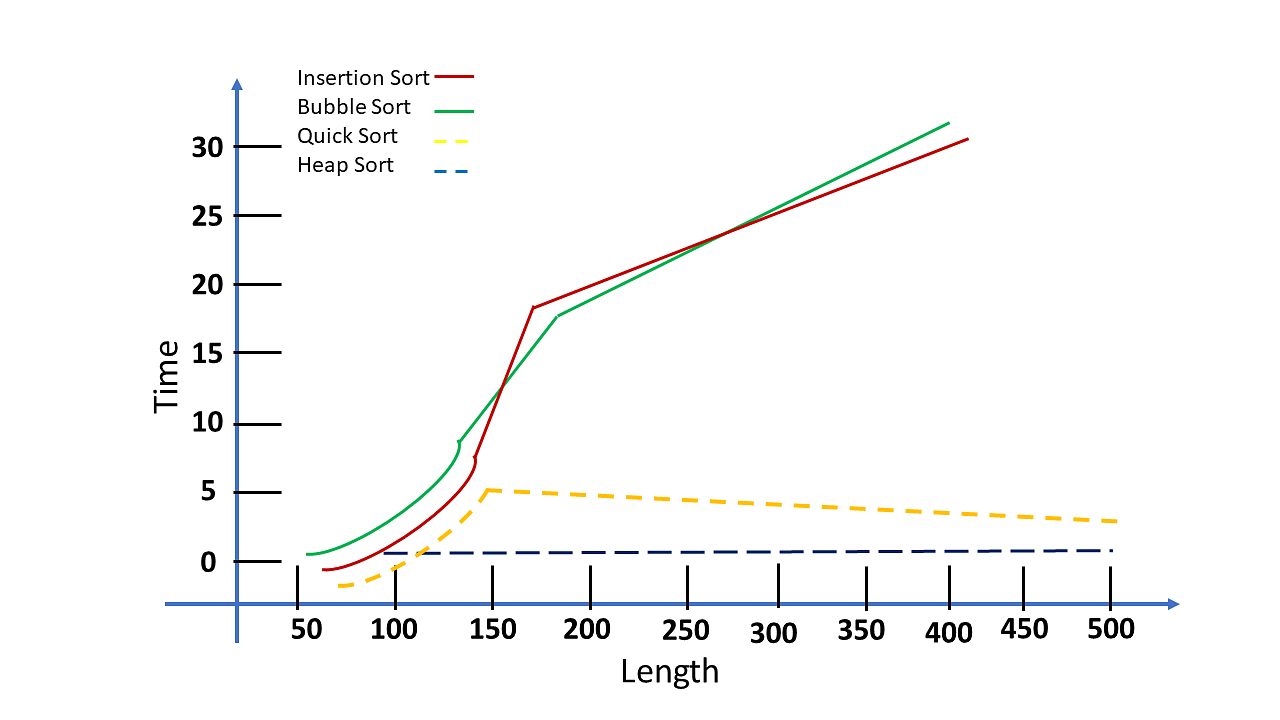

5. Sorting a list using built-in sort functions: O(n log n)
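The O(n) cost of inserting at the beginning of a list matters most when it happens in a loop. One possible alternative, sketched below, is `collections.deque` from the standard library, whose `appendleft` runs in O(1). The helper names are hypothetical.

```python
from collections import deque


def build_front_list(n):
    """Insert at index 0 each time: O(n) per insert, O(n^2) overall."""
    result = []
    for i in range(n):
        result.insert(0, i)  # shifts every existing element one slot right
    return result


def build_front_deque(n):
    """Append on the left of a deque: O(1) per insert, O(n) overall."""
    result = deque()
    for i in range(n):
        result.appendleft(i)
    return result


print(build_front_list(5))         # [4, 3, 2, 1, 0]
print(list(build_front_deque(5)))  # [4, 3, 2, 1, 0]
```

The trade-off is that indexing into the middle of a deque is O(n), so a deque suits workloads dominated by operations at either end.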

Real-world examples of Python code with various time complexities

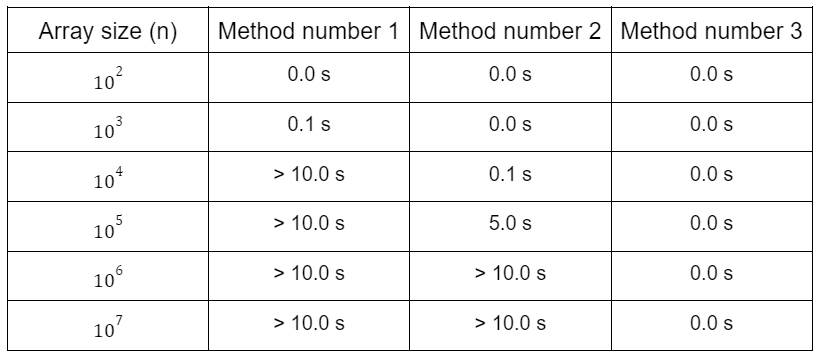

Let’s explore some real-world examples to understand how time complexity impacts Python code:

Example 1: Inefficient Linear Search

```python
def linear_search(array, target):
    # Check each element in turn until the target is found.
    for item in array:
        if item == target:
            return True
    return False
```

This code has a time complexity of O(n) since it needs to iterate through the entire list in the worst-case scenario.

Example 2: Efficient Binary Search

```python
def binary_search(array, target):
    # Assumes `array` is sorted in ascending order.
    low = 0
    high = len(array) - 1
    while low <= high:
        mid = (low + high) // 2
        if array[mid] == target:
            return True
        elif array[mid] < target:
            low = mid + 1
        else:
            high = mid - 1
    return False
```

Binary search has a time complexity of O(log n) since it halves the search space in each iteration.
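In practice, the same O(log n) lookup can be written with the standard library's `bisect` module instead of a hand-rolled loop. The sketch below assumes the input list is already sorted in ascending order; `contains_sorted` is a hypothetical helper name.

```python
from bisect import bisect_left


def contains_sorted(sorted_items, target):
    """Return True if target is in sorted_items, using binary search."""
    # bisect_left returns the leftmost position where target could be inserted
    # while keeping the list sorted.
    index = bisect_left(sorted_items, target)
    return index < len(sorted_items) and sorted_items[index] == target


print(contains_sorted([1, 3, 5, 7, 9], 7))  # True
print(contains_sorted([1, 3, 5, 7, 9], 4))  # False
```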

FAQs

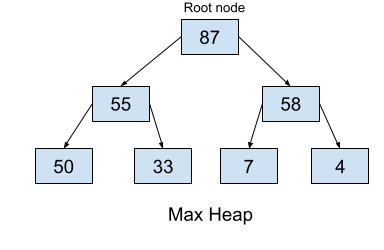

Q: What is the time complexity of the max() function in Python?

A: The max() function has a time complexity of O(n) since it needs to iterate through the entire input to find the maximum value.

Q: What is the time complexity of the math.max() function in Python?

A: The math.max() function does not exist in Python. However, the built-in max() function serves a similar purpose and has a time complexity of O(n).

Q: How does time complexity affect the performance of a Python program?

A: Time complexity directly impacts the performance of a Python program. Lower time complexity results in faster execution for large input sizes, whereas higher time complexity can lead to slower execution times.

Q: What is the time complexity of indexing in Python?

A: Indexing a list or array in Python has a constant time complexity of O(1) since it directly accesses the element at a specific position.

Q: What is the time complexity of dictionary operations in Python?

A: Common dictionary operations, such as getting or setting a value by key, have an average time complexity of O(1). However, in the worst-case scenario, it can degrade to O(n) if many keys collide.
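The collision case can be provoked on purpose. The sketch below uses a hypothetical `CollidingKey` class whose instances all return the same hash, then counts equality checks to show the extra work each insertion performs. The exact count is an implementation detail of the interpreter, so it is printed rather than stated.

```python
class CollidingKey:
    """Hypothetical key type whose instances all share one hash value."""

    eq_calls = 0

    def __init__(self, value):
        self.value = value

    def __hash__(self):
        return 1  # deliberately bad: every key collides

    def __eq__(self, other):
        CollidingKey.eq_calls += 1
        return isinstance(other, CollidingKey) and self.value == other.value


n = 50
table = {CollidingKey(i): i for i in range(n)}
print(len(table), CollidingKey.eq_calls)
```

With a well-distributed hash, building the same dictionary would need few or no equality checks; here each new key has to be compared against keys that are already stored.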

In summary, understanding time complexity in Python is crucial for analyzing algorithm efficiency and optimizing code. By identifying maximum time complexity using Big O notation, developers can improve program performance, choose suitable data structures, and utilize efficient algorithms to reduce time complexity and enhance scalability.

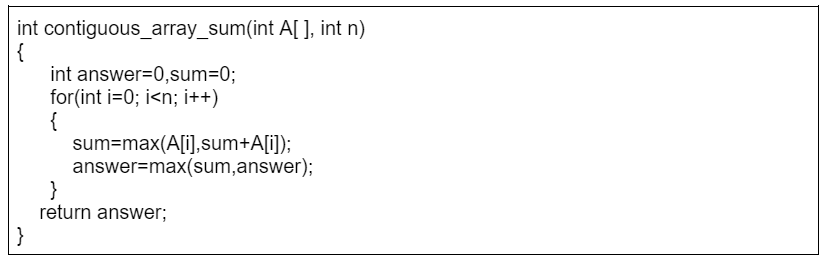


What Is Maximum Time Complexity?

In the field of computer science and algorithm analysis, time complexity is an essential concept that measures the efficiency of an algorithm. It provides an estimation of the time required by an algorithm to solve a problem based on the input size. The maximum time complexity, also known as the worst-case time complexity, represents the upper bound time required by an algorithm for any given input size.

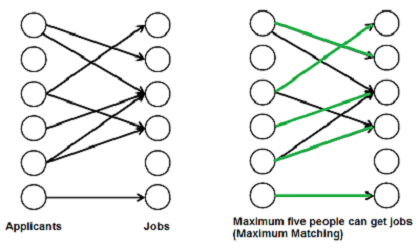

Time complexity is a vital aspect to consider when designing algorithms, as it helps in determining the scalability and efficiency of the solution. By analyzing the time complexity, researchers and developers can make informed decisions about which algorithm to use in various scenarios.

Understanding Big O Notation

Before delving into maximum time complexity, it is crucial to grasp the concept of Big O notation, which is widely used to represent time complexity. Big O notation provides an asymptotic upper bound of the time complexity in the worst case scenario.

The Big O notation is denoted by O(f(n)), where f(n) represents a function of the input size n. The letter “O” signifies the upper bound, indicating that the algorithm’s time complexity will not exceed this function. Here, we are primarily concerned with the maximum time complexity, so we focus on the worst-case scenario.

For instance, if an algorithm has a time complexity of O(n), it means that the time taken by the algorithm increases linearly with the input size. Similarly, if the time complexity is O(n^2), it indicates that the time taken by the algorithm increases quadratically with the input size.

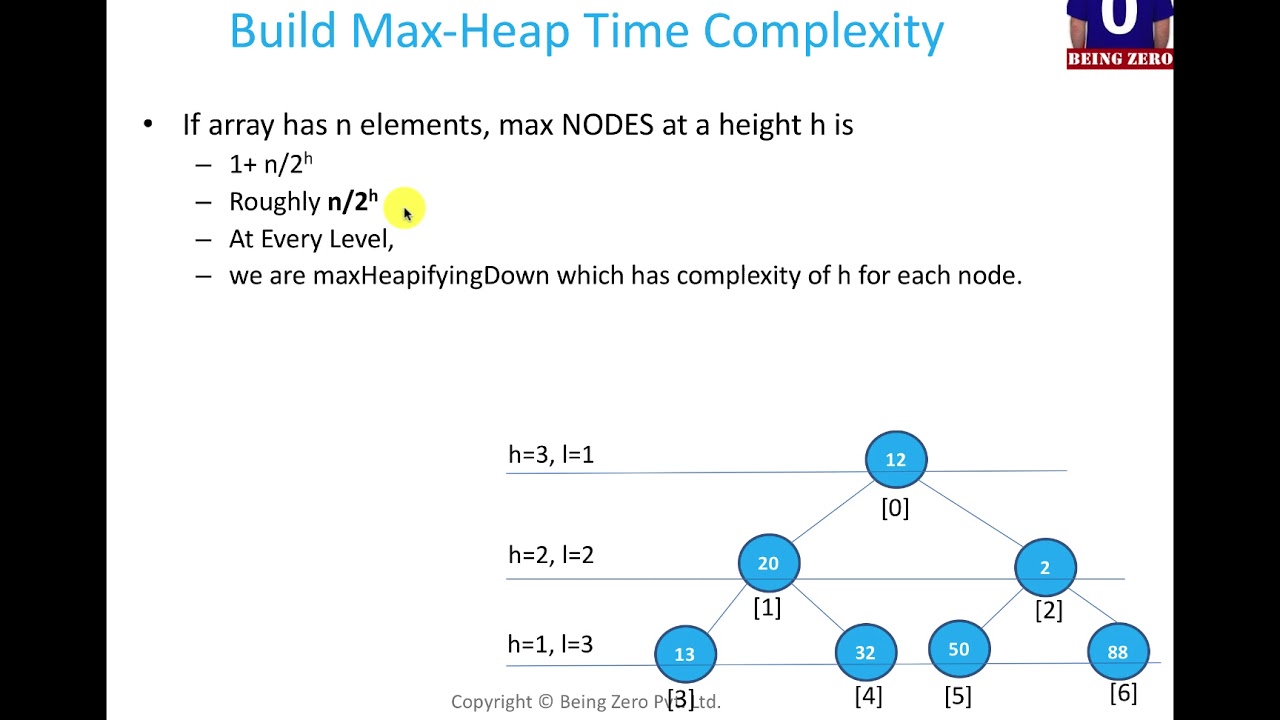

Analyzing Maximum Time Complexity

Maximum time complexity lets us assess the worst-case scenario, so we know how slowly an algorithm can run on its least favorable inputs. It provides an upper bound on how the required time grows, which makes performance predictable as inputs get larger.

By analyzing maximum time complexity, developers can optimize their algorithms so that they operate efficiently even for the most extreme inputs. Designing algorithms with less-than-optimal complexity can lead to performance bottlenecks as input sizes grow.

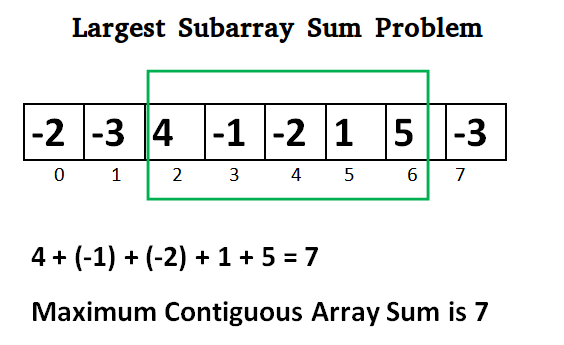

For example, consider an algorithm to search for an element in an unsorted array. If the maximum time complexity is O(n), the number of operations grows at most in proportion to n; a simple linear search, for instance, makes at most n comparisons. Thus, the algorithm’s efficiency on large inputs is directly dependent on the maximum time complexity.

Common Time Complexities

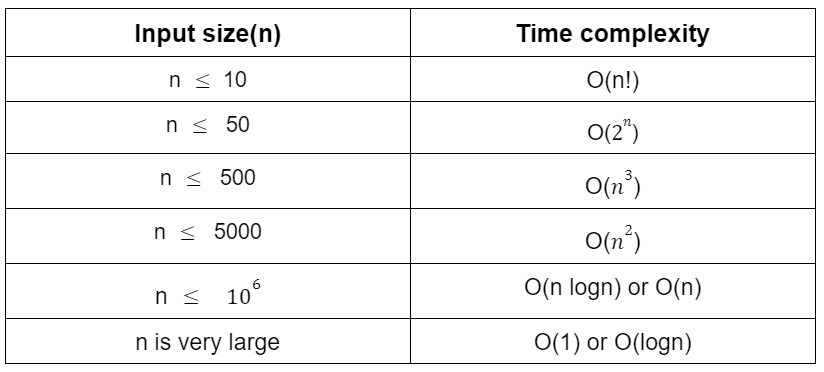

Various time complexities exist, representing different algorithms and their efficiency in the worst-case scenario. Let’s discuss some of the common time complexities and their implications:

1. O(1) – Constant Time Complexity: This represents an algorithm that always takes the same amount of time regardless of the input size. It is highly efficient and desirable in many scenarios.

2. O(log n) – Logarithmic Time Complexity: Algorithms with logarithmic time complexity grow slowly as the input size increases. They are commonly observed in divide and conquer techniques, such as binary search.

3. O(n) – Linear Time Complexity: This represents algorithms that have a linear relationship with the input size. The time required grows proportionally to the input.

4. O(n^2) – Quadratic Time Complexity: Algorithms with quadratic time complexity have a direct relationship with the square of the input size. It indicates that the time required increases rapidly as the input size grows.

5. O(2^n) – Exponential Time Complexity: Algorithms with exponential time complexity become increasingly inefficient as the input size grows. They are not suitable for large inputs and can often be optimized.
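To see why exponential growth is a problem and how it can sometimes be optimized away, consider the classic recursive Fibonacci function. This is a sketch, with `fib_naive` and `fib_cached` as illustrative names; the second version uses `functools.lru_cache` so that each value is computed only once.

```python
from functools import lru_cache


def fib_naive(n, counter):
    """Recompute overlapping subproblems: the call count grows exponentially."""
    counter[0] += 1
    if n < 2:
        return n
    return fib_naive(n - 1, counter) + fib_naive(n - 2, counter)


@lru_cache(maxsize=None)
def fib_cached(n):
    """Cache each result: only O(n) distinct calls are ever computed."""
    if n < 2:
        return n
    return fib_cached(n - 1) + fib_cached(n - 2)


calls = [0]
print(fib_naive(10, calls), calls[0])  # 55 177
print(fib_cached(10))                  # 55
```

Computing the tenth Fibonacci number already takes 177 calls in the naive version, and each increase of n by one multiplies that count by roughly 1.6.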

Frequently Asked Questions

Q: Can an algorithm have multiple time complexities?

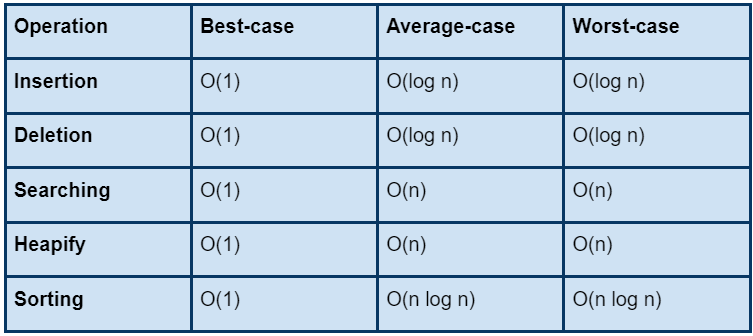

A: Yes, an algorithm can have different time complexities depending on the situation. It is common to analyze the best-case, average-case, and worst-case time complexities.

Q: Is the maximum time complexity the only factor to consider when analyzing algorithm efficiency?

A: No, while maximum time complexity is crucial, it is not the sole factor. Other factors, such as space complexity, average-case time complexity, and practical limitations, should also be considered.

Q: Does a lower time complexity always mean a more efficient algorithm?

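A: Not always. A lower time complexity means the algorithm scales better as the input grows, but Big O ignores constant factors, so for small inputs an algorithm with a higher complexity can be faster in practice. Practical considerations and specific requirements may also influence the choice of algorithm.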

Q: Are algorithms with exponential time complexity considered unusable?

A: Algorithms with exponential time complexity are highly inefficient, especially for large input sizes. They are often avoided unless there is a specific need or if there are significant optimizations available.

Q: Are there notations other than Big O for describing time complexity?

A: Yes. Big O gives an upper bound on an algorithm’s growth rate. Big Omega gives a lower bound, and Big Theta gives a tight bound, meaning the growth rate is bounded both above and below by the same function.

In conclusion, maximum time complexity is a crucial metric for analyzing the efficiency and scalability of algorithms. By understanding the worst-case time complexity, developers can make informed decisions about which algorithm to use based on their requirements and limitations. It is important to consider multiple factors, such as space complexity and practical considerations, in addition to the maximum time complexity when assessing algorithm efficiency.

What Is The Time Complexity Of Python?

Python is a versatile and popular programming language known for its simplicity and readability. One important aspect of programming languages is the efficiency of their algorithms. Time complexity is a measure of the time taken by an algorithm to run as a function of the input size. In this article, we will delve into the time complexity of Python and explore how it affects algorithm performance.

Understanding Time Complexity

Time complexity is often expressed using big O notation, which states the upper bound of the running time of an algorithm in terms of the input size. It provides an estimate of how an algorithm’s execution time grows with increasing input. In big O notation, the constant factors and lower-order terms are disregarded, focusing only on the dominant term that influences the growth rate.

Python’s Time Complexity

Python comes with built-in data structures and functions that offer various trade-offs between time and space efficiency. Understanding the time complexity of these data structures and functions is crucial for choosing the right approach to solving a problem efficiently.

1. Lists:

Python lists are dynamic arrays that can accommodate elements of different types. The time complexity for common list operations is as follows:

– Indexing and assigning a value to a specific index: O(1)

– Appending an element to the end of the list: O(1) amortized, as it occasionally requires resizing the underlying array

– Extending a list with another list: O(k), where k is the length of the second list

– Inserting or deleting an element anywhere except the end: O(n), where n is the length of the list

2. Tuples:

Tuples are stored as fixed-size arrays. They are immutable, meaning their elements cannot be reassigned after creation. Indexing a tuple is O(1), as with lists, but there is no item assignment; building a modified tuple means creating a new one, which is O(n).

3. Sets:

Python sets are unordered collections of unique elements. The time complexity for common set operations is as follows:

– Adding an element: O(1) on average

– Removing an element: O(1) on average

– Checking for membership: O(1) on average

– Union of sets s and t: O(len(s) + len(t))

– Intersection of s and t: O(min(len(s), len(t))) on average

– Difference s - t and the subset test s <= t: O(len(s)) on average

4. Dictionaries:

Dictionaries in Python are implemented as hash tables, providing efficient key-value mapping. The time complexity for common dictionary operations is as follows:

– Accessing or assigning a value to a specific key: O(1) on average, but O(n) in the worst case scenario

– Adding or deleting a key-value pair: O(1) on average, but O(n) in the worst case scenario

5. Sorting:

Python offers the built-in `sorted()` function and the `sort()` method for lists. In CPython both use the same adaptive merge sort (Timsort), which runs in O(n log n) in the average and worst cases and in O(n) when the input is already sorted. Algorithms such as counting sort can reach linear time under specific conditions, but they are not part of the built-in sort and must be implemented separately.
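CPython's built-in sort is adaptive, meaning it does less work on input that is already ordered. A rough way to observe this is to count comparisons instead of measuring time. In the sketch below, `Counted` and `count_sort_comparisons` are hypothetical helpers, and the exact counts depend on the interpreter version, so they are printed rather than stated.

```python
import random


class Counted:
    """Wrapper that counts how many times sorting compares two values."""

    comparisons = 0

    def __init__(self, value):
        self.value = value

    def __lt__(self, other):
        Counted.comparisons += 1
        return self.value < other.value


def count_sort_comparisons(values):
    """Sort wrapped copies of the values and return the comparison count."""
    Counted.comparisons = 0
    sorted(Counted(v) for v in values)
    return Counted.comparisons


n = 1000
ordered = list(range(n))
shuffled = ordered[:]
random.Random(0).shuffle(shuffled)  # fixed seed for repeatable runs

print(count_sort_comparisons(ordered), count_sort_comparisons(shuffled))
```

The already-sorted list should need on the order of n comparisons, while the shuffled list needs on the order of n log n.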

Frequently Asked Questions (FAQs)

Q1. Why is time complexity important in Python?

A1. Time complexity gives an insight into how algorithms and data structures perform on different inputs. Understanding time complexity helps in selecting efficient algorithms and optimizing code for better performance.

Q2. How does time complexity affect scalability?

A2. Time complexity provides information about how an algorithm’s performance scales with increasing input size. Lower time complexity, such as O(log n) or O(n), indicates better scalability for larger inputs than higher time complexities like O(n^2) or O(2^n).

Q3. Can time complexity be improved for certain operations?

A3. Although time complexity is inherent to a particular algorithm, optimizing code and switching to a more efficient algorithm or data structure can improve execution time. For example, using a dictionary instead of a list turns an O(n) lookup into an O(1) lookup on average.

Q4. Is Python a good choice for performance-critical applications?

A4. Python, being an interpreted language, may not offer the same low-level performance as languages like C or C++. However, Python’s extensive library ecosystem provides ways to leverage compiled code or optimize critical sections using tools like NumPy or Cython, making it suitable for many performance-critical tasks.

Q5. Which Python data structure should I choose for my use case?

A5. The choice of data structure depends on the specific requirements of your problem. Understanding the time complexity can guide you in selecting the appropriate data structure to achieve the desired performance characteristics.

In conclusion, understanding the time complexity of Python’s built-in data structures and functions can greatly assist in designing efficient algorithms. By selecting the appropriate data structures and algorithms, developers can optimize their Python code and achieve better performance for a wide range of applications.


Max Python Complexity

Introduction

Python is a high-level programming language known for its simplicity and ease of use. It offers a range of powerful libraries and frameworks that make it popular among developers. Here, “max Python complexity” means the maximum, or worst-case, complexity of Python code. In this article, we will explore this concept, its significance, and how it affects the performance of your code.

Understanding Max Python Complexity

Max Python complexity refers to the measure of computational resources required, in the worst case, to execute an algorithm or solve a problem in Python. It helps us determine the efficiency and scalability of our code. Different algorithms have different complexity levels, and understanding them is crucial for optimizing code performance.

Complexity is generally expressed using Big O notation, which provides an upper bound on growth rates relative to the input size. It focuses on the worst-case scenarios and enables us to compare algorithms based on their efficiency. The most commonly used notations are O(1), O(log n), O(n), O(n log n), O(n^2), and so on.

Types of Complexity

There are two main types of complexity:

1. Time Complexity: Time complexity measures the amount of time required for code execution. It estimates the number of operations an algorithm performs relative to the input size. The lower the time complexity, the better the algorithm scales. For example, an algorithm with O(1) complexity will execute in constant time, regardless of the input size. On the other hand, an algorithm with O(n^2) complexity will take about four times as long each time the input size doubles.

2. Space Complexity: Space complexity measures the amount of memory required for code execution. It estimates the additional memory needed to store variables, data structures, and recursive function calls. Similar to time complexity, lower space complexity generally indicates better performance. Algorithms with O(1) space complexity use a constant amount of memory, regardless of the input size, whereas algorithms with O(n) space complexity require memory proportional to the input size.

Significance of Understanding Max Python Complexity

Understanding Max Python complexity is essential because it allows developers to determine whether an algorithm or solution is feasible and efficient for a given problem. By analyzing complexities, developers can make informed decisions about which algorithms to use, predict the performance of their code, and optimize it as necessary.

Furthermore, with an understanding of complexity, developers can anticipate how code performance may degrade as the input size increases. This knowledge is particularly important for applications that handle large datasets or undertake time-sensitive tasks.

In addition, complexity analysis helps identify bottlenecks and areas for improvement in your code. It allows you to spot inefficient algorithms or data structures that may be slowing down your program. By optimizing these parts, you can significantly enhance the overall performance of your application.

Frequently Asked Questions (FAQs)

Q1. Why is complexity analysis important in Python programming?

Complexity analysis is important in Python programming because it helps developers evaluate the efficiency of algorithms and predict the performance of their code. By understanding the complexity of different algorithms, developers can make informed decisions to optimize their programs and improve code performance.

Q2. How can I determine the complexity of my Python code?

To determine the complexity of your Python code, you need to analyze the algorithm or solution you have implemented. Identify loops, recursive calls, and data structure operations, and count the number of times they are executed relative to the input size. With this information, you can then determine the time and space complexity of your code.
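One way to check such an analysis empirically is a doubling experiment: count the operations for input sizes n and 2n and look at the ratio. A ratio near 2 suggests linear growth, and a ratio near 4 suggests quadratic growth. The sketch below uses hypothetical helper names and counts steps rather than seconds, so the result does not depend on the machine.

```python
def count_single_pass(n):
    """One loop over the input: n steps."""
    steps = 0
    for _ in range(n):
        steps += 1
    return steps


def count_pair_checks(n):
    """Nested loops over all unordered pairs: n * (n - 1) / 2 steps."""
    steps = 0
    for i in range(n):
        for _ in range(i + 1, n):
            steps += 1
    return steps


def growth_ratio(step_counter, n):
    """Return how much the step count grows when the input size doubles."""
    return step_counter(2 * n) / step_counter(n)


print(growth_ratio(count_single_pass, 200))  # 2.0
print(growth_ratio(count_pair_checks, 200))  # close to 4
```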

Q3. Can I change the complexity of my code?

In many cases, the complexity of an algorithm is inherent and cannot be easily changed. However, by selecting different algorithms or optimizing existing ones, you can improve the performance of your code. It is important to note that certain problems may have inherent complexities that cannot be improved beyond a certain point.

Q4. Is higher complexity always bad?

Higher complexity is not always bad, but it usually indicates that an algorithm may take longer to execute or require more memory. However, the significance of complexity also depends on the problem at hand. For smaller input sizes or problems that do not require real-time processing, higher complexity may not be a concern. Ultimately, selecting an appropriate algorithm with acceptable complexity levels is vital for efficient code execution.

Conclusion

In conclusion, understanding Max Python complexity is crucial for ensuring efficient and scalable code performance. By analyzing the time and space complexity of your algorithms, you can make informed decisions about their suitability for a particular problem. Complexity analysis allows you to optimize your code, predict its performance, and identify potential bottlenecks. By mastering this concept, you can create robust Python applications that handle large datasets and time-sensitive operations with ease.

Max Function Time Complexity In Python

In Python, the max() function is a built-in function that allows us to find the largest item in an iterable or the maximum of two or more arguments. It is a widely-used function that can be found in various programming contexts. However, it is crucial to understand the time complexity of this function to avoid potential performance issues and optimize our code when necessary. In this article, we will delve into the time complexity of the max() function in Python, providing a comprehensive overview of its behavior and performance characteristics.

Time Complexity of the Max Function

The time complexity of an algorithm refers to the amount of time it takes to run as a function of the input size. It allows us to analyze the efficiency of an algorithm and provides valuable insights into how the algorithm’s performance scales with an increasing input size.

When it comes to the max() function in Python, the time complexity depends on the type of input provided. We will discuss the time complexities of various scenarios separately.

1. Iterables of Comparable Elements

When the max() function is called on an iterable (such as a list or tuple) of comparable elements, the time complexity is O(n), where n represents the number of elements in the iterable. This implies that the execution time will linearly increase as the input size grows. In this case, the function iterates through each element of the iterable to find the maximum.

It’s important to note that the time complexity remains the same regardless of the values in the iterable. Whether the elements are integers, floats, or custom objects, the time complexity remains O(n).
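The linear scan described above can be sketched in pure Python. This is not the actual implementation of `max()`, which is written in C inside the interpreter; `manual_max` is a hypothetical stand-in that also reports how many comparisons it made.

```python
def manual_max(iterable):
    """Return (largest item, number of comparisons) using one pass."""
    iterator = iter(iterable)
    try:
        best = next(iterator)
    except StopIteration:
        raise ValueError("manual_max() arg is an empty iterable") from None

    comparisons = 0
    for item in iterator:
        comparisons += 1
        if item > best:
            best = item
    return best, comparisons


print(manual_max([3, 9, 2, 7]))  # (9, 3)
```

For n elements the loop makes n - 1 comparisons, whatever the values are, which is where the O(n) bound comes from.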

2. Multiple Arguments

When the max() function is called with multiple arguments, its time complexity is O(n), where n is the number of arguments passed. Similar to iterables, the function iterates through all the arguments to determine the largest one.

It’s worth mentioning that the constant factor involved in processing multiple arguments might be higher compared to processing a single iterable due to argument unpacking. However, the underlying time complexity remains O(n).

3. Key Function

The max() function also allows us to specify a key function that determines how the elements are compared. This additional function is applied to each element and is often used to compare elements based on a specific attribute or transform them before comparison.

If a key function is provided, the time complexity becomes O(n * k), where n represents the number of elements in the iterable, and k denotes the time complexity of the key function. It’s essential to consider the time complexity of the key function itself when dealing with large datasets or complex transformations.
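The key function's contribution can be observed by wrapping it in a counter. In the sketch below, `counting_key` is a hypothetical helper; in CPython, `max()` calls the key once per element, so the cost of the key is paid n times.

```python
def counting_key(key):
    """Wrap a key function and record how many times it is called."""
    calls = [0]

    def wrapped(item):
        calls[0] += 1
        return key(item)

    return wrapped, calls


words = ["pear", "fig", "banana", "kiwi"]
key, calls = counting_key(len)
longest = max(words, key=key)
print(longest, calls[0])  # banana 4
```

If the key were expensive, for example one that parses each string, precomputing the keys once and reusing them across several calls would avoid repeating that work.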

FAQs

Q1. Can the time complexity of the max() function be improved?

A1. The time complexity of the max() function is inherently dependent on the number of elements it needs to compare. Since it requires examining each element at least once, it is difficult to improve the worst-case time complexity.

Q2. Can the time complexity change depending on the type of elements in the iterable?

A2. No, the max() function always performs O(n) comparisons regardless of the type of elements in the iterable. The cost of each comparison can depend on the type, however: comparing long strings or tuples takes longer than comparing small integers.

Q3. Does the size of the iterable affect the time complexity?

A3. Yes, the time complexity is directly proportional to the number of elements in the iterable. As the size of the iterable increases, the execution time of the max() function also increases linearly.

Q4. What can be done to optimize the max() function’s performance?

A4. If there is a need to optimize the performance of the max() function, it is advisable to analyze the usage context and consider potential optimizations at a broader level. For instance, maintaining the maximum value manually during the data collection avoids a separate pass over the data. Sorting with sorted() only to take the largest element is not an improvement, since sorting costs O(n log n) compared with O(n) for max().
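Maintaining the maximum during data collection might look like the sketch below. `RunningMax` is a hypothetical class written for this example: each `add` costs O(1), and reading the current maximum is also O(1).

```python
class RunningMax:
    """Track the largest value seen so far without storing all values."""

    def __init__(self):
        self._best = None
        self._has_value = False

    def add(self, value):
        """Record a new value in O(1) time."""
        if not self._has_value or value > self._best:
            self._best = value
            self._has_value = True

    @property
    def value(self):
        """Return the current maximum, or raise if nothing was added."""
        if not self._has_value:
            raise ValueError("no values added yet")
        return self._best


tracker = RunningMax()
for reading in [4, 11, 7, 2]:
    tracker.add(reading)
print(tracker.value)  # 11
```

This only helps when values are never removed; if the current maximum can be deleted, a different structure such as a heap is needed.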

In conclusion, the time complexity of the max() function in Python is O(n) when it is called on an iterable or when multiple arguments are provided. Adding a key function can increase the time complexity to O(n * k), where k represents the time complexity of the key function itself. By understanding these complexities, developers can optimize their code and make informed decisions while using the max() function in their applications efficiently.
