Python Download Url Image

Downloading images from a URL is a common task for web developers, data analysts, and anyone else who works with images programmatically. Python's libraries make it straightforward to fetch an image and process it afterwards. This article walks through downloading images from a URL with Python, along with related functionality and best practices.

Installing the necessary libraries

Before we begin downloading images, we need to install two essential libraries: requests and Pillow. The requests library allows us to send HTTP requests to a URL and retrieve the response, while Pillow provides a rich set of functionalities for image processing and manipulation.

To install the requests library, open your command prompt or terminal and run the following command:

```
pip install requests
```

Similarly, to install the Pillow library, run the following command:

```
pip install Pillow
```

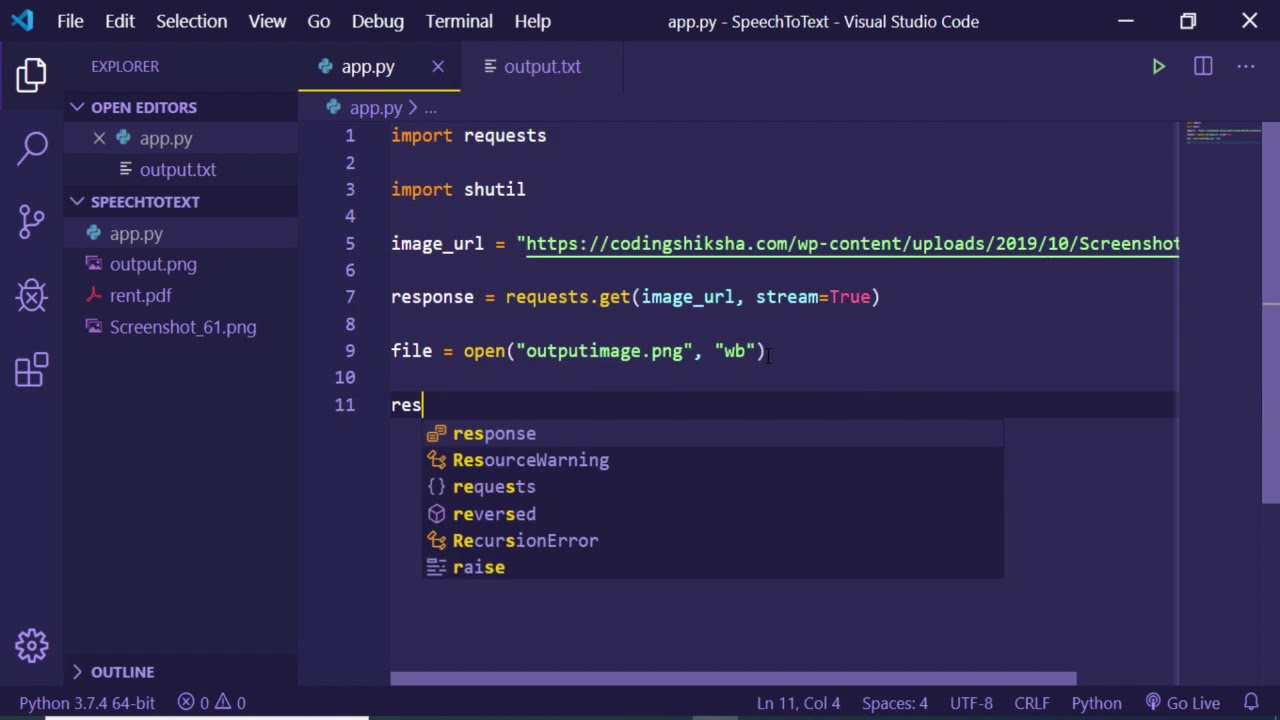

Downloading and saving the image

Once we have installed the required libraries, we can proceed with downloading and saving the image. The process involves sending a GET request to the image URL, extracting the image data from the response, specifying the storage location and file name, and finally, saving the image to the specified location.

To send a GET request to the image URL, we can use the `get()` function provided by the requests library. This function takes the URL as an input and returns a response object.

```python
import requests

url = "https://example.com/image.jpg"
response = requests.get(url)
```

After obtaining the response, we can extract the image data using the `content` attribute.

```python
image_data = response.content
```

Next, we need to specify the storage location and file name for the downloaded image. We can use a combination of the `os` and `os.path` modules to handle file path operations.

```python
import os

storage_location = "path/to/save/images"
filename = "image.jpg"
image_path = os.path.join(storage_location, filename)
```

Finally, we can save the image to the specified location using Python's built-in `open()` function in binary write mode. Pillow is not needed for this step.

```python
with open(image_path, "wb") as f:
    f.write(image_data)
```
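The `open()` call above raises `FileNotFoundError` when the storage directory does not exist yet. A minimal sketch of one way around that, assuming a hypothetical helper named `save_image_bytes` (the name and signature are illustrative, not part of the original walk-through):

```python
import os


def save_image_bytes(image_data, storage_location, filename):
    """Write raw image bytes to storage_location/filename and return the path.

    Hypothetical helper for illustration; name and signature are assumptions.
    """
    # Create the target directory (and any missing parents) first.
    os.makedirs(storage_location, exist_ok=True)
    image_path = os.path.join(storage_location, filename)
    with open(image_path, "wb") as f:
        f.write(image_data)
    return image_path
```

With the variables from the steps above, this would be called as `save_image_bytes(response.content, storage_location, filename)`.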

Handling exceptions and errors

While downloading images, we need to handle various exceptions and errors that may occur during the process. This ensures that our code is robust and can handle unexpected situations gracefully.

Firstly, we should check that the URL is well formed before sending the GET request. This can be done using regular expressions or with the `urlparse()` function from Python's `urllib.parse` module.

```python
from urllib.parse import urlparse

def is_valid_url(url):
    result = urlparse(url)
    # A usable URL needs both a scheme (e.g. https) and a host
    return all([result.scheme, result.netloc])
```
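A check that only looks for the presence of a scheme and a host also accepts URLs such as `ftp://host/file`. If only web URLs are wanted, the scheme can be restricted as well. The following is a sketch under that assumption; `is_http_url` and `ALLOWED_SCHEMES` are hypothetical names introduced here:

```python
from urllib.parse import urlparse

# Assumption: only http and https downloads are wanted.
ALLOWED_SCHEMES = {"http", "https"}


def is_http_url(url):
    """Return True if url has an http(s) scheme and a host part."""
    try:
        result = urlparse(url)
    except ValueError:
        # urlparse raises ValueError for some malformed inputs,
        # for example an unterminated IPv6 host such as "http://[::1".
        return False
    return result.scheme in ALLOWED_SCHEMES and bool(result.netloc)
```

This is still only a syntactic check; it says nothing about whether the host exists or the resource is an image.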

Additionally, we should handle connection errors that may occur if the server is unreachable or the internet connection is disrupted. We can use the `try-except` block to catch and handle these errors.

```python
try:
    response = requests.get(url)
    # Rest of the download and save code goes here
except requests.exceptions.ConnectionError:
    print("Error: Failed to establish a connection.")
```

Furthermore, we should handle request errors that may occur if the image URL is invalid or the server returns a non-successful response status code.

```python
try:
    response = requests.get(url)
    response.raise_for_status()
    # Rest of the download and save code goes here
except requests.exceptions.HTTPError as e:
    print(f"Error: {e}")
```

Lastly, we should handle errors that may occur while saving the image. These errors can be related to file permissions, disk space, or other issues.

```python
try:
    with open(image_path, "wb") as f:
        f.write(image_data)
except IOError:
    print("Error: Failed to save the image.")
```

Validating the downloaded image

To ensure the integrity and usability of the downloaded images, we can perform some validations on them. Firstly, we can verify the image file extension to ensure that we are downloading the correct image type.

```python
from pathlib import Path

def is_valid_extension(filename):
    extension = Path(filename).suffix.lower()
    supported_extensions = [".jpg", ".jpeg", ".png", ".gif"]
    return extension in supported_extensions
```
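A file extension is only a name and says nothing about the bytes that were actually downloaded. A complementary check is to compare the first bytes of the data with the well-known signatures of the formats listed above. This is a minimal sketch; `SIGNATURES` and `sniff_image_type` are hypothetical names, and only JPEG, PNG, and GIF are covered:

```python
# Leading bytes ("magic numbers") of the formats handled in this article.
SIGNATURES = {
    b"\xff\xd8\xff": "jpeg",
    b"\x89PNG\r\n\x1a\n": "png",
    b"GIF87a": "gif",
    b"GIF89a": "gif",
}


def sniff_image_type(data):
    """Return "jpeg", "png" or "gif" based on the leading bytes, else None."""
    for signature, kind in SIGNATURES.items():
        if data.startswith(signature):
            return kind
    return None
```

For example, `sniff_image_type(response.content)` returns `None` when a server answers with an HTML error page saved under a `.jpg` name.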

Secondly, we can verify the image content type by checking the `Content-Type` header of the response.

```python
def get_content_type(response):
    return response.headers.get("Content-Type")

def is_valid_content_type(content_type):
    supported_types = ["image/jpeg", "image/png", "image/gif"]
    return content_type in supported_types
```
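An exact string comparison misses headers that carry parameters or different casing, such as `Image/PNG; charset=binary`. A sketch of a more tolerant comparison follows; `normalize_content_type` and `is_supported_image_type` are hypothetical helpers, not part of requests:

```python
SUPPORTED_TYPES = {"image/jpeg", "image/png", "image/gif"}


def normalize_content_type(header_value):
    """Reduce a Content-Type header value to its bare, lowercase media type."""
    if not header_value:
        return ""
    # Drop any parameters after ';' and normalise whitespace and case.
    return header_value.split(";", 1)[0].strip().lower()


def is_supported_image_type(header_value):
    return normalize_content_type(header_value) in SUPPORTED_TYPES
```

The header is supplied by the server, so it is a hint rather than proof of what the body contains.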

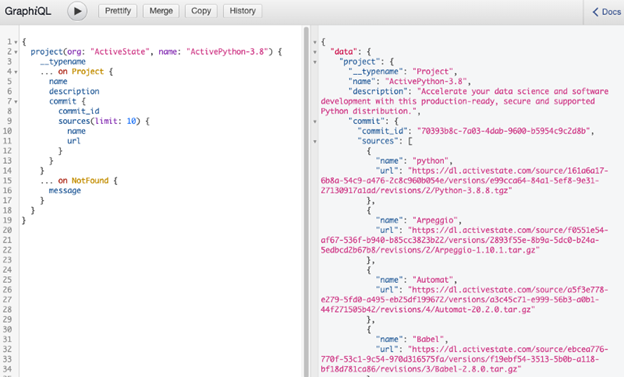

Resizing and optimizing the downloaded image

Often, we need to resize or optimize the downloaded images to meet specific requirements. The Pillow library provides several methods to resize and manipulate images.

To resize an image, we can use the `resize()` method of the `Image` class in the Pillow library. Note that `resize()` stretches the image to exactly the given size and does not preserve the aspect ratio.

```python
from PIL import Image

image = Image.open(image_path)
resized_image = image.resize((800, 600))
```

After resizing the image, we may also need to compress it to reduce its file size. The `save()` method of the `Image` class accepts a `quality` parameter, which controls compression for lossy formats such as JPEG.

```python
resized_image.save(image_path, optimize=True, quality=80)
```
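When the proportions of the image should be kept, the target size can be computed first and then passed to `resize()`. The helper below is a sketch in plain Python; `fit_within` is a hypothetical name, and it deliberately never scales an image up:

```python
def fit_within(size, max_size):
    """Scale (width, height) down to fit inside max_size, keeping the ratio."""
    width, height = size
    max_width, max_height = max_size
    # Use the tighter of the two constraints; cap at 1.0 so we never enlarge.
    scale = min(max_width / width, max_height / height, 1.0)
    return max(1, round(width * scale)), max(1, round(height * scale))
```

It could be used as `image.resize(fit_within(image.size, (800, 600)))`. Pillow's `Image.thumbnail()` method offers similar behaviour, modifying the image in place.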

Adding progress and error handling

To provide a better user experience and handle potential errors during image downloads, we can implement progress tracking and retry mechanisms.

To display the download progress, we can utilize the `tqdm` library, which provides a progress bar for loops and iterators. Passing `stream=True` makes requests fetch the body in chunks instead of loading it all at once.

```python
from tqdm import tqdm

response = requests.get(url, stream=True)
# Content-Length may be missing; with total=None tqdm shows a counter without a percentage
total_size = int(response.headers.get("content-length", 0)) or None
chunk_size = 1024

with open(image_path, "wb") as f, tqdm(total=total_size, unit="B", unit_scale=True) as progress_bar:
    for chunk in response.iter_content(chunk_size=chunk_size):
        if chunk:
            f.write(chunk)
            progress_bar.update(len(chunk))
```
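Writing in chunks also makes it possible to stop a download that turns out to be larger than expected. The sketch below works on any iterable of byte chunks, so it can be fed `response.iter_content(chunk_size=1024)`; the names `write_chunks`, `DownloadTooLarge`, and the 10 MiB default are assumptions made for illustration:

```python
import os

MAX_BYTES = 10 * 1024 * 1024  # assumed limit of 10 MiB; adjust as needed


class DownloadTooLarge(Exception):
    """Raised when the body exceeds the configured size limit."""


def write_chunks(chunks, path, max_bytes=MAX_BYTES):
    """Write an iterable of byte chunks to path and return the bytes written.

    Raises DownloadTooLarge and removes the partial file if more than
    max_bytes are received.
    """
    written = 0
    try:
        with open(path, "wb") as f:
            for chunk in chunks:
                if not chunk:
                    continue  # skip empty chunks
                written += len(chunk)
                if written > max_bytes:
                    raise DownloadTooLarge(f"more than {max_bytes} bytes received")
                f.write(chunk)
    except DownloadTooLarge:
        os.remove(path)  # do not leave a truncated image behind
        raise
    return written
```

Counting the bytes actually received is more reliable than trusting the `Content-Length` header, which a server may omit or misreport.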

For failed downloads, we can implement a retry mechanism using a `while` loop and a maximum retry count.

```python
max_retries = 3
retry_count = 0
success = False

while not success and retry_count < max_retries:
    try:
        # Download and save code goes here
        success = True
    except Exception:
        retry_count += 1
```

Handling image metadata and attributes

In addition to downloading and saving images, we may also need to extract metadata and manage attributes associated with the images. The Pillow library provides features to extract basic image metadata, such as the image size and color mode.

```python
image = Image.open(image_path)
width, height = image.size
color_mode = image.mode
```

To retrieve and manage image attributes, we can utilize the `exifread` library, which allows us to read EXIF data from image files.

```python
import exifread

with open(image_path, "rb") as f:
    tags = exifread.process_file(f)

for tag, value in tags.items():
    print(f"{tag}: {value}")
```

Automating the image download process

In many scenarios, we may need to download multiple images efficiently. We can achieve this by iterating through a list of image URLs and saving each image in a separate file.

```python
image_urls = ["https://example.com/image1.jpg", "https://example.com/image2.jpg"]

for i, url in enumerate(image_urls):
    filename = f"image{i+1}.jpg"
    image_path = os.path.join(storage_location, filename)
    response = requests.get(url)
    if response.status_code == 200:
        with open(image_path, "wb") as f:
            f.write(response.content)
```

Protecting against malicious images

When downloading images from URLs, it is crucial to implement security measures to prevent image-based attacks and ensure the safety of our systems and users.

To protect against malicious images, we can perform various checks, such as limiting the maximum image size, validating image file signatures, and sanitizing user input when specifying the image URL. Additionally, we should regularly update our libraries and follow security advisories to eliminate potential vulnerabilities.

FAQs:

1. How can I download an image from a URL using Python?

To download an image from a URL using Python, you can utilize the requests library to send a GET request to the image URL and save the response content to a file.

2. Can I resize the downloaded image using Python?

Yes, you can resize the downloaded image using the Pillow library in Python. The Pillow library provides a resize() method that allows you to specify the desired dimensions of the image.

3. How can I display download progress when downloading an image using Python?

To display the download progress when downloading an image using Python, you can use the tqdm library, which provides a progress bar for loops and iterators.

4. What security measures should I implement when downloading images from URLs?

When downloading images from URLs, it is important to implement security measures such as validating image file extensions, verifying image content types, limiting image size, and sanitizing user input. Additionally, it is crucial to regularly update libraries and follow security advisories to eliminate potential vulnerabilities.

5. Can I automate the image download process and save multiple images efficiently?

Yes, you can automate the image download process and save multiple images efficiently by iterating through a list of image URLs and saving each image in a separate file. This allows you to download multiple images without having to repeat the download and save code for each image.

In conclusion, Python provides a robust and versatile set of libraries for downloading and managing images from URLs. By following the best practices outlined in this article, you can effectively download images, handle exceptions and errors, validate the downloaded images, perform various image manipulations, add progress tracking and error handling, manage metadata and attributes, automate the download process, and protect against malicious images. These functionalities empower you to work with images efficiently and securely in your Python projects.


How To Download Image From Url In Python?

Python is a powerful programming language widely used for various purposes, including web development and image processing. When working with images in Python, you may sometimes need to download images from a given URL. In this article, we will explore different methods to accomplish this task efficiently.

Prerequisites:

To follow along with this tutorial, you should have a basic understanding of Python and its libraries, particularly the Requests library. Make sure to have Python and Pip (Python package installer) installed on your machine.

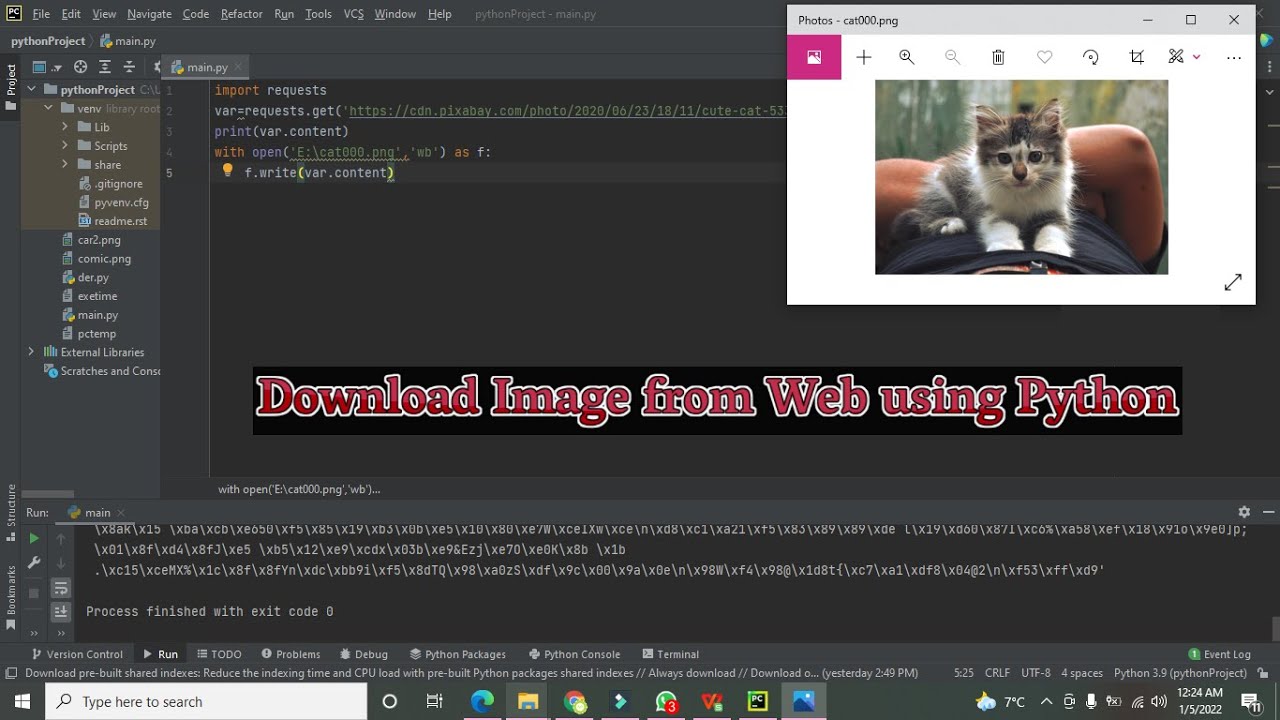

1. Using the Requests library:

The Requests library is widely used for handling HTTP requests in Python. To download an image from a URL, you can utilize the get() method provided by this library. Here is a step-by-step guide:

Step 1: Install the Requests library

Open your terminal or command prompt and enter the following command:

```
pip install requests
```

Step 2: Import the requests library

To use the requests library in your Python program, you need to import it first. Add the following line at the beginning of your Python script:

```python
import requests
```

Step 3: Download the image

Now you can proceed with downloading the image. Use the get() method provided by the requests library, passing the image URL as the parameter. Then, open the file in binary mode and write the image content into it.

```python
url = "https://example.com/image.jpg"
response = requests.get(url)

filename = "image.jpg"
with open(filename, "wb") as file:
    file.write(response.content)
```

Make sure to replace “https://example.com/image.jpg” with the actual URL and “image.jpg” with the desired filename.

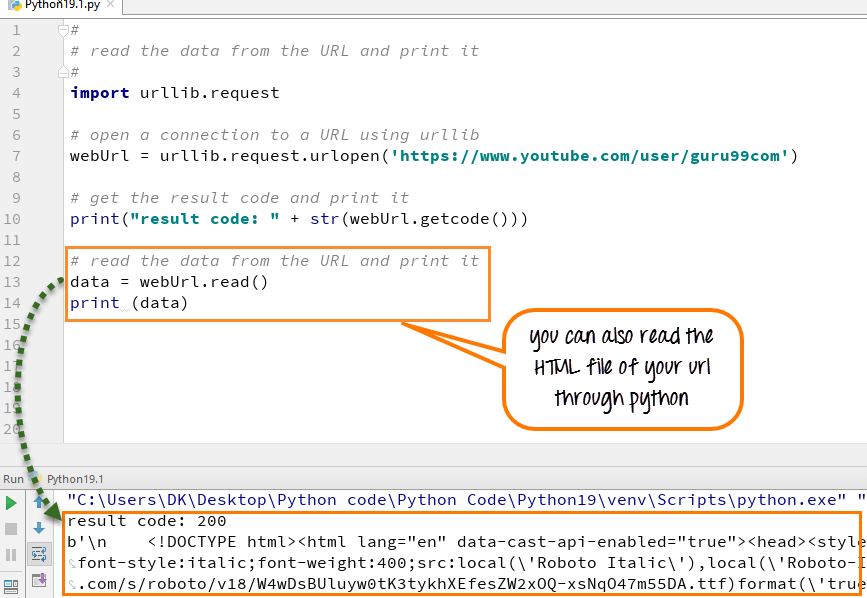

2. Using the urllib library:

Python also provides the urllib library, which offers functions for handling URLs. One of its advantages is that it comes pre-installed with Python, so you don’t need to install any external dependencies.

Step 1: Import the urllib library

To use the urllib library, add the following line at the beginning of your Python script:

```python
import urllib.request
```

Step 2: Download the image

Similar to the previous method, you can now proceed with downloading the image using the `urlretrieve()` function provided by the urllib library.

```python
url = "https://example.com/image.jpg"
filename = "image.jpg"

urllib.request.urlretrieve(url, filename)
```
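The Python documentation lists `urlretrieve()` under its legacy interface, which may be deprecated at some point. An alternative sketch with the same standard library uses `urlopen()` and streams the response to disk; `download_with_urlopen` and the 10-second timeout are illustrative assumptions:

```python
import shutil
import urllib.request


def download_with_urlopen(url, filename, timeout=10):
    """Fetch url and stream the body to filename; returns filename."""
    # urlopen returns a file-like response; copyfileobj copies it in blocks,
    # so the whole image never has to sit in memory at once.
    with urllib.request.urlopen(url, timeout=timeout) as response, \
            open(filename, "wb") as out_file:
        shutil.copyfileobj(response, out_file)
    return filename
```

It would be called the same way as above, for example `download_with_urlopen(url, filename)`.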

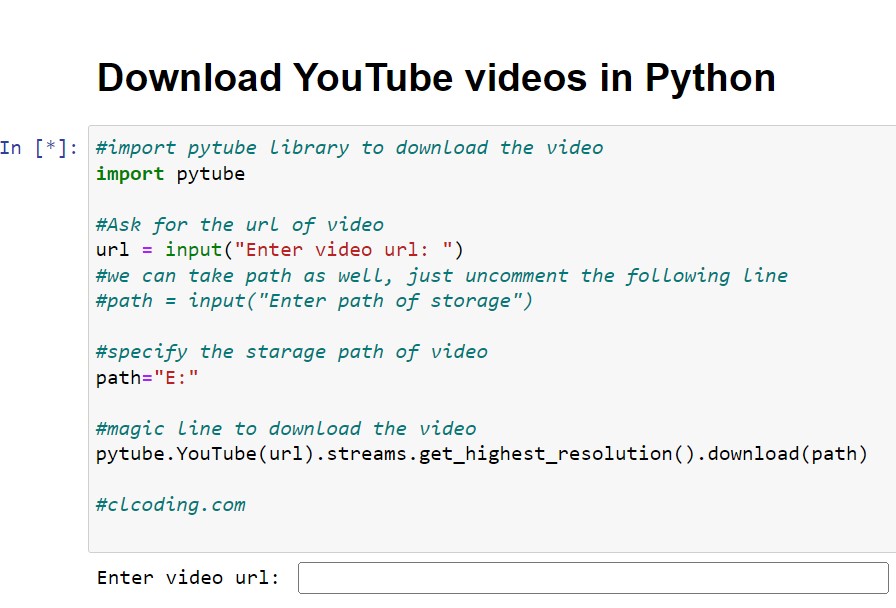

3. Using the wget library:

The wget library is another option for downloading images from URLs in Python. It simplifies the process by providing a single function called `download()`.

Step 1: Install the wget library

Open your terminal or command prompt and enter the following command:

```
pip install wget
```

Step 2: Import the wget library

To use the wget library in your Python program, you need to import it first. Add the following line at the beginning of your Python script:

```python
import wget
```

Step 3: Download the image

With the wget library, downloading an image becomes straightforward:

```python
url = "https://example.com/image.jpg"
filename = "image.jpg"

wget.download(url, filename)
```

Frequently Asked Questions (FAQs):

Q1: Can I download multiple images from URLs using these methods?

Yes, you can download multiple images by iterating over a list of URLs and performing the downloading process for each URL.

Q2: How can I handle errors while downloading an image?

While downloading images, it’s crucial to handle potential errors that may occur. You can use try-except blocks to catch exceptions and handle them accordingly.
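One way to handle a caught exception is to retry, since many download failures are temporary. The sketch below wraps any callable in a retry loop with a growing delay; `with_retries` and its parameters are hypothetical names, not part of requests, urllib, or wget:

```python
import time


def with_retries(action, max_retries=3, delay=1.0, exceptions=(Exception,)):
    """Call action() until it succeeds or max_retries attempts have failed."""
    for attempt in range(1, max_retries + 1):
        try:
            return action()
        except exceptions:
            if attempt == max_retries:
                raise  # out of attempts: let the caller see the last error
            # Wait longer after each failure: delay, 2*delay, 4*delay, ...
            time.sleep(delay * 2 ** (attempt - 1))
```

With requests, a call might look like `with_retries(lambda: requests.get(url, timeout=10), exceptions=(requests.exceptions.ConnectionError,))`. Restricting `exceptions` to transient errors avoids retrying failures that will never succeed, such as a malformed URL.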

Q3: Can I specify a download location for the images?

Yes, you can specify a download location by providing the full path of the desired directory along with the filename when opening the file in binary mode. For example:

```python
with open("path/to/directory/filename.jpg", "wb") as file:
    file.write(response.content)
```

Q4: Are there any limitations in terms of image size or URL type?

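The methods themselves impose no size limit, but calls such as `response.content` hold the entire image in memory, so very large files are better streamed to disk in chunks. You also need enough disk space to store the downloaded images. Regarding URL types, these methods work with HTTP and HTTPS URLs.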

Conclusion:

In this article, we explored different methods to download images from URLs using Python. We covered three methods: using the Requests library, the urllib library, and the wget library. Each method has its advantages, so choose the one that best suits your needs. Remember to handle errors and specify a download location, if necessary. Now you can confidently download images from URLs programmatically using Python!

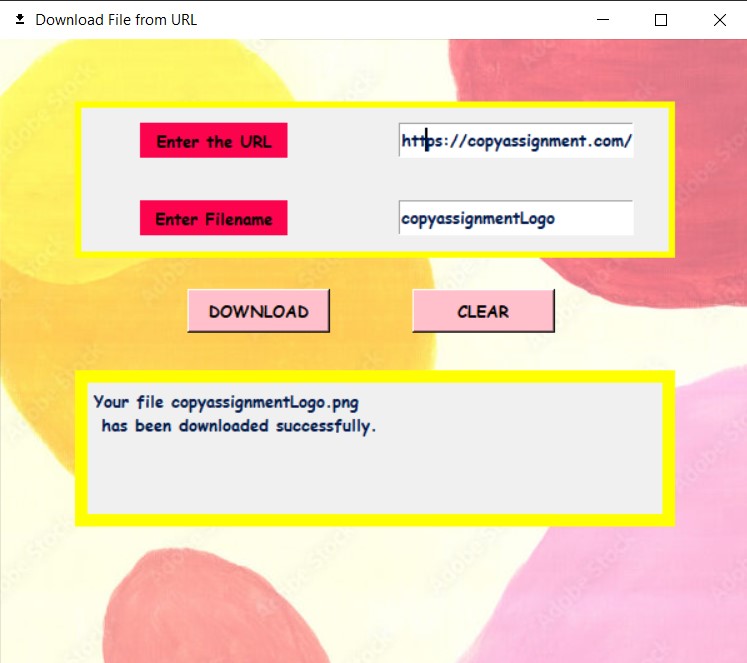

How To Download From Url In Python?

Python is a versatile and powerful programming language widely used for various applications, including web scraping, data mining, and automation tasks. One common task is downloading files from a URL. In this article, we will explore different ways to download files in Python from a given URL, including using built-in libraries and third-party modules.

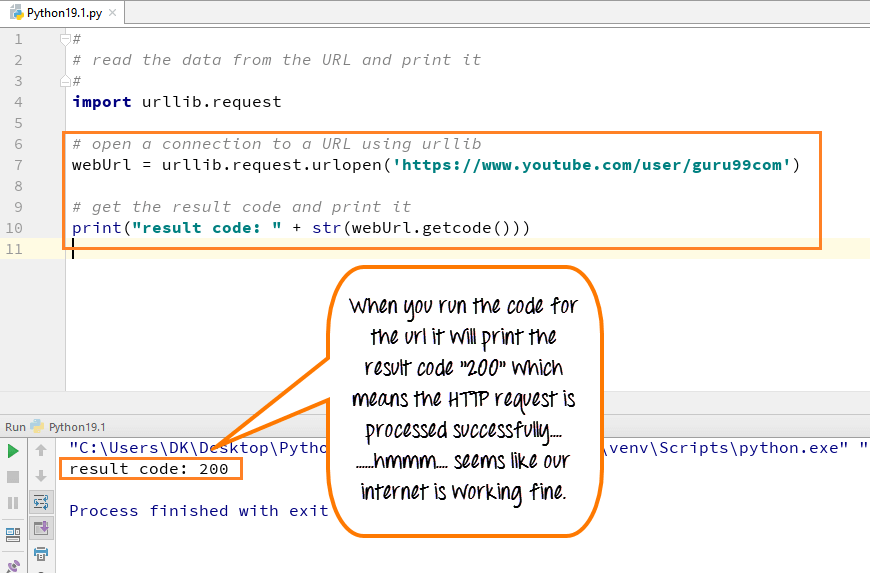

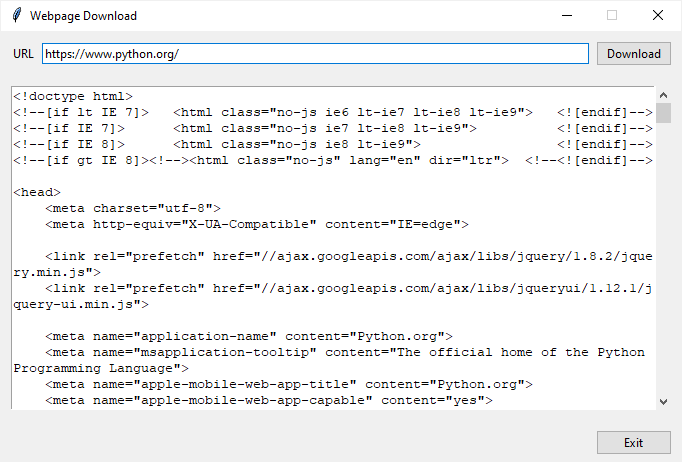

Using urllib to Download Files

Python’s built-in library urllib provides a convenient way to download files from a URL. It offers several modules, such as urllib.request, urllib.parse, and urllib.error, which can be used for different tasks. Let’s dive into the process of downloading files using urllib.request.

To begin with, you need to import the necessary module:

```python
import urllib.request
```

Next, you can use the urllib.request.urlretrieve() function to download the file. This function takes two arguments: the URL of the file to be downloaded and the local path where you want to save it. Here’s a simple example:

```python
url = 'https://example.com/somefile.txt'
path = '/path/to/save/somefile.txt'

urllib.request.urlretrieve(url, path)
```

This will download the file from the given URL and save it to the specified path. If you omit the path, `urlretrieve()` saves the download to a temporary file and returns that file's location as the first element of the tuple it returns.

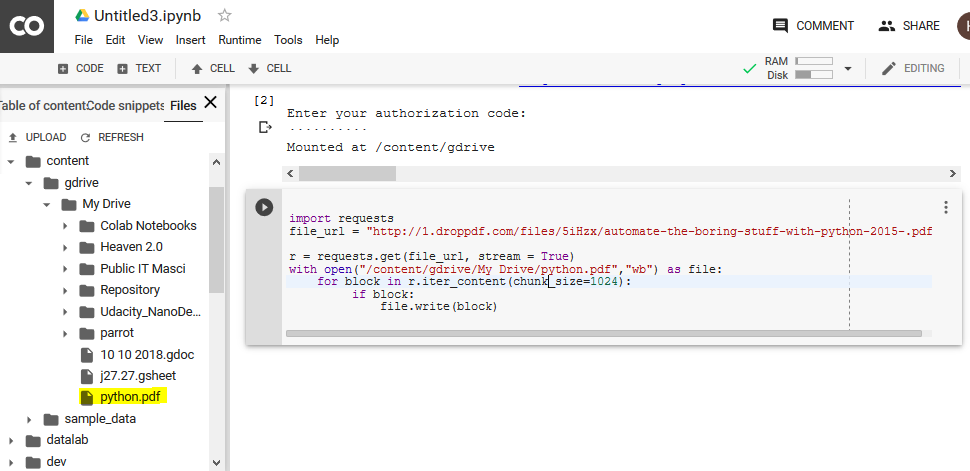

Using Requests Library to Download Files

While urllib provides basic functionality to download files, it can sometimes be cumbersome to handle certain scenarios. This is where third-party libraries like requests come in handy. Requests is a popular HTTP library that simplifies the process of sending HTTP requests in Python.

To use the requests library, you need to install it first using pip:

```
pip install requests
```

Once installed, you can import the library and use its functions to download files. Let’s see how it works:

```python
import requests

url = 'https://example.com/somefile.txt'
path = '/path/to/save/somefile.txt'

response = requests.get(url)

with open(path, 'wb') as file:
    file.write(response.content)
```

In this example, we import the requests library and assign the URL and the local path. We then use the requests.get() function to send a GET request to the URL and store the response in a variable. Finally, we open a file in binary write mode and write the content of the response to the file.

Frequently Asked Questions (FAQs):

Q: What is the difference between urllib and requests?

A: While both urllib and requests can be used to download files from a URL, requests offers a more user-friendly interface and additional features, making it easier to handle complex scenarios.

Q: Can I download multiple files using these methods?

A: Yes, you can download multiple files by simply looping over the URLs and paths, calling the appropriate download function for each iteration.

Q: How can I handle authentication or cookies when downloading files?

A: The requests library provides methods to handle authentication and cookies. You can pass the necessary credentials using the auth parameter or manage cookies using the cookies parameter.

Q: Is it possible to download large files using these methods?

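A: Yes. Both urllib and requests can read a response in chunks rather than loading it entirely into memory. With requests, pass `stream=True` and iterate over `response.iter_content()`; with urllib, read the response object returned by `urlopen()` block by block. Note that `response.content`, as used in the example above, loads the whole file into memory.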

Q: Are there any other third-party libraries for downloading files in Python?

A: Yes, besides requests, you can also use libraries like wget and urllib3 to download files from a URL.

Conclusion

Downloading files from a URL is a common task in Python, and there are multiple approaches to accomplish this. In this article, we explored two popular methods: using the built-in urllib library and the third-party requests library. Both methods come with their own advantages, and the choice depends on the complexity of the task at hand. By following the provided examples, you can easily download files from URLs using Python and incorporate this functionality into your own projects.


Download Image From Url Python

Python is widely known as a versatile programming language that offers a range of powerful libraries and modules. When working with images, Python provides several methods to download images from a given URL. In this article, we’ll explore various techniques to download images from URLs using Python, along with some practical examples. So, let’s dive in!

Downloading Images using the urllib.request Module:

The urllib.request module is a built-in Python library that allows us to open and read URLs. It provides functionality for downloading files from the internet, including images. Here’s a step-by-step guide on how to use this module for image downloading:

Step 1: Import the required module.

```python
import urllib.request
```

Step 2: Define the URL of the image you want to download.

```python
image_url = "https://example.com/image.jpg"
```

Step 3: Specify the path where you want to save the downloaded image.

```python
save_as = "path/to/save/image.jpg"
```

Step 4: Use the `urlretrieve()` function to download the image.

```python
urllib.request.urlretrieve(image_url, save_as)
```

By executing these steps, Python will automatically download the image from the provided URL and save it in the specified location.

Downloading Images using the Requests Library:

The Requests library is a widely used third-party library in Python for handling HTTP requests. It simplifies the process of performing HTTP requests and downloading files, including images. Here’s how you can use the Requests library to download images:

Step 1: Install the Requests library (if not already installed) using pip:

```
pip install requests
```

Step 2: Import the requests module.

```python
import requests
```

Step 3: Define the URL of the image.

```python
image_url = "https://example.com/image.jpg"
```

Step 4: Send an HTTP GET request to fetch the image data.

```python
response = requests.get(image_url)
```

Step 5: Specify the path to save the downloaded image.

```python
save_as = "path/to/save/image.jpg"
```

Step 6: Open a file in binary write mode and write the image content.

```python
with open(save_as, "wb") as file:
    file.write(response.content)
```

This code snippet will download the image from the given URL and save it at the specified location.

Frequently Asked Questions (FAQs):

Q1. Can I download multiple images at once using these methods?

Absolutely! To download multiple images, you can either loop through a list of URLs and save them one by one or use parallel processing techniques to download them concurrently. Libraries like `multiprocessing` or `asyncio` can help you achieve the latter.

Q2. How can I handle errors while downloading images?

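Both methods discussed above can raise exceptions during the download process. For example, `urllib.request.urlretrieve()` raises `urllib.error.HTTPError` when the server returns an error status, while requests raises `requests.exceptions.HTTPError` only if you call `response.raise_for_status()`. To handle such errors, enclose the downloading code inside a try-except block and handle the exceptions accordingly.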

Q3. Can I specify a different name for the downloaded image?

Yes, you can specify a different filename while saving the downloaded image. Instead of hardcoding the filename with an extension, you can use dynamic naming techniques like extracting the filename from the URL or naming it based on a specific pattern.
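Extracting the filename from the URL can be done with the standard library alone. The following is a sketch; `filename_from_url` and its `default` fallback are hypothetical names chosen for illustration:

```python
import posixpath
from urllib.parse import unquote, urlparse


def filename_from_url(url, default="image.jpg"):
    """Derive a local filename from the last path segment of url."""
    # urlparse(...).path leaves out the query string and fragment.
    path = unquote(urlparse(url).path)
    name = posixpath.basename(path)
    # Fall back when the URL ends in "/" or in a special directory name.
    if name in ("", ".", ".."):
        return default
    return name
```

Because the name comes from a remote source, it is worth validating further (for example against a list of allowed extensions) before using it in a file path.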

Q4. Is it possible to resize the downloaded images using Python?

Certainly! After downloading an image, you can use image processing libraries like Pillow (the maintained fork of `PIL`, the Python Imaging Library) or `opencv-python` to resize the image. These libraries provide convenient methods to manipulate and transform images according to your requirements.

Q5. Can I download images from password-protected URLs?

Yes, downloading images from password-protected URLs is possible. You can include authentication parameters within the request headers or use libraries like `requests` that allow you to pass credentials while making the HTTP request.

Conclusion:

In this article, we explored different ways to download images from URLs using Python. Both the in-built `urllib.request` module and the third-party library `requests` offer convenient methods to accomplish this task. Be it a single image or multiple images, Python provides flexibility to cater to your specific requirements. Additionally, we discussed FAQs to address common queries related to image downloading in Python. With these techniques at your disposal, you can now download images effortlessly and leverage Python’s immense capabilities for your image processing and data analysis needs.

Python Download Picture From Website

Before diving into the technical details, let’s understand the basics of downloading pictures from a website. Web pages typically embed images in their HTML using `<img>` tags, where the `src` attribute points to the location of the image file. Python provides several libraries, such as BeautifulSoup and Requests, that enable us to interact with websites, locate image URLs in HTML documents, and save them to our local machine.

To begin, ensure that you have Python installed on your system. You can verify this by opening a terminal or command prompt and typing `python --version`. If Python is not installed, visit the official Python website and download the appropriate version for your operating system.

Once you have Python installed, you’ll need to install the necessary libraries. The most commonly used libraries for this task are BeautifulSoup and Requests. To install these libraries, open your terminal or command prompt and run the following commands:

```
pip install beautifulsoup4
pip install requests
```

With the libraries installed, we can now begin the process of downloading pictures from a website. First, import the required libraries into your Python script:

```python
import requests
from bs4 import BeautifulSoup
```

Next, specify the URL of the webpage from which you want to download pictures. For example, let’s consider a fictional website “www.example.com” that contains a gallery of images:

```python
url = "http://www.example.com/gallery"
```

Now, use the Requests library to send an HTTP GET request to the specified URL and retrieve the webpage’s HTML content:

```python
response = requests.get(url)
```

To parse the HTML content and locate the URLs of the images, initialize a BeautifulSoup object:

```python
soup = BeautifulSoup(response.content, "html.parser")
```

At this point, you can use BeautifulSoup’s built-in functionality to locate all `<img>` tags and retrieve the value of the `src` attribute:

```python
image_tags = soup.find_all("img")
# Skip <img> tags that have no src attribute
image_urls = [img["src"] for img in image_tags if img.get("src")]
```

This code snippet finds all `<img>` tags in the HTML and retrieves the value of the `src` attribute, which represents the URL of the image. You can now iterate over the list of URLs and use the Requests library to download each image:

```python
for i, image_url in enumerate(image_urls, start=1):
    response = requests.get(image_url)
    # Specify the location where you want to save the image;
    # the counter keeps each download from overwriting the previous one
    with open(f"path/to/save/image{i}.jpg", "wb") as f:
        f.write(response.content)
```

Replace “path/to/save/” with the desired directory. The counter in the file name gives each image a unique name; you can also modify this snippet to save the images in separate directories based on your requirements. Note that `src` values are often relative, while `requests.get()` needs an absolute URL; `urllib.parse.urljoin(url, src)` can produce one.
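One way to avoid overwriting files that already exist in the target directory is to add a numeric suffix when a name is taken. The helper below is a sketch with a hypothetical name, `unique_path`; it is not safe against several processes writing to the same directory at the same time:

```python
import os


def unique_path(directory, filename):
    """Return a path in directory that does not exist yet.

    If filename is taken, "_1", "_2", ... is inserted before the extension.
    """
    stem, ext = os.path.splitext(filename)
    candidate = os.path.join(directory, filename)
    counter = 1
    while os.path.exists(candidate):
        candidate = os.path.join(directory, f"{stem}_{counter}{ext}")
        counter += 1
    return candidate
```

Inside the download loop, the file could then be opened with `open(unique_path("path/to/save", "image.jpg"), "wb")`.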

That’s it! You’ve successfully written a Python script to download pictures from a website. By leveraging the power of Python and libraries like BeautifulSoup and Requests, you can quickly automate this task and save valuable time.

FAQs

Q: Can I download images from any website using this method?

A: Yes, this method works for most websites. However, some websites might employ measures, such as CAPTCHA or anti-scraping mechanisms, to prevent automated image downloading.

Q: Can I download images from multiple webpages?

A: Yes, you can modify the script to retrieve images from multiple webpages by iterating over a list of URLs.

Q: Are there any legal restrictions when downloading images from websites?

A: It is crucial to respect the copyright and terms of use of the images you download. Ensure that you have the necessary permissions or the images are freely available for download.

Q: Can I download images in a specific format (e.g., PNG, JPEG)?

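A: Renaming the file or changing its extension does not convert the image data. To store the image in a specific format, open the downloaded file with an image library such as Pillow and save it again in the desired format.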

Q: How can I optimize the script for faster image downloading?

A: You can leverage multithreading or asynchronous programming techniques to download images concurrently, improving the script’s performance.
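Since downloads spend most of their time waiting on the network, a thread pool from the standard library is often enough. The sketch below takes the per-URL download function as a parameter, so any of the approaches in this article can be plugged in; `download_all` and `download_one` are hypothetical names:

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def download_all(urls, download_one, max_workers=4):
    """Run download_one(url) for every URL in a thread pool.

    Returns (results, errors): two dicts keyed by URL.
    """
    results, errors = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Map each future back to its URL so failures can be reported.
        futures = {pool.submit(download_one, url): url for url in urls}
        for future in as_completed(futures):
            url = futures[future]
            try:
                results[url] = future.result()
            except Exception as exc:  # one failed download should not stop the rest
                errors[url] = exc
    return results, errors
```

Keeping `max_workers` small is a courtesy to the server being downloaded from, and it also limits how many files are open at once.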

In conclusion, Python provides a straightforward and powerful way to download pictures from websites. By utilizing libraries like BeautifulSoup and Requests, you can automate the process and save images for various purposes. Remember to use this capability responsibly, respecting copyright and usage terms when downloading images. Happy coding!

Python Code To Download Images From Website

Introduction:

Downloading images from websites is a common task in web scraping and data collection pipelines. Python provides various libraries and modules that make this process straightforward and efficient. In this article, we will explore the Python code that allows us to download images from websites. We will cover different methods, explore their implementation, and provide examples for each step. So, let’s get started!

Downloading Images using the Requests Library:

The Requests library is a popular Python module for making HTTP requests. It provides a simple and elegant way to retrieve content from the web, including images. To download images using the Requests library, follow the steps below:

1. Install the Requests library

```
pip install requests
```

2. Import the necessary modules

```python
import requests
```

3. Send an HTTP request to the image URL

```python
url = "https://example.com/image.jpg"
response = requests.get(url)
```

4. Save the image to a file

```python
with open("image.jpg", "wb") as f:
    f.write(response.content)
```

The above code downloads the image from the specified URL and saves it as “image.jpg” in the current directory. Replace the URL with the actual image URL you want to download.

Downloading Images using the BeautifulSoup Library:

BeautifulSoup is a powerful library for parsing HTML and XML documents. It can be used to extract information from web pages and, in our case, to download images. Here’s how to download images using the BeautifulSoup library:

1. Install the BeautifulSoup library

```
pip install beautifulsoup4
```

2. Import the necessary modules

```python
import requests
from bs4 import BeautifulSoup
```

3. Send an HTTP request to the webpage

```python
url = "https://example.com"
response = requests.get(url)
```

4. Parse the HTML content using BeautifulSoup

```python
soup = BeautifulSoup(response.content, "html.parser")
```

5. Find the image tags within the parsed HTML

```python
image_tags = soup.find_all("img")
```

6. Extract the image URLs and download the images

```python
for tag in image_tags:
    image_url = tag["src"]
    image_response = requests.get(image_url)
    with open(image_url.split("/")[-1], "wb") as f:
        f.write(image_response.content)
```

The above code finds all the `<img>` tags in the webpage’s HTML source and extracts the source URLs. It then downloads each image and saves it with the original filename in the current directory.
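In practice, many `src` values are relative (`/img/a.png`) or protocol-relative (`//cdn.example.org/d.png`), and `requests.get()` cannot fetch those as they are. A sketch of resolving them against the page URL with the standard library follows; `absolute_image_urls` is a hypothetical helper name:

```python
from urllib.parse import urljoin


def absolute_image_urls(page_url, src_values):
    """Resolve <img> src values against the URL of the page they came from."""
    urls = []
    for src in src_values:
        if not src or src.startswith("data:"):
            continue  # skip missing values and inline data URIs
        urls.append(urljoin(page_url, src))
    return urls
```

With the variables from the steps above, this could be called as `absolute_image_urls(url, [tag.get("src") for tag in image_tags])`, and the download loop would then iterate over the returned list.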

Frequently Asked Questions (FAQs):

Q1. Can I specify a different directory to save the downloaded images?

Yes, you can specify a different directory by changing the path in the `open()` function. For example, to save the images in the “images” directory within the current directory, modify the code as follows:

```python
with open("images/" + image_url.split("/")[-1], "wb") as f:
    f.write(image_response.content)
```

Q2. How can I handle errors if the image URL is not accessible?

You can use try-except blocks to handle errors while downloading images. For instance, you can catch exceptions related to network errors or invalid URLs. Here’s an example:

```python
try:
    image_response = requests.get(image_url)
    image_response.raise_for_status()  # Raises an exception for 4xx and 5xx status codes
    with open("image.jpg", "wb") as f:
        f.write(image_response.content)
except requests.exceptions.RequestException as e:
    print("Error downloading image:", e)
```

Q3. How can I download images from multiple webpages?

To download images from multiple webpages, you can wrap the code inside a loop that iterates over a list of URLs. For each URL, the code executes the image downloading steps. Here’s an example:

```python
url_list = ["https://example.com/page1", "https://example.com/page2"]  # …and so on

for url in url_list:
    # Downloading code here
    pass
```

Conclusion:

In this article, we have discussed two methods to download images from websites using Python. The first method utilizes the Requests library to send HTTP requests and save the image content. The second method utilizes the BeautifulSoup library to parse HTML content and extract image URLs. Both methods provide flexibility and ease of use, allowing you to efficiently download images from websites. Remember to respect the website’s terms of service and licensing when downloading images, and happy scraping!
