Pyarrow Table To Numpy

PyArrow is a powerful Python library that provides a bridge between Apache Arrow and Python data structures, allowing for efficient and fast data manipulation and processing. One of the key functionalities offered by PyArrow is the ability to convert data tables into Numpy arrays, enabling seamless integration with the extensive ecosystem of Numpy and other data analysis libraries.

A PyArrow Table is a columnar data structure made up of named columns, each holding values of a single data type. Each column is stored as a `ChunkedArray`, a sequence of one or more Arrow Arrays, which provides efficient storage and retrieval of data. Each column in a PyArrow Table has a specific data type, such as an integer, floating-point, string, or timestamp type, among others.

When converting a PyArrow Table to a NumPy array, it is important to understand how PyArrow's data types map to NumPy. PyArrow supports primitive types such as integers and floats as well as nested types such as lists, structs, and maps. Primitive types map directly to the corresponding NumPy dtypes, while strings and nested types generally become NumPy arrays of Python objects (dtype `object`).

Converting PyArrow Table to Numpy Array

Converting a PyArrow Table to a NumPy array usually works column by column. `table.column(name)` returns a column as a `ChunkedArray`, and its `to_numpy()` method converts that column into a one-dimensional NumPy array. The per-column arrays can then be combined into a two-dimensional array if needed.

During the conversion process, it is important to handle missing values (nulls) appropriately. Arrow records nulls in a separate validity bitmap, which plain NumPy arrays lack. By default, `to_numpy()` represents nulls in numeric columns as `NaN`, so an integer column that contains nulls is upcast to floating point. If you need to keep the original dtype, you can build a NumPy masked array from the column's values and its null mask, preserving the integrity of the data while still marking the missing values.

In some cases, you may need custom conversion logic, such as parsing strings into numbers or deriving new columns before the data leaves Arrow. The `pyarrow.compute` module provides casting, arithmetic, and other functions for these transformations, so you can tailor the data to your specific requirements before the final `to_numpy()` call.

Handling Large Datasets and Memory Optimization

When dealing with large datasets, it is important to adopt strategies that ensure efficient processing and memory management during the conversion process. PyArrow provides several techniques for handling large datasets effectively.

One approach is to process the data in smaller chunks (record batches) instead of all at once. Converting one batch at a time keeps peak memory usage bounded, and independent batches can also be distributed across multiple threads or processes to improve throughput.

Another memory optimization is to read only the data you need from disk or other storage. PyArrow can read file formats such as Parquet and CSV, both from local disk and from remote storage such as Amazon S3. With Parquet, you can select specific columns and read the file in batches, so the entire dataset never has to be loaded into memory at once.

Performance Considerations and Optimization Techniques

To optimize the conversion process, it is important to identify potential bottlenecks and areas for improvement. PyArrow provides several features and techniques to enhance the performance of the conversion from PyArrow Table to Numpy array.

Multi-threading can significantly speed up conversion, especially for large datasets. Several PyArrow operations, including `Table.to_pandas()`, `pyarrow.parquet.read_table()`, and the CSV reader (through `pyarrow.csv.ReadOptions`), accept a `use_threads` option and run on Arrow's internal thread pool, whose size is reported by `pyarrow.cpu_count()` and can be changed with `pyarrow.set_cpu_count()`.

Leveraging PyArrow's built-in compute functions can also improve performance. PyArrow offers optimized implementations of common operations such as filtering, sorting, and aggregating. Applying them before conversion means NumPy only receives the rows and columns you actually need, which reduces both processing time and the amount of data copied.

Error Handling and Data Validation

During the conversion process, you should be prepared to handle errors. PyArrow raises specific exception types, such as `pyarrow.ArrowInvalid`, `pyarrow.ArrowTypeError`, and `pyarrow.ArrowNotImplementedError`, which you can catch with ordinary `try`/`except` blocks.

Additionally, it is good practice to validate the data before and after conversion. PyArrow provides `Table.validate()` to check a table's internal consistency and `Schema.equals()` to compare a table's schema with the one you expect; on the NumPy side, you can check the resulting array's `dtype` and `shape`. Checking data types, dimensions, and similar properties helps ensure the accuracy and reliability of the converted data.

For specific use cases, you may need custom error handling. Because PyArrow errors are regular Python exceptions, you can wrap conversions in your own `try`/`except` logic, for example to fall back to a copying conversion, log the offending column, or substitute default values.

Advanced Features and Functionality

PyArrow also offers features for more complex conversions. One is handling nested data structures and nested schemas: `Table.flatten()` expands struct columns into one column per field, and `pyarrow.compute.list_flatten()` flattens list columns, producing flat columns that convert cleanly to NumPy arrays.

Schema and metadata handling is another important aspect of PyArrow Table to NumPy conversion. PyArrow lets you inspect and modify a table's schema and its key-value metadata (for example with `Table.replace_schema_metadata()`). Because a NumPy array carries only a dtype and a shape, read any metadata you need before converting.

Integration with Other Libraries and Tools

The converted Numpy array can be seamlessly integrated with other data analysis libraries, leveraging their capabilities for further analysis and visualization. PyArrow provides interoperability with popular libraries like Pandas, allowing you to convert a PyArrow Table to a Pandas DataFrame and vice versa. This integration enables a smooth data flow between PyArrow, Numpy, and Pandas, enabling you to leverage the strengths of each library for your data analysis tasks.

In addition, PyArrow can be integrated with various machine learning frameworks for model training and prediction. By converting the PyArrow Table to a Numpy array, you can readily feed the data into machine learning algorithms implemented in frameworks like scikit-learn or TensorFlow, opening up opportunities for advanced data analysis and modeling.

FAQs

Q: How do I install PyArrow?

A: PyArrow can be installed using pip. Simply run `pip install pyarrow` to install the library and its dependencies.

Q: What is Apache Arrow?

A: Apache Arrow is a language-independent, in-memory columnar data format, together with libraries that implement it in several languages. PyArrow is the Python library for Apache Arrow; it is built on the Arrow C++ implementation and provides the interface between Arrow and Python data structures.

Q: How can I convert a Pandas DataFrame to a PyArrow Table?

A: PyArrow provides the `pyarrow.Table.from_pandas()` function to convert a Pandas DataFrame to a PyArrow Table. This allows for easy interoperability between Pandas and PyArrow.

Q: What is PyArrow compute?

A: PyArrow compute is a submodule of PyArrow that provides a set of high-level functions for data manipulation and analysis. It allows you to perform common operations like filtering, aggregating, and transforming data efficiently.

Q: What is PyArrow dataset?

A: `pyarrow.dataset` is a PyArrow module for working with tabular datasets that may span many files and may be larger than memory. It supports formats such as Parquet and CSV, column selection and row filtering during scanning, and multithreaded reads; it does not by itself distribute computation across machines.

Q: What is Pandas PyArrow?

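A: There is no separate library by this name; the term usually refers to pandas' built-in PyArrow integration. pandas can use PyArrow for conversion (`pyarrow.Table.from_pandas()` and `Table.to_pandas()`), as a parsing engine (`engine="pyarrow"` in `pd.read_csv()`), and, in recent pandas versions, as a storage backend for columns through `pd.ArrowDtype` and `dtype_backend="pyarrow"`. These options aim to improve the performance and efficiency of data conversion and processing.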

Q: Can I write a PyArrow Table to a dataset?

A: Yes. `pyarrow.dataset.write_dataset()` writes a table as a dataset of one or more files, optionally partitioned by column values, in file formats such as Parquet, Arrow IPC (Feather), or CSV, to local or remote storage. For Parquet specifically, `pyarrow.parquet.write_to_dataset()` offers a similar partitioned write.

Q: What is PyArrow fsspec?

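A: fsspec is a separate Python library that provides a common interface to many file systems and protocols (such as S3, GCS, or HDFS); it is not a PyArrow submodule. PyArrow can work with fsspec file systems: many PyArrow readers and writers accept an fsspec file system through their `filesystem` argument, and `pyarrow.fs.PyFileSystem(pyarrow.fs.FSSpecHandler(fs))` wraps one as a native PyArrow file system.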

Q: How can I convert a Numpy array to a PyArrow Table?

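A: You do not need to go through pandas. `pyarrow.array()` converts a one-dimensional NumPy array into an Arrow array, and `pyarrow.table()` (or `pyarrow.Table.from_arrays()`) builds a table from a dict or list of such arrays; a two-dimensional NumPy array can be converted column by column. If your data is already in a pandas DataFrame, `pyarrow.Table.from_pandas()` works as well.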

In conclusion, PyArrow offers a powerful and efficient solution for converting PyArrow Table to Numpy arrays. By understanding the table schema, handling large datasets efficiently, optimizing performance, and leveraging advanced features, you can seamlessly integrate data from PyArrow into the extensive ecosystem of Numpy and other data analysis libraries.

Pyarrow And The Future Of Data Analytics – Presented By Alessandro Molina

How To Convert Dataframe To Numpy?

If you are working with data analysis or machine learning tasks in Python, you would undoubtedly encounter two powerful libraries: Pandas and NumPy. Pandas provides a highly efficient data structure called DataFrame, which allows you to easily manipulate, analyze, and visualize data. On the other hand, NumPy offers support for performing mathematical operations on large, multi-dimensional arrays and matrices. However, there may be instances where you need to convert your Pandas DataFrame to a NumPy array. In this article, we will explore different methods to convert a DataFrame to NumPy and highlight their potential use cases.

Why convert a DataFrame to NumPy?

Before diving into the conversion methods, it is useful to understand why you might need to convert a DataFrame to a NumPy array. Although DataFrame offers a wide range of functionality like data cleaning, filtering, and aggregation, NumPy provides lower-level numerical routines, such as the linear algebra functions in `numpy.linalg`, and its arrays are the input format many scientific and machine learning libraries expect. By converting your data to NumPy arrays, you can take advantage of these capabilities to perform complex operations on your datasets.

Conversion Methods:

1. Using the `.values` attribute:

The simplest way to convert a DataFrame to NumPy is by using the `.values` attribute. The attribute returns the data of the DataFrame as a two-dimensional NumPy array. For example, if you have a DataFrame named `df`, you can obtain the corresponding NumPy array with `df.values`. The pandas documentation recommends `to_numpy()` over `.values` in new code.
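A minimal example with a hypothetical all-integer DataFrame:

```python
import pandas as pd

df = pd.DataFrame({"a": [1, 2, 3], "b": [4, 5, 6]})

# Two-dimensional ndarray: one row per DataFrame row, one column per column
arr = df.values
print(arr.shape, arr.dtype)
```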

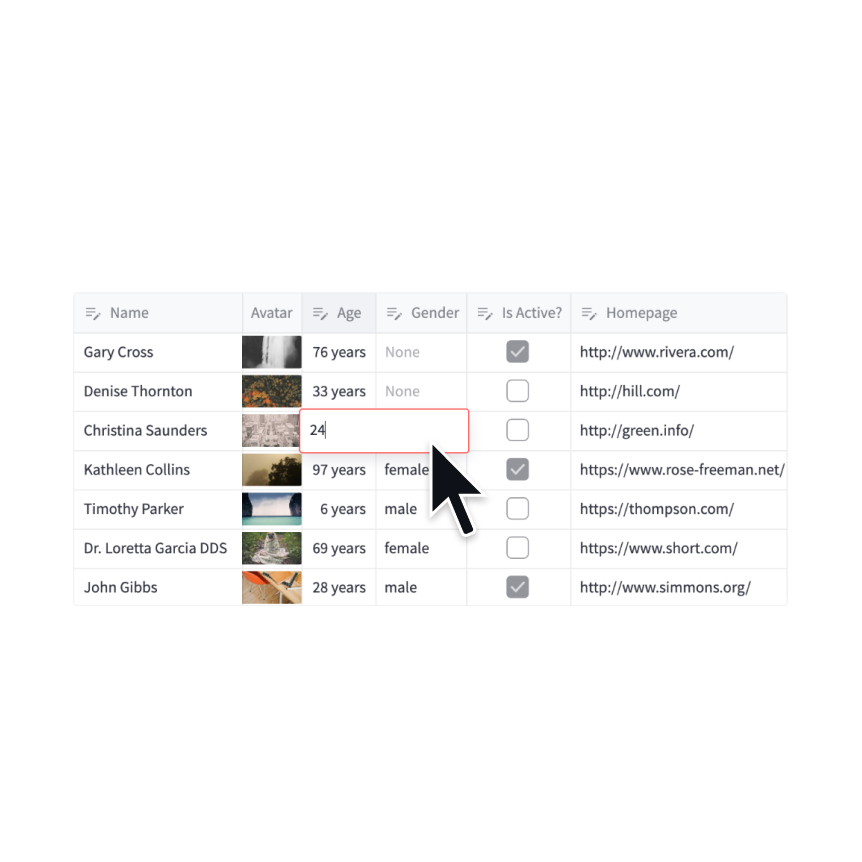

2. Using the `to_numpy()` method:

Pandas provides a method called `to_numpy()`, which can be applied directly to a DataFrame object to convert it to a NumPy array. It gives you more control over the conversion than `.values`: the `dtype` argument sets the data type of the resulting array, `copy=True` guarantees a fresh copy, and `na_value` sets what missing values become.
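A short sketch of the `dtype` and `copy` arguments on a hypothetical DataFrame:

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({"a": [1, 2, 3], "b": [0.5, 1.5, 2.5]})

# Request a specific dtype for the whole array
arr32 = df.to_numpy(dtype=np.float32)

# copy=True ensures the array does not share memory with the DataFrame
arr_copy = df.to_numpy(copy=True)
print(arr32.dtype, arr_copy.dtype)
```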

3. Specifying numerical dtypes:

By default, Pandas infers a dtype for each column from the underlying data. To control the dtype of the resulting NumPy array, you can set dtypes before converting. The `dtype` parameter of the Pandas `DataFrame()` constructor applies a single dtype to every column, while `astype()` accepts a mapping from column names to dtypes. When all columns share a dtype, the NumPy array has that dtype; columns with different dtypes are promoted to a common dtype.
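Both approaches, sketched on hypothetical data:

```python
import numpy as np
import pandas as pd

# One dtype for every column through the constructor's dtype parameter
df = pd.DataFrame({"a": [1, 2], "b": [3, 4]}, dtype="float32")
arr = df.to_numpy()  # every column is float32, so the array is float32

# A different dtype per column through astype()
df2 = pd.DataFrame({"a": [1, 2], "b": [0.5, 1.5]}).astype({"a": "int32", "b": "float64"})
arr2 = df2.to_numpy()  # int32 and float64 are promoted to float64
print(arr.dtype, arr2.dtype)
```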

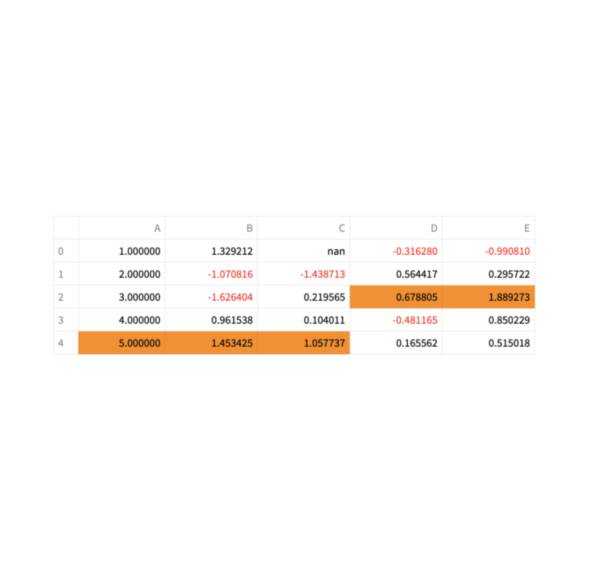

4. Handling missing values:

While converting a DataFrame to NumPy, it is vital to handle missing values appropriately. In NumPy-backed float columns, Pandas represents missing values as `NaN` (“Not a Number”), a special floating-point value that NumPy float arrays support. Integer and boolean NumPy arrays have no missing-value marker, which is why Pandas stores an integer column with missing values as float64 unless you use a nullable dtype such as `Int64`. When converting a DataFrame to NumPy, these NaN values are retained in the resulting array. To detect, remove, or replace them, you can use NumPy functions such as `np.isnan()` and `np.nan_to_num()`, or pass `na_value` to `to_numpy()`.
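A sketch of common NaN handling after conversion, using a hypothetical DataFrame with two missing values:

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({"a": [1.0, np.nan, 3.0], "b": [4.0, 5.0, np.nan]})
arr = df.to_numpy()  # float64; missing values come through as NaN

nan_mask = np.isnan(arr)              # True where a value is missing
filled = np.nan_to_num(arr, nan=0.0)  # replace NaN with 0.0
col_means = np.nanmean(arr, axis=0)   # column means that ignore NaN

# Alternatively, fill the missing values during conversion
filled_direct = df.to_numpy(na_value=0.0)
```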

FAQs:

Q1. Can I convert a DataFrame with non-numeric data to a NumPy array?

A1. Yes, you can convert a DataFrame with non-numeric columns to a NumPy array. However, the resulting NumPy array will have a data type of `object`, as it cannot represent non-numeric values as numeric arrays.

Q2. Will the conversion preserve the column names and index labels of the DataFrame?

A2. No, by default, the conversion methods discussed in this article do not preserve the column names and index labels of the DataFrame. The resulting NumPy array will only contain the underlying data. If you want to retain the column names and index labels, you can store them separately as NumPy arrays or convert them to lists.

Q3. Can I convert a multi-index DataFrame to a NumPy array?

A3. Yes, you can convert a multi-index DataFrame to a NumPy array using the methods mentioned in this article. However, the resulting NumPy array will not preserve the hierarchical structure of the multi-index. It will be a two-dimensional array containing the flattened data.

In conclusion, understanding how to convert a DataFrame to NumPy arrays can be immensely beneficial when you need to leverage the advanced mathematical and statistical functionalities provided by NumPy. By using the methods described in this article, you can easily convert your Pandas DataFrames to NumPy arrays while considering the nuances of missing values and data types.

How To Convert Pandas Series To Numpy?

Pandas, the open-source data analysis and manipulation library, provides powerful tools for working with structured data. One of its primary data structures is the Series, which is similar to a one-dimensional array. While the Pandas Series is highly efficient for data analysis tasks, there may be situations where you need to convert the Series into a NumPy array. Converting a Pandas Series to NumPy is a straightforward process that allows you to leverage the wide range of NumPy capabilities. In this article, we will explore various ways to convert a Pandas Series to a NumPy array and also discuss frequently asked questions about this topic.

Converting a Pandas Series to NumPy using the `values` attribute:

The simplest and most commonly used method to convert a Pandas Series to a NumPy array is to access the `values` attribute. The `values` attribute returns a NumPy array containing the data from the Series. Let’s consider an example where we have a Pandas Series called `series_data`:

```
import pandas as pd
import numpy as np

series_data = pd.Series([1, 2, 3, 4, 5])

# .values returns the Series data as a NumPy array
numpy_array = series_data.values
```

In the code snippet above, the `values` attribute returns a NumPy array `[1, 2, 3, 4, 5]`, which is stored in the variable `numpy_array`. Now, you can utilize NumPy’s vast array operations and mathematical functions on this converted array.

Converting a Pandas Series to NumPy using the `to_numpy()` method:

Another way to convert a Pandas Series to a NumPy array is by using the `to_numpy()` method. This method was introduced in Pandas version 0.24.0 and provides a more explicit way of converting a Pandas Series to a NumPy array. Here’s an example that demonstrates its usage:

```
# series_data is the Series defined in the previous example
numpy_array = series_data.to_numpy()
```

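The `to_numpy()` method converts `series_data` into a NumPy array, which is then assigned to `numpy_array`. It is worth noting that for nullable dtypes such as `Int64` and `boolean`, `values` returns a pandas extension array rather than a NumPy array, whereas `to_numpy()` always returns a NumPy array and accepts `dtype` and `na_value` arguments that control how missing values (`pd.NA`) are represented.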
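A sketch with a hypothetical nullable integer Series containing one missing value:

```python
import numpy as np
import pandas as pd

s = pd.Series([1, None, 3], dtype="Int64")  # pandas nullable integer dtype

# .values gives the pandas extension array, not a NumPy ndarray
print(type(s.values))

# to_numpy() lets you pick the target dtype and what pd.NA becomes
arr = s.to_numpy(dtype="float64", na_value=np.nan)
print(arr)
```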

Converting a Pandas Series to NumPy using the `array` function:

An alternative approach to convert a Pandas Series to NumPy is by using the `array` function available in the NumPy library. Here is an example showcasing its usage:

```
numpy_array = np.array(series_data)
```

In this example, we utilize the `array` function of NumPy to convert the `series_data` into a NumPy array, which is assigned to `numpy_array`.

Frequently Asked Questions:

Q1: Why would I need to convert a Pandas Series to a NumPy array?

A1: While the Pandas Series offers extensive functionality for data analysis, NumPy arrays provide a broader range of mathematical operations and functions for scientific computing. Converting a Pandas Series to a NumPy array allows you to take advantage of these additional capabilities.

Q2: How does converting a Pandas Series to a NumPy array affect performance?

A2: For a NumPy-backed numeric Series, `to_numpy()` and `values` usually return the existing data without copying, so the conversion itself is cheap. It becomes more expensive when a copy is needed, for example for extension dtypes or when you request a different `dtype`. Once converted, NumPy's vectorized functions can be faster for some numeric workloads because they skip the index alignment that pandas performs.

Q3: Can I convert a multi-dimensional Pandas Series to a NumPy array?

A3: A Series is always one-dimensional, so `to_numpy()` returns a 1-D array. The closest case is a Series with a MultiIndex: converting it returns a 1-D array and drops the index levels. If you need a 2-D array, first use `unstack()` to move an index level into columns, then convert the resulting DataFrame.
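A sketch with a hypothetical two-level index, showing the 1-D result and the 2-D result after `unstack()`:

```python
import pandas as pd

idx = pd.MultiIndex.from_tuples([("a", 1), ("a", 2), ("b", 1), ("b", 2)])
s = pd.Series([10, 20, 30, 40], index=idx)

# Direct conversion: 1-D array, index levels dropped
flat = s.to_numpy()

# unstack() turns the inner level into columns: rows "a", "b"; columns 1, 2
grid = s.unstack().to_numpy()
print(flat.shape, grid.shape)
```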

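Q4: Does converting a Pandas Series to a NumPy array modify the original series?

A4: No, converting a Pandas Series to a NumPy array does not modify the original series. However, `values` and `to_numpy()` may return a view that shares memory with the Series rather than a copy, so modifying the array in place can also change the Series; in recent pandas versions with Copy-on-Write enabled, the returned array may instead be read-only. Pass `copy=True` to `to_numpy()` when you need an independent array.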
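A minimal sketch of getting an independent, writable array from a hypothetical Series:

```python
import pandas as pd

s = pd.Series([1, 2, 3])

# copy=True always returns a fresh array that can be modified safely
independent = s.to_numpy(copy=True)
independent[0] = 99  # the Series is unaffected
print(s.iloc[0], independent[0])
```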

Q5: Are there any limitations or considerations when converting a Pandas Series to a NumPy array?

A5: It is important to note that converting a Pandas Series to a NumPy array results in the loss of any associated metadata or index labels. Therefore, if you require the additional information provided by the series, it is advisable to store it separately or create a custom data structure that combines the metadata and the converted array.

In conclusion, converting a Pandas Series to a NumPy array offers flexibility and access to a broader range of mathematical and scientific computing capabilities. Whether you choose to utilize the `values` attribute, the `to_numpy()` method, or the `array` function, the conversion process is straightforward. Remember to consider any implications of losing metadata and index labels when converting the series. By leveraging the power of NumPy, you can further enhance your data analysis and manipulation workflows.


Pyarrow Compute

Data processing and analytics are crucial components in today’s data-driven world. With the ever-increasing volume and complexity of data, it has become imperative to have efficient tools and frameworks that can handle the processing and analysis of large datasets. PyArrow Compute is one such tool that has gained significant popularity among data scientists and engineers. In this article, we will delve into the world of PyArrow Compute, exploring its features, use cases, and advantages.

What is PyArrow Compute?

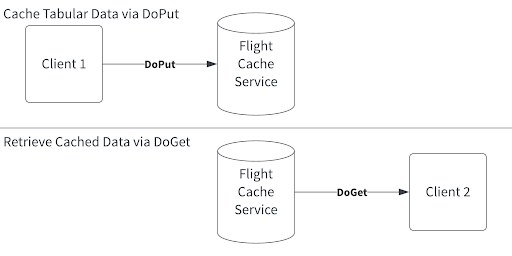

PyArrow Compute (`pyarrow.compute`) is the Python interface to the compute functions of the Apache Arrow project. Apache Arrow, known for its in-memory columnar format, provides an effective way to represent, manipulate, and transport large datasets efficiently across different programming languages and platforms. PyArrow Compute builds on this by exposing Arrow's high-performance C++ compute kernels, which operate directly on data in the Arrow memory format.

Because these functions work directly on in-memory Arrow data, no intermediate serialization or deserialization is required, and results can be converted to NumPy arrays or pandas objects when needed. This makes PyArrow Compute a useful complement to libraries like Pandas and NumPy for data scientists and engineers.

Features of PyArrow Compute

1. Columnar Processing: PyArrow Compute leverages the columnar format of Apache Arrow to perform operations on columns instead of rows, which significantly improves the efficiency of processing and analysis. This architecture allows for vectorized computations, reducing the overhead of loop-based operations and enhancing the performance of data processing tasks.

2. Broad Language Support: The compute functions are implemented in Arrow's C++ library for performance and are exposed through bindings such as PyArrow for Python and the arrow package for R, allowing users to work in their preferred language while enjoying the benefits of PyArrow Compute.

3. Seamless Integration: PyArrow Compute works on the same Arrow data that pandas and NumPy can exchange with PyArrow, so you can apply compute functions to data converted from those libraries and convert the results back, without rewriting or refactoring existing codebases.

4. Expressive API: PyArrow Compute provides a rich API for performing a wide range of computations, including aggregation, filtering, sorting, and arithmetic operations. The API is designed to be intuitive and expressive, enabling users to write concise and readable code for complex data processing tasks.

Use Cases of PyArrow Compute

PyArrow Compute can be applied to various data processing and analytical tasks, including:

1. Data Wrangling: PyArrow Compute simplifies the process of cleaning, transforming, and reshaping datasets. Its efficient columnar processing capabilities enable fast filtering, sorting, and aggregation operations, making it an excellent choice for data wrangling tasks.

2. Exploratory Data Analysis: When exploring large datasets, PyArrow Compute provides fast functions for summary statistics such as the mean, standard deviation, minimum and maximum, and value counts. The results can then be passed to Pandas, NumPy, or plotting libraries for further analysis and visualization.

3. Machine Learning: PyArrow Compute can enhance the performance of machine learning workflows. By efficiently performing feature engineering tasks and enabling fast computations on large datasets, PyArrow Compute accelerates the training and evaluation of machine learning models.

Benefits of PyArrow Compute

1. Improved Performance: By leveraging the columnar format of Apache Arrow and vectorized computations, PyArrow Compute delivers exceptional performance for data processing and analytics tasks. It significantly reduces the execution time of operations and enables the processing of larger datasets in memory.

2. Language Flexibility: With language bindings for Python, R, and other languages, PyArrow Compute allows data scientists and engineers to work with their preferred language while enjoying the benefits of a high-performance compute engine. This flexibility eliminates the need for language-specific workarounds and facilitates seamless collaboration across teams with diverse language preferences.

3. Scalability: PyArrow Compute runs within a single process and can use multiple CPU cores. Distributed frameworks such as Apache Spark use Arrow as a data interchange format, which lets Arrow-based code take part in large-scale workloads that run across multiple machines.

FAQs

1. Is PyArrow Compute suitable for small datasets?

Yes. PyArrow Compute works on datasets of any size, although for very small arrays the fixed overhead of each function call can outweigh the benefits of columnar processing and vectorized computation; the gains are largest on larger datasets.

2. Can PyArrow Compute be used with Apache Spark?

PyArrow Compute does not distribute work across a cluster by itself, but it can be used alongside Apache Spark. PySpark can use Arrow to exchange data with Python workers, for example in pandas UDFs, and PyArrow Compute functions can be applied to the data inside such Python functions while Spark handles distribution across the cluster.

3. Does PyArrow Compute support data visualization?

While PyArrow Compute focuses primarily on data processing and analytics, it can provide high-performance data transformations that can be used as a step towards data visualization. By leveraging PyArrow Compute’s fast operations, users can prepare their data for visualization libraries like Matplotlib or Plotly.

4. What are the memory requirements for PyArrow Compute?

PyArrow Compute operates on in-memory data structures, leveraging the Apache Arrow memory model. The memory requirements largely depend on the size of the dataset being processed. However, PyArrow Compute is designed to optimize memory usage and minimize memory overhead.

In conclusion, PyArrow Compute is a powerful tool for data processing and analytics, providing high-performance compute capabilities on in-memory data structures. With its columnar processing, broad language support, and seamless integration with popular data processing frameworks, PyArrow compute is gaining traction among data scientists and engineers. Whether it be data wrangling, exploratory data analysis, or machine learning tasks, PyArrow Compute delivers improved performance, language flexibility, and scalability, making it an indispensable asset in the world of data-driven insights.

Pyarrow Dataset

Introduction

Data manipulation and analysis form the backbone of many industries today. Organizations rely on robust and efficient tools to handle large datasets, perform computations, and extract meaningful insights. Pyarrow is a powerful open-source framework built to address these needs, offering a fast and efficient way to handle, process, and analyze large datasets in Python. With its extensive capabilities and ease of use, Pyarrow has gained popularity among data scientists and engineers worldwide. In this article, we will delve into Pyarrow’s dataset module and explore its features, benefits, and how it can enhance your data pipeline.

What is Pyarrow Dataset?

Pyarrow dataset (`pyarrow.dataset`) is a module within the Pyarrow ecosystem designed for working with large, possibly multi-file datasets, including datasets that are larger than memory. It provides a high-level abstraction over dataset operations and integrates with other Pyarrow components, making it a versatile tool for efficient data processing. Pyarrow dataset is built on Apache Arrow, a columnar in-memory data representation framework that offers high performance and compatibility across various programming languages.

Key Features of Pyarrow Dataset

1. Efficient Data Loading: Pyarrow dataset provides efficient mechanisms for loading data from multiple file formats, such as Parquet, CSV, Apache ORC, and more. It leverages Arrow’s columnar storage format to minimize memory overhead while loading and storing large datasets.

2. Parallel Processing: Pyarrow dataset reads and decodes data using multiple threads, taking advantage of multi-core processors to accelerate data processing. Combined with batch-by-batch scanning, this makes it well suited to datasets that cannot fit into memory.

3. Query Optimization: The dataset module incorporates powerful query optimization techniques, including predicate and projection pushdown, to accelerate query execution. By pushing down operations to the data source, Pyarrow dataset minimizes unnecessary data reading and reduces latency.

4. Type Inference: Pyarrow dataset supports automatic type inference, eliminating the need for explicit type declarations. It intelligently recognizes data types based on the source schema, simplifying the data loading process and saving valuable development time.

5. Data Transformations and Filters: When scanning, Pyarrow dataset can select columns and apply row filters built with `pyarrow.dataset.field()`. The resulting table supports further operations such as aggregations (`Table.group_by()`) and sorting (`Table.sort_by()`), which can be chained together to construct data processing pipelines.

Benefits of Pyarrow Dataset

1. High Performance: Pyarrow dataset leverages the power of Apache Arrow’s columnar in-memory format, providing exceptional performance and efficient memory utilization. It enables faster data loading, processing, and querying, allowing users to analyze large datasets with ease.

2. Memory Efficiency: By utilizing Arrow’s columnar format, Pyarrow dataset reduces memory overhead and enables efficient memory utilization. It optimizes memory allocation for better performance, making it suitable for handling datasets that exceed memory capacity.

3. Scalability: Pyarrow dataset uses multiple cores for parallel processing and can read data from remote and distributed file systems such as Amazon S3 and HDFS. Distributing computation across multiple machines requires a separate framework, such as Dask, which can use PyArrow for reading data.

4. Interoperability: Pyarrow dataset is built on the Apache Arrow framework, which ensures compatibility with various programming languages. This interoperability allows for seamless integration with other data manipulation libraries like Pandas, Dask, and NumPy.

5. Simplified Data Processing: Pyarrow dataset offers an intuitive and easy-to-use API for common data operations. Its expressive syntax allows users to perform complex transformations and filtering with minimal code, reducing development efforts and improving code readability.

Frequently Asked Questions (FAQs)

Q1. What types of datasets can Pyarrow handle?

Pyarrow dataset can read datasets stored in formats including Parquet, Feather/Arrow IPC, Apache ORC, CSV, and, in recent versions, line-delimited JSON. It supports both local file systems and remote ones such as Amazon S3.

Q2. Can Pyarrow dataset handle streaming data?

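Pyarrow dataset is designed for batch processing of data at rest, such as files on disk or in object storage. It scans data incrementally (for example with `Dataset.to_batches()`), so results can be processed batch by batch, but it is not a stream-processing system. To work with streams from systems like Apache Kafka or Apache Pulsar, you would typically consume messages with a separate client library and convert them into Arrow record batches yourself.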

Q3. Can Pyarrow dataset handle complex data transformations?

Yes, Pyarrow dataset provides a rich set of built-in operations for handling complex data transformations, aggregations, sorting, filtering, and more. These operations can be easily chained together to construct complex data processing pipelines.

Q4. How does Pyarrow dataset compare to other data manipulation libraries like Pandas?

Pyarrow dataset complements libraries like Pandas, providing enhanced performance and memory efficiency for handling large datasets. It seamlessly integrates with Pandas, allowing users to leverage Pyarrow’s capabilities while maintaining compatibility with existing Pandas workflows.

Q5. Is Pyarrow dataset suitable for use in a distributed computing environment?

Partly. Pyarrow dataset parallelizes work across the cores of a single machine and can read from distributed storage such as S3 or HDFS, but it does not distribute computation across machines on its own. For that, it can be combined with distributed frameworks; for example, Dask uses PyArrow for reading Parquet data, and Apache Spark uses Arrow for exchanging data with Python.

Conclusion

In today’s data-driven world, efficient handling and processing of large datasets are crucial for making informed decisions. Pyarrow dataset offers an excellent solution for performing data operations on large datasets with superior performance and memory efficiency. With its intuitive API, seamless integration with other libraries, and ability to handle complex transformations, Pyarrow dataset is a powerful tool for any data scientist or engineer. Incorporate Pyarrow dataset into your data pipeline to unlock the full potential of your data processing tasks and accelerate your data analysis workflow.

Pandas Pyarrow

In the world of data analysis and manipulation, Python’s pandas library has gained immense popularity due to its simplicity and flexibility. However, as datasets grow larger and more complex, there is a need for improved performance and efficient handling of big data. This is where Pandas PyArrow steps in. In this article, we will dive deep into Pandas PyArrow, exploring its features, advantages, and how you can leverage it for efficient data transfer and serialization.

What is Pandas PyArrow?

Apache Arrow is an open-source, cross-language development platform for in-memory columnar data, with implementations in Python, R, Java, C++, and other languages, which lets data move between them efficiently. PyArrow is its Python library; it provides high-performance serialization, file I/O (Parquet, Feather, CSV), and in-memory analytics. Pandas PyArrow, as used here, refers to pandas’ integration with PyArrow: pandas uses it to read and write formats such as Parquet and Feather, can use it as a CSV parsing engine, and can store DataFrame columns in Arrow-backed dtypes (`pd.ArrowDtype`).

Benefits of Using Pandas PyArrow:

1. Enhanced Performance: One of the main advantages of Pandas PyArrow is more efficient handling of large datasets. Arrow’s columnar memory layout allows data to be shared between Arrow-based tools, often without copying, and PyArrow’s CSV and Parquet readers are multithreaded. Arrow-backed string columns also typically use less memory than NumPy `object` columns. The result is often faster execution and lower memory consumption, especially for big data workloads.

2. Interoperability: Because Arrow has implementations in many languages, data that pandas exchanges in the Arrow format can be read by tools written in R, Java, C++, and other languages without a custom conversion layer. This makes Pandas PyArrow a good fit for projects where teams use different languages, and it widens the range of data processing tools and libraries your pandas data can flow into.

3. Efficient Serialization: PyArrow can serialize data structures such as pandas DataFrames at low cost. The Arrow IPC format (also used by Feather files) stores data in the same layout it has in memory, so writing and reading it involves little or no encoding work. This is particularly useful when transferring data over a network or persisting it to disk, and especially valuable for streaming applications and distributed computing frameworks.

4. Arrow Architecture: Pandas PyArrow builds on the Arrow columnar memory format, which is designed for efficient analytical operations. Storing data column by column keeps values of the same type contiguous in memory, which benefits both memory consumption and computational performance. It also makes it straightforward to build fast data-processing pipelines and to integrate with other systems or frameworks that support Arrow.

FAQs about Pandas PyArrow:

Q1. How does Pandas PyArrow compare to other serialization options like pickle or Parquet?

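Pandas PyArrow has several advantages here. Unlike pickle, which is Python-specific, the Arrow format can be read from many programming languages, so data serialized with PyArrow can be exchanged across them. For columnar data, Arrow’s IPC format is also often faster than pickle, because it stores data in its in-memory layout and supports zero-copy reads. Parquet is a different trade-off rather than a competitor: PyArrow reads and writes Parquet too, and pandas uses it by default for `to_parquet()` when it is installed. Parquet files are encoded and compressed, so they are usually smaller and well suited to long-term storage, while uncompressed Arrow IPC (Feather) files can be memory-mapped and loaded with little or no decoding, which suits low-latency interactive and analytical workloads.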

Q2. Can I use Pandas PyArrow with my existing Pandas codebase?

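In most cases, yes, with small changes. Adoption is opt-in per call rather than a global switch: `pd.read_csv(..., engine="pyarrow")` uses PyArrow’s multithreaded CSV parser, pandas 2.0 and later readers accept `dtype_backend="pyarrow"` to return Arrow-backed columns, and `DataFrame.to_parquet()` and `DataFrame.to_feather()` already use PyArrow when it is installed. Because some operations behave differently on Arrow-backed dtypes, test your code after switching.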

Q3. Does Pandas PyArrow support distributed computing?

Yes, Pandas PyArrow can be used alongside distributed computing frameworks such as Apache Spark. With the Spark setting `spark.sql.execution.arrow.pyspark.enabled` turned on, conversions such as `DataFrame.toPandas()` and `createDataFrame()` from a pandas DataFrame go through the Arrow columnar format, and pandas UDFs rely on Arrow as well. Moving data in columnar batches instead of serializing it row by row can substantially improve the performance of distributed analytical workloads on big data clusters.

Q4. Is Pandas PyArrow suitable for production deployments?

Yes. PyArrow is a mature, widely used library, and pandas itself relies on it for Parquet and Feather I/O. Its compatibility with other Arrow-based data processing and analytics tools makes it suitable for both small tasks and large-scale deployments, provided you test the specific operations your workflow depends on.

Q5. Are there any limitations or caveats when using Pandas PyArrow?

While Pandas PyArrow provides significant advantages for data manipulation and serialization, some operations need extra care. Not every pandas data type converts cleanly to Arrow: for example, an `object` column that mixes Python types (such as integers and strings) cannot be converted until you choose a single type for it. In addition, some pandas methods are not yet implemented for Arrow-backed dtypes or fall back to slower non-Arrow code paths. Check the official documentation and test your workflow before adopting Pandas PyArrow for critical workloads.

In conclusion, Pandas PyArrow brings better performance, cross-language interoperability, and efficient serialization to pandas-based data analysis. By building on the Arrow columnar memory format, it makes large datasets easier to handle. Whether you’re working with large datasets, collaborating across programming languages, or feeding data into distributed systems, Pandas PyArrow is a valuable addition to your data manipulation toolkit.

