Parallel Processing Jupyter Notebook

Introduction

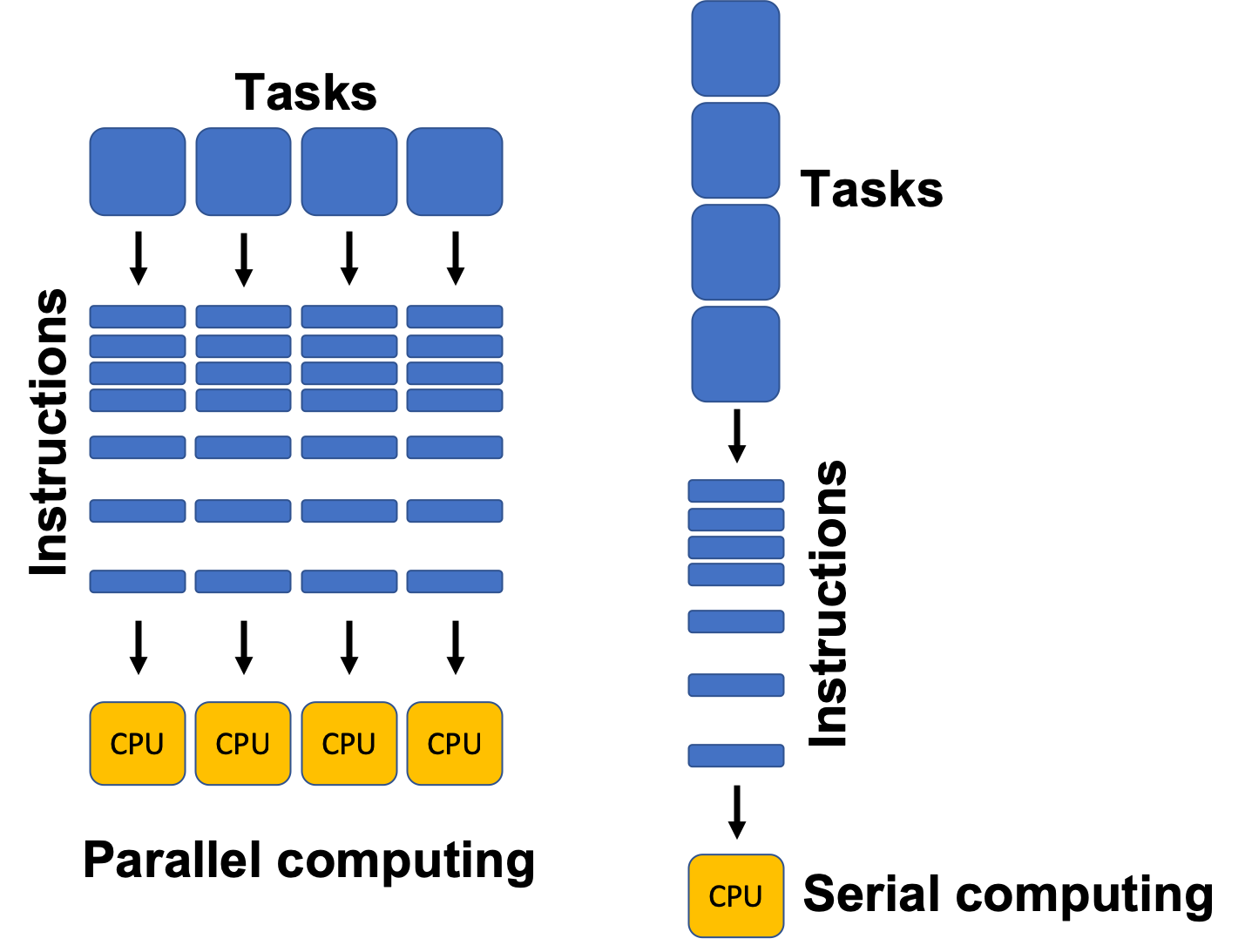

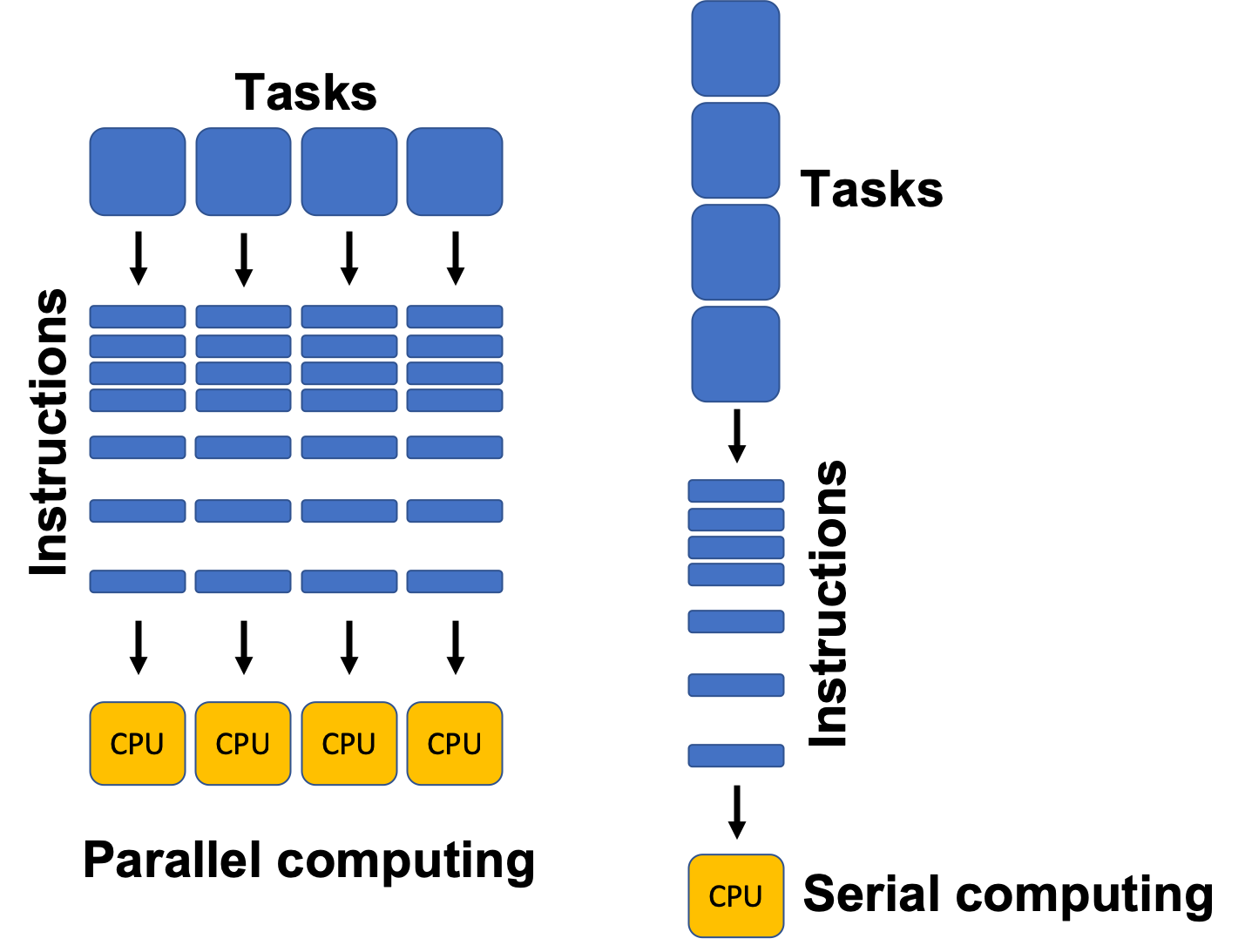

With the increasing demand for performing complex computations efficiently, parallel processing has become an indispensable technique for data scientists and programmers. Parallel processing allows for the simultaneous execution of multiple tasks to achieve faster and more efficient computations. In this article, we will explore the concept of parallel processing in Jupyter Notebook, a popular web-based interactive computing environment.

Benefits of Parallel Processing

Parallel processing offers several advantages, including:

1. Improved Performance: By distributing tasks across multiple processors or cores, parallel processing can significantly reduce the time required to execute computations, provided the work can be split into independent pieces. This leads to faster results and increased productivity.

2. Resource Utilization: Parallel processing makes better use of computing resources by keeping several cores busy instead of just one. It lets users take advantage of the multi-core processors that are standard in today’s computing systems.

3. Scalability: With parallel processing, users can easily scale their computations to handle larger datasets or more complex algorithms. This flexibility allows for efficient computation on both small and large-scale problems.

Different Approaches to Parallel Processing

There are various approaches to implementing parallel processing, each with its own characteristics and use cases. Some popular approaches include:

1. Multithreading: Multithreading enables concurrent execution of multiple threads within a single process. Each thread shares the same memory space, allowing for efficient communication and synchronization between threads. However, due to the Global Interpreter Lock (GIL) in CPython, multithreading may not provide true parallelism for CPU-bound tasks.

2. Multiprocessing: Multiprocessing involves running multiple processes concurrently, each with its own memory space. This approach allows for true parallelism on multi-core systems, because the operating system can run each process on a separate core. However, communication between processes can be more complex and may require explicit message passing.

3. Distributed Computing: Distributed computing involves executing tasks across multiple machines connected over a network. This approach is suitable for large-scale computations that cannot be efficiently handled by a single machine. It requires additional infrastructure and coordination mechanisms, such as message passing or distributed file systems.
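To illustrate the shared-memory point about multithreading, here is a minimal sketch using the standard `threading` module. The counter, the number of threads, and the iteration count are arbitrary values chosen for the example. Because every thread sees the same object, each update is protected with a lock to avoid a race condition.

```python
import threading

# Shared state: all threads see this same dictionary because threads share memory.
counter = {"value": 0}
lock = threading.Lock()

def increment(times):
    """Add 1 to the shared counter `times` times, holding the lock for each update."""
    for _ in range(times):
        # The read-modify-write below is not atomic; without the lock, two threads
        # could interleave here and lose updates.
        with lock:
            counter["value"] += 1

threads = [threading.Thread(target=increment, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()  # wait until every thread has finished

print(counter["value"])  # 40000
```

In CPython the GIL still lets only one of these threads run Python bytecode at a time, so the lock here is about correctness rather than speed.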

Parallel Processing in Jupyter Notebook

Jupyter Notebook is a powerful tool for interactive computing and data analysis. By default, a notebook’s kernel executes one cell at a time in a single process, which may not fully exploit the capabilities of modern multi-core processors. However, with the help of additional libraries, parallel processing can be integrated into a notebook workflow.

Implementing Parallel Processing in Jupyter Notebook

There are multiple ways to implement parallel processing in Jupyter Notebook, including:

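1. Run Multiple Jupyter Notebooks Sequentially: This approach runs several notebooks one after another, for example from a script. It is simple, but it is not parallel processing: it automates the order of execution rather than speeding it up. Running separate notebooks at the same time does give process-level parallelism, because each notebook has its own kernel process, but the notebooks cannot share variables or coordinate their work.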

2. IPython Parallel: IPython Parallel is a powerful library that enables parallel and distributed computing in Jupyter Notebook. It allows users to execute code across multiple engines (processes) in a coordinated manner. This approach provides true parallelism and supports both local and distributed execution.

3. Cell-Based Parallel Execution: IPython Parallel also provides magics, `%px` for a single line and `%%px` for a whole cell, that run code on all engines (or a chosen subset) at once. This lets users mark specific cells for parallel execution while the rest of the notebook runs normally in the local kernel, giving fine-grained control over parallelism within a notebook.

Challenges and Considerations in Parallel Processing

While parallel processing offers significant benefits, there are several challenges and considerations to keep in mind:

1. Parallelizability: Not all tasks are suitable for parallel processing. Some algorithms have inherent dependencies or a sequential structure, which makes them difficult to parallelize efficiently. It is crucial to analyze the problem and estimate the potential benefit of parallel processing before implementing it.

2. Synchronization and Communication: When executing tasks in parallel, it is important to properly synchronize and communicate between processes or threads to ensure correct results. This can involve complexities such as race conditions, deadlocks, or managing shared resources.

3. Overhead: Parallel processing introduces additional overhead due to communication, synchronization, and coordination. It is essential to carefully evaluate the trade-off between computation speedup and the overhead introduced by parallel execution.
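A common way to estimate the potential benefit before implementing anything is Amdahl's law: if a fraction p of a program's runtime can be parallelized across n workers, the speedup is at most 1 / ((1 - p) + p / n). The function below is a minimal sketch of that formula; it deliberately ignores the overhead described above, so measured speedups will be lower.

```python
def amdahl_speedup(parallel_fraction, workers):
    """Upper bound on speedup according to Amdahl's law.

    parallel_fraction: share of the serial runtime that can run in parallel (0..1).
    workers: number of cores or processes used for the parallel part.
    Overhead (process startup, data transfer, synchronization) is ignored.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)

# How the bound grows (and flattens) as workers are added, for 90% parallel work.
for n in (1, 4, 16, 64):
    print(n, round(amdahl_speedup(0.9, n), 2))
```

For example, with 90% parallelizable work, 4 cores give at most about 3.08x, and no number of cores can exceed 1 / 0.1 = 10x, because the serial 10% always remains.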

Examples and Use Cases of Parallel Processing in Jupyter Notebook

1. Run Multiple Jupyter Notebooks Sequentially: This approach can be useful when you want to execute multiple notebooks with different dependencies or parameters but do not require true parallelism. For example, you can run several notebooks in a loop to process different subsets of a large dataset.

2. IPython Parallel Jupyter Example: IPython Parallel provides a straightforward way to distribute computations across multiple engines. It is well suited to tasks that can be easily parallelized, such as embarrassingly parallel problems or parameter sweeps. For such workloads, IPython Parallel can keep all available cores busy and achieve substantial speedups.

3. Jupyter Multithreading: Threads work normally in a notebook, but the standard Python kernel runs CPython, so threads are subject to the GIL and do not speed up CPU-bound pure-Python code. You can still use multithreading for I/O-bound tasks, such as downloading files or querying databases, or to keep an interactive session responsive. For CPU-bound tasks, multiprocessing or distributed computing is usually more effective.

4. Can I Run Two Jupyter Notebooks at the Same Time? Yes. Each open notebook has its own kernel process, so several notebooks can run simultaneously, and the operating system can schedule them on different cores. However, the notebooks do not share variables or coordinate with each other; splitting a single computation across many workers requires an approach such as IPython Parallel or distributed computing.

5. Python Parallel Processing: In addition to Jupyter Notebook-specific approaches, Python offers various libraries and frameworks for parallel processing, such as multiprocessing, concurrent.futures, or Dask. These tools can be used in conjunction with Jupyter Notebook to achieve parallelism in a broader context.
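As a minimal sketch of the multithreading point above, the code below uses `concurrent.futures.ThreadPoolExecutor` for I/O-bound work and runs unchanged in a notebook cell. `fake_download` is a hypothetical stand-in that sleeps instead of making a real network request; sleeping, like waiting on a socket, releases the GIL, so the threads' waits overlap.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def fake_download(url):
    """Hypothetical I/O-bound task: sleep to simulate waiting on the network."""
    time.sleep(0.2)
    return f"contents of {url}"

# Placeholder URLs; nothing is actually fetched.
urls = [f"https://example.com/page/{i}" for i in range(8)]

start = time.perf_counter()
with ThreadPoolExecutor(max_workers=8) as executor:
    # map() returns the results in the same order as the inputs.
    pages = list(executor.map(fake_download, urls))
elapsed = time.perf_counter() - start

# One after another this would take about 8 * 0.2 = 1.6 s; with 8 threads the
# waits overlap, so it takes roughly 0.2 s plus a little thread overhead.
print(f"{len(pages)} pages in {elapsed:.2f} s")
```

Replacing `fake_download` with a CPU-bound pure-Python function would remove most of the gain, because of the GIL.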

Conclusion

Parallel processing is a powerful technique for improving performance and efficiency in data analysis, computations, and simulations. In Jupyter Notebook, various approaches and tools can be employed to put it to work. From scripting the execution of several notebooks to distributing work with IPython Parallel, these methods help users organize their workflows and make use of modern multi-core processors. However, it is essential to carefully consider the challenges and trade-offs associated with parallel execution to ensure optimal results.


Can I Run 2 Jupyter Notebooks At The Same Time?

Jupyter notebooks have become an essential tool for many data scientists, analysts, and programmers due to their versatility and interactivity. These notebooks allow you to code, execute, and visualize your work all in one place. However, a common question that arises is whether it’s possible to run multiple Jupyter notebooks simultaneously.

The answer is yes, you can indeed run multiple Jupyter notebooks at the same time. But before diving into the specifics of running multiple notebooks, let’s understand what a Jupyter notebook is and how it functions.

What is a Jupyter notebook?

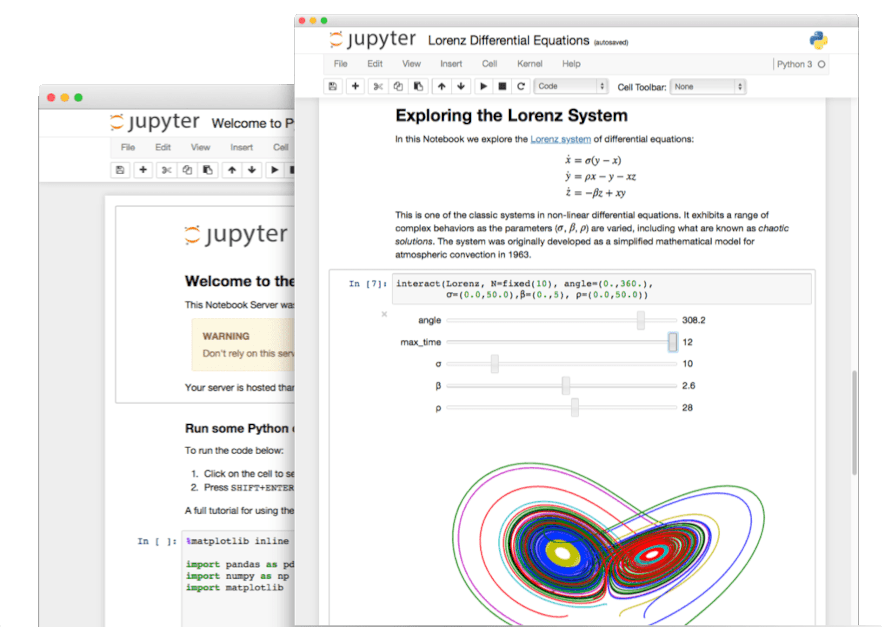

Jupyter notebook is an open-source web application that enables you to create and share documents that contain live code, equations, visualizations, and explanatory text. It provides an interactive computing environment where you can write and execute code in various programming languages such as Python, R, and Julia.

Jupyter notebooks are organized into individual cells, allowing you to break your code into manageable sections. Each cell can be executed independently, making it easy to test and tweak code snippets.

Running multiple Jupyter notebooks

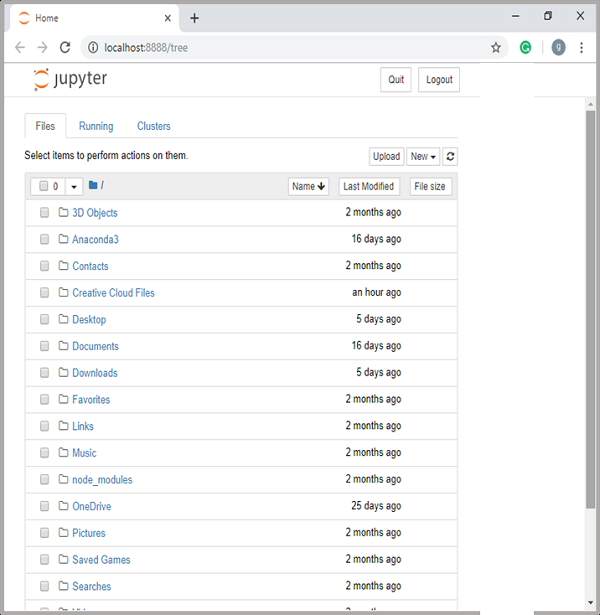

To run multiple Jupyter notebooks simultaneously, you have a few options depending on your specific requirements and preferences.

1. Using multiple browser tabs: The simplest method to run multiple notebooks is by opening each notebook in a separate browser tab. You can create a new tab by right-clicking on the notebook file and selecting “Open link in new tab.” This way, you can have multiple notebooks running independently and switch between them easily.

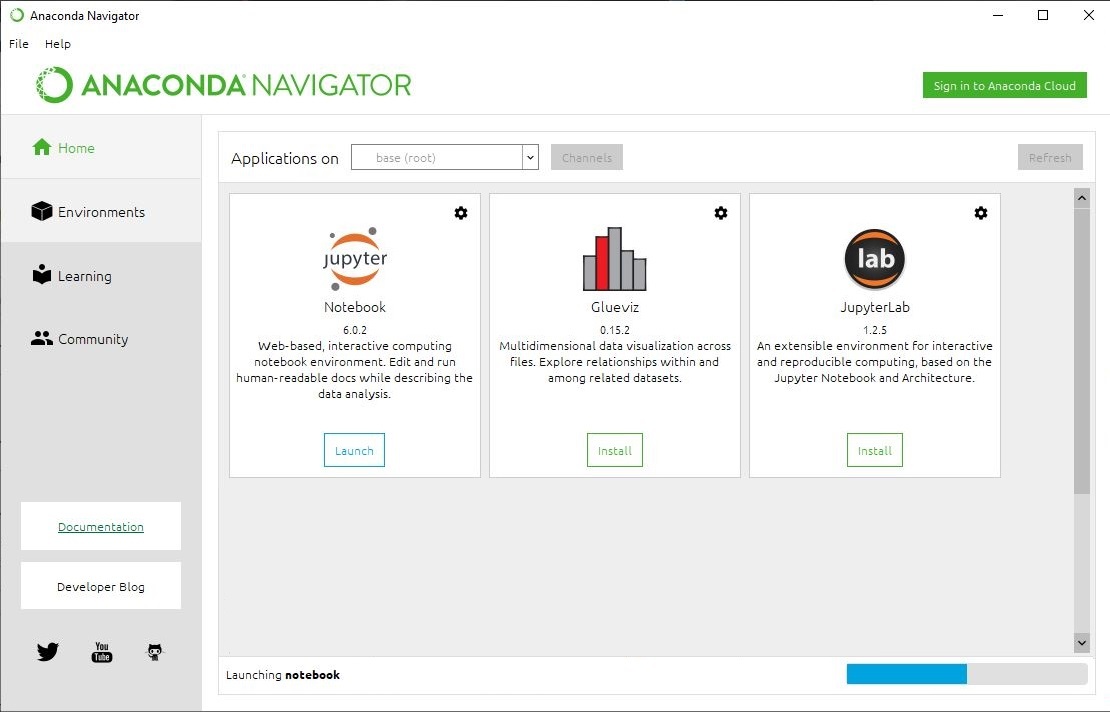

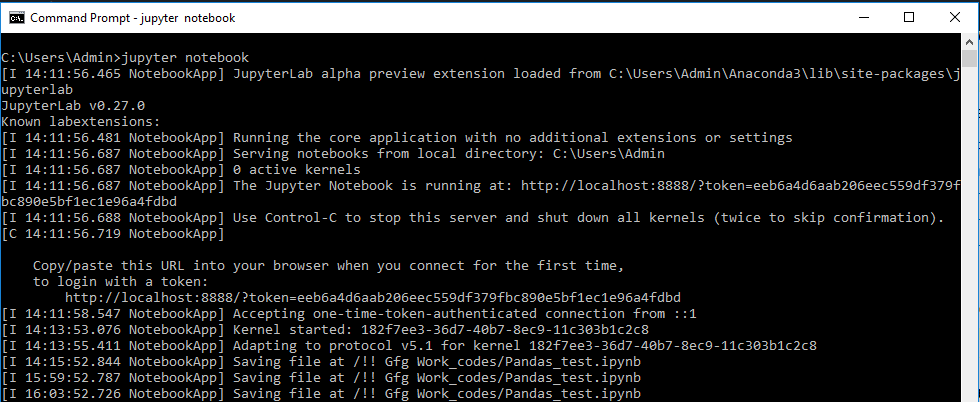

2. Using multiple instances of Jupyter: Another approach is to run multiple instances of the Jupyter notebook server. By default, Jupyter notebooks run on a local server accessible through your web browser. To launch additional instances, open multiple terminal or command prompt windows and run the command `jupyter notebook` in each one. If the default port (8888) is already taken, each new server picks the next free port automatically, or you can choose one yourself with `jupyter notebook --port 8890`. In most cases a single server is enough, since it can run many notebooks at once, each with its own kernel.

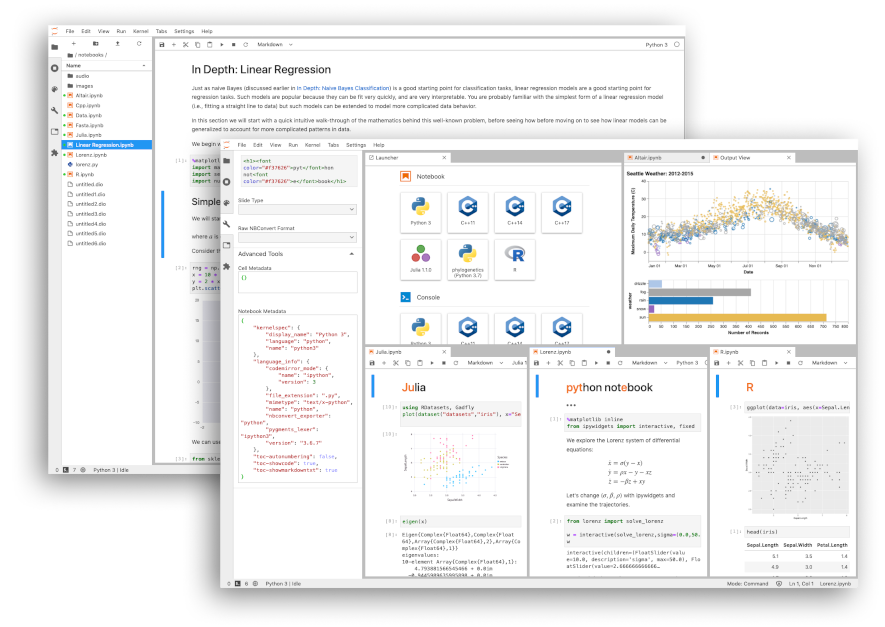

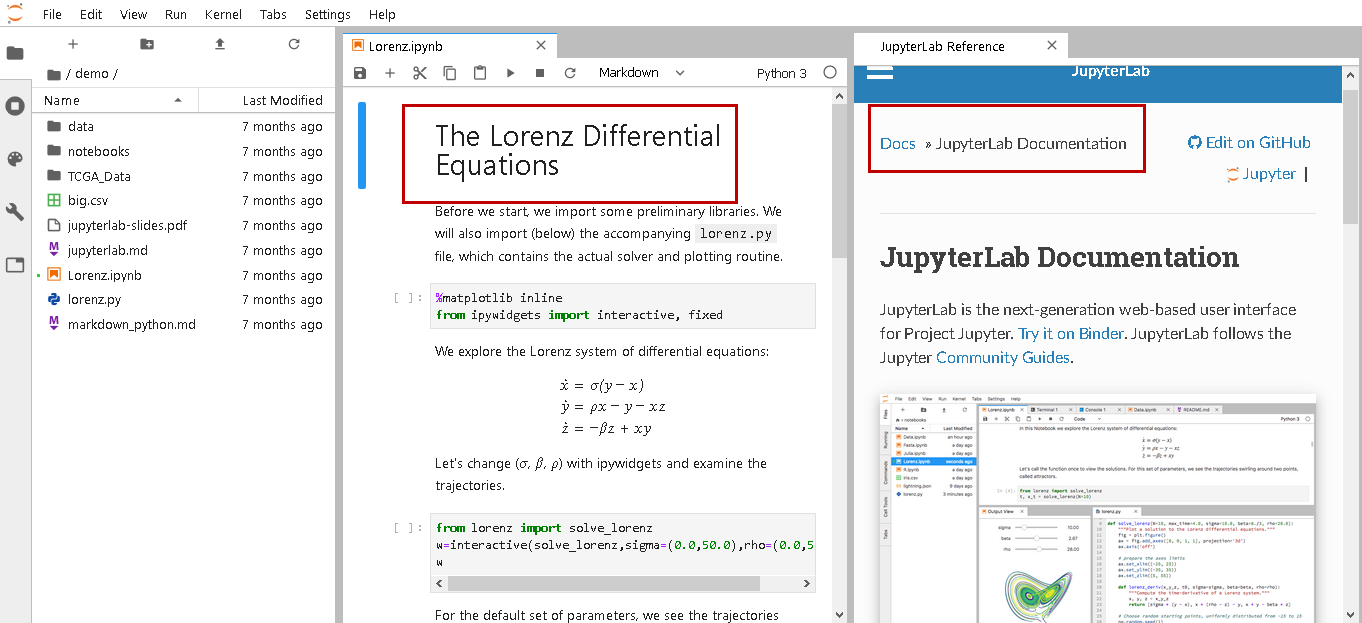

3. Using JupyterLab: JupyterLab is a more flexible, feature-rich interface for working with Jupyter notebooks. It lets you arrange notebooks, code consoles, terminals, and output in tabs and split panels, so you can open and work on multiple notebooks side by side.

Frequently Asked Questions (FAQs):

Q1. Can I run Jupyter notebooks on a remote server?

Yes, Jupyter notebooks can be run on a remote server. You can connect to a remote server using SSH and launch Jupyter notebooks there. This is particularly useful when you want to utilize the computing resources of a more powerful server or collaborate with others on the same notebooks.

Q2. Can I share my Jupyter notebook with others while running it?

You can share a notebook in several ways, but sharing a live session takes extra tooling. From the File menu you can download or export the notebook in formats such as HTML or PDF and send the file, or you can share the .ipynb file itself. For real-time collaboration on a running notebook, JupyterLab supports collaborative editing through the jupyter-collaboration extension.

Q3. Will running multiple Jupyter notebooks simultaneously affect performance?

Running multiple Jupyter notebooks simultaneously may impact performance if your computer’s resources are limited. Each notebook instance consumes memory and processing power, so running too many notebooks or notebooks that require significant computational resources might slow down your system. However, with modern hardware, running a few notebooks simultaneously shouldn’t pose a problem for most users.

Q4. Can I run Jupyter notebooks on multiple machines simultaneously?

Yes, you can run Jupyter notebooks on multiple machines simultaneously. This can be achieved by launching Jupyter notebooks on each machine separately and accessing them from different web browsers. However, keep in mind that the notebooks on different machines won’t be synchronized automatically unless you’re using a version control system or a networked file system.

Q5. Is there a limit to the number of notebooks I can run at the same time?

There is no inherent limit to the number of Jupyter notebooks you can run simultaneously. However, the limit may be imposed by your computer’s resources, such as memory or processing power. Running a large number of notebooks might lead to performance degradation, so it’s important to monitor resource usage and adjust accordingly.

In conclusion, running multiple Jupyter notebooks simultaneously is not only possible but also a powerful way to work on multiple coding tasks or analyses concurrently. Whether you choose to open multiple browser tabs, launch multiple Jupyter instances, or utilize JupyterLab’s enhanced features, the ability to run multiple notebooks empowers you to be more efficient and productive in your data exploration and analysis endeavors.

Is Python Good For Parallel Processing?

Python is a versatile and powerful programming language that is widely used across various industries and domains. With its simplicity, readability, and rich ecosystem of libraries and frameworks, Python has become a popular choice for many developers. However, when it comes to parallel processing, there are questions about the efficiency and capability of Python. In this article, we will explore the parallel processing capabilities of Python and discuss whether it is a good choice for parallel computing tasks.

Parallel processing is the simultaneous execution of multiple tasks, typically obtained by dividing a large job into smaller subtasks that can run at the same time. This approach allows for faster and more efficient execution of computationally intensive tasks, making it an appealing option for applications that require high-performance computing. Python, being an interpreted language, may not seem like an obvious choice for parallel processing due to concerns about performance and the Global Interpreter Lock (GIL).

The Global Interpreter Lock is a mechanism in the CPython interpreter (the standard Python implementation) that allows only one thread to execute Python bytecode at a time. The lock protects the interpreter’s internal state, such as reference counts, which simplifies memory management, but it also means that threads cannot run Python code on several cores simultaneously. As a result, multithreading does not speed up CPU-bound pure-Python code, although threads remain useful for I/O-bound work, because blocking I/O releases the GIL.

Nevertheless, Python offers several alternatives for achieving parallelism, each suited for different use cases. One of the most popular options in Python for parallel processing is the multiprocessing module. This module provides an easy-to-use interface for parallel programming by spawning multiple processes, each with its own Python interpreter. With multiprocessing, Python can effectively leverage multiple cores and make use of all available resources on a machine.

The multiprocessing module sidesteps the GIL because each process has its own interpreter, its own memory space, and therefore its own GIL. This makes it suitable for CPU-bound tasks, where running multiple processes in parallel can significantly improve performance. The trade-off is that arguments and results must be serialized (pickled) and copied between processes, so it works best when each task does substantial work relative to the data it transfers.
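A minimal `multiprocessing.Pool` sketch for a CPU-bound task follows; `count_primes` and `split_range` are illustrative helpers written for this example. Two general caveats apply: with the `spawn` start method (the default on Windows and macOS), child processes re-import the main module, so the pool is created under `if __name__ == "__main__":`; and in a notebook, the worker function should live in an importable `.py` file rather than in a cell, because spawned children cannot see functions defined only in the notebook.

```python
from multiprocessing import Pool

def count_primes(lo, hi):
    """Count the primes in [lo, hi) by trial division (deliberately CPU-bound)."""
    count = 0
    for n in range(max(lo, 2), hi):
        # n is prime if no d in 2..sqrt(n) divides it evenly.
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count

def split_range(n, parts):
    """Split [0, n) into at most `parts` contiguous (lo, hi) chunks."""
    step = max(1, -(-n // parts))  # ceiling division, at least 1
    return [(lo, min(lo + step, n)) for lo in range(0, n, step)]

if __name__ == "__main__":
    chunks = split_range(100_000, 4)
    # Each chunk is processed by a separate worker process with its own
    # interpreter and GIL, so the chunks can run on different cores.
    with Pool(processes=4) as pool:
        partial_counts = pool.starmap(count_primes, chunks)
    print(sum(partial_counts))
```

Chunks near the top of the range take longer, so splitting the work into more chunks than processes (and letting the pool hand them out) often balances the load better.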

In addition to the multiprocessing module, Python also offers other parallel programming libraries such as concurrent.futures, joblib, and dask. These libraries provide higher-level abstractions and more advanced features for parallel computing. For instance, concurrent.futures provides a high-level interface for submitting tasks asynchronously and processing results as they become available. Joblib, on the other hand, focuses on task-based parallelism and provides efficient memory management for parallel computations. Dask extends Python’s parallel computing capabilities further, processing larger-than-memory datasets on a single machine and scaling out across multiple machines.
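The concurrent.futures pattern of submitting tasks and handling results as they finish can be sketched with `submit()` and `as_completed()`. This sketch uses `ThreadPoolExecutor` with a hypothetical `simulated_task` that sleeps for different lengths of time; for CPU-bound functions, `ProcessPoolExecutor` exposes the same interface, subject to the same main-module requirements as `multiprocessing`.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed

def simulated_task(task_id, seconds):
    """Hypothetical task: wait `seconds`, then return its own id."""
    time.sleep(seconds)
    return task_id

# Task ids mapped to how long each one takes (illustrative values).
durations = {"a": 0.3, "b": 0.1, "c": 0.2}

finished_order = []
with ThreadPoolExecutor(max_workers=3) as executor:
    # submit() schedules each call right away and returns a Future for it.
    futures = [executor.submit(simulated_task, task_id, secs)
               for task_id, secs in durations.items()]
    # as_completed() yields each Future as soon as its task finishes,
    # independent of the order in which the tasks were submitted.
    for future in as_completed(futures):
        finished_order.append(future.result())

print(finished_order)
```

Because the waits overlap, the shorter tasks normally finish first, so the printed order usually differs from the submission order a, b, c.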

Python’s ecosystem also includes specialized libraries such as NumPy, SciPy, and pandas, which are extensively used in scientific computing and data analysis. Their heavy computations run in compiled code, and some operations, such as NumPy matrix multiplication backed by a multithreaded BLAS library, already use multiple cores without any explicit parallel code. Moreover, Python integrates with lower-level languages like C and Fortran, combining the performance of compiled languages with the ease of use of Python.

Now let’s address some frequently asked questions about Python and parallel processing:

Q: Can Python achieve the same level of performance as lower-level languages like C or C++ for parallel processing tasks?

A: While Python may not match the raw performance of lower-level languages, its ease of use and extensive libraries make it a compelling choice for many applications. By leveraging specialized libraries and interfacing with compiled languages, Python can achieve high performance for parallel processing tasks.

Q: Is Python suitable for embarrassingly parallel tasks?

A: Yes, Python is particularly well-suited for embarrassingly parallel tasks where minimal communication or synchronization is required between processes. The multiprocessing and concurrent.futures modules in Python provide simple and efficient ways to handle such tasks.

Q: Are there any limitations in using Python for distributed parallel processing?

A: Python can handle distributed parallel processing tasks, but it may not be the most efficient option in terms of communication overhead and scalability. Libraries like Dask and PySpark can help overcome some of these limitations by providing distributed computing capabilities.

In conclusion, while Python may have some limitations due to the Global Interpreter Lock, it offers several alternatives and libraries that can effectively harness parallel processing. The multiprocessing module, along with other parallel programming libraries, provides the means to achieve true parallelism and leverage the power of multiple cores. With its simplicity, extensive ecosystem, and strong integration capabilities with other languages, Python remains a good choice for parallel processing in many use cases.


Run Multiple Jupyter Notebook Sequentially

Jupyter Notebook is a popular open-source web application that allows you to create and share documents that contain live code, equations, visualizations, and narrative text. It is extensively used by data scientists, researchers, and developers to perform data analysis, create interactive data visualizations, and experiment with machine learning models.

While working on complex projects or analyzing large datasets, you might find yourself needing to run multiple Jupyter notebooks sequentially. Running notebooks sequentially can help ensure that the notebooks are executed in the desired order and that the output from one notebook is available as input to another. In this article, we will explore how to run multiple Jupyter notebooks sequentially, step by step.

Step 1: Organizing the Notebooks

Before we start running notebooks sequentially, it’s important to organize them properly. Ensure that the notebooks are named in a way that represents their execution order. For example, you can prefix the notebooks with numbers (e.g., 01_data_preprocessing.ipynb, 02_feature_extraction.ipynb, 03_model_training.ipynb).
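If the notebooks follow a numbered naming scheme, a script can discover them instead of hard-coding the list. The sketch below is one possible approach; `order_notebooks` and `find_notebooks` are illustrative names, not part of Jupyter. It sorts by the numeric prefix as an integer, so `2_features.ipynb` correctly comes before `10_report.ipynb`, which a plain alphabetical sort would reverse.

```python
import re
from pathlib import Path

# A numbered notebook: digits, an underscore, any name, then ".ipynb".
NUMBERED_NOTEBOOK = re.compile(r"^(\d+)_.*\.ipynb$")

def order_notebooks(names):
    """Keep only numbered notebooks and sort them by their numeric prefix."""
    numbered = []
    for name in names:
        match = NUMBERED_NOTEBOOK.match(name)
        if match:
            numbered.append((int(match.group(1)), name))
    return [name for _, name in sorted(numbered)]

def find_notebooks(directory="."):
    """List the numbered notebooks in `directory` in execution order."""
    return order_notebooks(path.name for path in Path(directory).glob("*.ipynb"))

print(order_notebooks(["10_report.ipynb", "2_features.ipynb", "01_data.ipynb", "notes.ipynb"]))
# ['01_data.ipynb', '2_features.ipynb', '10_report.ipynb']
```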

Step 2: Using a Shell Script

One way to run multiple Jupyter notebooks sequentially is by using a shell script. This method requires some basic knowledge of shell scripting. Here’s a step-by-step guide:

1. Open a text editor and create a new file. Save it with a .sh extension (e.g., run_notebooks.sh).

2. In the script file, use the jupyter nbconvert command to execute the notebooks. Each command should be followed by a notebook file name.

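```
#!/usr/bin/env bash
# Stop as soon as one notebook fails, so later notebooks never run on missing inputs.
set -e

jupyter nbconvert --to notebook --execute notebook1.ipynb
jupyter nbconvert --to notebook --execute notebook2.ipynb
jupyter nbconvert --to notebook --execute notebook3.ipynb
```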

3. Save the script file and close the text editor.

4. Open the terminal or command prompt and navigate to the directory where the script file is saved.

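5. Make the script executable (this is needed only once), then run it:

```
chmod +x run_notebooks.sh
./run_notebooks.sh
```

This executes the notebooks one by one, in the order listed. By default, `jupyter nbconvert --to notebook --execute` saves each executed copy, including its outputs, as a new file (for example, notebook1.nbconvert.ipynb); add `--inplace` to overwrite the original notebooks instead.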

Step 3: Using a Python Script

Another approach to run Jupyter notebooks sequentially is by using a Python script. This method requires the nbconvert library (which also installs nbformat) and a Jupyter kernel such as ipykernel. Follow these steps:

1. Open a text editor and create a new file. Save it with a .py extension (e.g., run_notebooks.py).

2. Import the necessary libraries:

```python
import nbformat
from nbconvert.preprocessors import ExecutePreprocessor
```

3. Create a list of notebook file names in the desired execution order:

```python
notebooks = ['notebook1.ipynb', 'notebook2.ipynb', 'notebook3.ipynb']
```

4. Define a function to execute a notebook:

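```python
def run_notebook(notebook):
    # Read the notebook file as an nbformat version 4 object.
    with open(notebook, encoding="utf-8") as file:
        notebook_content = nbformat.read(file, as_version=4)
    # Execute every cell in order; a single cell running longer than 600 s raises an error.
    ep = ExecutePreprocessor(timeout=600)
    ep.preprocess(notebook_content)
```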

5. Iterate over the list of notebook file names and execute each notebook:

```python
for notebook in notebooks:
    run_notebook(notebook)
```

6. Save the script file and close the text editor.

7. Open the terminal or command prompt and navigate to the directory where the script file is saved.

8. Run the script by executing the following command:

```
python run_notebooks.py
```

This will execute the notebooks in sequential order one by one.

Frequently Asked Questions (FAQs):

Q: Can I customize the execution order of notebooks?

A: Yes, you can arrange the order of notebooks in the shell script or Python script. Ensure that you update the script accordingly.

Q: How can I check the output or errors generated during execution?

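A: Errors are reported in the terminal or command prompt window: if a cell raises an exception, execution stops and the traceback is printed. Regular cell output is not printed there; `jupyter nbconvert` saves it in the executed notebook file, while the Python script keeps it only in the in-memory notebook object unless you write that object back to disk with `nbformat.write`.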

Q: Do I need to save the notebooks before running them sequentially?

A: Yes. Both methods read the .ipynb files from disk, so unsaved edits in a notebook that is open in the browser are not included in the execution.

Q: Is it possible to pass variables or data between notebooks?

A: Yes, you can pass variables or data between notebooks by saving them in files or by using libraries like pandas or pickle for serialization.
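A minimal sketch of the file-based handoff with the standard-library `pickle` module: the first notebook writes its result to disk and the next one reads it back. The file name `features.pkl` and the data are hypothetical placeholders, and the temporary directory is used only to keep the example self-contained. Only unpickle files you trust, because loading a pickle can execute arbitrary code.

```python
import pickle
import tempfile
from pathlib import Path

# Hypothetical shared location; in a real project this would be a path inside
# the project folder that both notebooks can reach.
HANDOFF_FILE = Path(tempfile.gettempdir()) / "features.pkl"

# --- In 01_data_preprocessing.ipynb: save the result for the next notebook ---
features = {"ids": [1, 2, 3], "scores": [0.5, 0.75, 0.25]}
with open(HANDOFF_FILE, "wb") as f:
    pickle.dump(features, f)

# --- In 02_feature_extraction.ipynb: load what the previous notebook saved ---
with open(HANDOFF_FILE, "rb") as f:
    loaded = pickle.load(f)

print(loaded == features)  # True
```

For DataFrames, pandas offers the equivalent `DataFrame.to_pickle` and `pandas.read_pickle`, as well as portable formats such as CSV or Parquet.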

Q: Can I run Jupyter notebooks sequentially in JupyterLab?

A: Yes, JupyterLab also allows you to run notebooks sequentially by using the same methods mentioned in this article. Simply open a terminal within JupyterLab and follow the steps accordingly.

In conclusion, running multiple Jupyter notebooks sequentially can be achieved using shell scripts or Python scripts. By organizing the notebooks in the desired order and executing them sequentially, you can effectively manage complex projects and ensure the flow of data between notebooks. Consider the use of shell scripts or Python scripts based on your familiarity with scripting languages.

Ipyparallel Jupyter Notebook

Introduction:

In the field of data analysis and scientific computing, the ability to efficiently process large datasets and perform complex computations is of utmost importance. Traditional serial processing can be time-consuming and inefficient, leading to considerable delays in achieving desired results. Parallel computing can address many of these challenges. One tool that brings parallel computing into Jupyter is IPython Parallel, distributed as the ipyparallel package. In this article, we will explore the capabilities and benefits of using IPyparallel in Jupyter Notebook and look at its functionality in depth.

IPyparallel Jupyter Notebook: A Brief Overview:

IPyparallel is a parallel computing library for Python, designed to enable efficient distribution of computational tasks across multiple processors or computing nodes. It allows users to harness the power of parallel computing with ease and convenience, utilizing the familiar and user-friendly Jupyter Notebook interface.

IPyparallel follows a client-server architecture, where a single IPython controller acts as the centralized connection point for distributing tasks among multiple engines or processing nodes. The engines can be located on the same machine or spread across a network of machines, providing users with flexibility in scaling up their computing resources.

Key Features of IPyparallel Jupyter Notebook:

1. Seamless Integration with Jupyter Notebook: IPyparallel integrates seamlessly with the popular Jupyter Notebook, enabling users to leverage the interactive and intuitive interface for writing, executing, and visualizing parallel computations.

2. Easy Task Distribution: IPyparallel provides a high-level interface for distributing tasks. Functions can be parallelized with methods such as `map` or with decorators (for example, `@view.parallel` and `@view.remote`), and a load-balanced view assigns tasks to whichever engines are free.

3. Load Balancing: A load-balanced view hands each task to an available engine according to a configurable scheduling scheme, so faster or less busy engines receive more work. This keeps computing resources busy and can reduce overall processing time, especially when task durations vary.

4. Fault Tolerance: If an engine or computing node fails, the tasks assigned to it raise an error that the client can catch, while the remaining engines keep working. The load-balanced scheduler can also be configured to retry failed tasks, which limits the impact of individual failures on the overall computation.

5. Interactive Parallel Computing: IPyparallel enables users to perform interactive parallel computing in real-time, allowing for quick iterations and adjustments during computation. Additionally, it provides functionality for viewing progress and results as they are generated.

6. Extensibility: IPyparallel is highly extensible, allowing users to tailor the parallel computing environment to their specific requirements. It provides support for third-party packages and custom libraries, enhancing the flexibility and versatility of parallel computations.

7. Code Reusability: With IPyparallel, existing code can often be parallelized with modest modifications, typically by wrapping the independent part of the work in a function and mapping it over the inputs. This makes it a practical way to adapt sequential code to parallel execution without a complete rewrite.

Frequently Asked Questions (FAQs):

Q1. Is IPyparallel limited to a certain number of engines or nodes?

A: IPyparallel has no fixed limit on the number of engines or nodes. It scales by distributing tasks across multiple engines, either on a single machine or across a network of machines; in practice, the number is limited by the available hardware and by communication overhead.

Q2. Can IPyparallel handle large datasets efficiently?

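A: IPyparallel can help with large datasets, but data has to be serialized and sent to the engines, which takes time. Methods such as `scatter` and `map` on a direct view split a sequence into chunks and distribute them across engines, so you do not have to partition the data by hand. For very large datasets, it is often more efficient for each engine to load its own portion from shared storage.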

Q3. Does IPyparallel support different operating systems?

A: Yes, IPyparallel supports multiple operating systems, including Windows, macOS, and Linux distributions. This cross-platform compatibility ensures its accessibility to a wide range of users.

Q4. How does IPyparallel compare to other parallel computing libraries?

A: IPyparallel stands out for its seamless integration with the Jupyter Notebook interface, as well as its user-friendly APIs and load balancing algorithms. This makes it a popular choice among data scientists and researchers who prefer working in a familiar and interactive environment.

Q5. Can IPyparallel be used for distributed computing in a cluster environment?

A: Yes, IPyparallel is well-suited for distributed computing in a cluster environment. By configuring the IPython controller to interact with engines across different machines, users can harness the collective computational power of multiple nodes.

Conclusion:

IPyparallel brings parallel computing into Jupyter Notebook by combining the notebook’s interactivity with the distribution and execution of tasks across multiple engines and computing nodes. Its Jupyter integration, load balancing, error handling, and extensibility make it a versatile option for tackling complex computational problems. With IPyparallel, users can explore parallel computing without giving up the usability of the notebook interface.

Jupyter Notebook: Run Cells In Parallel

Jupyter Notebook is a popular web-based interactive computational environment that allows users to create and share data-driven documents. Through its kernels it supports many programming languages, providing a flexible platform for data science, machine learning, and scientific computing tasks. A notebook’s kernel executes one cell at a time, but the code inside a cell can itself run in parallel, which can speed up computations and enhance productivity.

Parallel execution is particularly useful when dealing with computationally intensive tasks, large datasets, or complex simulations. Instead of processing every item one after the other, the code in a cell splits the work into independent pieces and executes them concurrently on the available resources, such as multiple CPU cores or even a distributed computing cluster, which shortens the execution time.

How to Run Cells in Parallel in Jupyter Notebook?

Jupyter Notebook itself does not run cells in parallel, but several libraries make parallel execution available from inside a notebook. Let’s explore a few popular ones:

1. IPython Parallel (ipyparallel): IPython Parallel, formerly `IPython.parallel`, is a library for parallel and distributed computing in Jupyter Notebook. It lets you execute code across multiple engines, either on a single machine or across a cluster. The `%px` line magic and `%%px` cell magic run a line or a whole cell on the engines, and the `--targets` option selects which engines should execute it.

2. Dask: Dask is a flexible parallel computing library that integrates seamlessly with Jupyter Notebook. It provides a high-level interface for creating parallelizable computations and leverages existing libraries like NumPy, Pandas, and Scikit-learn. With Dask, you can parallelize your code by utilizing task scheduling and lazy evaluation.

3. Joblib: Joblib is a lightweight library that allows easy parallelization of CPU-bound tasks in Jupyter Notebook. It provides a simple interface to execute functions in parallel, either using multiple cores or distributed computing. By using the `Parallel` class, you can parallelize your code and speed up its execution time.

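4. Multiprocessing: Python’s built-in `multiprocessing` module is another option. It lets you spawn multiple processes for concurrent execution. With the `Process` class (or the higher-level `Pool`), a function that performs the task runs in several processes at once, which can use all of your computer’s CPU cores. In a notebook, the worker function usually needs to be defined in an importable `.py` file, because child processes started with the `spawn` method (the default on Windows and macOS) cannot see functions defined only in notebook cells.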
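A minimal sketch of the `Process` class: the work is split into chunks, each chunk runs in its own `multiprocessing.Process`, and the results come back through a `multiprocessing.Queue`. The helper names (`partial_sum_of_squares`, `chunk_ranges`, `worker`, `parallel_sum_of_squares`) are illustrative. The `if __name__ == "__main__":` guard keeps spawned children from starting processes of their own when they re-import the module.

```python
import multiprocessing as mp

def partial_sum_of_squares(start, stop):
    """Pure computation for one chunk: the sum of i * i for i in [start, stop)."""
    return sum(i * i for i in range(start, stop))

def chunk_ranges(n, workers):
    """Split [0, n) into at most `workers` contiguous (start, stop) ranges."""
    size = max(1, -(-n // workers))  # ceiling division, at least 1
    return [(lo, min(lo + size, n)) for lo in range(0, n, size)]

def worker(start, stop, results):
    """Process target: compute one chunk and send the result to the parent."""
    results.put(partial_sum_of_squares(start, stop))

def parallel_sum_of_squares(n, workers=4):
    results = mp.Queue()
    processes = [mp.Process(target=worker, args=(lo, hi, results))
                 for lo, hi in chunk_ranges(n, workers)]
    for p in processes:
        p.start()
    # Collect one result per process before join(), so no child blocks on a full queue.
    total = sum(results.get() for _ in processes)
    for p in processes:
        p.join()
    return total

if __name__ == "__main__":
    print(parallel_sum_of_squares(1_000_000))
```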

Frequently Asked Questions (FAQs):

Q: Why should I run cells in parallel?

A: Running cells in parallel can significantly speed up the execution time of computationally intensive tasks, allowing you to complete your analysis or simulations much faster. It also enables you to utilize available computational resources effectively and enhances your productivity.

Q: Are there any limitations to running cells in parallel?

A: Yes, there are a few limitations to consider. First, not all tasks are parallelizable. Some operations inherently require sequential execution. Additionally, running cells in parallel may lead to synchronization or communication issues, especially in cases where cells depend on the results of previous cells.

Q: Is it possible to run cells in parallel across multiple machines?

A: Yes, it is possible to run cells in parallel across multiple machines by utilizing distributed computing frameworks such as Apache Spark or Dask. These frameworks allow you to create a cluster of machines and distribute the workload across them.

Q: Can I parallelize existing code in Jupyter Notebook?

A: Yes, you can parallelize existing code in Jupyter Notebook by identifying parts of your code that can run independently, such as loop iterations that do not depend on each other, and moving that work into a function. You can then use one of the parallel computing libraries or modules mentioned earlier to execute the function on many inputs concurrently.
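As a sketch of that refactoring, move the body of an independent loop into a function, then hand the function and the inputs to an executor's `map()`. `process_item` and the inputs are hypothetical placeholders. The helper takes the executor class as a parameter, so the same code can run with `ThreadPoolExecutor` for I/O-bound work or `ProcessPoolExecutor` for CPU-bound work (which, as with `multiprocessing`, needs the main-module guard and, in a notebook, an importable worker function).

```python
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor

def process_item(x):
    """Hypothetical loop body: a computation that depends only on its own input."""
    return x * x + 1

def run_sequential(items):
    # Original form: one iteration after another.
    return [process_item(x) for x in items]

def run_parallel(items, executor_cls=ProcessPoolExecutor, max_workers=4):
    # Parallel form: the executor calls process_item on each item, and map()
    # yields the results in input order, just like the loop above.
    with executor_cls(max_workers=max_workers) as executor:
        return list(executor.map(process_item, items))

if __name__ == "__main__":
    items = list(range(20))
    parallel = run_parallel(items)  # uses ProcessPoolExecutor by default
    # Same results, in the same order, as the original loop.
    assert parallel == run_sequential(items)
    print(parallel)
```

For many very small items, passing a larger `chunksize` to `ProcessPoolExecutor.map` reduces the per-item communication overhead.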

Q: How much speedup can I expect by running cells in parallel?

A: The speedup achieved by running cells in parallel depends on various factors such as the nature of your code, the number of available CPU cores, and the efficiency of parallelization. In some cases, significant speedup can be achieved, while in others, the performance gain may be limited.

In conclusion, running cells in parallel in Jupyter Notebook can greatly enhance your productivity and reduce the execution time of computationally intensive tasks. By leveraging parallel computing libraries and techniques, you can effectively utilize available computational resources and achieve faster results. However, it is important to carefully analyze your code and identify parallelizable parts to ensure optimal performance.

