Does Postgres Cache Queries

1. Introduction to query caching in Postgres

Query result caching means storing the output of a query so that a repeated call can be answered without re-executing it. PostgreSQL (Postgres) does not have a built-in query result cache. What it does cache is data: table and index pages are kept in memory so that repeated queries avoid disk reads, and prepared statements can reuse their execution plans. Understanding these mechanisms is the key to reducing query times and the load on the database server.

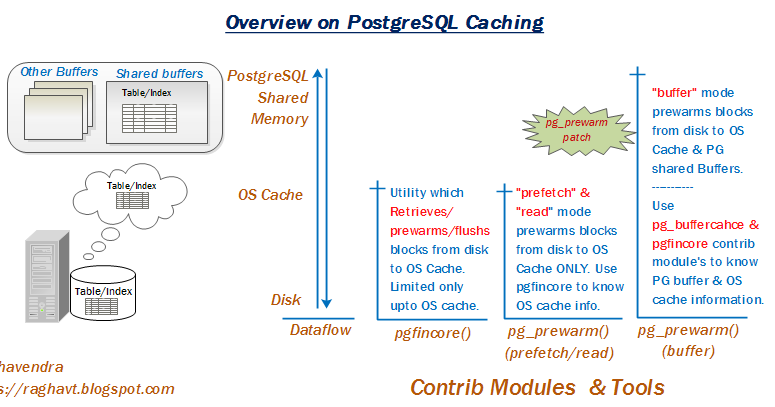

Postgres keeps its cache in a shared memory area called shared_buffers. This area holds data pages (blocks of tables and indexes, 8 kB each by default), not query results. Postgres also relies on the operating system's page cache, so a page that is missing from shared_buffers may still be read from memory rather than from disk.

2. Mechanics of query caching in Postgres

Postgres has no special name for this mechanism; it is simply the shared buffer cache. Whenever a query needs a page, the backend process builds a buffer tag that identifies the page (the relation, the fork, and the block number) and looks it up in a shared hash table that maps tags to buffer slots. The query text and its parameters play no part in this lookup.

If the page is found, it is a cache hit and the page is used directly from memory. If not, Postgres picks a victim buffer with a clock-sweep algorithm, which favors evicting pages with a low recent usage count, writes the victim out first if it is dirty, and reads the requested page into the freed slot. Every query is still parsed, planned, and executed in full; the cache only reduces the I/O cost of that execution.
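The shared buffer cache's lookup-and-evict cycle can be sketched as a toy model. This is a simplified illustration, not Postgres's actual buffer manager: a dictionary stands in for the buffer mapping table, pins, locks, and dirty-page writes are ignored, and the class and method names are hypothetical. The usage-count cap of 5 mirrors the limit Postgres applies in its clock sweep.

```python
from __future__ import annotations

from dataclasses import dataclass

MAX_USAGE = 5  # clock-sweep usage counts are capped (Postgres uses 5)


@dataclass
class Buffer:
    tag: tuple[str, int] | None = None  # (relation, block number) held in this slot
    usage: int = 0                      # bumped on access, decayed by the sweep


class BufferPool:
    """Toy shared buffer pool: tag lookup plus clock-sweep eviction."""

    def __init__(self, n_buffers: int):
        self.buffers = [Buffer() for _ in range(n_buffers)]
        self.lookup: dict[tuple[str, int], int] = {}  # stands in for the buffer mapping table
        self.hand = 0   # clock-sweep position
        self.hits = 0
        self.reads = 0  # misses: page requested from the OS page cache or disk

    def _find_victim(self) -> int:
        # Walk the ring, decrementing usage counts, until a slot with usage 0 turns up.
        while True:
            slot = self.hand
            self.hand = (self.hand + 1) % len(self.buffers)
            buf = self.buffers[slot]
            if buf.usage == 0:
                return slot
            buf.usage -= 1

    def read_page(self, relation: str, block: int) -> int:
        """Return the slot holding (relation, block), loading it on a miss."""
        tag = (relation, block)
        slot = self.lookup.get(tag)
        if slot is not None:
            self.hits += 1
        else:
            self.reads += 1
            slot = self._find_victim()
            old_tag = self.buffers[slot].tag
            if old_tag is not None:
                del self.lookup[old_tag]  # evict the previous page
            self.buffers[slot] = Buffer(tag=tag)
            self.lookup[tag] = slot
        buf = self.buffers[slot]
        buf.usage = min(buf.usage + 1, MAX_USAGE)
        return slot


pool = BufferPool(n_buffers=2)
for block in (0, 1, 0, 2, 0):
    pool.read_page("t", block)
print(pool.hits, pool.reads)  # 2 3
```

With only two slots, block 0 is touched often enough to survive the sweep, while the less recently used block 1 is evicted to make room for block 2.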

3. Configuring query caching in Postgres

Postgres provides several configuration parameters that affect caching. The most important is shared_buffers, which sets the amount of memory used for the shared buffer cache (typically 128MB by default). Increasing it lets more pages stay in memory and can improve performance, provided the system has enough RAM left for the operating system, its page cache, and per-query memory. Changing shared_buffers requires a server restart.

Two other parameters are relevant. effective_cache_size does not allocate any memory; it tells the planner how much memory is likely available for caching data (shared_buffers plus the OS page cache), and higher values make index scans look cheaper. work_mem sets how much memory each sort or hash operation may use before spilling to temporary files on disk, so a single complex query can use several multiples of it.

No setting is needed to turn caching on: the buffer cache is always active, and shared_buffers cannot be set to zero. The PostgreSQL documentation suggests 25% of system memory as a reasonable starting value on a dedicated database server with at least 1GB of RAM, leaving the rest for the OS page cache and other operations.
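The PostgreSQL documentation suggests about 25% of RAM as a starting value for shared_buffers on a dedicated server with 1GB or more. A small helper can turn that guideline into postgresql.conf lines. This is a minimal sketch: the function names are hypothetical, and the 75% figure for effective_cache_size is a commonly quoted rule of thumb assumed here, not a documented default. Treat the output as a first guess to refine by monitoring.

```python
from __future__ import annotations


def suggest_memory_settings(total_ram_mb: int) -> dict[str, str]:
    """Starting values for a dedicated database server (hypothetical helper)."""
    if total_ram_mb < 1024:
        # The documented 25% guideline is stated for servers with 1GB or more of RAM.
        raise ValueError("guideline applies to servers with at least 1GB of RAM")
    return {
        # About 25% of RAM for the shared buffer cache.
        "shared_buffers": f"{total_ram_mb // 4}MB",
        # Assumed rule of thumb: ~75% of RAM for shared_buffers plus the OS page cache.
        "effective_cache_size": f"{total_ram_mb * 3 // 4}MB",
    }


def to_conf(settings: dict[str, str]) -> str:
    """Render settings as postgresql.conf lines."""
    return "\n".join(f"{name} = '{value}'" for name, value in settings.items())


print(to_conf(suggest_memory_settings(16384)))
# shared_buffers = '4096MB'
# effective_cache_size = '12288MB'
```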

4. Understanding cache hit and miss in Postgres

Cache hit and miss ratios are the main metrics for judging how well the buffer cache serves a workload. A cache hit occurs when a requested page is already in shared_buffers, while a miss (counted as a "read") occurs when the page must be requested from the operating system, which may serve it from its own page cache or from disk.

A high hit ratio means most page requests are served from Postgres's own memory. A low ratio means pages are often read in; that is expected for large one-off scans, but for a frequently accessed working set it can indicate that shared_buffers is too small.

The hit ratio depends on the size of shared_buffers relative to the working set, on the access pattern (large sequential scans use a small ring of buffers so they do not flush the whole cache), and on how much of the data is read repeatedly. Per-database counters are available in the pg_stat_database view as blks_hit and blks_read.
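The hit ratio is computed from the blks_hit and blks_read counters in the pg_stat_database view. A minimal sketch follows. The SQL string shows one way to fetch the counters, and the Python function name is hypothetical. Because some "reads" are served by the OS page cache, the result is a lower bound on how often data was found in memory.

```python
from __future__ import annotations

# One way to fetch the counters (pg_stat_database is a standard statistics view).
HIT_COUNTERS_SQL = """
SELECT blks_hit, blks_read
FROM pg_stat_database
WHERE datname = current_database();
"""


def buffer_hit_ratio(blks_hit: int, blks_read: int) -> float | None:
    """Fraction of page requests served from shared_buffers.

    blks_read counts pages requested from the OS, some of which may have come
    from the OS page cache rather than disk.
    """
    total = blks_hit + blks_read
    if total == 0:
        return None  # no page requests recorded yet
    return blks_hit / total


print(buffer_hit_ratio(990_000, 10_000))  # 0.99
```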

5. Managing query cache invalidation in Postgres

Because Postgres does not cache query results, there are no cached results to invalidate. Writes modify pages in shared_buffers directly and mark them dirty. The background writer and the checkpointer flush those pages to disk later, and MVCC ensures that each query sees the row versions appropriate to its snapshot. What Postgres does invalidate automatically is cached plans: if a table referenced by a prepared statement is altered, or its planner statistics change, the stored plan is discarded and rebuilt the next time the statement runs.

Result-level concerns such as expiration times or selective invalidation apply only when a result cache is added outside the core buffer cache. Examples include a cache in the application, a middleware such as pgpool-II with its in-memory query cache, or a materialized view refreshed on a schedule. For frequently updated data, weigh the benefit of such a cache against the risk of serving stale results.
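Postgres automatically discards a prepared statement's cached plan when a referenced table is altered or its statistics change. That behavior can be modeled in a few lines. This is a toy sketch, not Postgres internals: each table carries a hypothetical version number that DDL or a statistics update bumps, and a cached plan is rebuilt when any version it was built against has changed. The class, method, and planner-function names are illustrative.

```python
from __future__ import annotations

from typing import Callable


class PlanCache:
    """Per-session cache of prepared-statement plans (toy model)."""

    def __init__(self, planner: Callable[[str], str]):
        self.planner = planner
        self.table_versions: dict[str, int] = {}
        # statement name -> (plan, table versions the plan was built against)
        self.entries: dict[str, tuple[str, dict[str, int]]] = {}
        self.plans_built = 0

    def invalidate_table(self, table: str) -> None:
        """Simulate DDL or new statistics on a table."""
        self.table_versions[table] = self.table_versions.get(table, 0) + 1

    def get_plan(self, name: str, sql: str, tables: list[str]) -> str:
        current = {t: self.table_versions.get(t, 0) for t in tables}
        entry = self.entries.get(name)
        if entry is None or entry[1] != current:
            # First execution, or a referenced table changed: re-plan.
            self.entries[name] = (self.planner(sql), current)
            self.plans_built += 1
        return self.entries[name][0]


cache = PlanCache(planner=lambda sql: f"plan for: {sql}")
for _ in range(3):
    cache.get_plan("get_order", "SELECT * FROM orders WHERE id = $1", ["orders"])
cache.invalidate_table("orders")  # e.g. ALTER TABLE orders ADD COLUMN ...
cache.get_plan("get_order", "SELECT * FROM orders WHERE id = $1", ["orders"])
print(cache.plans_built)  # 2
```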

6. Performance considerations and best practices

While caching can greatly improve performance in Postgres, memory settings involve trade-offs, and a few best practices help get good results.

To optimize query performance, it is essential to understand the characteristics of the workload and adjust relevant configuration parameters accordingly. Regularly monitoring cache hit and miss ratios can provide insights into the cache’s effectiveness and guide tuning efforts.

It is also important to avoid common pitfalls. Setting shared_buffers too high can leave too little memory for the operating system, its page cache, and per-query work_mem allocations. Because Postgres also benefits from the OS page cache, much of the extra memory may simply end up holding a second copy of the same pages. Balance the cache size against other memory requirements and workload patterns.

Additionally, optimizing the queries themselves, with appropriate indexes, efficient schema design, and unlogged or temporary tables for transient data, can further improve overall performance.

FAQs:

Q: Can I use query caching in any version of Postgres?

A: No version of Postgres includes a built-in query result cache. Every version does use the shared buffer cache described above, although defaults and tuning options differ between releases.

Q: Do I need to modify my queries to enable query caching in Postgres?

A: No. The buffer cache is transparent and requires no changes to your queries. To reuse execution plans, however, the application must use prepared statements (PREPARE and EXECUTE in SQL, or the equivalent in a client driver).

Q: Can I use query caching for all types of queries?

A: The buffer cache benefits every query that reads pages, including complex queries with multiple joins and aggregations. How much it helps depends on whether the pages a query needs are already in memory, which in turn depends on the size of the working set and the access pattern.

Q: Is it recommended to enable query caching for all queries?

A: There is nothing to enable per query, because the buffer cache applies to all of them. If you add a result cache outside Postgres, focus it on queries that are executed frequently and consume significant resources. For queries on highly volatile data, or those that return large amounts of data, a result cache rarely provides significant benefits.

Q: How can I determine the optimal value for the shared_buffers parameter?

A: The optimal value for shared_buffers depends on various factors, such as available memory, workload characteristics, and the overall database configuration. It is recommended to monitor cache hit and miss ratios and adjust the shared_buffers parameter accordingly, while considering the system’s memory constraints.

In conclusion, Postgres does cache, but what it caches is data pages in shared_buffers (backed by the OS page cache) and execution plans for prepared statements, not query results. Sizing shared_buffers sensibly, monitoring the hit ratio, and adding a result cache outside the database only where it pays off are the main levers for making repeated queries fast.


Does Postgres Store Query History?

PostgreSQL, often referred to as Postgres, is a powerful and highly reliable open-source relational database management system. It is widely valued for its robustness, interoperability, and extensibility. When working with any database management system, it is crucial to have a clear understanding of how queries are handled, and whether or not the system stores query history. In this article, we will explore whether Postgres stores query history and provide a comprehensive analysis of how this feature can be leveraged.

Postgres does provide mechanisms to store query history, but by default, it does not automatically save every query executed. However, it offers various methods to configure and enable query logging, making it possible to record and monitor queries executed on a Postgres database. Let’s delve into the different approaches to enabling query history in Postgres.

1. Log file-based query history:

One method is to log statements to the server log, which the logging collector (logging_collector = on) writes to files. Settings such as log_statement and log_min_duration_statement control which statements are recorded. log_line_prefix controls the detail attached to each line, such as the timestamp (%m), process ID (%p), session identifier (%c), user (%u), and database (%d). These logs help in diagnosing performance issues, identifying expensive queries, and analyzing database usage patterns. Balance the level of detail against the extra I/O that logging adds.
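Once duration logging is on, the log can be searched with ordinary text tools. This is a minimal sketch. It assumes log_line_prefix = '%m [%p] %u@%d ' and log_min_duration_statement enabled, so that matching lines contain "duration: ... ms  statement: ...". The sample lines and function names are hypothetical, and other prefix settings need a different pattern. Log analyzers such as pgBadger do this far more thoroughly.

```python
from __future__ import annotations

import re
from dataclasses import dataclass
from typing import Iterable, Iterator

# Matches lines produced with log_line_prefix = '%m [%p] %u@%d ' (an assumption).
LINE_RE = re.compile(
    r"^(?P<ts>\S+ \S+ \S+) \[(?P<pid>\d+)\] (?P<user>[^@\s]*)@(?P<db>\S*) "
    r"LOG:\s+duration: (?P<ms>[\d.]+) ms\s+statement: (?P<sql>.*)$"
)


@dataclass
class LoggedQuery:
    timestamp: str
    pid: int
    user: str
    database: str
    duration_ms: float
    sql: str


def parse_log(lines: Iterable[str]) -> Iterator[LoggedQuery]:
    """Yield one LoggedQuery per duration line; other log lines are skipped."""
    for line in lines:
        m = LINE_RE.match(line)
        if m:
            yield LoggedQuery(m["ts"], int(m["pid"]), m["user"], m["db"],
                              float(m["ms"]), m["sql"])


def slow_queries(lines: Iterable[str], user: str, min_ms: float) -> list[LoggedQuery]:
    """Statements by one user that took at least min_ms milliseconds."""
    return [q for q in parse_log(lines) if q.user == user and q.duration_ms >= min_ms]


# Hypothetical sample log lines.
SAMPLE_LOG = [
    "2024-05-01 12:00:00.123 UTC [4242] alice@shop LOG:  duration: 812.500 ms  statement: SELECT * FROM orders",
    "2024-05-01 12:00:01.456 UTC [4243] bob@shop LOG:  duration: 3.100 ms  statement: SELECT 1",
    "2024-05-01 12:00:02.789 UTC [4242] alice@shop LOG:  duration: 0.420 ms  statement: SELECT now()",
]

for q in slow_queries(SAMPLE_LOG, user="alice", min_ms=100):
    print(q.duration_ms, q.sql)  # 812.5 SELECT * FROM orders
```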

2. Query history with pg_stat_statements:

Another option is pg_stat_statements, an extension distributed with Postgres as a contrib module. Rather than logging every execution, it groups statements by normalized query text (constants are replaced with placeholders such as $1) and accumulates statistics for each group, including the number of calls, total and mean execution time, and rows returned. It must be listed in shared_preload_libraries, which requires a server restart, and then created in the database with CREATE EXTENSION pg_stat_statements. It is invaluable for identifying frequently executed or expensive queries, at the cost of a small tracking overhead.
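pg_stat_statements groups executions by normalized query text and sums their statistics. That grouping idea can be illustrated with a crude stand-in. This sketch uses a regular expression to replace string and numeric literals with numbered placeholders, then sums calls and time per normalized text. The real extension works on the parsed query tree instead, so treat these functions as hypothetical illustrations only.

```python
from __future__ import annotations

import re
from collections import defaultdict

# Single-quoted strings (with '' escapes) or standalone numbers.
_LITERAL = re.compile(r"'(?:[^']|'')*'|\b\d+(?:\.\d+)?\b")


def normalize(sql: str) -> str:
    """Replace each literal with $1, $2, ... in order of appearance."""
    counter = 0

    def placeholder(_match: re.Match) -> str:
        nonlocal counter
        counter += 1
        return f"${counter}"

    return _LITERAL.sub(placeholder, sql.strip())


def aggregate(executions: list[tuple[str, float]]) -> dict[str, dict[str, float]]:
    """Group (sql, duration_ms) pairs by normalized text and sum their stats."""
    totals: dict[str, dict[str, float]] = defaultdict(lambda: {"calls": 0, "total_ms": 0.0})
    for sql, ms in executions:
        entry = totals[normalize(sql)]
        entry["calls"] += 1
        entry["total_ms"] += ms
    return {
        query: {**entry, "mean_ms": entry["total_ms"] / entry["calls"]}
        for query, entry in totals.items()
    }


stats = aggregate([
    ("SELECT * FROM orders WHERE id = 1", 2.0),
    ("SELECT * FROM orders WHERE id = 2", 4.0),
])
print(stats)
# {'SELECT * FROM orders WHERE id = $1': {'calls': 2, 'total_ms': 6.0, 'mean_ms': 3.0}}
```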

3. Fine-grained query logging with log_statement:

Postgres also lets you log statements selectively. The log_statement parameter accepts none (the default), ddl (data definition statements such as CREATE, ALTER, and DROP), mod (DDL plus data-modifying statements such as INSERT, UPDATE, DELETE, and TRUNCATE), or all. This lets you focus on the statements that matter to you without logging unnecessary information. Because log_statement does not record how long a statement took, combine it with log_min_duration_statement when timing matters.
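The four log_statement levels (none, ddl, mod, all) nest inside each other, and a small predicate makes that explicit. This is a simplified sketch. It classifies a statement only by its first keyword: CREATE, ALTER, and DROP count as DDL, and INSERT, UPDATE, DELETE, and TRUNCATE count as data-modifying. The server's real classification also covers cases such as COPY FROM, PREPARE/EXECUTE, and EXPLAIN ANALYZE, which this hypothetical would_log function ignores.

```python
LEVELS = ("none", "ddl", "mod", "all")
DDL_KEYWORDS = {"CREATE", "ALTER", "DROP"}
MOD_KEYWORDS = {"INSERT", "UPDATE", "DELETE", "TRUNCATE"}


def would_log(sql: str, log_statement: str) -> bool:
    """Approximate whether a statement is logged at a given log_statement level."""
    if log_statement not in LEVELS:
        raise ValueError(f"unknown log_statement level: {log_statement!r}")
    words = sql.split()
    first = words[0].upper() if words else ""
    is_ddl = first in DDL_KEYWORDS
    is_mod = first in MOD_KEYWORDS
    if log_statement == "all":
        return True
    if log_statement == "ddl":
        return is_ddl
    if log_statement == "mod":
        # "mod" logs everything "ddl" logs, plus data-modifying statements.
        return is_ddl or is_mod
    return False  # "none"


for stmt in ("SELECT * FROM orders", "UPDATE orders SET paid = true", "DROP TABLE tmp"):
    print(stmt, [lvl for lvl in LEVELS if would_log(stmt, lvl)])
# SELECT * FROM orders ['all']
# UPDATE orders SET paid = true ['mod', 'all']
# DROP TABLE tmp ['ddl', 'mod', 'all']
```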

4. Query history with third-party tools:

In addition to the built-in options provided by Postgres, several third-party tools can help manage query history. These tools often offer graphical interfaces, search capabilities, and integration with other monitoring and alerting systems. Client tools such as pgAdmin, Postico, and Navicat can only keep a history of queries issued through that tool. Server-wide history still comes from the server log or pg_stat_statements, and log analyzers such as pgBadger can turn the log into reports.

FAQs:

Q: Can I retrieve the query history of a specific user?

A: Yes. If log_line_prefix includes the user name (%u), log entries can be filtered by user, and the same applies to the database (%d), session (%c), and timestamp (%m). pg_stat_statements also records the user ID for each entry.

Q: How can query history be useful?

A: Query history is beneficial for performance monitoring, troubleshooting, profiling, and identifying potential security threats.

Q: Will enabling query history impact the performance of my Postgres database?

A: Enabling query history does introduce some additional overhead, mainly due to the disk I/O involved in writing logs. It is important to carefully consider the trade-off between the level of detail you want to log and the potential impact on performance.

Q: Can I configure query history to be stored in a different location or database?

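A: Partly. log_destination supports stderr, csvlog, jsonlog (PostgreSQL 15 and later), and syslog (plus eventlog on Windows), and log_directory sets where log files are written. Sending logs to syslog makes it possible to forward them to a remote server. Postgres does not write its log into a database table directly, but CSV logs can be loaded into a table afterwards, for example with COPY or the file_fdw extension.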

Q: Does query history include sensitive information such as passwords?

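A: Not by default, because log_statement defaults to none. Once statement logging is enabled, however, commands that contain passwords, such as CREATE ROLE or ALTER ROLE with a PASSWORD clause, can appear in the log in plain text. psql's \password command avoids this by sending an already-encrypted password. Treat logs as sensitive data, especially in regulated environments.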

In conclusion, while Postgres does not automatically store query history, it offers various methods to enable and configure this feature. By analyzing query history, developers and database administrators can gain valuable insights into their applications’ performance, identify optimization opportunities, and troubleshoot issues. It is crucial to strike a balance between the level of detail to log and the performance impact as each application has unique requirements. With the flexibility provided by Postgres, users can choose the method that best suits their needs and utilize third-party tools to enhance query history management.

How Does Postgresql Query Work?

PostgreSQL, also known as Postgres, is a powerful open-source relational database management system. It is widely used by organizations and individuals for handling complex data needs. One of the key functionalities of PostgreSQL is its ability to efficiently execute queries and retrieve data. In this article, we will explore how PostgreSQL queries work under the hood and gain an understanding of the processes involved.

Understanding the Query Lifecycle:

When a query is executed in PostgreSQL, it goes through several stages to retrieve the desired data. Let’s have a look at the step-by-step process of how a PostgreSQL query works:

1. Parsing: The parser first checks the query text for correct syntax and builds a parse tree of its components, such as the SELECT list, the FROM clause, and the WHERE conditions. An analysis step then looks up the referenced tables, columns, and functions in the system catalogs and turns the parse tree into a query tree.

2. Rewriting: The rewrite system applies rules to the query tree. Its most visible job is expanding views: a reference to a view is replaced with the view's underlying query.

3. Planning and Optimization: The planner (the same component is also called the optimizer) considers different ways to execute the query, such as sequential or index scans and nested-loop, hash, or merge joins. It estimates the cost of each alternative from table statistics and cost parameters and picks the cheapest plan. For queries that join many tables, it switches to the genetic query optimizer (GEQO) instead of searching every possibility.

4. Execution: The executor runs the plan as a tree of nodes, passing rows up from scans through filters, joins, and aggregations. Pages are read from shared_buffers, or from the operating system or disk on a cache miss. Since PostgreSQL 9.6, the executor can also start parallel worker processes for suitable parts of a plan.

5. Result Generation: The resulting rows, whether a single row, many rows, or aggregate values, are sent back to the client application for further processing or display.

6. Cleanup: When the statement finishes, PostgreSQL frees the memory and temporary files used during execution; locks are released when the enclosing transaction commits or rolls back.
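The planning step comes down to comparing estimated costs, which a deliberately simplified sketch can show. The cost constants are PostgreSQL's default values for seq_page_cost, random_page_cost, cpu_tuple_cost, cpu_index_tuple_cost, and cpu_operator_cost. The sequential-scan formula follows the planner's basic form for a single WHERE condition. The index-scan formula is an assumption (one random page fetch per matching row) that ignores caching and physical ordering, and the function names are hypothetical.

```python
from dataclasses import dataclass

# Default values of PostgreSQL's planner cost parameters.
SEQ_PAGE_COST = 1.0
RANDOM_PAGE_COST = 4.0
CPU_TUPLE_COST = 0.01
CPU_INDEX_TUPLE_COST = 0.005
CPU_OPERATOR_COST = 0.0025


@dataclass
class TableStats:
    pages: int  # table size in pages
    rows: int   # estimated row count


def seq_scan_cost(t: TableStats) -> float:
    # Read every page sequentially and evaluate one WHERE condition per row.
    return t.pages * SEQ_PAGE_COST + t.rows * (CPU_TUPLE_COST + CPU_OPERATOR_COST)


def index_scan_cost(t: TableStats, selectivity: float) -> float:
    # Simplifying assumption: one random page fetch per matching row.
    matches = t.rows * selectivity
    return matches * (RANDOM_PAGE_COST + CPU_INDEX_TUPLE_COST + CPU_TUPLE_COST)


def choose_plan(t: TableStats, selectivity: float) -> str:
    """Pick the cheaper access path, as the planner does among its candidates."""
    costs = {"Seq Scan": seq_scan_cost(t), "Index Scan": index_scan_cost(t, selectivity)}
    return min(costs, key=costs.get)


orders = TableStats(pages=10_000, rows=1_000_000)
print(choose_plan(orders, selectivity=0.001))  # Index Scan
print(choose_plan(orders, selectivity=0.5))    # Seq Scan
```

The same table gets different plans depending on how many rows the WHERE clause is expected to match, which is why accurate statistics matter.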

FAQs:

Q: How does PostgreSQL handle large datasets efficiently?

A: PostgreSQL employs several techniques to handle large datasets efficiently. It utilizes indexing to allow for faster data retrieval based on specific criteria. It also supports partitioning, which divides large tables into smaller, more manageable chunks. Additionally, PostgreSQL’s query planner and optimizer help in selecting optimal execution plans for large queries.

Q: Can PostgreSQL execute complex queries involving multiple joins and subqueries?

A: Yes, PostgreSQL is well-equipped to handle complex queries. Its query planner and optimizer can effectively handle multiple joins, subqueries, and complex aggregations. However, it is important to ensure that appropriate indexes are present to support efficient query execution.

Q: Does PostgreSQL support parallel query execution?

A: Yes. Since version 9.6, PostgreSQL can use multiple worker processes, and therefore multiple CPU cores, for a single query. Parallel query helps most with large scans, joins, and aggregations; the number of workers per query is limited by settings such as max_parallel_workers_per_gather.

Q: How does PostgreSQL ensure data consistency during transaction processing?

A: PostgreSQL follows the ACID (Atomicity, Consistency, Isolation, Durability) principles. It uses MVCC (Multi-Version Concurrency Control): each transaction works with a snapshot of the data, so it never sees other transactions' uncommitted changes, and readers and writers do not block each other.

Q: Can PostgreSQL optimize queries dynamically based on changing data and system statistics?

A: Partly. Each time it plans a query, the planner uses the current table statistics, such as row counts, value distributions, and index sizes, together with cost parameters such as random_page_cost and effective_cache_size. It does not measure live system load, and it does not change a plan while the query is running. Keeping statistics current with ANALYZE (or autovacuum) is what lets plans adapt to changing data.

Q: What are some recommended practices to optimize PostgreSQL queries?

A: To optimize PostgreSQL queries, it is essential to analyze query performance using tools like EXPLAIN and EXPLAIN ANALYZE. Creating appropriate indexes, designing efficient data models, and using appropriate join and aggregation techniques can further improve query performance. It is also crucial to regularly update statistics and tune PostgreSQL’s configuration parameters based on workload characteristics.

In conclusion, PostgreSQL's query execution process involves parsing, rewriting, planning, execution, and result generation. Together with techniques such as indexing, partitioning, and parallel execution, this lets it handle large datasets and complex queries efficiently. By understanding how PostgreSQL queries work, developers and database administrators can fine-tune their database systems for optimal performance.


Optimize Query Postgresql

PostgreSQL, an open-source database management system, is widely admired for its powerful features and robustness. With an array of advanced functionalities, it is a popular choice for many applications, ranging from small-scale websites to large enterprise-grade systems. However, as the volume of data and complexity of queries increase, optimizing PostgreSQL queries becomes crucial to ensure optimal performance and efficiency. In this article, we will delve into various strategies and techniques to optimize query performances in PostgreSQL and address frequently asked questions on the topic.

Understanding Query Optimization in PostgreSQL

Query optimization is the process of improving the performance of queries by selecting the most efficient execution plan. PostgreSQL’s query optimizer is designed to find the best execution plan based on several factors such as available indexes, statistics, and cost estimates. Here are some key considerations to optimize queries effectively:

1. Indexing: Indexes play a vital role in query optimization. They provide quick access to data and accelerate query execution. Analyze your queries and identify columns frequently used in the WHERE clause, JOIN conditions, or ORDER BY clauses. These columns can benefit from proper indexing, improving overall query performance.

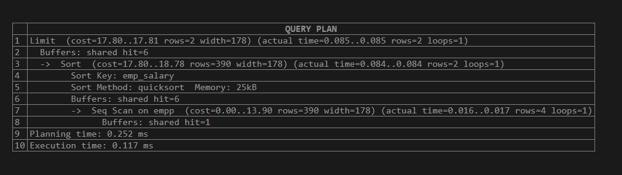

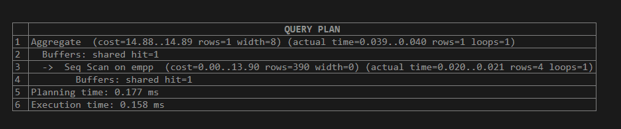

2. Analyzing Query Execution Plans: PostgreSQL’s EXPLAIN command is a powerful tool to understand how a query is executed. It provides insights into the execution plan chosen by the query optimizer, helping you identify potential bottlenecks or inefficient operations. Use EXPLAIN to investigate and fine-tune your query plans.

3. Regularly Updating Statistics: PostgreSQL maintains statistics about tables and indexes to help the optimizer estimate the cost of various execution plans. Ensure that these statistics are up-to-date by running regular ANALYZE commands or enabling autovacuum to automate the process. Accurate statistics enable the optimizer to make better decisions when generating query plans.

4. Selective Filtering: Apply filters to your queries to narrow down the result set before performing computationally expensive operations. Restricting the volume of data manipulated by the query can significantly enhance performance. Utilize the most selective filters at the earliest possible stage in your query.

5. Minimize Joins and Subqueries: Excessive joins and subqueries can significantly impact query performance. Review your queries to identify opportunities to simplify or eliminate redundant joins and subqueries. Use appropriate join conditions and consider rewriting subqueries as JOINs whenever possible.

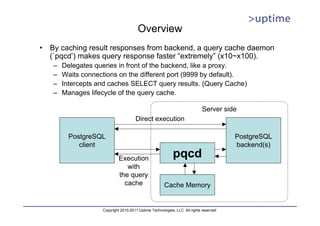

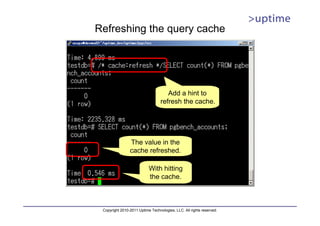

6. Query Caching: PostgreSQL has no built-in query result cache, but several techniques reduce repeated work. Prepared statements avoid re-parsing and can reuse a cached plan. Materialized views store the results of an expensive query until they are refreshed. Middleware such as pgpool-II offers an in-memory query result cache. Connection pooling (for example with PgBouncer) reduces the cost of opening new connections rather than the cost of parsing or planning.

7. Tuning Parameters: PostgreSQL provides several configuration parameters to fine-tune its behavior based on your workload requirements. Parameters like shared_buffers (the size of the data cache), work_mem (memory per sort or hash operation), and max_connections (the maximum number of concurrent connections, which also bounds total memory use) can be adjusted to optimize memory usage, sorting behavior, and connection management.
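PostgreSQL itself has no built-in query result cache, so result caching with expiry lives in the application or in middleware. Below is a minimal application-side sketch. It assumes a caller-supplied run function that executes the query (for example through a database driver). The class name, the 30-second TTL, and the fake clock are illustrative. Results may be up to ttl_seconds stale, so this only suits data that tolerates that.

```python
from __future__ import annotations

import time
from typing import Any, Callable


class TTLResultCache:
    """Cache query results in the application for a fixed time-to-live."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        # (sql, params) -> (time stored, result)
        self._entries: dict[tuple[str, tuple], tuple[float, Any]] = {}

    def get_or_run(self, sql: str, params: tuple, run: Callable[[str, tuple], Any]) -> Any:
        key = (sql, params)
        now = self.clock()
        cached = self._entries.get(key)
        if cached is not None and now - cached[0] < self.ttl:
            return cached[1]  # fresh enough: skip the database round trip
        result = run(sql, params)  # miss or expired: run the real query
        self._entries[key] = (now, result)
        return result

    def invalidate(self, sql: str | None = None) -> None:
        """Drop all entries, or only those for one SQL text (e.g. after a write)."""
        if sql is None:
            self._entries.clear()
        else:
            self._entries = {k: v for k, v in self._entries.items() if k[0] != sql}


# Usage with a fake clock and a stand-in for the database call.
fake_now = [0.0]
calls = []


def run_query(sql: str, params: tuple) -> list[tuple]:
    calls.append((sql, params))
    return [("widget", 3)]


cache = TTLResultCache(ttl_seconds=30, clock=lambda: fake_now[0])
q = "SELECT name, qty FROM stock WHERE id = %s"
cache.get_or_run(q, (1,), run_query)  # miss: runs the query
cache.get_or_run(q, (1,), run_query)  # hit: served from the cache
fake_now[0] = 31.0
cache.get_or_run(q, (1,), run_query)  # expired: runs again
print(len(calls))  # 2
```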

Frequently Asked Questions (FAQs):

Q1. How can I identify slow queries in PostgreSQL?

A: PostgreSQL logs slow queries only when configured to do so. Set log_min_duration_statement to a threshold (in milliseconds if no unit is given), and every statement that runs at least that long is written to the server log with its duration. Additionally, consider using pg_stat_statements or third-party monitoring applications to analyze query performance.

Q2. Is it possible to force specific query plans in PostgreSQL?

A: Not directly. Core PostgreSQL has no query hints or plan guides. You can influence the planner by changing planner settings such as enable_seqscan or enable_nestloop for a session, or by installing the third-party pg_hint_plan extension, which adds hint comments. Use these sparingly and only after careful consideration, as a forced plan may become suboptimal when the data or the workload changes.

Q3. How can I decide which columns need indexing?

A: Analyzing query patterns and identifying frequently used columns can provide insights into which columns should be indexed. Consider adding indexes to columns used in WHERE clauses, JOIN conditions, or frequently ordered columns. However, be cautious as adding too many indexes may adversely impact insert/update performance and increase storage requirements.

Q4. How often should I update database statistics?

A: Regularly updating statistics is important to ensure accurate query planning. It is recommended to utilize the autovacuum feature, which automates the analysis and maintenance of statistics. Adjust autovacuum settings based on your workload to strike a balance between resource usage and update frequency.
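The PostgreSQL documentation describes when autovacuum re-analyzes a table as a simple threshold: analyze threshold = autovacuum_analyze_threshold + autovacuum_analyze_scale_factor * number of tuples, with defaults of 50 and 0.1. A table is analyzed once the rows inserted, updated, or deleted since the last ANALYZE exceed that threshold. Below is a sketch of the check. The function names are hypothetical, and the changed-row count roughly corresponds to n_mod_since_analyze in pg_stat_user_tables.

```python
DEFAULT_ANALYZE_THRESHOLD = 50      # autovacuum_analyze_threshold
DEFAULT_ANALYZE_SCALE_FACTOR = 0.1  # autovacuum_analyze_scale_factor


def analyze_threshold(reltuples: float,
                      base: int = DEFAULT_ANALYZE_THRESHOLD,
                      scale: float = DEFAULT_ANALYZE_SCALE_FACTOR) -> float:
    """Number of changed rows after which autovacuum re-analyzes the table."""
    return base + scale * reltuples


def needs_autoanalyze(reltuples: float, changed_since_analyze: int,
                      base: int = DEFAULT_ANALYZE_THRESHOLD,
                      scale: float = DEFAULT_ANALYZE_SCALE_FACTOR) -> bool:
    # The table qualifies once the change count exceeds the threshold.
    return changed_since_analyze > analyze_threshold(reltuples, base, scale)


print(analyze_threshold(10_000_000))                        # 1000050.0
print(needs_autoanalyze(10_000_000, 500_000))               # False
print(needs_autoanalyze(10_000_000, 500_000, scale=0.02))   # True
```

With the defaults, a 10-million-row table needs about a million changed rows before its statistics are refreshed, which is why large, busy tables are often given a smaller per-table autovacuum_analyze_scale_factor.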

Q5. Can query performance be affected by hardware limitations?

A: Yes, hardware limitations can impact query performance. Factors such as disk speed, memory, CPU capacity, and network latency can significantly influence database performance. Analyze your hardware infrastructure and consider upgrading critical components if necessary.

Q6. Are there any tools available to assist in PostgreSQL query optimization?

A: Yes. pgBadger analyzes server logs, the pg_stat_statements extension aggregates per-query statistics, and EXPLAIN ANALYZE shows the actual execution plan with timings. pgAdmin, a free open-source administration tool, can display query plans graphically, and PGTune suggests configuration values for your hardware. Commercial monitoring products are available as well.

Q7. What are some common pitfalls to avoid while optimizing PostgreSQL queries?

A: Avoid over-indexing, as it can negatively impact write operations, increase disk space requirements, and introduce index maintenance overhead. Additionally, be cautious when forcing specific query plans and test thoroughly to ensure optimal execution plans across different scenarios. Regularly monitor and tune your system to accommodate evolving query patterns and growing data volumes.

In conclusion, optimizing query performance in PostgreSQL is crucial to ensure efficient and responsive database operations. By leveraging indexing, analyzing execution plans, updating statistics, and applying various optimization techniques, you can enhance the overall performance of your PostgreSQL based applications. Additionally, monitoring and fine-tuning the system regularly, along with careful hardware considerations, can further improve query performance. By following these guidelines and avoiding common pitfalls, you can optimize query execution in PostgreSQL effectively.

Postgresql In-Memory Table

PostgreSQL, commonly known as Postgres, is one of the most powerful and feature-rich open-source relational database management systems (RDBMS) available today. It is known for its robustness, extensibility, and scalability. A common question is whether it supports in-memory tables that could enhance database performance and speed. In this article, we will look at what PostgreSQL offers in this area, how those features work, and their benefits, limitations, and use cases.

Understanding PostgreSQL In-Memory Tables

Unlike some other databases, PostgreSQL has no dedicated in-memory table type or memory-optimized storage engine. Every table is stored in data files on disk and cached in shared_buffers and the OS page cache. Several features come close, though. Unlogged tables (CREATE UNLOGGED TABLE) skip write-ahead logging (WAL), which makes writes much faster. Temporary tables (CREATE TEMPORARY TABLE) are private to a session, are not WAL-logged, and are cached in per-session buffers sized by temp_buffers. The pg_prewarm extension can load a table into the cache ahead of time. In practice, a frequently used table that fits in memory is served almost entirely from RAM, so queries on it avoid most disk I/O.

Benefits of PostgreSQL In-Memory Tables

1. Accelerated Performance: A table whose pages stay in shared_buffers is read without disk I/O. Unlogged and temporary tables also avoid the cost of writing WAL, which can make inserts and updates considerably faster. This suits workloads with frequent reads or writes of transient data, such as caching or intermediate analytics.

2. Reduced Latency: Serving reads from memory, and avoiding WAL writes for unlogged and temporary tables, shortens response times. This is especially advantageous for applications that require low response times, such as web applications or real-time data processing systems.

3. Familiar Schema Features: Unlike memory-optimized tables in some other systems, unlogged and temporary tables support indexes, constraints, and triggers like regular tables, apart from some restrictions on foreign keys between different kinds of tables. They can therefore be adopted without redesigning the schema.

4. Enhanced Scalability: Reducing disk and WAL I/O lets the server sustain more writes and more concurrent sessions before I/O becomes the bottleneck. This is particularly useful for applications with intense workloads or high user concurrency.

Limitations and Considerations

While PostgreSQL in-memory tables offer numerous advantages, it is essential to consider their limitations and appropriate use cases.

1. Limited Memory Capacity: RAM is generally far more limited in capacity than disk storage. For a table to be served from memory, its frequently accessed pages must fit in shared_buffers and the OS page cache alongside everything else. Otherwise they are evicted and read back from disk, and the performance benefits are not realized.

2. Data Durability: Unlogged tables are not crash-safe: after a crash or unclean shutdown they are automatically truncated, and their contents are not replicated to standby servers. Temporary tables disappear at the end of the session. Use both only for data you can rebuild; for data that must survive failures, regular WAL-logged tables and replication remain necessary.

3. Cost of Memory: RAM is generally more expensive than disk storage per unit of capacity. Consequently, a server with enough memory to keep its hot data cached can cost more to deploy and maintain than one sized for disk-based access. Consideration of cost implications is essential, especially for large-scale deployments.

4. Table Size: These techniques work best for data that fits comfortably within the available RAM. If the data is larger, PostgreSQL keeps evicting pages and reading them back from disk, and temporary tables larger than temp_buffers spill to disk files, so the benefit largely disappears. Therefore, it is crucial to assess the data size and available memory before relying on them.

Use Cases for PostgreSQL In-Memory Tables

PostgreSQL in-memory tables find their applications in various scenarios, depending on the workload requirements. Some prominent use cases include:

1. Real-Time Analytics: Unlogged tables suit staging areas and intermediate results in analytical pipelines that involve frequent loads, aggregations, and calculations, where fast writes matter and the data can be regenerated from its source if lost.

2. Caching: Web applications can use an unlogged table to store frequently accessed, easily rebuilt data such as user session information or cached content. Serving it from a well-cached table reduces load times and improves user experience, as long as losing its contents after a crash is acceptable.

3. High-Concurrency Environments: Applications with heavy write activity on non-critical data, such as social media platforms or e-commerce websites tracking transient state, can use unlogged tables to reduce WAL volume and keep up with increased user activity.

4. Temporary Data Storage: Temporary tables are designed to hold intermediate results during complex data transformations or calculations. They are visible only to the session that created them and are dropped automatically at the end of the session, or at the end of the transaction when created with ON COMMIT DROP.

FAQs about PostgreSQL In-Memory Tables

Q1: Can I convert an existing disk-based table to an in-memory table in PostgreSQL?

A1: PostgreSQL cannot move a table into memory, but since PostgreSQL 9.5 you can convert a regular table to an unlogged one with ALTER TABLE ... SET UNLOGGED, and back again with SET LOGGED. To make sure a table is loaded into the cache, you can use the pg_prewarm extension.

Q2: Are there any specific performance considerations while using PostgreSQL in-memory tables?

A2: Yes. Beyond memory capacity and workload characteristics, note that autovacuum cannot vacuum or analyze temporary tables, so run ANALYZE on a temporary table yourself after loading data if it will be used in complex queries.

Q3: Can I mix in-memory tables with traditional disk-based tables in PostgreSQL?

A3: Yes. Regular, unlogged, and temporary tables can coexist in the same database and can even be joined in one query. This flexibility enables you to choose the most suitable option for each table based on your specific needs.

Q4: Are in-memory tables suitable for all types of data?

A4: These options are most effective for data that fits comfortably within the available RAM and can be rebuilt if lost. If the data size exceeds the memory capacity, the performance advantages diminish.

Q5: Can I use indexes or constraints on in-memory tables in PostgreSQL?

A5: Yes. Unlogged and temporary tables in PostgreSQL support indexes and constraints like regular tables, with some restrictions on foreign keys that reference a different kind of table. Indexes on an unlogged table are themselves unlogged.

Conclusion

PostgreSQL does not have true in-memory tables, but unlogged tables, temporary tables, and a well-sized cache cover many of the same needs. By keeping hot data in RAM and avoiding WAL overhead, they can speed up data retrieval and manipulation considerably. They come with limitations in memory capacity and durability, but they are useful for real-time analytics staging, caching, high-concurrency workloads on non-critical data, and temporary data storage. With careful consideration of use cases, PostgreSQL users can get much of the performance of an in-memory database without giving up a familiar schema.

