Delete Unassigned Shards in Elasticsearch

Elasticsearch is a distributed, open-source search and analytics engine that is widely used for its scalability, reliability, and ease of use. In Elasticsearch, each index is stored in shards, which are units of data that can be distributed across multiple nodes for improved performance and fault tolerance. Sometimes shards become unassigned, meaning the cluster has not allocated them to any node. Unassigned shards should be dealt with promptly to keep the cluster healthy. Usually the fix is to remove whatever prevents their allocation. When a shard copy cannot or need not be recovered, you delete it, which in practice means lowering the replica count or deleting the index.

Types of Unassigned Shards in Elasticsearch

There are two types of unassigned shards in Elasticsearch: primary unassigned shards and replica unassigned shards.

1. Primary Unassigned Shards: Each index is divided into one or more primary shards. Every write goes to a primary shard first, and searches can be served by the primary or any of its replicas. If a primary shard is unassigned, the cluster health turns red. The documents in that shard cannot be searched or updated, and searches may return partial results. If no copy of the shard survives on any node, its data is lost.

2. Replica Unassigned Shards: Replica shards are copies of the primary shards that provide redundancy and extra search capacity. If a replica shard is unassigned, the cluster health turns yellow. The data is still available from the primary, but there is one fewer copy to fall back on if a node fails.

Reasons for Unassigned Shards in Elasticsearch

Several factors can lead to the presence of unassigned shards in Elasticsearch:

1. Node Failure or Restart: If a node in the Elasticsearch cluster fails or undergoes a restart, the shards assigned to that node may become unassigned. This can happen if the node fails to rejoin the cluster properly or if there are connectivity issues between nodes.

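2. Insufficient Disk Space: Once a node's disk usage passes the low disk watermark (85% by default), Elasticsearch stops allocating shards to it. Primaries of newly created indices are the exception. Above the high watermark (90% by default), Elasticsearch also tries to move shards off the node. If no node has enough room, shards remain unassigned until disk space is freed or added.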

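3. Allocation Failures and Unsatisfiable Rules: A shard may repeatedly fail to allocate, for example because its files are corrupted. In that case Elasticsearch stops retrying after `index.allocation.max_retries` attempts (5 by default). Shards also stay unassigned when allocation rules cannot be met. For example, a replica is never placed on the same node as its primary, so on a single-node cluster every replica remains unassigned.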
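You can check the cause behind each unassigned shard directly. The `_cat/shards` API can include an `unassigned.reason` column with codes such as `NODE_LEFT`, `INDEX_CREATED`, or `ALLOCATION_FAILED`. The sketch below makes a few assumptions:

- The cluster is unsecured and reachable at `http://localhost:9200`. You can override this with `ES_URL`; secured clusters also need curl options such as `-u` and `--cacert`.
- The function name is hypothetical.

```shell
# Assumption: an unsecured cluster at localhost:9200; set ES_URL to override.
ES_URL="${ES_URL:-http://localhost:9200}"

# Hypothetical helper: print only the shard copies whose state is UNASSIGNED.
# Columns: index, shard number, prirep (p = primary, r = replica),
# state, and the reason Elasticsearch recorded for the unassignment.
list_unassigned_shards() {
  curl -s "$ES_URL/_cat/shards?h=index,shard,prirep,state,unassigned.reason" |
    awk '$4 == "UNASSIGNED"'
}
```

Filtering with awk on the state column, rather than grepping the whole line, avoids matching an index that merely has "UNASSIGNED" in its name.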

Methods to Delete Unassigned Shards in Elasticsearch

There are several methods to delete unassigned shards in Elasticsearch:

1. Automatic Allocation: By default, Elasticsearch allocates unassigned shards automatically, for example when a node joins or rejoins the cluster. Allocation may have been disabled through the `cluster.routing.allocation.enable` setting, which is a common step during rolling restarts. Setting it back to `all` lets automatic allocation resume.

2. Manual Allocation: In some cases, automatic allocation does not succeed, or it is not desirable because of resource constraints. In such situations, administrators can assign unassigned shards to specific nodes with the cluster reroute API.

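3. Using the Allocation Explain and Reroute APIs: The cluster allocation explain API reports why a particular shard is unassigned. The cluster reroute API can then allocate, move, or cancel shard copies. Neither API deletes a shard:
   - To get rid of unassigned replicas, lower the index's `number_of_replicas`.
   - To get rid of an unassigned primary, delete the index. As a last resort, you can instead use the reroute API's `allocate_empty_primary` command, which accepts losing that shard's data.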
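Here is a minimal sketch of calling the allocation explain API. It assumes an unsecured cluster at `http://localhost:9200` (override with `ES_URL`), and the function name is hypothetical.

- Without a request body, the API explains the first unassigned shard it finds.
- With a body, it explains the copy you name.

```shell
# Assumption: an unsecured cluster at localhost:9200; set ES_URL to override.
ES_URL="${ES_URL:-http://localhost:9200}"

# Hypothetical helper: ask Elasticsearch why a shard copy is not allocated.
#   explain_allocation                       -> first unassigned shard found
#   explain_allocation INDEX SHARD true|false -> a specific copy (true = primary)
explain_allocation() {
  if [ "$#" -eq 0 ]; then
    curl -s "$ES_URL/_cluster/allocation/explain?pretty"
  else
    curl -s -X GET "$ES_URL/_cluster/allocation/explain?pretty" \
      -H 'Content-Type: application/json' \
      -d "{\"index\": \"$1\", \"shard\": $2, \"primary\": $3}"
  fi
}
```

For example, `explain_allocation my_index 0 false` asks about the replica of shard 0 of `my_index`. The response lists, node by node, which allocation rules (deciders) blocked the shard.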

Best Practices for Deleting Unassigned Shards in Elasticsearch

To effectively manage unassigned shards in Elasticsearch, consider the following best practices:

1. Regular Monitoring and Maintenance: Implement a monitoring system to track the status of shards and detect any unassigned shards promptly. Regular maintenance tasks, such as monitoring disk space usage and handling node failures, can help prevent the accumulation of unassigned shards.

2. Proper Configuration of Shard Allocation Settings: Configure the shard allocation settings in Elasticsearch to optimize shard assignment and prevent unassigned shards. For example, you can set the cluster.routing.allocation.enable setting to control automatic shard allocation or adjust the cluster.routing.allocation.disk.watermark settings to prevent disk space issues.

3. Efficient Disk Space Management: Monitor and manage disk space usage on each node in the Elasticsearch cluster to prevent unassigned shards due to insufficient disk space. Regularly clean up old indices or consider scaling up your infrastructure to accommodate growing data volumes.
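Both settings mentioned above can be changed or inspected at runtime through the cluster settings API. This is a minimal sketch under a few assumptions:

- The cluster is unsecured and reachable at `http://localhost:9200`; `ES_URL` overrides it.
- The function names are hypothetical.
- The default percentages in the comments are the ones documented for recent Elasticsearch versions.

```shell
# Assumption: an unsecured cluster at localhost:9200; set ES_URL to override.
ES_URL="${ES_URL:-http://localhost:9200}"

# Hypothetical helper: re-enable (or restrict) automatic shard allocation.
# Valid values: all (the default), primaries, new_primaries, none.
set_allocation_enable() {
  local mode="${1:-all}"
  case "$mode" in
    all|primaries|new_primaries|none) ;;
    *) echo "unsupported mode: $mode" >&2; return 1 ;;
  esac
  curl -s -X PUT "$ES_URL/_cluster/settings" \
    -H 'Content-Type: application/json' \
    -d "{\"persistent\": {\"cluster.routing.allocation.enable\": \"$mode\"}}"
}

# Hypothetical helper: show the disk watermarks in effect, including defaults
# (low 85%, high 90%, flood_stage 95% unless they were changed).
show_disk_watermarks() {
  curl -s "$ES_URL/_cluster/settings?include_defaults=true&flat_settings=true&pretty" |
    grep -F 'disk.watermark'
}
```

`flat_settings=true` puts each setting on its own line in the pretty-printed response, which is what lets a plain `grep` pick out the watermark entries without a JSON parser.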

FAQs

Q: What are the consequences of having unassigned shards in Elasticsearch?

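A: Unassigned replicas reduce redundancy, and the cluster health turns yellow. Unassigned primaries make part of an index unavailable, and the health turns red. Searches can then return incomplete results, and writes to the affected shards fail. If no copy of a shard survives, its data is lost.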

Q: How can I check for unassigned shards in Elasticsearch?

A: The cluster health API (`GET _cluster/health`) reports how many shards are unassigned. The `_cat/shards` API lists every shard copy with its state. Monitoring tools built on these APIs can also alert you to unassigned shards.
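The sketch below produces a compact health report. It assumes an unsecured cluster at `http://localhost:9200` (override with `ES_URL`), and the function name is hypothetical. It works as follows:

- `level=indices` adds a per-index status.
- `filter_path` trims the response to the fields that matter here.

```shell
# Assumption: an unsecured cluster at localhost:9200; set ES_URL to override.
ES_URL="${ES_URL:-http://localhost:9200}"

# Hypothetical helper: overall status (green/yellow/red), unassigned shard
# counts, and the status of each index, trimmed with filter_path.
cluster_health_summary() {
  curl -s "$ES_URL/_cluster/health?level=indices&pretty&filter_path=status,unassigned_shards,delayed_unassigned_shards,indices.*.status"
}
```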

Q: How can I delete unassigned shards in OpenSearch?

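A: OpenSearch was forked from Elasticsearch 7.10 and provides the same core APIs: `_cat/shards`, `_cluster/allocation/explain`, and `_cluster/reroute`. The process is therefore similar. You can let automatic allocation resume, allocate shards manually, lower the replica count, or delete the index.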

Q: Is there a maximum limit to the number of shards in Elasticsearch?

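A: Yes. The `cluster.max_shards_per_node` setting (1000 by default) limits how many open shards each data node may hold. The cluster-wide limit is that value multiplied by the number of data nodes. Requests that would push the cluster past it, such as creating a new index, are rejected. The `indices.query.bool.max_clause_count` setting is unrelated; it limits the number of clauses in a Boolean query.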
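Here is a sketch for inspecting and raising the per-node shard limit. It assumes an unsecured cluster at `http://localhost:9200` (override with `ES_URL`), and the function names are hypothetical. Raising the limit treats the symptom, so adding data nodes or reducing the number of shards is often the better fix.

```shell
# Assumption: an unsecured cluster at localhost:9200; set ES_URL to override.
ES_URL="${ES_URL:-http://localhost:9200}"

# Hypothetical helper: show the effective per-node shard limit, including
# the default value when it has never been set explicitly.
show_max_shards_per_node() {
  curl -s "$ES_URL/_cluster/settings?include_defaults=true&flat_settings=true&pretty" |
    grep -F 'max_shards_per_node'
}

# Hypothetical helper: raise (or lower) the limit, e.g. set_max_shards_per_node 1500.
set_max_shards_per_node() {
  case "$1" in
    ''|*[!0-9]*) echo "limit must be a positive integer" >&2; return 1 ;;
  esac
  curl -s -X PUT "$ES_URL/_cluster/settings" \
    -H 'Content-Type: application/json' \
    -d "{\"persistent\": {\"cluster.max_shards_per_node\": $1}}"
}
```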

Q: Can I delete an entire index to remove unassigned shards in Elasticsearch?

A: Yes, you can delete an entire index to remove both assigned and unassigned shards in Elasticsearch. However, be cautious as it will permanently delete all data associated with the index.

Q: How can I calculate the percentage of active shards in Elasticsearch?

A: There is no separate API for this. The cluster health API returns the value directly in the `active_shards_percent_as_number` field, and `_cat/health` shows it in the `active_shards_percent` column.
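For scripts or health checks, the percentage can be turned into an exit status. The sketch below assumes an unsecured cluster at `http://localhost:9200` (override with `ES_URL`), and the function name is hypothetical.

- It reads the `active_shards_percent` column of `_cat/health` and prints it.
- It returns a non-zero status unless every shard copy is active.

```shell
# Assumption: an unsecured cluster at localhost:9200; set ES_URL to override.
ES_URL="${ES_URL:-http://localhost:9200}"

# Hypothetical helper: print "<status> <active_shards_percent>" and exit
# non-zero when fewer than 100% of shard copies are active (or on no output).
check_active_shards() {
  curl -s "$ES_URL/_cat/health?h=status,active_shards_percent" |
    awk '{ print; pct = $2; sub(/%$/, "", pct); exit (pct + 0 < 100) }
         END { if (NR == 0) exit 2 }'
}
```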

In conclusion, dealing with unassigned shards in Elasticsearch is crucial to maintain optimal performance, prevent data loss, and ensure the availability of the cluster. That means getting them allocated again, or deleting them when they cannot be recovered. By understanding the types and causes of unassigned shards, and using appropriate methods and best practices, administrators can effectively manage and delete unassigned shards, leading to a smoother Elasticsearch deployment.


Can We Delete A Shard In Elasticsearch?

Elasticsearch is a widely used and highly scalable search and analytics engine. It is built on top of the Apache Lucene library, which provides its indexing and search capabilities. One of the key concepts in Elasticsearch is the shard, a subset of the data in an index. Sharding distributes data across multiple nodes in a cluster, which enables efficient querying and high availability. However, there may be scenarios where you want to delete a shard. In this article, we will explore whether that is possible, what it implies, and what to do instead.

Understanding Shards in ElasticSearch

Before diving into the process of deleting a shard, it is important to grasp the concept of shards in Elasticsearch. When you create an index, Elasticsearch splits its data into one or more primary shards and distributes them across the nodes of the cluster. Since Elasticsearch 7.0 the default is one primary shard; earlier versions used five. Each shard is a self-contained Lucene index holding a subset of the index's documents. Sharding increases performance and scalability, because data and search queries can be processed in parallel across multiple shards.

Shards are further classified into primary and replica shards. Every write is first applied to the primary shard and then replicated to its replicas; clients cannot write to a replica directly. Replicas improve fault tolerance and search scalability, because a search can run on any copy of a shard, spreading the work across multiple nodes.

Deleting a Shard

Elasticsearch does not provide a mechanism to delete an individual shard. Each document is routed to a shard by hashing its routing value (by default the document `_id`) against the number of primary shards. That is why the number of primary shards is fixed when the index is created. Removing a shard would break this routing and would require redistributing its documents among the remaining shards. The split and shrink APIs can change the number of primary shards, but they do so by creating a new index.

Instead, the usual way to remove a shard is to delete the entire index it belongs to, which removes all of that index's shards. Replica shards are the exception: they can be removed without touching the data by lowering the index's `number_of_replicas` setting.

Deleting an Index to Remove a Shard

To delete an index and all of its shards, send an HTTP `DELETE` request to the index; this is the delete index API. The following curl command deletes an index named "my_index":

```shell
curl -X DELETE "http://localhost:9200/my_index"
```

After executing this command, the entire index and its shards will be removed from the cluster. It is important to note that this operation is irreversible, and all the data stored in the index will be permanently deleted.

FAQs:

Q: Can I delete a specific shard without deleting the entire index?

A: No, Elasticsearch does not provide a way to delete a specific shard. Deleting the index is the only way to remove a primary shard. Replica shards can be dropped by reducing the index's `number_of_replicas`.
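Lowering the replica count is how unassigned replicas are usually removed, for example on a single-node cluster where replicas can never be assigned. The sketch below assumes an unsecured cluster at `http://localhost:9200` (override with `ES_URL`), and the function name is hypothetical.

```shell
# Assumption: an unsecured cluster at localhost:9200; set ES_URL to override.
ES_URL="${ES_URL:-http://localhost:9200}"

# Hypothetical helper: change how many replica copies an index keeps.
# Usage: set_replicas INDEX COUNT   (INDEX may be a pattern such as logs-*)
# COUNT=0 drops every replica copy, assigned or not; the primaries
# and their data stay in place.
set_replicas() {
  local index="$1" count="$2"
  case "$count" in
    ''|*[!0-9]*) echo "replica count must be a non-negative integer" >&2; return 1 ;;
  esac
  curl -s -X PUT "$ES_URL/$index/_settings" \
    -H 'Content-Type: application/json' \
    -d "{\"index\": {\"number_of_replicas\": $count}}"
}
```

With zero replicas the index has no redundancy. If more nodes join later, raise the count again, for example with `set_replicas my_index 1`.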

Q: How can I check the status of shards in my cluster?

A: Elasticsearch provides the `_cat/shards` API. It lists each shard copy in the cluster with its index, shard number, type (p for primary, r for replica), state, and the node it is allocated to. You can retrieve this information with the following curl command:

```shell
curl -X GET "http://localhost:9200/_cat/shards"
```

Q: What are the potential risks of deleting an index?

A: Deleting an index will permanently remove all the associated data. It is crucial to double-check and ensure that the index being deleted is the correct one, as this operation cannot be reversed.

Q: How can I prevent accidental deletion of indexes?

A: Set `action.destructive_requires_name` to `true` so that indices can only be deleted by explicit name, not with wildcards or `_all`. This is the default since Elasticsearch 8.0. With security enabled, you can also grant the `delete_index` privilege only to the roles that genuinely need it.
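Here is a minimal sketch of enforcing explicit index names for deletions, useful on versions where it is not already the default. It assumes an unsecured cluster at `http://localhost:9200` (override with `ES_URL`), and the function name is hypothetical.

```shell
# Assumption: an unsecured cluster at localhost:9200; set ES_URL to override.
ES_URL="${ES_URL:-http://localhost:9200}"

# Hypothetical helper: reject destructive requests (such as DELETE) that use
# wildcards or _all, so every index to delete must be named explicitly.
require_explicit_index_names() {
  curl -s -X PUT "$ES_URL/_cluster/settings" \
    -H 'Content-Type: application/json' \
    -d '{"persistent": {"action.destructive_requires_name": true}}'
}
```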

Conclusion

In conclusion, Elasticsearch does not allow direct deletion of a specific shard. You can remove all of an index's shards by deleting the index, and remove replica shards by lowering the replica count. Sharding is an integral part of Elasticsearch, enabling scalability and distributed processing. By understanding the concepts and implications of deleting a shard, you can effectively manage and maintain your Elasticsearch cluster.

Why Are My Shards Unassigned?

Sharding is a commonly used technique in distributed databases to manage and distribute data across multiple servers. It improves overall database performance by allowing parallel processing of data and reducing the load on individual servers. However, sometimes users encounter a situation where their shards become unassigned, causing delays or even failures in database operations. In this article, we will explore the reasons behind unassigned shards and discuss potential solutions to address this issue.

Understanding Shards

Before diving into the reasons for unassigned shards, it’s important to have a basic understanding of what sharding entails. In a sharded database, data is horizontally partitioned into smaller subsets called shards. Each shard contains a unique subset of the overall data. These shards are then distributed across multiple database servers for improved scalability and performance.

Reasons for Unassigned Shards

1. Uneven Distribution: Sharding algorithms usually aim to spread data evenly to keep the load balanced. When servers are added or removed, or when hardware or network failures occur, the distribution can become skewed. If every server that could host a shard is already full or overloaded, the system finds no eligible server for it, and the shard stays unassigned.

2. Indexing Issues: Each shard stores its data in index structures on disk. If those files are corrupted (for example by a failing disk) or otherwise inconsistent, the server cannot open the shard. Its allocation then fails, and the shard stays unassigned.

3. Configuration Errors: Incorrect configuration settings can also cause shards to remain unassigned. If the configuration files are not properly updated or if there are typos or errors in the configuration settings, the system may fail to assign shards to database servers correctly.

4. Replication Failures: Sharding often involves data replication across multiple servers for fault tolerance and high availability. If the replication process fails or encounters errors, it can prevent the assignment of shards to servers, leaving them unassigned.

5. Hardware or Network Failures: Shards can also become unassigned due to hardware or network failures. If a server hosting a shard goes down or experiences connectivity issues, the shard becomes temporarily unassigned until the issue is resolved.

Solutions and Best Practices

1. Regular Monitoring: Monitoring the status and health of database servers and shards is crucial. Automated monitoring systems can quickly identify unassigned shards, enabling prompt actions to resolve the issue.

2. Load Balancing: Ensuring even distribution of data across servers is crucial for reducing the chances of unassigned shards. Regularly monitoring the load on servers and redistributing shards as needed helps maintain load balance.

3. Proper Indexing: Correct implementation and maintenance of indexes are essential to prevent unassigned shards. Consistency checks and periodic index rebuilds can help address any indexing issues that may arise.

4. Configuration Management: Paying close attention to configuration files and settings is vital to avoid unassigned shards caused by configuration errors. Implement proper version control and regular reviews to ensure the configuration is accurate and up to date.

5. Replication Monitoring: Monitoring the replication process is critical to detect any failures or errors promptly. Implementing mechanisms for automatic detection and recovery, such as replicas re-syncing and error alerts, can help prevent unassigned shards.

Frequently Asked Questions (FAQs)

Q1: Can unassigned shards cause data loss?

A1: An unassigned shard does not by itself mean that data has been lost; the data may still be on the disk of a node that is temporarily down. However, requests that need the shard are delayed or fail until it is assigned again. If every copy of a shard is lost, its data is gone.

Q2: How can I identify unassigned shards?

A2: Monitoring tools and database management systems generally provide information about the status and assignment of shards. By regularly monitoring the system, you can quickly identify any unassigned shards.

Q3: What should I do if I have unassigned shards?

A3: If you have unassigned shards, start by investigating the possible causes mentioned in this article. Correct any configuration errors, perform index maintenance, redistribute shards for load balancing, and address any issues related to replication or hardware/network failures.

Q4: How can I prevent unassigned shards in the future?

A4: Following the solutions and best practices mentioned in this article, such as regular monitoring, load balancing, proper indexing, configuration management, and replication monitoring, can significantly reduce the occurrence of unassigned shards.

Q5: Can unassigned shards be resolved automatically?

A5: In some cases, unassigned shards may be automatically reassigned after the underlying issue causing the unassignment is resolved. However, it’s important to proactively monitor and identify unassigned shards to ensure timely resolution.

Conclusion

While sharding offers numerous benefits in terms of scalability and performance, unassigned shards can cause delays and issues in database operations. By understanding the possible reasons behind unassigned shards and following the suggested solutions and best practices, users can mitigate the occurrence of unassigned shards and ensure smoother database operations. Regular monitoring, load balancing, proper indexing, configuration management, and replication monitoring are key elements in addressing and preventing unassigned shards in distributed databases.


Unassigned Shards Elasticsearch

Introduction to Unassigned Shards in Elasticsearch

Elasticsearch is an open-source distributed search and analytics engine that allows for near real-time data analysis and storage. It is designed to handle large volumes of data and provide quick search responses. In an Elasticsearch cluster, data is divided into multiple shards, which are then distributed across different nodes, enabling parallel processing and high availability.

However, shards sometimes become unassigned, which can affect the cluster's stability and performance. An unassigned shard is a shard copy, primary or replica, that the cluster has not allocated to any node, so it cannot serve requests. While replicas are unassigned, the cluster has less redundancy and fewer copies to spread searches over. While a primary is unassigned, part of the index's data is unavailable, and that data is lost for good if no copy survives.

Understanding the Causes of Unassigned Shards

Unassigned shards can occur due to various reasons, and identifying the root cause is crucial for resolving them effectively. Let’s explore some common causes:

1. Cluster Changes: When there are changes in the cluster configuration or cluster state, Elasticsearch may fail to assign shards properly. These changes could be due to node failures, cluster scaling, or configuration updates.

2. Disk Space Issues: Shards require an adequate amount of disk space. Once a node's disk usage passes the low watermark (85% by default), Elasticsearch stops allocating shards to it, except primaries of newly created indices. Shards that have nowhere else to go remain unassigned until more disk space becomes available.

3. Network Partitioning: Network connectivity issues or network partitioning within the cluster can hinder the communication between nodes, leading to unassigned shards.

4. Data Corruption: In certain cases, data corruption can cause Elasticsearch to mark shards as unassigned, preventing their proper allocation.

Implications of Unassigned Shards

Unassigned shards can have several implications for an Elasticsearch cluster, including:

1. Reduced Search and Query Performance: With fewer active shard copies, the remaining copies carry the whole search load. This can increase search latency and slow down query responses, which affects the user experience and the efficiency of data analysis.

2. Increased Risk of Data Loss: Unassigned shards result in copies of data being inaccessible or missing, increasing the risk of data loss. In a distributed environment where data redundancy is vital for fault tolerance, unassigned shards can restrict data availability and resilience.

3. Cluster Instability: Unassigned shards show up as a yellow (replicas) or red (primaries) cluster health status. They usually point to an underlying problem, such as failing nodes or full disks, that can further degrade performance and reliability.

Solutions to Unassigned Shards

Resolving unassigned shards is crucial to restore cluster stability and ensure optimal performance. Here are some key solutions:

1. Investigate and Resolve the Root Cause: To address unassigned shards, it is essential to identify and rectify the underlying cause. Reviewing Elasticsearch logs, cluster configuration changes, and node status can help pinpoint the origin of the problem. Once identified, fixing the root cause should resolve the unassigned shards issue.

2. Reallocating Shards: Elasticsearch lets you allocate unassigned shards to specific nodes manually with the cluster reroute API. You can also use it to retry allocations that failed too many times.

3. Adjusting Cluster Settings: Configuration changes can often resolve unassigned shard problems caused by resource constraints or communication issues. Examples include:
   - adding disk capacity or adjusting the disk watermarks;
   - lowering `number_of_replicas` when there are not enough nodes to hold every replica;
   - fixing network settings.

4. Recovering Corrupted Data: If the unassigned shards are due to data corruption, specific recovery mechanisms such as the Elasticsearch snapshot and restore API can help restore the data integrity. It is important to ensure regular backups to minimize the risk of data loss in such scenarios.
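The reallocation step can be sketched with the cluster reroute API. The sketch assumes an unsecured cluster at `http://localhost:9200` (override with `ES_URL`), and the function names are hypothetical.

- `retry_failed=true` re-attempts shards that Elasticsearch gave up on after too many failures.
- The `allocate_replica` command places a replica on a node you choose.

```shell
# Assumption: an unsecured cluster at localhost:9200; set ES_URL to override.
ES_URL="${ES_URL:-http://localhost:9200}"

# Hypothetical helper: retry shards whose allocation failed more than
# index.allocation.max_retries times (5 by default); Elasticsearch does
# not retry those on its own.
retry_failed_allocations() {
  curl -s -X POST "$ES_URL/_cluster/reroute?retry_failed=true"
}

# Hypothetical helper: place an unassigned replica on a specific node.
# Usage: allocate_replica INDEX SHARD_NUMBER NODE_NAME
# This never discards data. The primary-forcing commands
# (allocate_stale_primary, allocate_empty_primary) can, and they require
# "accept_data_loss": true.
allocate_replica() {
  curl -s -X POST "$ES_URL/_cluster/reroute" \
    -H 'Content-Type: application/json' \
    -d "{\"commands\": [{\"allocate_replica\": {\"index\": \"$1\", \"shard\": $2, \"node\": \"$3\"}}]}"
}
```

Trying `retry_failed_allocations` first is usually the least invasive option. If the underlying cause is fixed, it often assigns everything without naming nodes by hand.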

Unassigned Shards FAQs

Q1. How can I check the status of unassigned shards in Elasticsearch?

A1. Elasticsearch provides an API endpoint to monitor the cluster health, including tracking unassigned shards. By querying the `_cluster/health` endpoint, you can obtain relevant information on the cluster’s health and unassigned shards.

Q2. Is it safe to manually allocate unassigned shards?

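A2. Allocating unassigned replicas manually with the reroute API's `allocate_replica` command does not risk data loss. Forcing a primary with `allocate_stale_primary` or `allocate_empty_primary` can lose data, which is why those commands require `"accept_data_loss": true`. In every case, understand the root cause and make sure the target node has sufficient resources before reallocating shards. Often Elasticsearch reassigns the shards itself once the cause is fixed, making manual allocation unnecessary.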

Q3. Can unassigned shards lead to data loss?

A3. Unassigned shards can increase the risk of data loss, as these shards are inaccessible until they are appropriately assigned. It is recommended to address unassigned shards promptly to minimize the potential for data loss.

Q4. How can I prevent unassigned shards?

A4. Regular monitoring of cluster health, maintaining adequate disk space, ensuring network stability, and performing routine backups are essential preventive measures. Additionally, keeping Elasticsearch and the underlying infrastructure up to date can also help avoid unassigned shard issues.

Conclusion

Unassigned shards can pose stability and performance challenges in an Elasticsearch cluster. Understanding the causes behind unassigned shards and implementing appropriate solutions is essential for ensuring an optimized cluster performance, minimizing data loss risks, and maintaining data availability. By proactively addressing unassigned shards, organizations can leverage the full potential of Elasticsearch for their search and analytics needs.

Check Shards In Elasticsearch

Introduction:

Elasticsearch is widely used as a search and analytics engine, known for its scalability and near real-time search capabilities. It utilizes a distributed architecture that breaks down data into smaller units called shards, distributed across multiple nodes in a cluster. This distribution allows for efficient indexing, searching, and data retrieval. However, ensuring the health and proper functioning of these shards is essential to maintain optimal performance and reliability within an Elasticsearch cluster. In this article, we will explore the significance of checking shards in Elasticsearch and discuss the best practices to ensure a healthy cluster.

Understanding Shards in Elasticsearch:

Before diving into the importance of checking shards, it is crucial to understand what they are and how they function. Sharding is the mechanism used to distribute data across multiple nodes, allowing Elasticsearch to scale horizontally. Each shard is a self-contained Lucene index holding a portion of the complete dataset. The mapping and settings are defined per Elasticsearch index and shared by all of its shards. By dividing data into multiple shards, Elasticsearch can distribute the processing workload, achieving higher search and indexing performance.

Why Should You Check Shards?

While Elasticsearch automatically handles shard allocation and rebalancing, it is crucial to monitor and verify the health of these shards. Checking shards helps detect and mitigate various issues that can significantly impact both performance and reliability:

1. Shard Failures: Shards can fail due to hardware or network failures, disk space constraints, or even human errors. Monitoring shard status helps identify these failures promptly, enabling quick recovery and minimizing the impact on the overall system.

2. Uneven Shard Distribution: Elasticsearch aims to distribute shards evenly across nodes within a cluster. An uneven distribution can result in some nodes being overloaded while others remain underutilized. Regular checks on shard allocation ensure a balanced distribution, enabling efficient resource utilization.

3. Data Loss: A faulty shard can lead to data loss, which can be catastrophic, especially for critical applications. Periodic checks help identify any missing or corrupted shards, allowing for data recovery and maintaining data integrity.

4. Performance Bottlenecks: Checking shards can help identify performance bottlenecks, such as slow queries or high resource utilization. Monitoring shard-level performance metrics allows for optimization and fine-tuning to ensure optimal performance across the cluster.

Best Practices for Checking Shards:

To maintain a healthy Elasticsearch cluster, it is essential to follow best practices while checking shards. Here are some key practices to consider:

1. Monitoring Cluster Health: Elasticsearch exposes APIs to track shard status, node availability, and resource utilization, such as the cluster health API, the nodes stats API, and the cat APIs. Stack Monitoring in Kibana, or third-party tools built on these APIs, provides proactive monitoring, alerting, and easier troubleshooting.

2. Shard Allocation Awareness: By default, Elasticsearch never places two copies of the same shard on the same node. Shard allocation awareness goes further. Given a node attribute such as a rack or availability zone, it spreads the copies of each shard across different attribute values, so losing a whole rack or zone does not take out every copy of a shard. Configuring it is relatively simple and significantly reduces the risk of data loss.

3. Regular Shard Recovery Checks: Proactively checking and monitoring shard recovery is crucial to identify issues affecting the replication process. Monitoring indices recovering from primary or replica shards helps detect failures and enables prompt troubleshooting to maintain data consistency.

4. Shard Rebalancing: A proper shard distribution is vital for optimal performance. Monitoring and tuning shard allocation is necessary to balance the load across nodes and avoid overloading any specific node. Elasticsearch provides tools like shard allocation filtering and throttling to ensure efficient shard reallocation during rebalancing.

5. Diagnostic Tools: Elasticsearch offers several diagnostic tools to analyze and troubleshoot shard-related issues. Tools like the Cluster Allocation Explain API can determine why a shard is not allocated to a specific node, providing insights for troubleshooting. Similarly, the Cluster State API helps visualize shard distribution, aiding in detecting potential problems or imbalances.
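Allocation awareness needs two pieces: a custom attribute on each node, and a cluster setting naming that attribute. The sketch below makes a few assumptions:

- The cluster is unsecured and reachable at `http://localhost:9200`; `ES_URL` overrides it.
- The attribute name `zone` and its values are hypothetical examples.
- The function names are hypothetical.

```shell
# Assumption: an unsecured cluster at localhost:9200; set ES_URL to override.
ES_URL="${ES_URL:-http://localhost:9200}"

# Prerequisite (static setting in elasticsearch.yml on every node, then restart):
#   node.attr.zone: zone-a      # zone-b, zone-c, ... on the other nodes
# "zone" is an arbitrary attribute name chosen for this example.

# Hypothetical helper: list the custom attributes each node reports.
show_node_attributes() {
  curl -s "$ES_URL/_cat/nodeattrs?v&h=node,attr,value"
}

# Hypothetical helper: spread the copies of each shard across attribute values.
enable_allocation_awareness() {
  local attribute="${1:-zone}"
  curl -s -X PUT "$ES_URL/_cluster/settings" \
    -H 'Content-Type: application/json' \
    -d "{\"persistent\": {\"cluster.routing.allocation.awareness.attributes\": \"$attribute\"}}"
}
```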

FAQs:

Q: How do I check the status of shards in Elasticsearch?

A: The cluster health API can be used to check the status of shards. By making a GET request to the endpoint “/_cluster/health”, you can retrieve information about the cluster, including shard status and health.

Q: How can I ensure even shard distribution across nodes?

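A: Elasticsearch's shard balancer distributes shards across nodes automatically. Index templates let you set `number_of_shards` for new indices, and choosing a shard count that fits your number of nodes makes an even spread easier. The `index.routing.allocation.total_shards_per_node` setting can also cap how many shards of one index a single node holds. The `_cat/allocation` API shows how many shards each node holds. The cluster allocation explain API can explain why a particular shard is or is not placed on a given node.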
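As a rough balance indicator, you can compare shard counts per node from `_cat/allocation`. The sketch below assumes an unsecured cluster at `http://localhost:9200` (override with `ES_URL`), and the function name is hypothetical.

- It prints the difference between the busiest and least busy node.
- It skips the `UNASSIGNED` row that `_cat/allocation` adds when shards are unassigned.

The balancer also weighs factors other than shard count, so a non-zero spread is not necessarily a problem.

```shell
# Assumption: an unsecured cluster at localhost:9200; set ES_URL to override.
ES_URL="${ES_URL:-http://localhost:9200}"

# Hypothetical helper: print max(shards per node) - min(shards per node).
shard_spread() {
  curl -s "$ES_URL/_cat/allocation?h=node,shards" |
    awk '$1 != "UNASSIGNED" {
           n = $2 + 0
           if (!seen || n < min) min = n
           if (!seen || n > max) max = n
           seen = 1
         }
         END { if (seen) print max - min }'
}
```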

Q: What is the impact of uneven shard distribution?

A: Uneven shard distribution can lead to resource imbalances, resulting in some nodes being overloaded while others remain underutilized. It can cause slower search and indexing performance on overloaded nodes and adversely affect query response times.

Q: Why should I monitor shard recoveries?

A: Monitoring shard recoveries is important to ensure that replicas are successfully synchronized with primary shards. Recovering from a failed primary shard or replica helps maintain data consistency and avoids data loss in case of failures.
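In-progress recoveries can be listed with the `_cat/recovery` API. The sketch below assumes an unsecured cluster at `http://localhost:9200` (override with `ES_URL`), and the function name is hypothetical.

```shell
# Assumption: an unsecured cluster at localhost:9200; set ES_URL to override.
ES_URL="${ES_URL:-http://localhost:9200}"

# Hypothetical helper: show only recoveries that are still running. For each,
# it lists the shard, the kind of recovery (e.g. peer or existing_store), its
# stage, the source and target nodes, and how much data has been copied.
show_active_recoveries() {
  curl -s "$ES_URL/_cat/recovery?active_only=true&v&h=index,shard,type,stage,source_node,target_node,bytes_percent"
}
```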

Q: Can I optimize shard performance?

A: Yes. You can enable the search slow log, which is configured through index settings such as `index.search.slowlog.threshold.query.warn`, to find slow queries. You can also tune system resources and redistribute shards to balance the load. Regular monitoring and performance tuning can improve overall cluster performance.
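The search slow log is enabled per index through its settings, and matching queries are written to the node's log files. The sketch below makes a few assumptions:

- The cluster is unsecured and reachable at `http://localhost:9200`; `ES_URL` overrides it.
- The function name is hypothetical.
- The threshold values are examples, not recommendations.

```shell
# Assumption: an unsecured cluster at localhost:9200; set ES_URL to override.
ES_URL="${ES_URL:-http://localhost:9200}"

# Hypothetical helper: enable the search slow log for one index.
# The thresholds are example values; pick ones that match your latency goals.
enable_search_slowlog() {
  curl -s -X PUT "$ES_URL/$1/_settings" \
    -H 'Content-Type: application/json' \
    -d '{
      "index.search.slowlog.threshold.query.warn": "10s",
      "index.search.slowlog.threshold.query.info": "5s",
      "index.search.slowlog.threshold.fetch.warn": "1s"
    }'
}
```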

Conclusion:

Checking shards in Elasticsearch is crucial for maintaining optimal performance and reliability within an Elasticsearch cluster. Regular monitoring of shard status, allocation, recovery, and performance allows for proactive troubleshooting and ensures the health of your Elasticsearch cluster. By following best practices and utilizing diagnostic tools provided by Elasticsearch, you can mitigate potential issues, prevent data loss, and enhance the efficiency of your Elasticsearch deployment.

Elasticsearch Unassigned Node_Left

In this article, we will explore the reasons behind the “unassigned node_left” error in Elasticsearch, how it affects the cluster, and what steps can be taken to resolve this issue. We will also provide answers to some frequently asked questions related to this topic.

Understanding the “unassigned node_left” error:

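A node can leave the cluster because of a hardware failure, a network interruption, or a restart. When that happens, every shard copy it held becomes unassigned, and Elasticsearch records `NODE_LEFT` as the reason, visible in the `unassigned.reason` column of `_cat/shards`. Strictly speaking, this is a status rather than an error message. If a lost primary has an in-sync replica on another node, that replica is promoted to primary. Reallocating the missing replicas is delayed by `index.unassigned.node_left.delayed_timeout` (one minute by default) in case the node comes back. Shards stay unassigned with this reason when no other node can take them.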

The impact of the “unassigned node_left” error:

The “unassigned node_left” error can lead to degraded performance and disruption in the cluster. If only replicas were lost, the cluster health is yellow and the remaining copies carry the whole search load. If a primary had no replica, the health is red and that shard's data is unavailable until the node returns. Recovering the shards onto other nodes also consumes disk I/O and network bandwidth.

Resolving the “unassigned node_left” error:

There are several approaches to resolving the “unassigned node_left” error in Elasticsearch. Here are some possible steps that can be taken:

1. Investigate the cause: The first step is to identify the reason behind the node leaving the cluster. Check the logs and system metrics to see if there were any hardware or network issues. It is also crucial to ensure that proper monitoring tools are in place to identify potential problems before they escalate.

2. Reallocate unassigned shards: Once the cause is resolved, Elasticsearch normally reallocates the shards on its own. The shards go back to the returning node or, after the delay expires, to other nodes. If they stay unassigned, use the cluster allocation explain API to find out why. Then use the cluster reroute API to allocate them manually or to retry failed allocations.

3. Adjust shard allocation settings: Elasticsearch allows users to configure shard allocation settings. By modifying these settings, you can control how the shards are assigned and distributed across the cluster. For example, you can adjust the “shard allocation awareness” settings to ensure that shards are allocated across different racks or availability zones, minimizing the impact of nodes leaving the cluster.

4. Increase shard replica count: In Elasticsearch, shards can be replicated and distributed across multiple nodes. By increasing the shard replica count, you can ensure that there are enough copies of each shard available in the cluster. This redundancy helps improve fault tolerance, as there will be replicas available even if a node leaves the cluster unexpectedly.

5. Tune delayed allocation: The `index.unassigned.node_left.delayed_timeout` setting controls how long Elasticsearch waits for a departed node to return before reallocating its replicas elsewhere. A longer delay avoids expensive shard copying during brief restarts, while a shorter one restores redundancy sooner. Change it carefully, as an unsuitable value can cause unnecessary data movement or prolonged periods without redundancy.
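Here is a minimal sketch of changing the delay for all existing indices. It assumes an unsecured cluster at `http://localhost:9200` (override with `ES_URL`), and the function name is hypothetical. New indices pick the value up only if it is also set in an index template.

```shell
# Assumption: an unsecured cluster at localhost:9200; set ES_URL to override.
ES_URL="${ES_URL:-http://localhost:9200}"

# Hypothetical helper: set how long Elasticsearch waits for a departed node
# to come back before reallocating its replica shards elsewhere (default 1m).
# Usage: set_node_left_delay 5m
set_node_left_delay() {
  curl -s -X PUT "$ES_URL/_all/_settings" \
    -H 'Content-Type: application/json' \
    -d "{\"settings\": {\"index.unassigned.node_left.delayed_timeout\": \"$1\"}}"
}
```

For example, `set_node_left_delay 5m` before a planned maintenance window gives a rebooting node five minutes to rejoin before its replicas are copied elsewhere.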

FAQs:

Q: Can the “unassigned node_left” error occur in a single node Elasticsearch cluster?

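A: Not in the usual sense. `NODE_LEFT` is recorded when a node leaves a cluster that keeps running, so it requires at least two nodes. A single-node cluster can still have unassigned shards for other reasons. Replicas, for instance, can never be assigned there, because a replica is never allocated to the same node as its primary.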

Q: Is it possible to manually assign shards after the “unassigned node_left” error occurs?

A: Yes, Elasticsearch provides APIs to manually assign shards. By using the reroute API, you can allocate shards to specific nodes, ensuring that the unallocated shards are assigned properly.

Q: How can I prevent the “unassigned node_left” error from occurring?

A: While it is not possible to completely eliminate the risk of node failures, there are measures you can take to minimize the likelihood of “unassigned node_left” errors. These include implementing proper monitoring and alerting systems, configuring fault-tolerant hardware and network setups, and following best practices for cluster management and configuration.

Q: Is it recommended to increase the shard replica count to avoid the “unassigned node_left” error?

A: Increasing the replica count improves availability and fault tolerance, and it can raise search throughput. When a node leaves, the cluster keeps serving data from the remaining copies. Each replica costs additional disk space and memory, though. A replica can also only be assigned to a node that does not already hold a copy of that shard, so the replica count should stay below the number of data nodes.

In conclusion, the “unassigned node_left” error in Elasticsearch can be disruptive to the cluster’s performance. Understanding the causes behind this error and taking appropriate measures to resolve it can help ensure a stable and efficient Elasticsearch deployment. By investigating the cause, reallocating unassigned shards, adjusting allocation settings, increasing shard replica count, and tuning auto-recovery settings, users can mitigate the impact of this error and maintain a healthy Elasticsearch cluster.

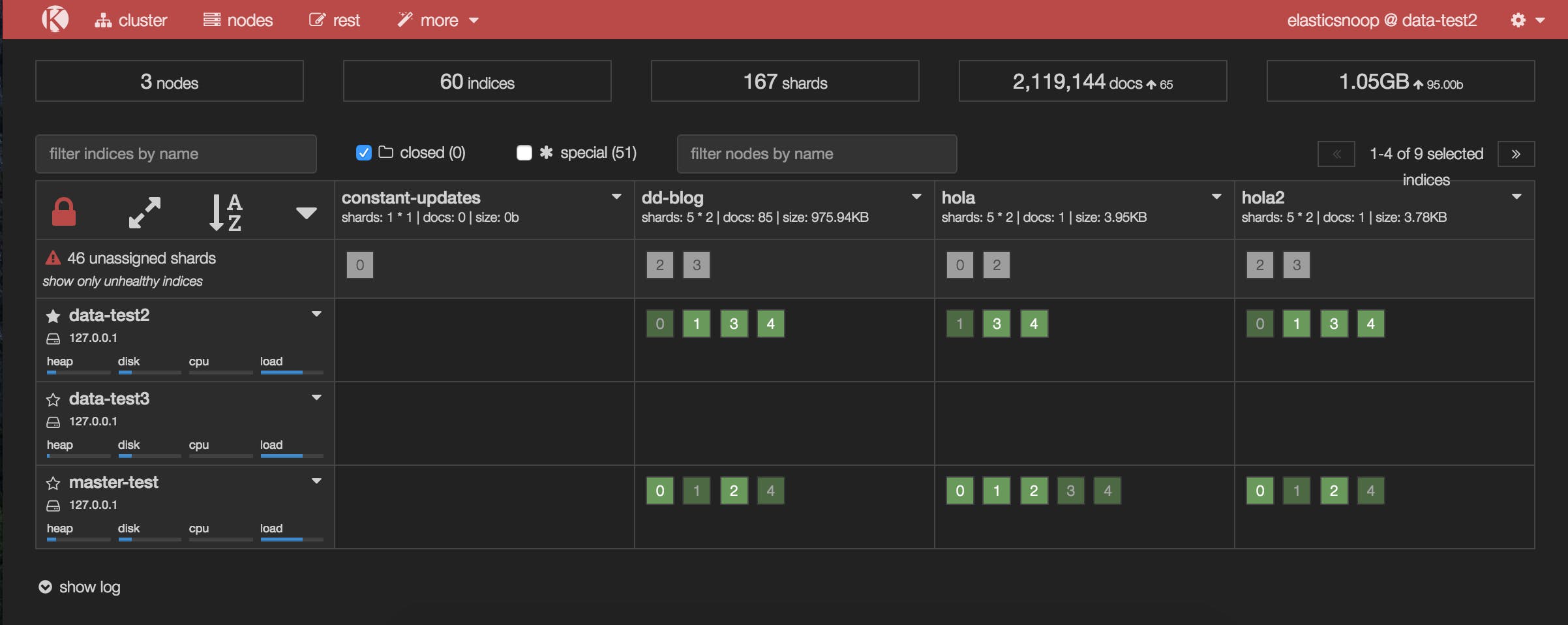

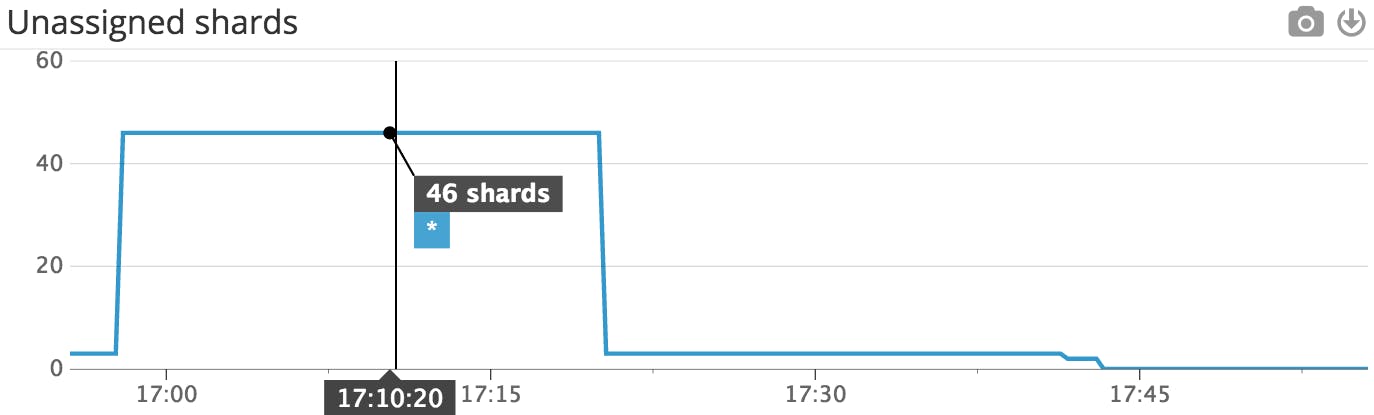

Images related to the topic delete unassigned shards elasticsearch

Found 41 images related to delete unassigned shards elasticsearch theme

![Elasticsearch 7.6] How do I fix unassigned shards issue - Elasticsearch - Discuss the Elastic Stack Elasticsearch 7.6] How Do I Fix Unassigned Shards Issue - Elasticsearch - Discuss The Elastic Stack](https://global.discourse-cdn.com/elastic/optimized/3X/b/6/b6a26cf4e8bb0d03a188c5dc760a7f993ba5fd9c_2_690x220.png)

Article link: delete unassigned shards elasticsearch.

Learn more about the topic delete unassigned shards elasticsearch.

- How to Delete Elasticsearch Unassigned Shards in 4 Easy …

- How can I delete unnassigned shards? – Elastic Discuss

- How to delete unassigned shards in elasticsearch?

- ELK: Deleting unassigned shards to restore cluster health

- How to delete a specific shard of an ElasticSearch index – Stack Overflow

- Elasticsearch Shards – How to Handle Unassigned Shards and More

- Delete unassigned shards – Google Groups

- Delete unassigned shards from Elasticsearch – GitHub Gist

- How to Resolve Unassigned Shards in Elasticsearch – Datadog

- Delete unassigned shards in Elasticsearch

- Resolving red cluster or UNASSIGNED shards – IBM

- Elasticsearch unassigned replica shards on single node …

See more: https://nhanvietluanvan.com/luat-hoc