CUDA Out of Memory

CUDA (Compute Unified Device Architecture) is a parallel computing platform and programming model developed by NVIDIA. It allows developers to utilize the power of NVIDIA GPUs (Graphics Processing Units) to accelerate various computing tasks. However, when working with CUDA, it is not uncommon to encounter “CUDA out of memory” errors. In this article, we will explore what this error means, the reasons behind it, and strategies to fix it.

What Does “CUDA Out of Memory” Mean?

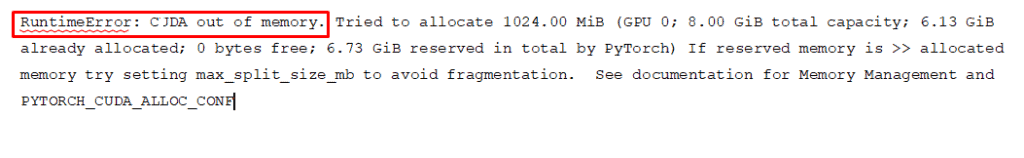

When you see the “CUDA out of memory” error, it means that the GPU does not have enough free memory to satisfy an allocation requested by some operation or task. GPUs have a limited amount of memory, and if a request cannot be met, the CUDA runtime returns an out-of-memory error, which frameworks such as PyTorch surface as an exception. This error can occur in various scenarios, such as when training deep learning models, running large-scale simulations, or processing massive amounts of data.

Reasons for CUDA Out of Memory Errors:

1. Insufficient GPU Memory:

The most common reason for CUDA out of memory errors is simply not having enough GPU memory to perform the desired computations. This can happen if your GPU has a low memory capacity, or if your workload exceeds the available memory due to large model sizes or inputs.
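As a rough sanity check on whether a model can fit, the memory tied to its parameters can be estimated from the parameter count alone. The sketch below is a back-of-the-envelope estimate, not a measurement. The function names and the 1-billion-parameter figure are illustrative assumptions, float32 storage and an Adam-style optimizer are assumed, and activations, the CUDA context, and allocator overhead are ignored.

```python
# Rough estimate of parameter-related memory; ignores activations and overhead.

def estimate_weights_gib(num_params: int, bytes_per_param: int = 4) -> float:
    """Memory for the weights alone, in GiB (float32 = 4 bytes by default)."""
    return num_params * bytes_per_param / 2**30


def estimate_adam_training_gib(num_params: int) -> float:
    """Weights + gradients + two Adam moment buffers, all assumed float32."""
    copies = 4  # weights, gradients, first moment, second moment
    return copies * estimate_weights_gib(num_params, bytes_per_param=4)


if __name__ == "__main__":
    n = 1_000_000_000  # hypothetical 1-billion-parameter model
    print(f"weights only:        {estimate_weights_gib(n):.2f} GiB")
    print(f"with Adam training:  {estimate_adam_training_gib(n):.2f} GiB")
```

If the estimate already approaches the capacity of the GPU, the workload is unlikely to fit once activations are added.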

2. Oversubscription of GPU Memory:

Sometimes, multiple processes or tasks running concurrently on the GPU can collectively consume more memory than what is available. This can lead to CUDA out of memory errors, as there is not enough memory to satisfy the memory demands of all the tasks.

3. Memory Fragmentation:

Memory fragmentation refers to the phenomenon where free memory on the GPU is divided into small, non-contiguous chunks. When allocating memory, if the required memory size cannot be accommodated in any of the available memory chunks due to fragmentation, the CUDA runtime reports an out of memory error.
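A toy model can make this concrete. The sketch below does not talk to a GPU. It represents free memory as a hypothetical list of (offset, size) blocks in MiB and shows that a request can fail even though the total free memory is larger than the request.

```python
# Toy model of a fragmented device heap: (offset, size) free blocks in MiB.

def total_free(free_blocks):
    """Sum of all free block sizes."""
    return sum(size for _, size in free_blocks)


def largest_free(free_blocks):
    """Size of the largest single free block (0 if there are none)."""
    return max((size for _, size in free_blocks), default=0)


def can_allocate(free_blocks, request):
    """A contiguous allocation needs one free block at least as large as the request."""
    return largest_free(free_blocks) >= request


free_blocks = [(0, 200), (512, 150), (1024, 250), (2048, 100)]  # hypothetical layout
request = 400

print(total_free(free_blocks))             # 700 MiB free in total
print(can_allocate(free_blocks, request))  # False: no single block holds 400 MiB
```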

4. Large Batch Sizes or Input Sizes:

Using large batch sizes or input sizes can quickly exhaust the available GPU memory, because the memory needed for intermediate results grows roughly in proportion to both. This is particularly common in deep learning applications, where larger batches are often used to improve throughput and to obtain less noisy gradient estimates. However, they also cause CUDA out of memory errors if the GPU memory is not sufficient.
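If per-sample memory is roughly constant, the largest batch that fits can be estimated with simple arithmetic. The sketch below assumes a linear model of memory use (a fixed part plus a per-sample part). The function name and all the numbers are illustrative assumptions that would have to be measured for a real workload.

```python
def max_batch_size(free_mib: float, fixed_mib: float, per_sample_mib: float) -> int:
    """Largest batch whose estimated footprint fits in free_mib.

    Assumes memory = fixed_mib (weights, optimizer state) + batch * per_sample_mib.
    """
    budget = free_mib - fixed_mib
    if budget <= 0:
        return 0  # even an empty batch does not fit
    return int(budget // per_sample_mib)


# Hypothetical numbers: 8 GiB free, 3000 MiB fixed, 150 MiB per sample.
print(max_batch_size(free_mib=8192, fixed_mib=3000, per_sample_mib=150))  # 34
```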

5. Memory Leaks:

Memory leaks occur when allocated memory is not properly deallocated or released after its intended use. Over time, these leaks can accumulate and consume a significant portion of GPU memory, leading to CUDA out of memory errors.

6. Inefficient Memory Allocation:

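Inefficient memory management, such as frequent memory reallocations or keeping redundant copies of data on the device, raises peak usage and worsens fragmentation, which can contribute to CUDA out of memory errors. Unnecessary memory copies between the host (CPU) and the device (GPU) mainly cost time, but the staging buffers they use also occupy memory.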

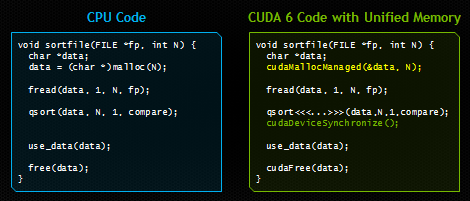

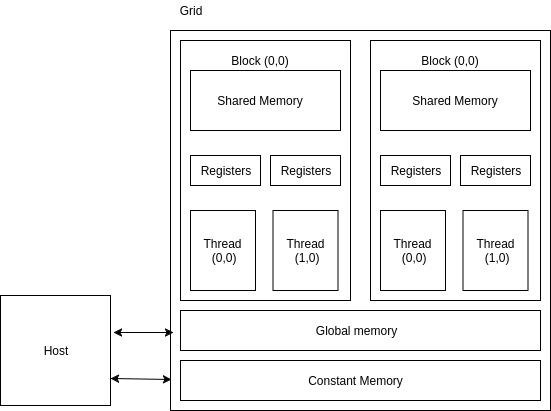

Memory Management in CUDA:

To effectively manage memory in CUDA, it is essential to understand the memory hierarchy and various memory types available:

1. Global Memory: This is the largest and slowest memory type available on the GPU, and it is the memory that “CUDA out of memory” errors refer to. It is accessible by all threads, and allocations persist until they are freed or the owning CUDA context is destroyed.

2. Shared Memory: This on-chip memory is shared among the threads of a thread block and is much faster than global memory. It allows for fast data access and communication between threads in the same block.

3. Local Memory: Each thread has its own local memory, which is reserved automatically by the compiler and runtime. Despite its name, it resides in device memory and holds register spills, large per-thread arrays, and function call stack frames.

4. Constant Memory: Constant memory is read-only from within kernels and is cached, which makes it fast for data that many threads read at once. It is small (64 KB) and is especially useful for storing constant values or lookup tables.

Strategies to Fix CUDA Out of Memory Errors:

1. Reducing Model Complexity:

One approach to mitigate CUDA out of memory errors is to reduce the complexity of the model or algorithm being used. This can involve reducing the number of layers or features in a deep learning model, simplifying the computations, or using more memory-efficient algorithms.

2. Decreasing Batch Sizes or Input Sizes:

Reducing the batch size or input size can help alleviate the memory demands and prevent CUDA out of memory errors. However, it is important to find a suitable balance as decreasing the batch size too much can adversely affect the model’s performance or convergence.
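One common way to find a workable batch size is to start high and halve it whenever a step runs out of memory. The sketch below shows only that control flow. `run_step` and `fake_step` are hypothetical stand-ins for a real training or inference step, and Python's built-in `MemoryError` stands in for the framework's out-of-memory exception (in recent PyTorch versions that would be `torch.cuda.OutOfMemoryError`, passed in as `oom_error`).

```python
from typing import Callable


def find_working_batch_size(run_step: Callable[[int], None],
                            start: int,
                            oom_error: type = MemoryError) -> int:
    """Halve the batch size until run_step succeeds; return the size that worked."""
    batch = start
    while batch >= 1:
        try:
            run_step(batch)
            return batch
        except oom_error:
            batch //= 2  # back off and try again with half the batch
    raise RuntimeError("even batch size 1 does not fit")


def fake_step(batch_size: int) -> None:
    # Stand-in for a real step: pretend anything above 12 samples runs out of memory.
    if batch_size > 12:
        raise MemoryError("simulated out of memory")


print(find_working_batch_size(fake_step, start=64))  # 8
```

The search tries 64, 32, and 16 before succeeding at 8. A real implementation would also need to release any partially allocated tensors before retrying.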

3. Using GPU Memory Optimization Techniques:

Optimizing the GPU memory usage can involve various techniques, such as storing tensors in compressed or lower-precision formats, pruning weights, or using mixed precision training. These techniques aim to reduce the memory footprint without significant loss in performance or accuracy.
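The saving from a lower-precision format follows directly from the element size. The sketch below computes the size of a dense tensor for a few data types. The helper name and the example shape are illustrative assumptions, and real frameworks add some allocator overhead on top.

```python
from math import prod

# Bytes per element for a few common data types.
BYTES_PER_ELEMENT = {"float32": 4, "float16": 2, "bfloat16": 2, "int8": 1}


def tensor_mib(shape, dtype="float32"):
    """Size in MiB of a dense tensor with the given shape and dtype."""
    return prod(shape) * BYTES_PER_ELEMENT[dtype] / 2**20


activation_shape = (32, 256, 64, 64)  # hypothetical batch of feature maps

print(tensor_mib(activation_shape, "float32"))  # 128.0
print(tensor_mib(activation_shape, "float16"))  # 64.0
```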

4. Implementing Memory Usage Monitoring:

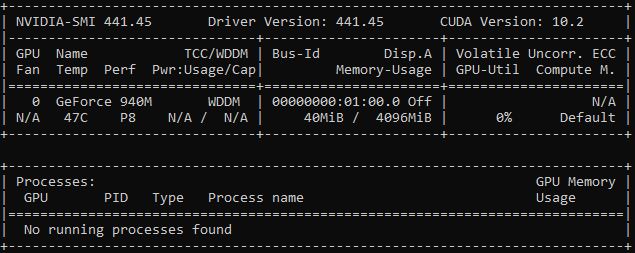

Monitoring the GPU memory usage during runtime can help identify potential memory bottlenecks and enable better memory management. Tools like NVIDIA System Management Interface (nvidia-smi) or GPU memory profilers can provide valuable insights into memory usage patterns.
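For scripted monitoring, nvidia-smi can print memory figures as CSV, which is easy to parse. The sketch below assumes the `--query-gpu=memory.used,memory.total` and `--format=csv,noheader,nounits` options and that every field is a plain integer in MiB. The sample text is made up for illustration, and the function names are hypothetical.

```python
import shutil
import subprocess

QUERY = ["nvidia-smi", "--query-gpu=memory.used,memory.total",
         "--format=csv,noheader,nounits"]


def parse_memory_csv(text: str):
    """Turn lines like '1234, 8192' into (used_mib, total_mib) tuples, one per GPU."""
    rows = []
    for line in text.strip().splitlines():
        used, total = (int(field.strip()) for field in line.split(","))
        rows.append((used, total))
    return rows


def query_gpu_memory():
    """Return per-GPU (used, total) in MiB, or [] if nvidia-smi is not installed."""
    if shutil.which("nvidia-smi") is None:
        return []
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return parse_memory_csv(out)


if __name__ == "__main__":
    sample = "1234, 8192\n40, 8192\n"  # made-up sample output for two GPUs
    for used, total in parse_memory_csv(sample):
        print(f"{used} / {total} MiB ({100 * used / total:.1f}% used)")
```

Calling `query_gpu_memory()` in a loop during a run gives a simple usage trace without any extra dependencies.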

5. Debugging and Profiling Tools for Memory Management:

NVIDIA provides debugging and profiling tools that can assist in identifying memory-related issues, such as memory leaks or inefficient memory allocations. Tools like Compute Sanitizer (the successor to the now-deprecated CUDA-MEMCHECK) and NVIDIA Nsight can help detect and troubleshoot memory problems.

6. Optimizing Memory Allocation Strategies:

Efficient memory allocation strategies, such as memory pooling or reuse, can help minimize memory fragmentation and improve the overall memory management. Choosing appropriate memory allocation/deallocation functions and optimizing memory transfers between the host and device are also crucial.
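The idea behind pooling can be shown without a GPU. The sketch below is a toy pool in plain Python that keeps released buffers and hands them out again for requests of the same size. `BufferPool` is a hypothetical class, and `bytearray` stands in for a device allocation. Caching allocators in deep learning frameworks follow a broadly similar idea with far more sophistication.

```python
from collections import defaultdict


class BufferPool:
    """Toy pool that reuses released buffers of the same size instead of reallocating."""

    def __init__(self):
        self._free = defaultdict(list)  # size -> list of idle buffers
        self.fresh_allocations = 0      # how many times a new buffer was created

    def acquire(self, size: int) -> bytearray:
        if self._free[size]:
            return self._free[size].pop()  # reuse an idle buffer
        self.fresh_allocations += 1
        return bytearray(size)             # stand-in for a device allocation

    def release(self, buf: bytearray) -> None:
        self._free[len(buf)].append(buf)   # keep the buffer for later reuse


pool = BufferPool()
for _ in range(100):
    buf = pool.acquire(1024)
    pool.release(buf)

print(pool.fresh_allocations)  # 1
```

One hundred acquire/release cycles cost a single underlying allocation, which is what keeps the heap from being carved into small pieces.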

Closing Remarks:

“CUDA out of memory” errors are a common challenge that developers and researchers face when working with GPU-accelerated computations. Understanding the reasons behind these errors and implementing effective strategies to resolve them can significantly enhance the performance and scalability of CUDA applications. By optimizing memory usage, reducing model complexity, and using suitable debugging and profiling tools, developers can overcome CUDA out of memory errors and harness the full potential of NVIDIA GPUs for their computational tasks.

FAQs:

Q: What should I do if I encounter a “CUDA out of memory” error?

A: If you encounter a “CUDA out of memory” error, consider reducing the model complexity, decreasing batch sizes or input sizes, using GPU memory optimization techniques, implementing memory usage monitoring, or optimizing memory allocation strategies.

Q: How can I monitor GPU memory usage during runtime?

A: Monitoring GPU memory usage can be done using tools like NVIDIA System Management Interface (nvidia-smi), GPU memory profilers, or by calling runtime APIs provided by CUDA, such as cudaMemGetInfo.

Q: What are some common causes of CUDA out of memory errors?

A: Some common causes include insufficient GPU memory, oversubscription of GPU memory, memory fragmentation, large batch sizes or input sizes, memory leaks, and inefficient memory allocation.

Q: Are there any debugging and profiling tools available for memory management in CUDA?

A: Yes, NVIDIA provides tools like Compute Sanitizer (the successor to the now-deprecated CUDA-MEMCHECK) and NVIDIA Nsight that can help detect and troubleshoot memory-related issues in CUDA applications.

Q: Can reducing the batch size or input size help prevent CUDA out of memory errors?

A: Yes, decreasing the batch size or input size can reduce the memory demands and help prevent CUDA out of memory errors. However, it is important to find a suitable balance to avoid adverse effects on model performance.


CUDA Out of Memory in Stable Diffusion

Stable Diffusion is a text-to-image latent diffusion model, and running it is one of the most common ways users meet the “CUDA out of memory” error. In this section, we discuss why memory management matters when running Stable Diffusion, what careful memory management gains you, and strategies for implementing it. We also address a few frequently asked questions (FAQs).

Why memory management matters for Stable Diffusion:

One of the key challenges in parallel computing is the limited memory capacity of GPUs. Stable Diffusion must keep the model weights and the intermediate tensors of each denoising step on the GPU. When that total exceeds the available GPU memory, the result is an OOM error that halts the execution of the program. Careful memory management, meaning deliberate choices about how memory is allocated, reused, and sized, lets memory-intensive workloads like this run on GPUs with limited memory.

Benefits of careful memory management:

1. Enhanced capabilities:

By managing memory efficiently, you can run larger models, higher resolutions, and bigger batches than a naive setup would allow on the same GPU. The same applies to other memory-intensive workloads in fields like computer graphics, deep learning, and scientific simulations.

2. Increased efficiency:

Avoiding OOM errors means fewer crashed runs and restarts. It also lets developers use more of the available GPU memory productively, which can lead to faster and smoother execution of programs.

3. Cost-saving:

Optimizing memory resources reduces the need to upgrade GPU hardware to overcome memory limitations. This can result in cost savings for organizations, as they can run the same workloads without investing in additional hardware.

Implementation strategies:

1. Memory pooling:

Memory pooling is a common approach. It involves creating a pool of memory blocks that are handed out and returned as needed during program execution, instead of allocating from and freeing to the driver each time. This reduces the fragmentation that can occur when memory is frequently allocated and deallocated, and makes better use of GPU memory.

2. Memory reordering:

Another strategy is to reorder or plan memory allocations, for example by grouping buffers with similar sizes and lifetimes, to minimize fragmentation and maximize memory utilization.

3. Data compression:

Data can also be stored in a more compact form to reduce memory usage, for example by using lower-precision data types. Storing data compactly lets more of it fit within the limited memory capacity.

4. Adaptive memory management:

Adaptive memory management techniques adjust memory usage at run time based on program requirements. They monitor how much memory is in use and adapt accordingly, for example by moving data that is not currently needed to host memory, which helps prevent OOM errors.

FAQs:

Q1. Can these techniques completely eliminate OOM errors?

A1. No. They significantly reduce how often OOM errors occur, but they do not guarantee their elimination. A workload can still need more memory than the GPU has, especially at very high resolutions or with very large batches.

Q2. Are there any performance implications?

A2. Yes. Memory-saving techniques may introduce some overhead from the additional management work, and some of them trade speed for memory. However, the benefit of being able to run the workload at all generally outweighs this cost.

Q3. Can these techniques be used with any GPU?

A3. They can be applied on any GPU that supports CUDA. However, their effectiveness varies with the specific GPU’s memory capacity and capabilities.

Q4. Are there any limitations or challenges?

A4. One limitation is that as the complexity and memory requirements of a workload increase, it becomes harder to avoid OOM errors entirely. Additionally, implementing these techniques may require additional development effort and expertise.

In conclusion, careful memory management is what makes it possible to run Stable Diffusion and other memory-intensive workloads within the limits of GPU memory capacity. Strategies like memory pooling, memory reordering, data compression, and adaptive memory management improve memory utilization. While they may not completely eliminate OOM errors, they extend what a given GPU can run, improve efficiency, and can lead to cost savings.

OutOfMemoryError: CUDA Out of Memory in Stable Diffusion

Introduction

When working with large inputs or running complex models, encountering out of memory errors is not uncommon. In the field of GPU computing, CUDA is a widely used parallel computing platform and programming model that enables developers to utilize the power of graphics processing units (GPUs) for general-purpose computing. However, CUDA applications are not immune to out of memory errors, and one that Stable Diffusion users often come across is “OutOfMemoryError: CUDA out of memory.” In this article, we will delve into the details of this error, understand its possible causes, and explore potential solutions to overcome it.

Understanding the Error

This error occurs when Stable Diffusion, running on a GPU through PyTorch, requests more GPU memory than is available. Stable Diffusion is a latent diffusion model that generates images from text prompts: starting from random noise, a neural network iteratively denoises a compressed (latent) representation, which is then decoded into the final image. The model weights and the intermediate tensors of each denoising step must all fit in GPU memory.

Causes of the Error

Several factors can contribute to this error. Let’s explore some of the common causes:

1. Insufficient GPU memory: This is the most common cause of the error. When the GPU does not have enough memory to hold the model weights and the intermediate data needed for generation, the out of memory error is triggered.

2. Large images or batches: Memory use grows with image resolution and with the number of images generated at once. Requesting high-resolution images or large batches is the situation in which the error most often arises.

3. Inefficient memory management: Other processes occupying the GPU, memory leaks, or excessive memory consumption in the application can eventually cause the out of memory error.
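To see why resolution matters so much, consider the attention score tensor, whose size grows with the square of the number of latent positions. The sketch below is a rough estimate under stated assumptions, not a measurement of any particular implementation: it assumes the image is downscaled by a factor of 8 into latent space, 8 attention heads, half precision (2 bytes per element), and a naive attention that materializes the full score matrix at the highest latent resolution. Memory-efficient attention implementations avoid exactly this tensor.

```python
def naive_attention_scores_mib(height: int, width: int, heads: int = 8,
                               batch: int = 1, bytes_per_element: int = 2,
                               downscale: int = 8) -> float:
    """Size in MiB of one full (tokens x tokens) attention score tensor.

    All defaults are assumptions for illustration; see the text above.
    """
    tokens = (height // downscale) * (width // downscale)
    return batch * heads * tokens * tokens * bytes_per_element / 2**20


print(naive_attention_scores_mib(512, 512))    # 256.0
print(naive_attention_scores_mib(1024, 1024))  # 4096.0
```

Doubling the width and height multiplies this one tensor by 16 under these assumptions, which is why a setup that works at 512x512 can fail at 1024x1024.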

Solutions

Now that we understand the potential causes of this error, let’s discuss some strategies to overcome it:

1. Reduce input data size: If generation exceeds the available GPU memory, lower the image resolution or the batch size. A smaller image can be upscaled afterwards if needed.

2. Optimize memory usage: Evaluate where memory goes and reduce it. This can be achieved by reusing memory buffers, using smaller data types such as half precision (float16) when possible, and deallocating unused memory promptly.

3. Use memory-efficient algorithms: Explore memory-efficient implementations of the expensive steps, such as attention computed in slices rather than all at once. These may sacrifice some speed, but they can help overcome the memory limitations.

4. Utilize memory transfers: If the data is too large to fit in the GPU memory but can be divided into smaller chunks, load portions of it into GPU memory selectively and process them one after another. Processing in smaller batches reduces the peak memory requirement.

5. Upgrade GPU hardware: If none of the above solutions are sufficient, it may be necessary to upgrade the GPU hardware to one with more memory capacity. This can provide a more permanent solution to the out of memory error.
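The chunked processing described in item 4 can be sketched in a few lines. The example below runs entirely on the CPU: `process_on_device` is a hypothetical stand-in for copying a chunk to the GPU, running a kernel, and copying the result back, and the doubling it performs is only a placeholder computation.

```python
from typing import Iterator, List, Sequence


def chunks(data: Sequence[float], chunk_size: int) -> Iterator[Sequence[float]]:
    """Yield consecutive slices of data with at most chunk_size elements each."""
    for start in range(0, len(data), chunk_size):
        yield data[start:start + chunk_size]


def process_on_device(chunk: Sequence[float]) -> List[float]:
    # Stand-in for: copy chunk to the GPU, run the computation, copy the result back.
    return [x * 2.0 for x in chunk]


def process_in_chunks(data: Sequence[float], chunk_size: int) -> List[float]:
    """Process data piece by piece so only one chunk is 'on the device' at a time."""
    results: List[float] = []
    for chunk in chunks(data, chunk_size):
        results.extend(process_on_device(chunk))
    return results


print(process_in_chunks(list(range(10)), chunk_size=4))
```

Peak device memory is then bounded by the chunk size rather than by the size of the whole input, at the cost of more host-to-device transfers.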

FAQs

1. Can I encounter this error even if I have a powerful GPU?

Yes, it is possible to encounter this error even with a powerful GPU. What matters is memory capacity rather than compute speed. If the requested resolution and batch size need more memory than the GPU provides, the error will occur.

2. Are there any tools available to diagnose memory-related issues in CUDA applications?

Yes, NVIDIA provides tools such as Compute Sanitizer (the successor to CUDA-MEMCHECK) and the Nsight profilers that can help diagnose and debug memory-related issues in CUDA applications. These tools can provide insights into invalid memory accesses, memory leaks, and other memory-related problems.

3. Can I increase the GPU memory capacity?

No, it is not possible to increase the GPU memory capacity once a GPU is manufactured. The memory capacity is one of the hardware limitations of the GPU and cannot be changed. Upgrading the GPU to a model with higher memory capacity is the only solution to increase the available memory.

4. How can I check the memory usage of my CUDA application?

You can monitor the memory usage of your CUDA application using tools provided by NVIDIA, such as Nsight Systems (which supersedes the older NVIDIA Visual Profiler, nvvp) or NVIDIA System Management Interface (nvidia-smi). These tools can provide detailed information about memory utilization, allowing you to identify potential bottlenecks or memory-related issues.

5. Are there any software libraries or frameworks that can help manage GPU memory efficiently?

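Yes. CUDA Unified Memory (UM), a feature of CUDA itself rather than a separate library, provides a single address space shared by the CPU and GPU and migrates data between them automatically. On supported platforms this allows allocations larger than GPU memory, at the cost of speed. Deep learning frameworks such as PyTorch also manage GPU memory for you through their own caching allocators. These mechanisms let developers focus on the algorithmic aspects of their applications while leaving much of the memory management to the runtime or framework.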

Conclusion

“OutOfMemoryError: CUDA out of memory” is a common error when running Stable Diffusion. Insufficient GPU memory, large images or batches, and inefficient memory management are some of the key contributors to this error. By reducing input size, optimizing memory usage, and considering memory-efficient alternatives, users can overcome this error. Additionally, processing data in chunks and upgrading GPU hardware can provide further solutions. Remember to monitor memory usage and utilize tools provided by NVIDIA for debugging and profiling purposes to ensure efficient memory management in your CUDA applications.

CUDA Out of Memory in PyTorch

Deep learning has revolutionized various fields such as computer vision, natural language processing, and speech recognition. PyTorch, an open-source deep learning framework, has gained significant popularity due to its flexibility and easy-to-use interface. However, when working with large datasets or complex models, one common issue that arises is running into CUDA out of memory errors. In this article, we will dive deep into understanding these errors and explore ways to handle them effectively.

Understanding CUDA out of Memory Errors

CUDA out of memory errors occur when the GPU does not have enough free memory for the current operation. GPU memory is usually much smaller than host (CPU) RAM, and complex models or large batches can quickly consume all of it. When this happens, PyTorch raises an exception (torch.cuda.OutOfMemoryError in recent versions) indicating that the CUDA memory has been exhausted.

Possible Causes of CUDA out of Memory Errors

1. Model Size: Deep learning models with a large number of parameters require more memory. Increasing the size of the model will increase the memory demand on the GPU.

2. Batch Size: Larger batch sizes process more samples simultaneously, but they also require more memory. Higher batch sizes can quickly exhaust GPU memory.

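3. Input Data Size: Large individual inputs, such as high-resolution images or long sequences, can consume a significant amount of GPU memory, especially when combined with larger models or batch sizes. The size of the dataset as a whole matters less, since only the current batch needs to be on the GPU.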

4. Forward and Backward Pass: Each forward and backward pass allocates memory for intermediate results. Complex models with many layers or operations can quickly consume GPU memory during training.

Strategies to Avoid CUDA out of Memory Errors

1. Reduce Batch Size: Decreasing the batch size reduces the memory requirement for each iteration. However, smaller batches give noisier gradient estimates and use the GPU less efficiently, which can slow down training.

2. Reduce Model Size: Consider optimizing the model architecture by reducing the number of layers or decreasing the number of parameters. This can significantly reduce the memory footprint.

3. Use Mixed Precision Training: PyTorch provides automatic mixed precision (torch.autocast together with a gradient scaler), which runs many operations in half precision (float16 or bfloat16) instead of single precision (float32). This mainly reduces the memory used by activations.

4. Gradient Accumulation: Instead of performing weight updates after every batch, accumulate gradients over multiple small mini-batches before updating the model. Only one small mini-batch of activations is in memory at a time, so this allows training with larger effective batch sizes without the memory cost of a large batch.

5. Data Augmentation and Image Loading: On-the-fly data augmentation techniques like random cropping or flipping can be applied during training, reducing the need to load and store additional images in memory. This mainly saves host memory and disk space rather than GPU memory.

6. DataLoader Configuration: Set the num_workers and pin_memory options of the PyTorch DataLoader appropriately. These control host-side loading and the speed of host-to-GPU transfers. They do not reduce GPU memory use, and pinned memory and additional workers increase host memory use.
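Gradient accumulation (item 4) works because the average gradient over a large batch equals the sum of suitably scaled gradients over its micro-batches. The sketch below checks this numerically for a one-parameter least-squares model in plain Python. The model, the data, and the function names are illustrative assumptions, and equal-sized micro-batches are assumed.

```python
def mse_grad(w, xs, ys):
    """Gradient of mean((w*x - y)^2) with respect to w."""
    n = len(xs)
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n


def accumulated_grad(w, xs, ys, micro_batch):
    """Sum micro-batch gradients, each scaled by 1/accum_steps."""
    assert len(xs) % micro_batch == 0, "sketch assumes equal-sized micro-batches"
    accum_steps = len(xs) // micro_batch
    total = 0.0
    for i in range(0, len(xs), micro_batch):
        # Only this slice would need to be on the GPU at this point.
        total += mse_grad(w, xs[i:i + micro_batch], ys[i:i + micro_batch]) / accum_steps
    return total


xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [2.0, 4.1, 5.9, 8.2, 9.8, 12.1, 14.0, 15.9]

full = mse_grad(0.5, xs, ys)
accum = accumulated_grad(0.5, xs, ys, micro_batch=2)
print(abs(full - accum) < 1e-9)  # True
```

In a training loop, the same scaling is usually applied by dividing each micro-batch loss by the number of accumulation steps before the backward pass, and stepping the optimizer only after the last micro-batch.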

FAQs

Q1. Why does increasing batch size lead to CUDA out of memory errors?

A1. Larger batch sizes require more memory as all samples in the batch are processed simultaneously. If the batch size exceeds the available GPU memory, the CUDA out of memory error occurs.

Q2. Can I use multiple GPUs to avoid CUDA out of memory errors?

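A2. It depends on the approach. PyTorch’s DataParallel and DistributedDataParallel place a full copy of the model on each GPU and split each batch across them, which lowers per-GPU activation memory and allows larger total batch sizes. To fit a model that is too large for a single GPU, the model itself has to be split across GPUs, for example with model parallelism or FullyShardedDataParallel (FSDP).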

Q3. What if reducing the model or batch size is not possible?

A3. If reducing the model or batch size is not feasible, you can consider using a GPU with a higher memory capacity or using cloud-based GPU instances for training.

Q4. Does increasing GPU memory solve the issue permanently?

A4. Increasing GPU memory can provide temporary relief but may not solve the problem in the long term if memory demand continuously increases. Optimizing model architecture and exploring memory-efficient techniques is recommended.

Q5. Does CUDA out of memory error occur only during training?

A5. No, CUDA out of memory errors can occur during both training and inference if the model or input data size exceeds the available GPU memory.

Conclusion

CUDA out of memory errors can be a common issue faced by deep learning practitioners. By understanding the causes of these errors and implementing strategies like reducing batch size, optimizing model architecture, and utilizing mixed precision training, users can effectively handle memory constraints in PyTorch. Remember to experiment and find a balance between model complexity and available GPU memory to achieve optimal training performance.
