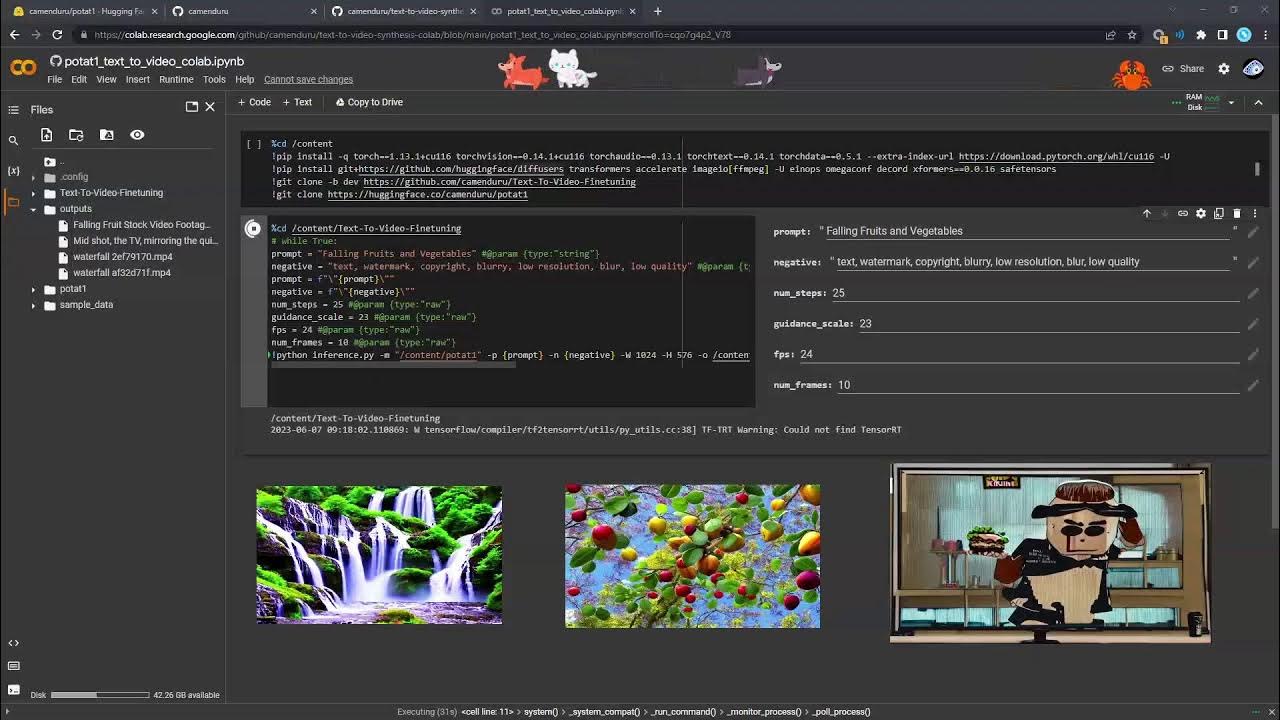

Could Not Find TensorRT

1. Overview of TensorRT and its Importance in Deep Learning

TensorRT is a high-performance deep learning inference optimizer and runtime library developed by NVIDIA. It is designed to enhance the efficiency and speed of deep learning inference on NVIDIA GPUs. TensorRT optimizes and deploys trained deep neural networks into production environments such as self-driving cars, intelligent video analytics, and embedded devices.

The importance of TensorRT lies in its ability to accelerate deep learning models, reducing their memory footprint and computational requirements. By incorporating TensorRT into your deep learning pipeline, you can achieve significant performance improvements, faster inference times, and more efficient use of GPU resources.

2. Possible Reasons for Not Finding TensorRT

There could be several reasons why you are unable to find TensorRT on your system. Some possibilities include:

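a) Incompatible Hardware: TensorRT runs only on NVIDIA GPUs, and each release supports a specific range of CUDA compute capabilities. If your GPU falls outside the range listed in the support matrix for your TensorRT version, TensorRT cannot be used on it.

b) Missing or Incompatible Software Dependencies: TensorRT depends on a matching NVIDIA driver and CUDA version, and some releases also depend on cuDNN. Frameworks such as TensorFlow are not dependencies of TensorRT itself, but a framework build that integrates with TensorRT expects a specific TensorRT version. If any of these are missing or mismatched, TensorRT may not be found.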

c) Incorrect Installation: If TensorRT was not installed properly, it may not be discoverable. Incomplete installations or errors during the installation process can lead to this issue.

3. Checking for Compatible Hardware and Software Requirements

To ensure TensorRT availability, it is crucial to verify both hardware and software requirements. Follow these steps:

a) Hardware Requirements: First, check that your GPU is supported. The minimum CUDA compute capability depends on the TensorRT release, so compare your GPU against the support matrix for the version you plan to install. NVIDIA lists the compute capability of each GPU on its official website.

b) Software Dependencies: Ensure that the NVIDIA driver and CUDA are installed in versions supported by your TensorRT release, along with cuDNN if that release needs it. If you use TensorRT through TensorFlow or another deep learning framework, check which TensorRT version that framework build expects.
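The hardware check above can be scripted. The following is a minimal sketch, not an official NVIDIA tool: it assumes that the installed driver's `nvidia-smi` supports the `compute_cap` query field (older drivers may not), and the `REQUIRED` value is a hypothetical placeholder that you would replace with the minimum from the support matrix for your TensorRT version.

```python
import re
import shutil
import subprocess
from typing import List, Tuple


def parse_capability(text: str) -> Tuple[int, int]:
    """Turn a string such as "8.6" into the tuple (8, 6)."""
    major, _, minor = text.strip().partition(".")
    return int(major), int(minor or 0)


def meets_minimum(capability: str, minimum: str) -> bool:
    """Compare compute capabilities numerically, so "10.0" ranks above "9.0"."""
    return parse_capability(capability) >= parse_capability(minimum)


def detect_capabilities() -> List[str]:
    """Ask nvidia-smi for the compute capability of each GPU.

    Assumption: this nvidia-smi understands the `compute_cap` query field.
    Returns an empty list when nvidia-smi is missing or the query fails.
    """
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=compute_cap", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return []
    lines = (line.strip() for line in result.stdout.splitlines())
    # Keep only well-formed values such as "8.6"; skip anything else.
    return [line for line in lines if re.fullmatch(r"\d+\.\d+", line)]


if __name__ == "__main__":
    REQUIRED = "7.5"  # hypothetical minimum; take the real one from the support matrix
    for cap in detect_capabilities():
        print(cap, "ok" if meets_minimum(cap, REQUIRED) else "below the required minimum")
```

Comparing parsed tuples rather than raw strings matters here, because a plain string comparison would rank "10.0" below "9.0".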

4. Verifying TensorRT Installation and Version

If you have confirmed that your system meets the requirements, the next step is to verify the installation of TensorRT. Here’s how:

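a) Linux: If TensorRT was installed from Debian packages, open a terminal window and list the installed packages:

`$ dpkg -l | grep -i -E 'tensorrt|nvinfer'`

If TensorRT is installed, you should see the related packages (for example, the `libnvinfer` libraries) along with their versions. Installations from a tar file or from pip do not appear in this list.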

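b) Windows: Open the command prompt and run the following command to confirm that the NVIDIA driver is installed and the GPU is visible:

`C:\> nvidia-smi`

The output reports the driver version and the highest CUDA version the driver supports, but it does not report TensorRT. To check TensorRT itself, confirm that the folder of your TensorRT installation that contains the `nvinfer` DLLs is on your `PATH`, or, if you installed the Python package, run `python -c "import tensorrt; print(tensorrt.__version__)"`.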
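A platform-independent way to run a similar check is sketched below. It uses only the Python standard library and looks for two things: the `tensorrt` Python package and a native library named `nvinfer` on the default search path. The function name `tensorrt_status` is an illustrative choice, and a negative result only means that the default lookup failed, not that TensorRT is absent from the machine.

```python
import ctypes.util
import importlib.util


def tensorrt_status() -> dict:
    """Report whether the TensorRT Python package and native library are discoverable."""
    # True when `import tensorrt` would find a package in this Python environment.
    python_package = importlib.util.find_spec("tensorrt") is not None
    # Name or path of the nvinfer library if the platform's lookup finds one, else None.
    native_library = ctypes.util.find_library("nvinfer")
    return {"python_package": python_package, "native_library": native_library}


if __name__ == "__main__":
    for key, value in tensorrt_status().items():
        print(f"{key}: {value}")
```

If the native library is reported as `None` even though TensorRT is installed, the library directory is probably not on the search path that the dynamic loader uses.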

5. Resolving Common Issues with TensorRT Installation

If you couldn’t find TensorRT, there might be some common issues that can be resolved. Consider the following troubleshooting steps:

a) Update NVIDIA Driver: Ensure that you have the latest NVIDIA driver installed. Outdated drivers can cause compatibility issues with TensorRT.

b) Reinstall TensorRT: If you have previously installed TensorRT, try reinstalling it by following NVIDIA’s official installation guide for your chosen method (for example, Debian packages via apt-get, a tar or zip archive, or pip).

c) Verify Software Compatibility: Double-check that all the software dependencies, such as CUDA, cuDNN, and deep learning frameworks, are compatible with TensorRT. Incompatible versions can lead to conflicts and prevent TensorRT from being discovered.

6. Debugging Techniques to Locate TensorRT Installation Errors

If you are still unable to find TensorRT, debugging the installation errors can help identify the problem. Consider the following techniques:

a) Check Log Files: Look for relevant log files in the installation directory or system logs. Pay attention to any error messages or warnings related to TensorRT.

b) Network Connectivity: Ensure that your system has an active internet connection during installation. Some installations may require downloading additional files or dependencies.

c) Consult NVIDIA Developer Forums: NVIDIA’s official developer forums are a valuable resource for troubleshooting installation issues. Search for similar issues or post a question detailing your problem.

7. Alternative Approaches if TensorRT is Still Unavailable

If all attempts to find TensorRT fail, there are alternative approaches you can consider:

a) Upgrade Hardware: If your GPU does not meet the minimum requirements, upgrading to a compatible GPU with higher CUDA compute capability can enable TensorRT support.

b) Cloud-based GPU Instances: Utilize cloud-based GPU instances provided by services like AWS, Google Cloud, or Microsoft Azure. These platforms often have pre-configured environments with TensorRT available.

8. Importance of Properly Configuring and Using TensorRT

Properly configuring and using TensorRT is vital to maximize its benefits. Consider the following factors:

a) Model Optimization: TensorRT supports layer fusion, precision calibration, and dynamic tensor memory management. Leverage these features to optimize your trained models for high-performance inference.

b) Efficient Memory Usage: TensorRT’s optimizations result in reduced memory consumption. Ensure your model’s memory requirements are within the allocated GPU memory to avoid out-of-memory errors.

c) Profiling and Benchmarking: Use TensorRT’s profiling and benchmarking tools, such as the `trtexec` command-line tool that ships with TensorRT, to identify performance bottlenecks. This allows you to fine-tune the configuration and optimize the system further.

d) Keep Up with Updates: Regularly check for updates and newer versions of TensorRT. NVIDIA frequently releases updates that improve performance, fix bugs, and introduce new features.

e) Access Documentation and Resources: Refer to NVIDIA’s official documentation, tutorials, and online resources for comprehensive guidance on TensorRT’s usage and advanced features.
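To make the memory point in item (b) concrete, the sketch below estimates how much GPU memory a model's weights occupy at different precisions. It is back-of-the-envelope arithmetic, not a TensorRT API: it counts weights only and ignores activations, workspace, and runtime overhead, and the 25-million-parameter model is a hypothetical example.

```python
# Bytes used to store one value at each precision.
BYTES_PER_VALUE = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_bytes(num_params: int, precision: str) -> int:
    """Memory needed for the weights alone, in bytes."""
    return num_params * BYTES_PER_VALUE[precision]


def fits(num_params: int, precision: str, budget_bytes: int) -> bool:
    """True when the weights alone fit within the given memory budget."""
    return weight_bytes(num_params, precision) <= budget_bytes


if __name__ == "__main__":
    params = 25_000_000  # hypothetical model size
    for precision in BYTES_PER_VALUE:
        mib = weight_bytes(params, precision) / 2**20
        print(f"{precision}: {mib:.1f} MiB")
```

Halving the precision halves the weight storage, which is one reason reduced precision helps on memory-constrained devices.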

FAQs:

Q1. How can I install TensorRT?

A: NVIDIA’s official installation guide describes several methods, including Debian and RPM packages, tar and zip archives, and Python wheels installed with pip. Follow the instructions specific to your operating system.

Q2. I couldn’t find TensorRT using CMake. What should I do?

A: CMake reports this when it cannot locate the TensorRT headers (such as `NvInfer.h`) or libraries (such as `libnvinfer`). Ensure that TensorRT is installed, then point CMake at the installation by setting the path variables that your project’s CMake files use to locate it.

Q3. How can I install TensorRT on an NVIDIA Jetson Nano?

A: On Jetson devices, TensorRT is delivered as part of NVIDIA’s JetPack SDK rather than installed separately. Follow NVIDIA’s Jetson-specific guide to install JetPack on the Jetson Nano.

Q4. I tried using Conda to install TensorRT, but it didn’t work. What could be the issue?

A: The Conda channels you have configured may not provide a TensorRT package for your platform and Python version. Check your channels, or use one of the methods from NVIDIA’s installation guide instead.

Q5. I encountered the error: “Could not load dynamic library ‘libnvinfer.so.7’ – could not find TensorRT.” How can I fix this?

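A: This error means that the dynamic loader could not find the TensorRT runtime library. The `.7` suffix indicates that the calling program was built against TensorRT 7, so a different major version will not satisfy it. Verify that a matching version is installed and that the directory containing the library is on the loader’s search path (for example, through `LD_LIBRARY_PATH` on Linux).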
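When diagnosing the error in Q5, it helps to see which `libnvinfer` versions are actually present in the directories the loader searches. The following sketch scans the entries of `LD_LIBRARY_PATH` plus a few common Linux library directories; the extra directories are assumptions that may not match your distribution, and the function names are illustrative.

```python
import os
from pathlib import Path
from typing import Dict, Iterable, List


def find_nvinfer(directories: Iterable[str]) -> Dict[str, List[str]]:
    """Map each directory to the libnvinfer.so* files it contains."""
    found: Dict[str, List[str]] = {}
    for directory in directories:
        path = Path(directory)
        if not path.is_dir():
            continue
        # Matches libnvinfer.so, libnvinfer.so.8, and so on,
        # but not sibling libraries such as libnvinfer_plugin.so.
        names = sorted(p.name for p in path.glob("libnvinfer.so*"))
        if names:
            found[str(path)] = names
    return found


def default_search_dirs() -> List[str]:
    """Directories from LD_LIBRARY_PATH followed by common system locations."""
    env = os.environ.get("LD_LIBRARY_PATH", "")
    dirs = [d for d in env.split(os.pathsep) if d]
    # Assumed locations; adjust for your distribution and architecture.
    dirs += ["/usr/lib/x86_64-linux-gnu", "/usr/lib/aarch64-linux-gnu", "/usr/local/lib"]
    return dirs


if __name__ == "__main__":
    results = find_nvinfer(default_search_dirs())
    if not results:
        print("No libnvinfer.so* found in the searched directories.")
    for directory, names in results.items():
        print(directory, "->", ", ".join(names))
```

If the scan finds only a version other than the one named in the error message, the program and the installed TensorRT do not match, and one of them has to change.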

In conclusion, TensorRT plays a crucial role in optimizing deep learning inference. If you are unable to find TensorRT, it is important to verify hardware compatibility, software dependencies, and proper installation. By following the troubleshooting steps and considering alternative approaches, you can effectively resolve the issue and harness the power of TensorRT for accelerated deep learning inference.

TensorRT

In the world of Artificial Intelligence (AI), the ability to quickly and efficiently process large amounts of data is crucial. Neural networks, the building blocks of AI, have revolutionized various applications, including image classification, object detection, speech recognition, and natural language processing. However, running these complex models on traditional hardware can be computationally expensive and time-consuming. This is where TensorRT comes into play, providing developers with a powerful tool to optimize and accelerate AI models for deployment.

What is TensorRT?

TensorRT is a deep learning inference optimizer and runtime library developed by NVIDIA. It takes trained neural networks, which are often computationally intensive, and optimizes them for deployment on a range of NVIDIA GPUs. This allows models to run with significantly reduced latency and higher throughput, making it well suited to real-time AI applications.

How does TensorRT work?

TensorRT employs various techniques to optimize and accelerate AI inference. It restructures the network by removing redundant layers and fusing compatible operations into single steps. This optimization process reduces the overall computational workload and memory consumption, resulting in faster inference times.

Another crucial technique used by TensorRT is reduced-precision inference. Deep learning models are usually trained in float32 precision (32-bit floating-point numbers), which can be computationally expensive at inference time. TensorRT can run layers in lower-precision data types, such as float16 (16-bit floating-point numbers) or INT8 (8-bit integers), where doing so does not cause a significant loss in accuracy. For INT8, a calibration step measures the range of activation values on representative input data so that they can be mapped to 8-bit integers. By using lower-precision data types, TensorRT can speed up inference while maintaining reasonable accuracy.
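To get a feel for what lower precision costs, the sketch below rounds a few values to float16 and back using Python's standard `struct` module, which supports the IEEE 754 half-precision format. It illustrates the number format only and does not involve TensorRT; the helper names are illustrative.

```python
import struct


def to_fp16(value: float) -> float:
    """Round a Python float to the nearest float16 and convert it back."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


def relative_error(value: float) -> float:
    """Relative error introduced by storing a nonzero value as float16."""
    return abs(to_fp16(value) - value) / abs(value)


if __name__ == "__main__":
    for x in (0.1, 1.0, 1000.1):
        print(f"{x!r:>8} -> {to_fp16(x)!r}  (relative error {relative_error(x):.2e})")
```

For example, 0.1 is stored as 0.0999755859375 in float16. The relative error for values in float16's normal range stays below about 0.05%, which many networks tolerate, while values larger than 65504 cannot be represented at all.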

Furthermore, TensorRT takes advantage of the specialized hardware available in NVIDIA GPUs. On GPUs that have Tensor Cores, it uses them to perform mixed-precision matrix operations, which dramatically accelerates computation. TensorRT also uses dynamic tensor memory management, which reduces the memory footprint by reusing memory for tensors efficiently, further enhancing performance.

Benefits of using TensorRT

By utilizing TensorRT, developers can enjoy a wide range of benefits when deploying their AI models:

1. Accelerated Inference: TensorRT significantly speeds up AI inference, enabling real-time applications that require low latency and high throughput.

2. Optimal Memory Usage: TensorRT optimizes memory allocation, reducing overall memory consumption and facilitating efficient use of system resources.

3. Reduced Precision: By running layers in lower-precision data types instead of full float32, TensorRT achieves faster inference while maintaining reasonable accuracy.

4. Platform Flexibility: TensorRT is compatible with various GPU architectures, making it easier to deploy models on a variety of NVIDIA GPUs, including desktop GPUs and embedded platforms.

5. Easy Integration: TensorRT integrates with popular deep learning frameworks such as TensorFlow and PyTorch, and imports models in the ONNX exchange format, making it straightforward to optimize and deploy models developed with these frameworks.

Frequently Asked Questions

Q: Does TensorRT work only with NVIDIA GPUs?

A: Yes, TensorRT is specifically designed to harness the power of NVIDIA GPUs. It leverages the specialized features and capabilities of NVIDIA GPUs to accelerate inference. Therefore, it requires an NVIDIA GPU to function effectively.

Q: Can TensorRT work with models from any deep learning framework?

A: TensorRT works with models from popular deep learning frameworks such as TensorFlow and PyTorch, typically by way of the ONNX exchange format or a framework integration. It provides parsers to import models and a plugin mechanism for custom layers, allowing users to optimize and deploy models developed with these frameworks.

Q: Does using lower precision data types affect the accuracy of the model?

A: Although using lower precision data types can introduce slight approximation errors, TensorRT employs precision calibration techniques to minimize the impact on model accuracy. In most cases, the reduction in precision does not significantly affect the accuracy of the model.

Q: What kind of performance improvement can one expect when using TensorRT?

A: The performance improvement achieved by TensorRT depends on various factors, including the complexity of the model and the specific hardware used. In general, TensorRT can provide substantial speedups, with inference times being several times faster compared to running models without optimization.

Q: Is TensorRT only suitable for real-time applications?

A: While TensorRT excels at accelerating real-time applications due to its low-latency inference capabilities, it can also be beneficial for batch processing scenarios. For any application that requires efficient AI inference, TensorRT can help optimize performance and reduce computation time.

Conclusion

TensorRT is a powerful tool for developers looking to optimize and accelerate AI inference. By leveraging advanced optimization techniques and taking advantage of NVIDIA GPU features, TensorRT significantly improves inference performance, making it ideal for real-time AI applications. With its memory optimization, precision calibration, and integration with popular deep learning frameworks, developers can easily deploy high-performance AI models. By harnessing the power of TensorRT, AI capabilities can be enhanced, enabling faster and more efficient processing of vast amounts of data.

Install TensorRT

Introduction:

TensorRT is a powerful deep learning inference optimizer and runtime library developed by NVIDIA. By optimizing trained neural networks for deployment on NVIDIA GPUs, TensorRT delivers exceptional speed and efficiency, enabling developers to unlock the full potential of their deep learning models. This article aims to provide a detailed guide on how to install TensorRT, along with answers to some frequently asked questions.

I. Installation Process:

Before diving into the installation process, it’s essential to ensure that your system meets the minimum requirements. The steps below assume a Linux x86_64 system with a supported NVIDIA GPU. The minimum compute capability depends on the TensorRT release, so check the support matrix for the version you download.

1. Prerequisites:

To successfully install TensorRT, follow these pre-installation steps:

– Install CUDA Toolkit: TensorRT requires the CUDA Toolkit to be installed. Get the CUDA Toolkit version compatible with your GPU from the NVIDIA Developer website.

– Install cuDNN library: If your TensorRT release requires cuDNN, download and install the version suitable for your system from the NVIDIA Developer website.

– Install TensorFlow (optional): TensorRT does not require TensorFlow. If you plan to use the TensorFlow-TensorRT integration, install a TensorFlow build that is compatible with your TensorRT version.

2. Downloading TensorRT:

To acquire the TensorRT package, navigate to the NVIDIA Developer website and download the TensorRT package that corresponds to your CUDA and cuDNN installations. The package is generally distributed as a tar file, and the filename should mention the compatible CUDA version.

3. Extracting and Installing:

Follow these steps to extract and install TensorRT:

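– Open a terminal and navigate to the directory where the TensorRT tar file is stored.

– Extract the contents of the tar file with `$ tar -xvf`, followed by the name of the downloaded file.

– Navigate to the extracted folder with `$ cd`; the folder name begins with `TensorRT-` and continues with the version number.

– Add the `lib` directory of the extracted folder to `LD_LIBRARY_PATH` so that the dynamic loader can find the TensorRT libraries.

– To use the Python API, install the wheel from the `python` directory that matches your Python version with `pip`. The tar package provides wheels rather than a `setup.py` installation script.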

4. Verifying the Installation:

To determine if the installation was successful, you can check for the presence of the library files and verify the library version:

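– Look for the TensorRT library files (named `libnvinfer.so` plus a version suffix) in the `lib` directory of the extracted folder. With a Debian package installation, they are placed in a system library directory instead.

– If you installed the Python wheel, run the following command to display the installed TensorRT version: `$ python3 -c "import tensorrt; print(tensorrt.__version__)"`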

Now that TensorRT is successfully installed, let’s address some common questions related to its usage and benefits:

Frequently Asked Questions (FAQs):

Q1: Can TensorRT be used with any deep learning framework?

A: TensorRT can optimize models from popular deep learning frameworks, including TensorFlow and PyTorch, most commonly by exporting them to ONNX (Open Neural Network Exchange), a format that TensorRT can parse. This allows users to optimize and deploy models trained in these frameworks using TensorRT.

Q2: What are the main benefits of using TensorRT?

A: TensorRT offers several advantages:

1. Higher inference performance: TensorRT optimizes deep learning models to run efficiently on NVIDIA GPUs, significantly accelerating inference time.

2. Reduced memory consumption: It maximizes memory efficiency by combining layers and optimizing data layouts.

3. Platform flexibility: TensorRT supports deployment across various platforms, including cloud, data centers, and edge devices.

4. Custom layer support: It provides a plugin API for developers to define and optimize custom layers specific to their project needs.

Q3: How to optimize a deep learning model using TensorRT?

A: To optimize a model with TensorRT, follow these steps:

1. Export the trained model to a format that TensorRT can import (e.g., ONNX).

2. Load the exported model into TensorRT.

3. Apply optimizations by specifying precision, calibration, dynamic tensor memory, and other parameters.

4. Build the optimized engine and serialize it for deployment.
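The four steps above might look roughly like the following when starting from an ONNX file. This is a sketch under stated assumptions, not a definitive implementation: it assumes the TensorRT 8.x Python API (names such as `build_serialized_network` and the `EXPLICIT_BATCH` flag may differ or be deprecated in other releases), and the file names `model.onnx` and `model.engine` are hypothetical.

```python
from pathlib import Path


def build_engine(onnx_path: str, engine_path: str, fp16: bool = True) -> None:
    """Parse an ONNX model, build a TensorRT engine, and write it to disk."""
    onnx_file = Path(onnx_path)
    if not onnx_file.is_file():
        raise FileNotFoundError(f"ONNX model not found: {onnx_file}")

    # Imported here so that a missing model is reported before a missing package.
    import tensorrt as trt  # assumption: TensorRT 8.x Python bindings

    logger = trt.Logger(trt.Logger.WARNING)
    builder = trt.Builder(logger)

    # Step 2: load the exported model into a TensorRT network definition.
    flags = 1 << int(trt.NetworkDefinitionCreationFlag.EXPLICIT_BATCH)
    network = builder.create_network(flags)
    parser = trt.OnnxParser(network, logger)
    if not parser.parse(onnx_file.read_bytes()):
        errors = [str(parser.get_error(i)) for i in range(parser.num_errors)]
        raise RuntimeError("ONNX parsing failed: " + "; ".join(errors))

    # Step 3: choose optimization settings, here optional FP16 precision.
    config = builder.create_builder_config()
    if fp16:
        config.set_flag(trt.BuilderFlag.FP16)

    # Step 4: build the engine and serialize it for deployment.
    serialized = builder.build_serialized_network(network, config)
    if serialized is None:
        raise RuntimeError("TensorRT failed to build the engine")
    with open(engine_path, "wb") as engine_file:
        engine_file.write(serialized)


if __name__ == "__main__":
    build_engine("model.onnx", "model.engine")  # hypothetical file names
```

A serialized engine is specific to the TensorRT version and GPU it was built with, so it is usually built on, or for, the deployment target.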

Q4: Can TensorRT be used for real-time video or image processing?

A: Absolutely. TensorRT’s accelerated inference capabilities are well-suited for real-time image and video processing tasks, such as object detection, segmentation, and classification. By exploiting GPU parallelism, TensorRT ensures high frame rates and low latency.

Q5: Does TensorRT support quantization?

A: Yes, TensorRT provides quantization support, allowing developers to reduce the precision of weights and activations for optimal performance. Quantization helps save memory and improves inference speed, especially on GPUs with hardware support for low-precision arithmetic.
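The idea behind quantization can be shown with a few lines of plain Python. The sketch below uses the simplest symmetric scheme, in which one scale derived from the largest absolute value maps floats to integers in the range -127 to 127. This is an illustration of the concept only: TensorRT's own calibrators choose the range with more sophisticated methods, and the function names here are illustrative.

```python
from typing import List, Sequence, Tuple


def quantize(values: Sequence[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using one symmetric scale."""
    max_abs = max((abs(v) for v in values), default=0.0)
    # Fall back to a scale of 1.0 for all-zero input to avoid dividing by zero.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized: Sequence[int], scale: float) -> List[float]:
    """Recover approximate floats from the 8-bit integers."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
    q, scale = quantize(weights)
    restored = dequantize(q, scale)
    for original, approx in zip(weights, restored):
        print(f"{original:+.4f} -> {approx:+.4f}")
```

Each restored value differs from the original by at most half a quantization step (`scale / 2`), which is why a well-chosen range matters: a single outlier enlarges the step for every other value.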

Q6: Are there any documentation and resources available for further learning?

A: Yes, NVIDIA offers comprehensive documentation, tutorials, and sample codes on its official website. Additionally, the NVIDIA Developer Forums and GitHub repositories host active communities where users can seek assistance and share ideas.

Conclusion:

Installing TensorRT can significantly boost the performance of deep learning models, enabling efficient inference on NVIDIA GPUs. This guide provided a comprehensive overview of the installation process, including the necessary prerequisites and essential steps. By following the outlined instructions, you can successfully integrate TensorRT into your development environment and leverage its remarkable benefits for accelerated deep learning inference.

Find TensorRT CMake

Introduction:

In the world of deep learning, TensorRT has emerged as a powerful software library that optimizes neural network inference on NVIDIA GPUs. It provides low-latency, high-performance execution for deep learning models, allowing developers to deploy their trained models efficiently. If you’re a deep learning enthusiast or practitioner, chances are you’ll want to harness the benefits of TensorRT for your projects. This article aims to guide you through the process of finding TensorRT CMake for seamless integration into your workflow.

What is CMake?

Before we dive into the specifics of TensorRT CMake, let’s briefly touch upon CMake. CMake is an open-source, cross-platform build system generator: it reads a project description and produces native build files (such as Makefiles or Visual Studio solutions) that compile source code into executable binaries. It’s widely adopted by the software development community as it provides an easy-to-use and robust method for building projects across different platforms and operating systems.

The Value of TensorRT CMake:

CMake comes into the picture when you want to build and link your TensorRT projects with ease. It helps in automating the build process, managing dependencies, and configuring the project for various hardware and software combinations. By leveraging CMake, you can achieve a more streamlined and maintainable workflow, allowing for better productivity in deep learning inference tasks.

Finding TensorRT CMake:

TensorRT is not a separate CMake product; finding it means telling CMake where the TensorRT headers and libraries are. Make sure TensorRT is installed on your system before proceeding. The official NVIDIA/TensorRT repository on GitHub contains the open-source components of TensorRT together with the CMake files used to build them, which serve as a useful reference.

Once inside the repository, locate the CMakeLists.txt file. This file contains the instructions CMake uses to build the repository’s own components, so treat it as an example rather than copying it into your project unchanged. Your project needs its own CMakeLists.txt in its root directory.

In your project’s CMakeLists.txt, locate the TensorRT header `NvInfer.h` and the `nvinfer` library, for example with CMake’s `find_path` and `find_library` commands, and link your target against the result. You will usually need to supply the path to the TensorRT installation, particularly when it was extracted from a tar file into a non-standard location.

Once you’re satisfied with the configuration, navigate to the directory where your project resides in a terminal or command prompt. Create a build directory in the project directory and navigate inside it. Run the command `cmake ..` to generate the appropriate build files for your particular operating system and configuration. After the cmake command executes without any errors, you can proceed to build your project using the corresponding build tool (e.g., `make` on Linux, or the generated Visual Studio solution on Windows).

Once the project is successfully built, run it to verify that it loads the TensorRT libraries at runtime. If the program fails to start because a shared library is missing, the TensorRT library directory is not on the loader’s search path.
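When CMake cannot find TensorRT, a small script can confirm where the header and library actually live and print the paths to hand to CMake. The sketch below assumes the tar-file layout, with `include/NvInfer.h` and `lib/libnvinfer.so*` under one root. The cache variable names `TENSORRT_INCLUDE_DIR` and `TENSORRT_LIBRARY` are assumptions: the names your project expects depend on its own CMake files, so check them before use. The candidate root `/opt/tensorrt` is likewise hypothetical.

```python
from pathlib import Path
from typing import Dict, Iterable, List, Optional


def locate_tensorrt(roots: Iterable[str]) -> Optional[Dict[str, str]]:
    """Return the include directory and library of the first root that has both."""
    for root in roots:
        base = Path(root)
        header = base / "include" / "NvInfer.h"
        lib_dir = base / "lib"
        libraries = sorted(lib_dir.glob("libnvinfer.so*")) if lib_dir.is_dir() else []
        if header.is_file() and libraries:
            return {"include_dir": str(header.parent), "library": str(libraries[0])}
    return None


def cmake_args(found: Dict[str, str]) -> List[str]:
    """Build -D arguments; the variable names are assumptions, see the text above."""
    return [
        f"-DTENSORRT_INCLUDE_DIR={found['include_dir']}",
        f"-DTENSORRT_LIBRARY={found['library']}",
    ]


if __name__ == "__main__":
    candidates = ["/opt/tensorrt", "/usr/local/tensorrt"]  # hypothetical roots
    found = locate_tensorrt(candidates)
    if found is None:
        print("TensorRT header and library not found under the candidate roots.")
    else:
        print("cmake .. " + " ".join(cmake_args(found)))
```

Passing explicit paths this way is a diagnostic shortcut; for a long-lived project it is usually cleaner to encode the search in the project's CMake files.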

FAQs:

Q: Is TensorRT CMake compatible with all operating systems?

A: CMake itself is cross-platform and runs on Linux, Windows, and macOS. TensorRT, however, is released for Linux and Windows, so a project that links against TensorRT can be built only on platforms where TensorRT is available.

Q: Can I integrate TensorRT CMake with other deep learning frameworks like TensorFlow, PyTorch, or ONNX?

A: Yes. TensorRT can optimize models that originate from frameworks such as TensorFlow and PyTorch, typically after export to ONNX. CMake’s role is limited to building and linking the application that loads those models through TensorRT.

Q: Are there any specific hardware requirements for using TensorRT CMake?

A: TensorRT itself has specific hardware requirements, primarily a CUDA-enabled NVIDIA GPU. CMake adds no hardware requirements of its own, so as long as your system meets the requirements for TensorRT, you can build TensorRT projects with CMake without any issues.

Q: Can I use TensorRT CMake with pre-trained models?

A: Absolutely! CMake only builds your application; the optimization is done by TensorRT, which works on pre-trained deep learning models and makes their execution on GPUs faster and more efficient.

Conclusion:

In the world of deep learning inference, CMake is a valuable tool for integrating TensorRT into your projects. By automating the build process and managing dependencies, it makes building and linking against TensorRT repeatable. By following the steps outlined in this article, you can point CMake at your TensorRT installation, integrate it into your workflow, and leverage the power of TensorRT for high-performance deep learning inference.
