Spark Save As Table

In the world of big data, Apache Spark has emerged as a powerful computing engine for processing and analyzing large datasets. One of the key features of Spark is the ability to save data as tables, which provides a structured and organized way to store and access data. In this article, we will explore the concept of saving Spark data as tables and discuss the different file formats and options available.

The Importance and Benefits of Saving Spark Data as a Table

Saving Spark data as a table, rather than as files at a bare path, offers several advantages. The table is registered in a catalog together with its schema, file format, and location, so it can be queried by name with SQL or the DataFrame API. Filters, transformations, and aggregations can then be applied without anyone needing to know where the files live or how to parse them, which makes it simpler to derive insights and make informed decisions.

Additionally, tables provide a way to organize and manage data in a centralized manner. Instead of scattered files, tables offer a cohesive structure, simplifying data management and maintenance. Tables also enable easy sharing and collaboration among multiple users, as they can be accessed and queried by anyone with the appropriate permissions.

Different File Formats for Saving Spark Data as a Table

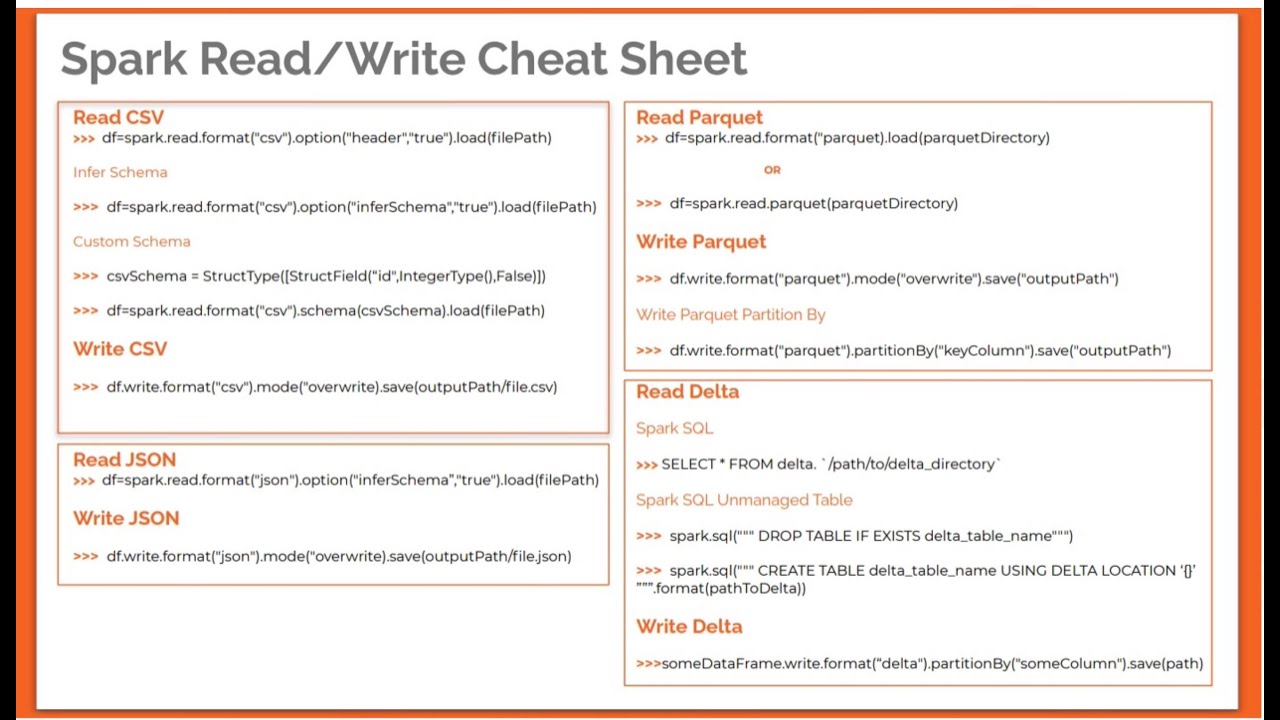

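Spark supports various file formats for saving data as tables, each with its own advantages and considerations. Commonly used formats include Parquet, ORC, CSV, JSON, and Avro. Hive is not a file format in its own right: a Hive table is a table registered in the Hive metastore, whose data can itself be stored as text, ORC, Parquet, or another format. Parquet, ORC, CSV, and JSON are built into Spark, while Avro requires the separate `spark-avro` module.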

Saving Spark Data as a Parquet Table

Parquet is a columnar storage format that offers efficient compression and encoding techniques. It is designed to optimize read and write performance, making it an excellent choice for big data workloads. By saving Spark data as a Parquet table, you can achieve faster query execution and reduce storage costs.

Saving Spark Data as an ORC Table

ORC (Optimized Row Columnar) is another columnar storage format that is highly optimized for big data analytics. It provides excellent compression and indexing capabilities, enabling faster query processing. By saving Spark data as an ORC table, you can achieve higher performance and improved resource utilization.

Saving Spark Data as a CSV Table

CSV (Comma-Separated Values) is a simple and widely used file format for tabular data. It is human-readable and compatible with many applications. While CSV may not provide the same level of performance as columnar formats, it offers ease of use and portability, making it suitable for certain use cases.

Saving Spark Data as a JSON Table

JSON (JavaScript Object Notation) is a popular format for storing and exchanging data. It is flexible and self-describing, allowing for complex and nested structures. Saving Spark data as a JSON table preserves the underlying structure of the data and enables seamless integration with other JSON-based systems and tools.

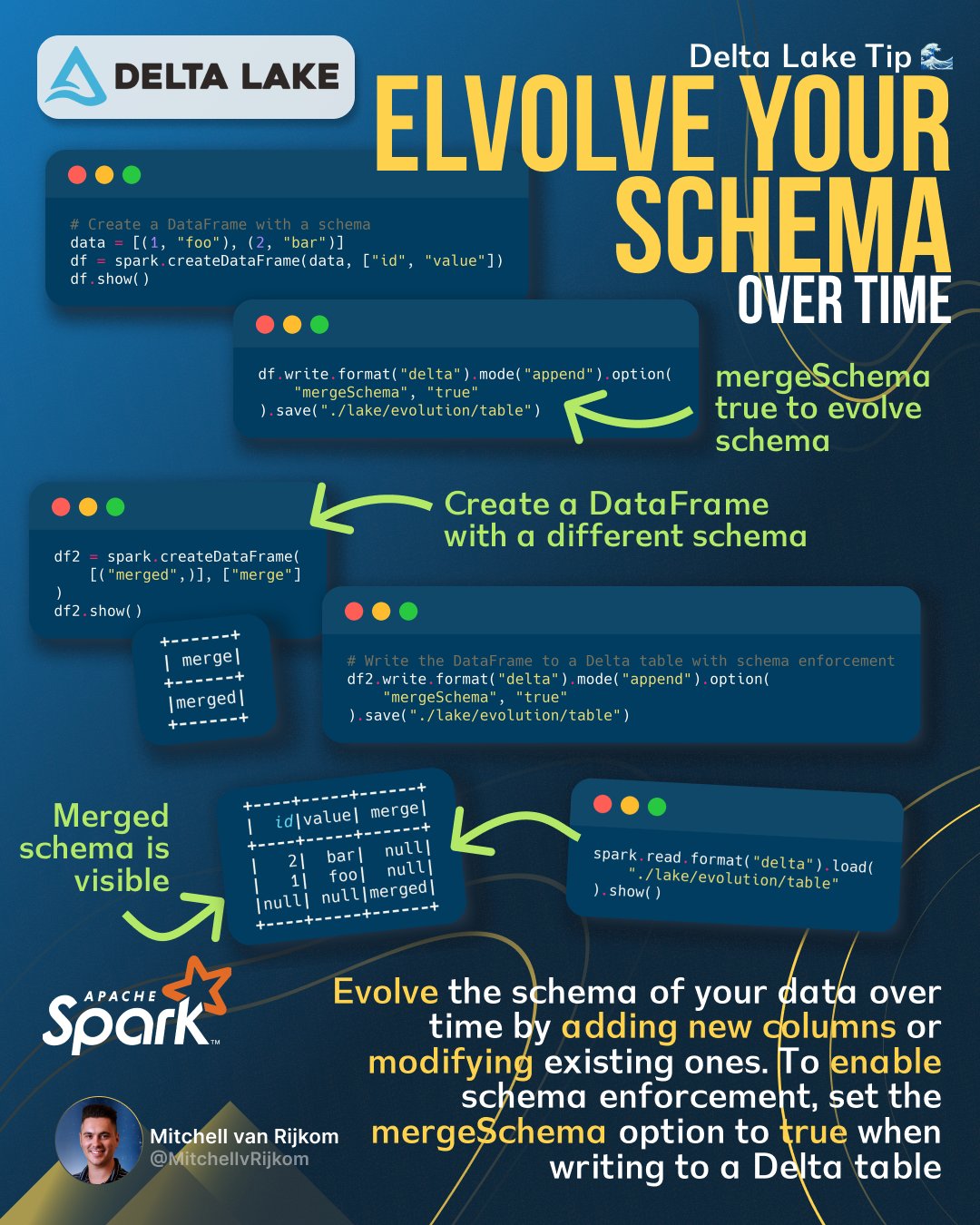

Saving Spark Data as an Avro Table

Avro is a row-oriented binary serialization format that stores its schema alongside the data. It supports schema evolution, such as adding a field with a default value, without rewriting existing files, which makes it suitable for scenarios where data schemas change over time. Note that Spark's Avro support lives in the external `spark-avro` module, which has to be added to the application (for example with `--packages`) before `format("avro")` can be used.

Saving Spark Data as a Hive Table

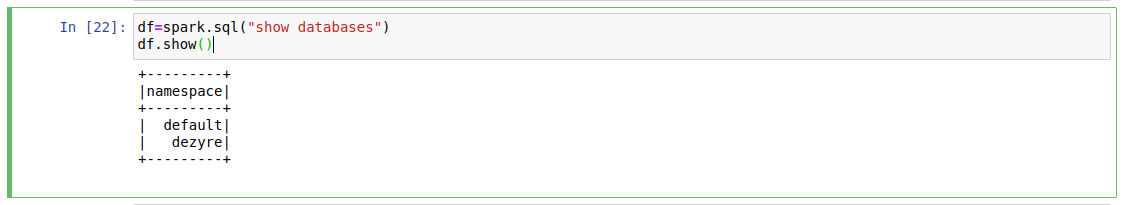

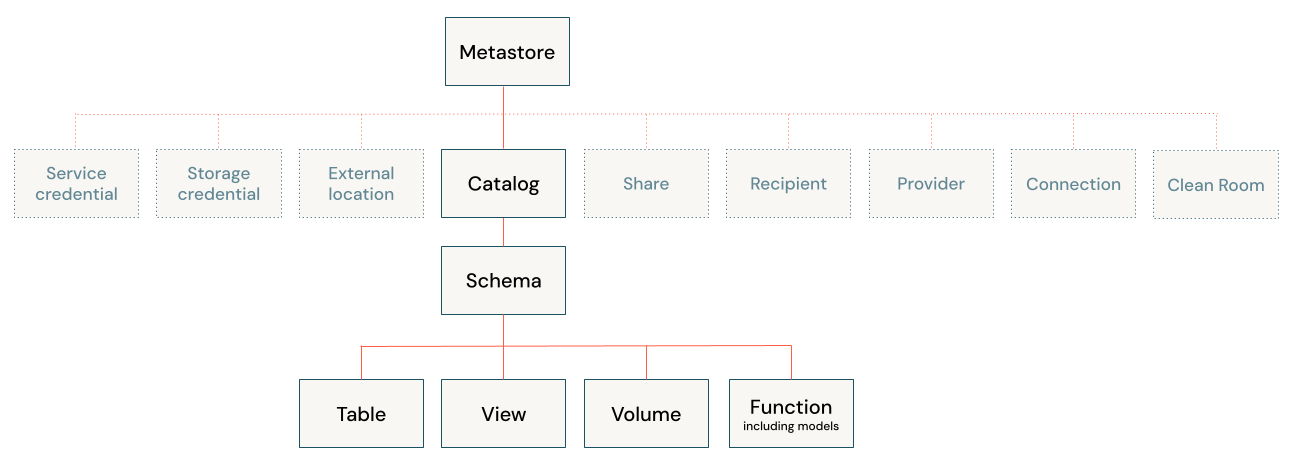

Hive is a data warehousing infrastructure built on top of Hadoop that provides SQL-like query capabilities (HiveQL). When the SparkSession is created with Hive support enabled, you can save Spark data as a Hive table, which lets you leverage the rich ecosystem of Hive tools. Hive tables can be accessed by other applications and systems that support the Hive Metastore.

Managing and Accessing Spark Tables

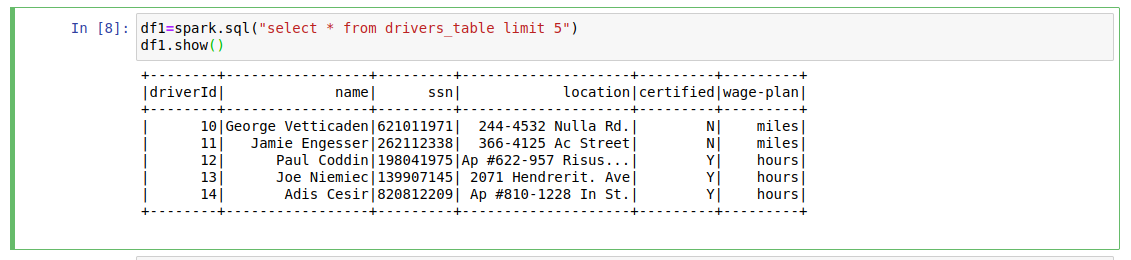

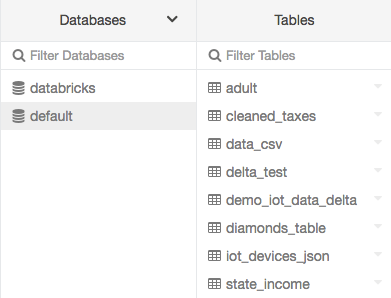

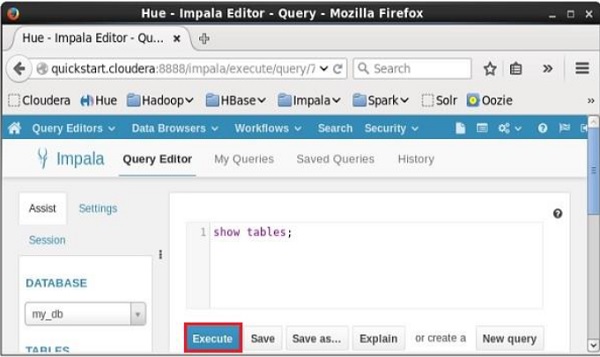

Once you have saved Spark data as tables, you can manage and access them using Spark SQL or other programming interfaces. Spark SQL provides a declarative interface for querying and manipulating structured data: you can use SQL statements or the DataFrame/Dataset API to interact with the tables.

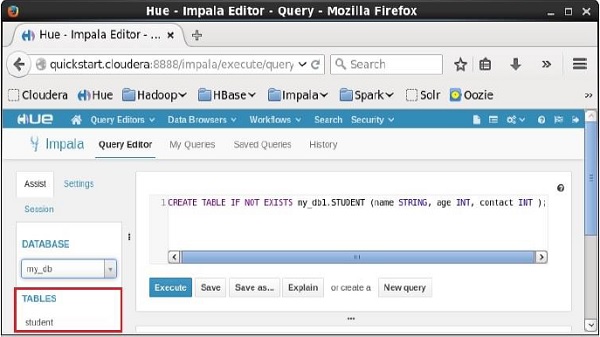

To create a table in Spark SQL, you can use the `CREATE TABLE` statement, specifying the table name, schema, and file format. For example, you can create a Parquet table called “employees” with columns “name” and “salary” as follows:

```sql
CREATE TABLE employees (name STRING, salary FLOAT) USING PARQUET;
```

After creating a table, you can query it using standard SQL syntax. For example, you can retrieve all records from the “employees” table as follows:

```sql
SELECT * FROM employees;
```

Performance Considerations when Saving Spark Data as a Table

When saving Spark data as tables, performance deserves attention up front. The choice of file format can significantly affect query execution times and storage efficiency. Columnar formats like Parquet and ORC are optimized for analytical workloads and generally outperform row-based text formats like CSV, because queries can read only the columns they need and skip data using the statistics stored in the files.

Additionally, partitioning can improve performance by reducing the data volume that needs to be scanned during queries. You can use the `PARTITIONED BY` clause when creating a table to specify the partitioning columns. For example, you can partition the “employees” table by the “year” and “month” columns as follows:

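```sql
-- For USING (data source) tables, partition columns are declared in the
-- column list and then named in PARTITIONED BY
CREATE TABLE employees (name STRING, salary FLOAT, year INT, month INT)
USING PARQUET
PARTITIONED BY (year, month);
```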

Partitioning enables efficient pruning of data during query execution, leading to faster and more optimized queries.

FAQs

Q: Can I save Spark data as multiple file formats within a single table?

A: No, each table can have a single file format. However, you can create multiple tables with different file formats to store the same data differently.

Q: Can I use Spark SQL to interact with tables saved in Hive?

A: Yes, Spark SQL provides seamless integration with Hive tables. You can use Spark SQL to query and manipulate Hive tables just like any other tables.

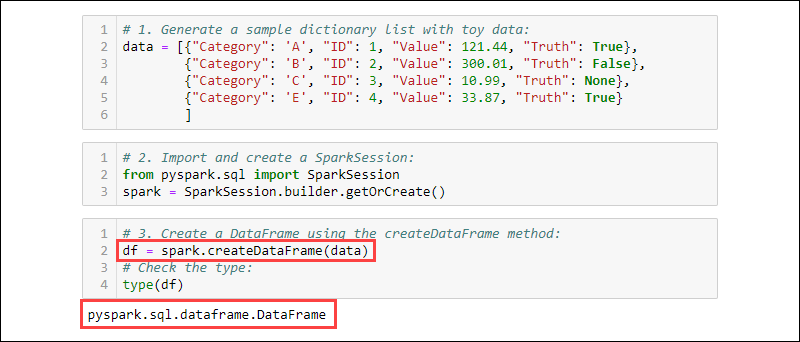

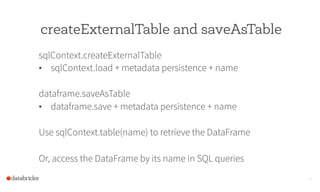

Q: Can I convert a Spark DataFrame to a table?

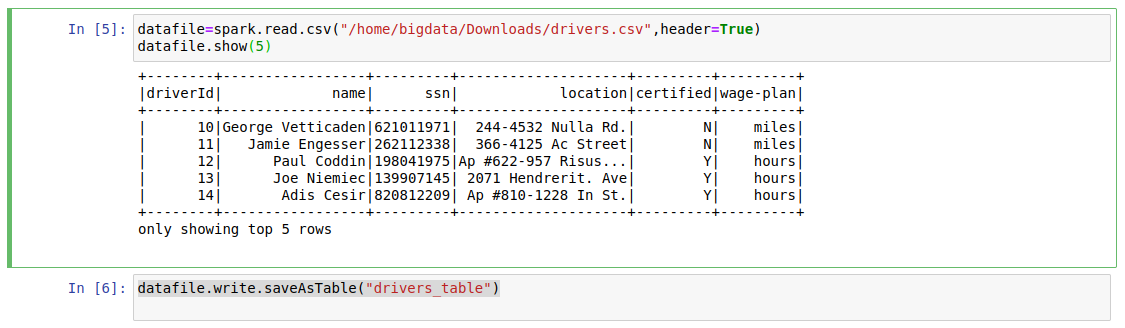

A: Yes, you can save a Spark DataFrame as a table using the `saveAsTable` method. This allows you to persist the DataFrame as a table in a specified file format.

Q: Can I perform partition pruning on tables saved as CSV or JSON?

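A: Yes. Partition pruning works at the directory level: Spark skips the partition directories (such as `year=2024/month=1`) that a filter rules out, and this works the same way for CSV, JSON, Parquet, or ORC. What columnar formats like Parquet and ORC add is pruning inside the files that are read (reading only the needed columns and skipping row groups or stripes via their statistics), which CSV and JSON cannot offer.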

Q: Can I update or insert new records into a Spark table?

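A: You can add new records with the `INSERT INTO` statement, which appends rows, and replace the contents of a table (or of specific partitions) with `INSERT OVERWRITE`. `INSERT INTO` does not modify existing records. Row-level `UPDATE`, `DELETE`, and `MERGE INTO` are not supported on plain Parquet, ORC, CSV, or JSON tables; they require a table format that supports them, such as Delta Lake or Apache Iceberg.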

In conclusion, saving Spark data as tables provides a structured and organized way to store and access data efficiently. By choosing the right file format and planning for performance (for example, with partitioning), you can speed up queries and use storage efficiently. Spark offers a wide range of options for saving data as tables, allowing you to choose the format that best suits your specific use case.

What Is The Difference Between Save As Table And Insert Into Spark?

Apache Spark is a powerful big data processing and analytics framework that provides various operations to manipulate and process large-scale datasets. One of the essential functionalities in Spark is the ability to store data in tabular formats. In this article, we will explore two methods of saving data, Save As Table and Insert Into, and discuss their differences.

Save As Table:

Save As Table refers to the `saveAsTable()` method of Spark's DataFrameWriter (`df.write`), which saves the contents of a DataFrame or Dataset as a table registered in the session catalog, which is the Hive metastore when Hive support is enabled. This method is commonly used when you want to store the data for later retrieval or use it in downstream applications. If the table does not exist, `saveAsTable()` creates it; what happens when it already exists depends on the save mode.

The default save mode is `errorifexists` (also spelled `error`): if the table already exists, the write fails with an error rather than touching the existing data. Setting `mode("overwrite")` replaces the table, `mode("append")` adds the new rows to it, and `mode("ignore")` silently skips the write when the table exists.

Only the table name is required. The format defaults to Parquet (controlled by `spark.sql.sources.default`) and can be changed with `format()`, for example to ORC or CSV. If you supply a location with `option("path", ...)`, Spark creates an external table at that path; otherwise it creates a managed table under the warehouse directory. You can also configure how the table is laid out, for example with `partitionBy()` or `bucketBy()`.

Insert Into:

Insert Into refers to the DataFrameWriter's `insertInto()` method (and the equivalent SQL `INSERT INTO` statement), which inserts the contents of a DataFrame or Dataset into an existing table. It is primarily used for incremental updates, that is, appending new data to an existing table. By default, Spark adds the new rows without touching the existing ones. The destination table must already exist; `insertInto()` does not create it.

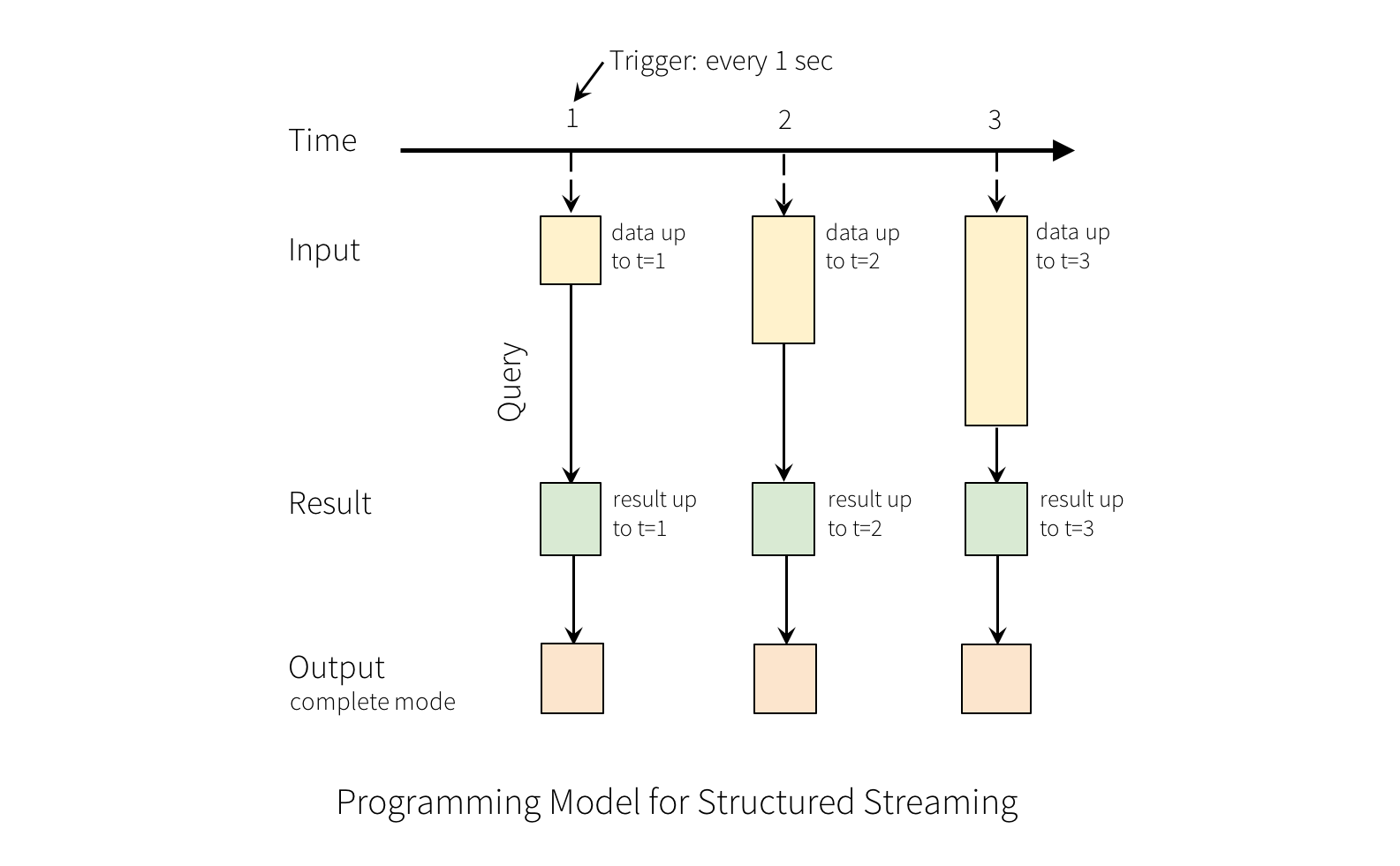

`insertInto()` appends by default, and switches to replacing the table's contents only when overwrite is requested (`mode("overwrite")`, or `overwrite=True` in PySpark). This makes Insert Into a convenient method for incremental batch updates. Continuous streaming writes are handled separately, through Structured Streaming's `writeStream` API.

You only need to name the target table: its format and location come from the existing table definition, and `partitionBy()` cannot be combined with `insertInto()`. You do need to ensure that the DataFrame matches the table's structure, and in particular that its columns are in the same order, because `insertInto()` matches columns by position rather than by name.

Differences between Save As Table and Insert Into:

Now that we understand the basic concepts of Save As Table and Insert Into in Spark, let’s discuss their key differences:

1. Table Creation: Save As Table handles both table creation and data storage. It creates the table if it does not exist and, depending on the save mode, fails, replaces the table, appends to it, or does nothing if it does. Insert Into, on the other hand, assumes that the destination table already exists, and its only task is to insert new data into it.

2. Data Overwrite: With its default mode, Save As Table refuses to write to an existing table; it replaces the table only with `mode("overwrite")` and appends only with `mode("append")`. Insert Into appends to the existing table by default, leaving existing records untouched, which is why it is the usual choice for incremental updates.

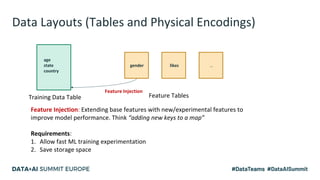

3. Schema Handling: Save As Table gives you more control when the table is created, since you can configure options such as the format, partitioning, and bucketing. When appending to an existing table, it matches the DataFrame's columns to the table's columns by name. Insert Into uses the table definition as-is and matches columns by position, so the DataFrame's column order must match the table's.

4. Use Cases: Save As Table is suitable for scenarios where you want to store an entire DataFrame or Dataset for later retrieval or downstream applications, such as batch data processing or building data warehouse tables. Insert Into, on the other hand, is ideal for incremental batch updates, where new data is regularly appended to a table that already exists.

FAQs:

Q: Can Save As Table create a new table from an existing DataFrame or Dataset?

A: Yes, Save As Table creates a new table from the contents of an existing DataFrame or Dataset. If a table with that name already exists, the default mode raises an error; use `mode("overwrite")` to replace it.

Q: Is it possible to append new data to an existing table using Save As Table?

A: Yes. Call it with `mode("append")`, for example `df.write.mode("append").saveAsTable("my_table")`, and the new rows are added to the existing table, with columns matched by name.

Q: Why would I use Save As Table instead of Insert Into?

A: Save As Table is useful when you want to store the entire DataFrame or Dataset as a table for future retrieval or use in downstream applications. It is commonly used for batch data processing or creating data warehouses.

Q: Can I use Insert Into to create a new table?

A: No, Insert Into assumes that the destination table already exists. It is designed to insert new data into an existing table without affecting the existing records.

Q: Which method is suitable for handling incremental data updates?

A: Insert Into is ideal for handling incremental data updates. It appends new data to an existing table, making it suitable for scenarios such as recurring batch loads that continuously add new data.

In conclusion, Save As Table and Insert Into are two important methods in Spark for storing DataFrame or Dataset contents as tables. While Save As Table is mainly used for creating or replacing tables, Insert Into is designed for incremental data updates. Understanding the differences between these two methods will help you choose the appropriate approach based on your specific use case.

How Can A Spark Dataframe Be Saved As A Persistent Table?

Apache Spark is an open-source distributed computing system that allows users to process massive amounts of data using a cluster of computers. One of the core components of Spark is the DataFrame, which is a distributed collection of data organized into named columns. Spark DataFrames provide a high-level API for working with structured and semi-structured data, making it easy to manipulate and analyze large datasets.

In many cases, it is not sufficient to perform one-time analyses on data; instead, there is often a need to persist data for future use or for sharing with other users. Spark provides various ways to save a DataFrame as a persistent table, which allows data to be stored in a structured and queryable format. In this article, we will explore some of the popular options for saving DataFrames as persistent tables in Spark.

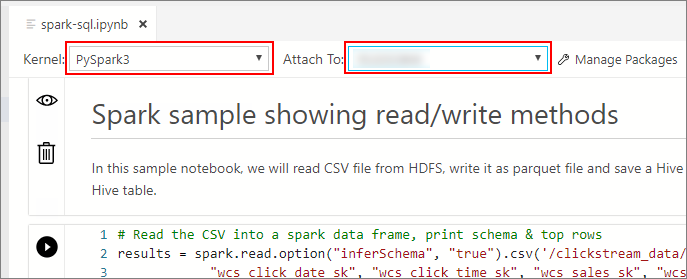

1. Save as a Parquet file:

Parquet is a columnar storage format that is optimized for large-scale data processing. It is highly efficient in terms of both storage and query performance. Spark saves DataFrames as Parquet files with the `write.parquet()` method. Here's an example of how to save a DataFrame called “myDF” as Parquet. Note that this writes part files to a directory; it does not register a table in the catalog, so the data can only be queried by path until a table is defined on top of it.

```python
myDF.write.parquet("path/to/save.parquet")
```

2. Save as an ORC file:

ORC (Optimized Row Columnar) is another columnar storage format supported by Spark. Similar to Parquet, ORC provides excellent performance for both reading and writing large datasets. To save a DataFrame as an ORC file, use the write.orc() method, like this:

```python
myDF.write.orc("path/to/save.orc")
```

3. Save as a CSV file:

Comma-separated values (CSV) is a plain text format commonly used for data exchange between different systems. Although not as optimized as the columnar formats mentioned previously, CSV files are widely supported and easy to work with. To save a DataFrame as a CSV file, use the write.csv() method:

```python
myDF.write.csv("path/to/save.csv")
```

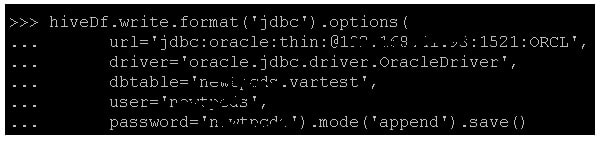

4. Save to a database:

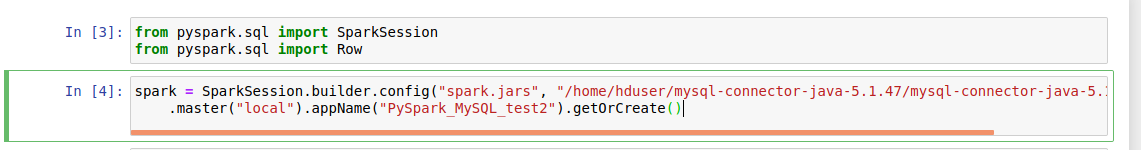

Spark's built-in JDBC data source can write DataFrames directly to tables in relational databases such as MySQL, PostgreSQL, and Oracle, as long as the database's JDBC driver is available on Spark's classpath. To save a DataFrame to a database table, configure the connection properties and use the `write.jdbc()` method. Here's an example of saving a DataFrame to a MySQL database:

```python
# Requires a MySQL JDBC driver on Spark's classpath
myDF.write.jdbc(
    url="jdbc:mysql://localhost/mydb",
    table="mytable",
    mode="overwrite",
    properties={"user": "username", "password": "password"},
)
```

5. Save to Hive:

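Hive is a data warehouse infrastructure built on top of Hadoop that provides a SQL-like interface for querying and managing large datasets. Spark integrates with Hive when the SparkSession is built with `enableHiveSupport()`, allowing DataFrames to be saved as managed tables in Hive's metastore. To save a DataFrame this way, use the `saveAsTable()` method of the DataFrame's writer:

```python
myDF.write.saveAsTable("mytable")
```

This creates a managed table named “mytable” in the Hive metastore and stores the DataFrame's contents in the warehouse directory. Without Hive support, the same call registers the table in Spark's built-in in-memory catalog, whose table metadata is lost when the application ends.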

FAQs:

Q1. Can I save a DataFrame to multiple file formats simultaneously?

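No single call writes several formats, and write calls cannot be chained, because `write.parquet()` returns nothing. Instead, call the writer once per format. Caching the DataFrame first avoids recomputing it for every write:

```python
myDF.cache()  # keep the computed rows so the second write does not recompute them
myDF.write.parquet("path/to/save.parquet")
myDF.write.csv("path/to/save.csv")
```

This saves the DataFrame as both a Parquet directory and a CSV directory, in two separate jobs.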

Q2. How can I specify custom options when saving a DataFrame?

Spark provides the option() method that can be used to specify custom options when saving a DataFrame. This method takes a key-value pair as input, where the key represents the option name and the value represents the option value. Here’s an example:

```python
myDF.write.option("header", "true").csv("path/to/save.csv")
```

In this example, the “header” option is set to “true” to include a header row in the CSV file.

Q3. Can I append data to an existing persistent table?

Yes, Spark allows you to append to existing output by setting the save mode to “append”. This works both for `saveAsTable()` and for path-based writes like the one below, where new part files are added to the existing directory. For example:

```python
myDF.write.mode("append").parquet("path/to/save.parquet")
```

Q4. How can I overwrite an existing persistent table?

To overwrite an existing persistent table, set the mode option to “overwrite” when saving the DataFrame. This will replace the existing contents of the table with the DataFrame’s data. Here’s an example:

```python
myDF.write.mode("overwrite").orc("path/to/save.orc")
```

In conclusion, Spark provides multiple options for saving DataFrames as persistent tables. Whether you prefer columnar formats like Parquet and ORC for optimal performance or more universal formats like CSV, Spark has you covered. Additionally, Spark allows seamless integration with relational databases and Hive, enabling you to save DataFrames directly to database tables or Hive managed tables. So, choose the format and storage option that best suits your needs and make the most of Spark’s powerful capabilities for persistent data storage and analysis.

Spark Save Hive Table With Partition

Introduction:

Apache Spark is an open-source, distributed computing system widely used for big data processing and analytics tasks. Spark seamlessly integrates with Apache Hive, a data warehouse infrastructure built on top of Hadoop, providing a convenient way to query and manage large datasets. In this article, we’ll dive into the process of saving a Spark DataFrame as a partitioned Hive table, offering an efficient and organized way to store and access our data.

Saving a Spark DataFrame as a Partitioned Hive Table:

Spark simplifies the process of saving a DataFrame as a Hive table by providing an easy-to-use API. By leveraging the partitioning capability of Hive, we can break down our data into logical units based on specific columns and store them in separate directories.

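To save a DataFrame as a Hive table with partitions, we first need to ensure that Hive support is enabled in Spark. This is done when the SparkSession is built, by calling `enableHiveSupport()`. The `hive.exec.dynamic.partition` settings do not enable Hive support; they only matter for dynamic-partition `INSERT` statements into Hive-format tables, where `hive.exec.dynamic.partition.mode` usually has to be set to `nonstrict`:

```scala
import org.apache.spark.sql.SparkSession

// Hive support is switched on when the session is built
val spark = SparkSession.builder().enableHiveSupport().getOrCreate()

// Only needed for dynamic-partition INSERTs into Hive-format tables
spark.conf.set("hive.exec.dynamic.partition.mode", "nonstrict")
```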

Once Hive support is enabled, we can proceed to save our DataFrame. Here’s an example illustrating how to save a DataFrame called `df` as a partitioned Hive table named `my_table` with partitions based on the `date` column:

```scala
df.write
  .partitionBy("date")
  .saveAsTable("my_table")
```

In the above example, the `partitionBy` method instructs Spark to partition the data based on the `date` column. Each distinct value in the `date` column will create a separate directory within the Hive table location.

When saving a DataFrame as a partitioned table, the partition column's data type comes from the DataFrame's schema; there is no writer option that sets it. If the `date` column is still a string, cast it before writing (this sketch assumes the strings are in `yyyy-MM-dd` form):

```scala
import org.apache.spark.sql.functions.{col, to_date}

df.withColumn("date", to_date(col("date"))) // store the partition column as DATE
  .write
  .partitionBy("date")
  .saveAsTable("my_table")
```

In the above code snippet, `to_date` converts the `date` column to the `DATE` type before the write, so the table schema records the partition column as `DATE`. This ensures the partition values are interpreted correctly when reading and querying the table.

Frequently Asked Questions:

Q1. What is the purpose of partitioning a Hive table?

Partitioning a Hive table provides several advantages:

– Improved query performance: Partitioning enables pruning, where only relevant partitions are scanned during query execution, significantly reducing the amount of data processed.

– Simplified data management: Partitioning allows for more efficient data organization and retrieval based on specific criteria, such as dates, regions, or categories.

– Granular control over data: Partitioning makes it easier to add or remove data subsets from a table without affecting the entire dataset.

Q2. Can I create multiple levels of partitions in a Hive table?

Yes, Hive supports hierarchical partitioning. You can define multiple levels of partition columns, representing different grouping levels. For example, you could partition data by year, then by month, and finally by day, creating a multi-level directory structure.

Q3. Can I dynamically add partitions to a Hive table?

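Yes. With dynamic partitioning, new partitions are created automatically: when you append or insert rows whose partition-column values are new (for example, through `insertInto()` or an `INSERT INTO ... SELECT` statement), Spark writes them to new partition directories and registers those partitions with the table. You can also register a partition explicitly with the `ALTER TABLE ... ADD PARTITION` command via `spark.sql`, for example to attach a directory that was written outside Spark.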

Q4. How can I specify a custom partition format in Hive?

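By default, Hive uses the `key=value` format to name and organize partition directories, and this naming scheme is fixed for Hive and Spark partitioned tables. What you can control is where an individual partition lives: `ALTER TABLE ... ADD PARTITION ... LOCATION '<path>'` points a partition at an arbitrary directory. Note that the `hive.exec.dynamic.partition.mode` property is unrelated to naming: `strict` (Hive's default) requires at least one static partition value in a dynamic-partition insert, while `nonstrict` allows all partition values to be dynamic.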

Q5. Are there any limitations to partitioning in Hive?

Partitioning in Hive has a few limitations to note:

– Large number of partitions: An excessive number of partitions (and the many small files that usually come with them) slows down query planning and metastore operations. Prefer partition columns with moderate cardinality, for example month instead of customer ID, and consider bucketing for high-cardinality columns.

– Overhead of large data updates: In cases where a significant portion of partitions requires updates, it might be more efficient to overwrite the entire table instead of performing individual updates.

Conclusion:

In this guide, we explored the process of saving a Spark DataFrame as a partitioned Hive table. We learned how to enable Hive support in Spark, define partition columns, control their data types, and save the DataFrame as a Hive table. Additionally, we discussed the benefits of partitioning, multi-level partitions, dynamic partitioning, and how partition directories are named and located. By leveraging Spark and Hive together, we can efficiently manage and query large datasets, improving performance and simplifying data organization.

Spark Write CSV

Introduction:

Apache Spark, an open-source distributed computing system, offers powerful functionalities for large-scale data processing, analytics, and machine learning. One of its key features is the ability to handle diverse data formats efficiently, including CSV (Comma-Separated Values). In this article, we will delve into the various aspects of Spark’s CSV writing capabilities, exploring its implementation, optimizations, and commonly encountered challenges. Additionally, we will address frequently asked questions to provide a comprehensive understanding of Spark’s CSV-writing functionality.

I. Basics of Writing CSV Files in Spark:

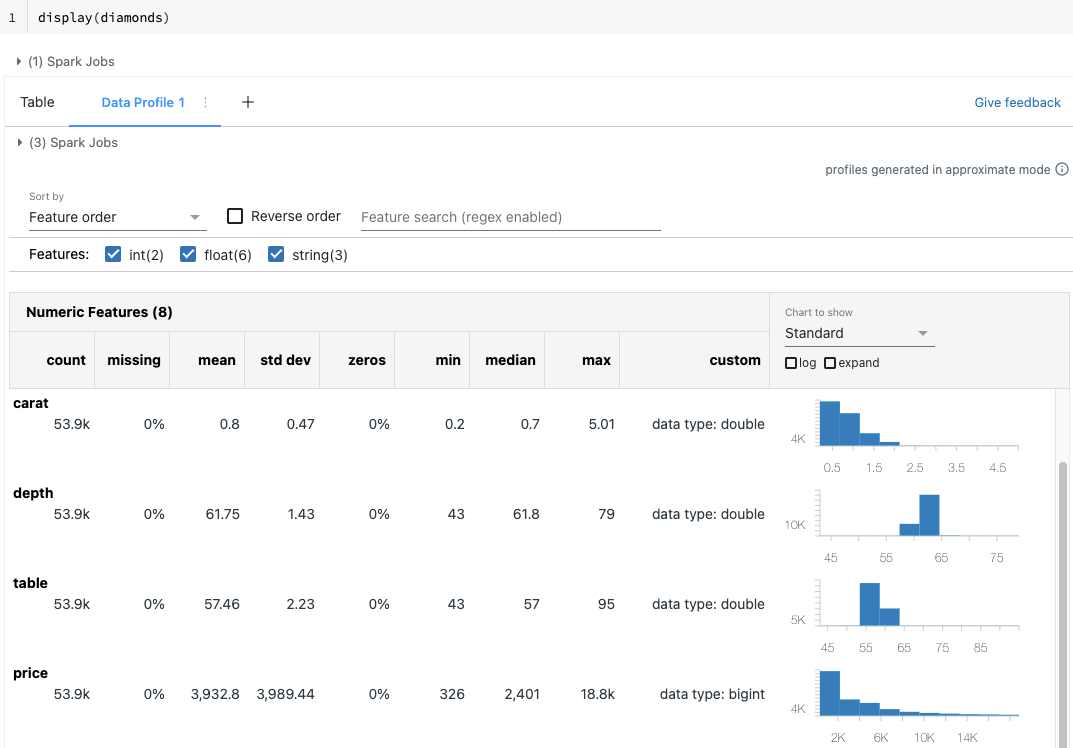

Apache Spark’s DataFrames and Datasets APIs provide high-level abstractions for structured data processing. These APIs simplify the process of writing CSV files, offering flexibility and performance optimizations. Below, we outline the fundamental steps to write CSV files using Spark:

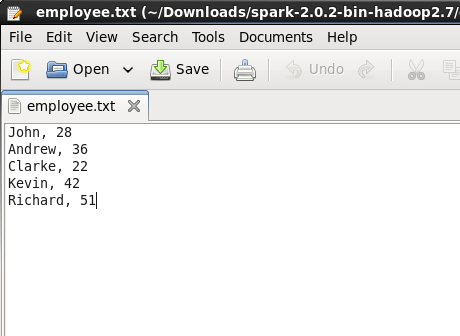

1. Create a DataFrame or Dataset: Begin by creating a DataFrame or Dataset representing the data you want to write to a CSV file. This can be done by reading data from various sources, such as files, databases, or streaming systems.

2. Apply transformations (if required): Perform any necessary transformations on the DataFrame or Dataset, such as filtering, grouping, or aggregations, to prepare the data for CSV writing.

3. Define output options: Set the desired options for CSV writing, including the file format, delimiter, header inclusion, and other specific requirements.

4. Write DataFrame/Dataset to CSV: Use the DataFrameWriter (`df.write`) to save the DataFrame or Dataset in CSV format, providing the desired output path. Spark treats that path as a directory and writes one part file per partition into it.

II. CSV Writing Options in Spark:

Spark offers a wide range of options to customize the CSV writing process. Some important options include:

1. File format: Select CSV either with the generic `format("csv")` followed by `save(path)`, or with the shortcut `csv(path)`, which sets the format for you; the two are equivalent.

2. Delimiter: Customize the field separator with the “sep” option; “delimiter” is an alias for the same option. By default, Spark uses a comma (“,”).

3. Header inclusion: Include or exclude a header row using the “header” option. Setting it to “true” writes the column names as the first line of each output file; the default is “false”, so no header is written unless you ask for one.

4. Compression: Compress the output CSV file using codecs like gzip, bzip2, or deflate, specified using the “compression” option.

5. Control character escaping: Handle control characters, like newlines or delimiters, within field values using options like “escape” and “quote”, ensuring proper rendering of the CSV file.

III. Performance Optimizations:

Writing large CSV files efficiently can be challenging due to overheads associated with serialization, I/O operations, and network transfers. Spark mitigates these issues through various optimizations:

1. Partitioning: Splitting the data into more partitions lets Spark write chunks of data in parallel, one task per partition. You can control this with `repartition()`, optionally by specific columns, and with `partitionBy()` to lay the output out in per-value directories. Hash bucketing with `bucketBy()` is also available, but only when saving with `saveAsTable()`, not when writing CSV to a path.

2. Coalescing: `coalesce(n)` reduces the number of output files by merging existing partitions into fewer ones without a full shuffle (unlike `repartition(n)`, which shuffles the data). Fewer partitions also mean fewer parallel write tasks, so coalescing to a very small number can slow down large writes.

3. Resource allocation: Adjusting the driver and executor memory configurations, as well as the parallelism settings, can greatly impact write performance. It is crucial to monitor resource utilization and tune these parameters accordingly.

IV. FAQs (Frequently Asked Questions):

Q1. How do I specify a custom delimiter character?

A. Use `option("delimiter", "|")` (or the equivalent `option("sep", "|")`), replacing `|` with your delimiter character.

Q2. Can I write DataFrames/Datasets to multiple CSV files?

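A. Yes, and this is in fact the default: the output path is always treated as a directory, and Spark writes one part file per partition into it. To get a single file, reduce the DataFrame to one partition first, for example with `coalesce(1)`, at the cost of writing from a single task.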

Q3. How can I handle null values in CSV files?

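A. When writing, Spark writes null values as empty strings by default. To write them differently, use `option("nullValue", "NULL")`, replacing `NULL` with the marker you want. The same option tells the reader which string to treat as null when the file is read back.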

Q4. Can Spark write CSV files directly to cloud storage providers like S3 or Azure Blob Storage?

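A. Yes. Spark can write CSV files directly to cloud object stores through the corresponding Hadoop connectors, for example `s3a://` URLs for Amazon S3 (via the `hadoop-aws` module) or `wasbs://` and `abfss://` URLs for Azure storage (via the `hadoop-azure` module), once the connector and credentials are configured.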

Q5. Does Spark perform automatic schema inference while writing CSV files?

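A. No. A write uses the DataFrame's existing schema, and CSV files do not store column types, so typing matters only when the files are read back. At that point you either pass an explicit schema to the reader with `spark.read.schema(...)` or enable the `inferSchema` option; otherwise every column is read as a string.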

Conclusion:

Apache Spark’s ability to write CSV files efficiently makes it an extremely valuable tool for data engineers and analysts dealing with large-scale data processing. This article covered the basics of writing CSV files in Spark, explored various options for customization, and discussed performance optimization techniques. By harnessing the power of Spark’s CSV writing capabilities, users can seamlessly handle diverse datasets and leverage the strength of distributed computing to process and analyze their data effectively.

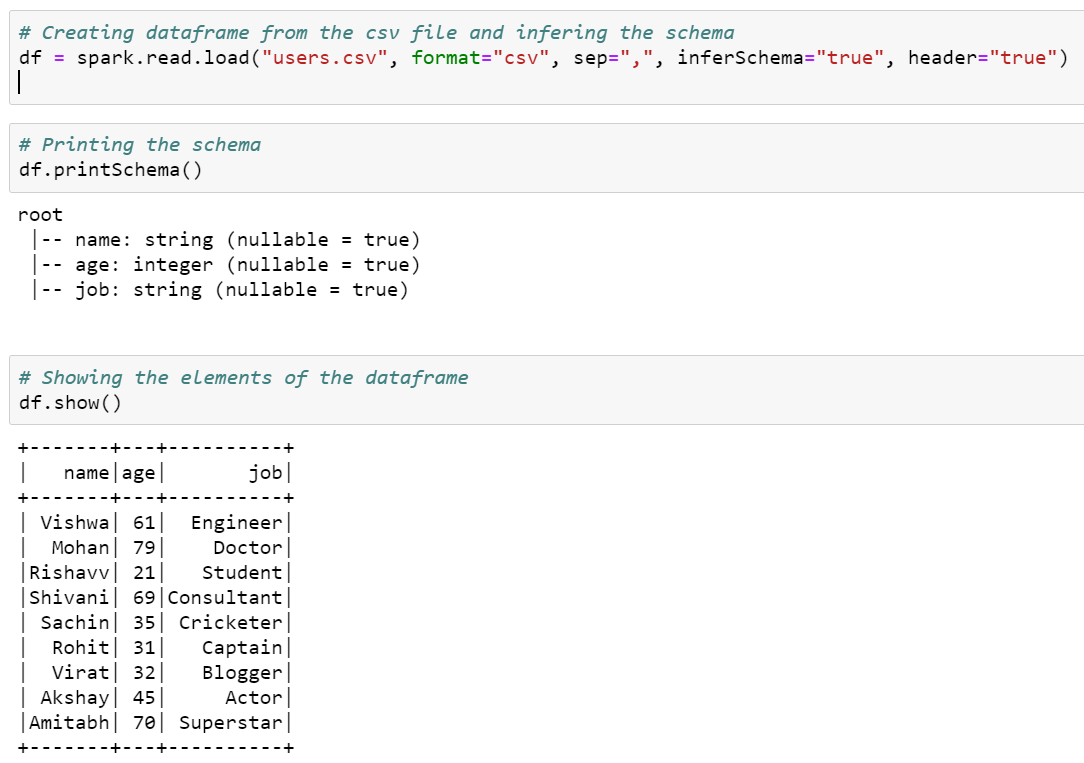

Spark Read Option

Introduction:

In the realm of Big Data processing, Apache Spark has emerged as a powerful tool due to its ability to handle massive datasets and execute complex distributed computations. An integral part of ingesting data into Spark is its reader API, `spark.read`, configured through `option()` calls; in this article we refer to it as the Spark Read Option. We will explore its functionality and advantages, shedding light on its underlying mechanics.

Spark Read Option Explained:

The Spark Read Option refers to the DataFrameReader (`spark.read`), which reads data from various file formats, including CSV, Parquet, JSON, Avro, and more, into Spark DataFrames. You pick the format with `format()` (or a shortcut such as `csv()` or `parquet()`), tune how the data is parsed with `option()` or `options()`, and load it with `load()`, after which the DataFrame is available for processing and analysis.

Key Features and Benefits:

1. Flexibility: The Spark Read Option offers flexibility in terms of input formats, allowing data ingestion from diverse sources. This versatility simplifies the ingestion process, ensuring compatibility with different file formats commonly used in the industry.

2. Scalability: Spark leverages its distributed computing model to facilitate parallel processing, ensuring quick and efficient data loading, even for large datasets. The Spark Read Option maximizes performance by distributing the data across multiple executor nodes, enabling faster operations.

3. Ease of Use: Spark provides a user-friendly API for executing the Spark Read Option, making it accessible for both novice and experienced developers. This straightforward API streamlines the data loading process, minimizing complexities.

4. Fault Tolerance: Spark’s resilient distributed dataset (RDD) architecture ensures fault tolerance by automatically recovering from node failures. The Spark Read Option leverages this framework, enhancing overall reliability in processing large-scale data.

Supported File Formats:

The Spark Read Option supports numerous file formats, including, but not limited to:

1. CSV (Comma-separated values): A common format for tabular data storage, CSV files consist of rows and columns with values separated by commas.

2. Parquet: An efficient columnar storage format that offers fast query performance and high compression ratios, ideal for Big Data processing.

3. JSON (JavaScript Object Notation): JSON is a widely used format for data interchange and is readable by both humans and machines.

4. Avro: Avro is a binary data serialization format that supports schema evolution over time, making it suitable for evolving data models. Reading it requires the external `spark-avro` module.

5. ORC (Optimized Row Columnar): ORC files are columnar storage formats specifically designed for Hadoop workloads, enhancing query performance.

FAQs:

Q1. Can the Spark Read Option handle compressed files?

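Yes, the Spark Read Option is capable of reading compressed files. For text-based formats such as CSV and JSON, the codec (for example gzip or bzip2) is detected from the file extension, while Parquet and ORC record their internal compression (for example snappy or zlib) inside the files. Codecs such as LZO need an extra Hadoop codec library, and gzip files cannot be split, so each one is read by a single task.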

Q2. Is it necessary to define the schema manually when using the Spark Read Option?

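It depends on the format. Parquet, ORC, and Avro files store their schema, so Spark reads it from the files. For JSON, Spark infers the schema by scanning the data. For CSV, every column is read as a string unless you enable the `inferSchema` option, which costs an extra pass over the data. In all cases you can specify the schema explicitly with `schema()`, which also avoids the cost of inference.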

Q3. Can the Spark Read Option handle loading data from remote storage systems like Amazon S3 or Hadoop Distributed File System (HDFS)?

Absolutely. The Spark Read Option supports seamless integration with remote storage systems, enabling effortless data loading from popular platforms like Amazon S3 and HDFS.

Q4. Does the Spark Read Option introduce any performance optimizations for data ingestion?

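The reader applies several optimizations. On HDFS, tasks are scheduled close to the blocks they read (data locality), which reduces network traffic; object stores such as Amazon S3 offer no such locality. For columnar formats like Parquet and ORC, Spark also reads only the columns a query needs and pushes filters down to skip data that cannot match, and for partitioned data it skips whole partition directories.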

Q5. Are there any specific APIs available for using the Spark Read Option?

Yes, Spark provides dedicated APIs such as `spark.read` and `spark.readStream` to facilitate efficient data loading using the Spark Read Option. These APIs offer various configuration options and methods to customize data ingestion according to specific requirements.

Conclusion:

The Spark Read Option plays a pivotal role in Apache Spark’s capability to efficiently ingest and process large volumes of data. Its flexibility, scalability, and fault tolerance features make it an indispensable tool for data engineers and scientists working with Big Data. By supporting various file formats and employing parallel distributed computing, this option enables seamless data loading and paves the way for powerful analytics and insights in the world of Big Data.
