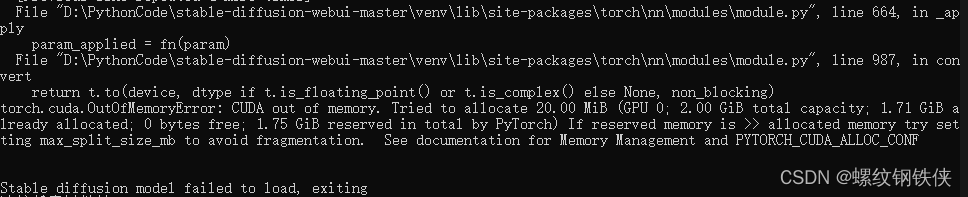

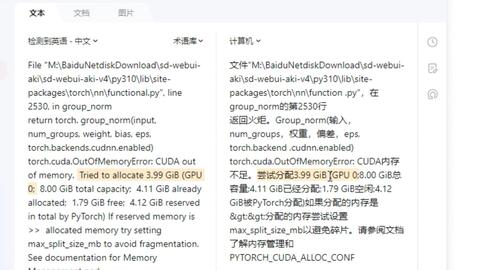

torch.cuda.OutOfMemoryError: CUDA out of memory.

torch.cuda.OutOfMemoryError is a common error when training or running deep learning models with the PyTorch library. It is raised when PyTorch requests GPU memory during a CUDA operation and the request cannot be satisfied, and its message usually begins with “CUDA out of memory”. Older PyTorch releases report the same condition as a plain RuntimeError. In this article, we will discuss the common causes of this error and provide some solutions to overcome it.

Common Causes of torch.cuda.OutOfMemoryError

1. Insufficient GPU Memory: One of the primary reasons for this error is simply that the GPU does not have enough memory for the job. During training, the GPU must hold the model parameters, their gradients, the optimizer state, and the intermediate activations saved for the backward pass. If the total exceeds the memory available on the device, the out-of-memory error occurs.

2. Large Batch Sizes: Training a deep neural network with a large batch size requires more memory compared to a smaller batch size. If the batch size is too large, it can exceed the available GPU memory and cause the out-of-memory error.

3. High-dimensional Data or Complex Models: Models with a large number of parameters or complex architectures can consume a significant amount of GPU memory. Additionally, high-dimensional input data, such as images with large resolutions, can also contribute to higher memory requirements.

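4. Memory Leakage in the Code: In PyTorch, GPU memory is released only when the last reference to a tensor goes away. A common leak is accumulating the loss tensor itself across iterations (instead of its Python value via loss.item()), which keeps every iteration's computation graph alive. This leads to a gradual increase in GPU memory usage over time, eventually causing the out-of-memory error.

5. Inadequate Error Handling: Error handling does not cause the error by itself, but it can hide the cause or make recovery fail. For example, a caught exception keeps a reference to the stack frame where it was raised, so tensors in that frame cannot be freed while the exception is still being handled.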

6. Presence of Unnecessary Variables or Tensors: Keeping unnecessary variables or tensors in memory can consume valuable GPU memory. It is essential to remove unused variables or tensors promptly to prevent memory exhaustion.

7. Inefficient CPU and GPU Data Handling: Moving an entire dataset to the GPU up front, or leaving results on the GPU after they are no longer needed there, occupies memory that the model could use. It is usually better to transfer one batch at a time and move outputs back to the CPU once they are computed.

8. Insufficient System RAM: Although the GPU memory is the primary concern, insufficient system RAM can cause related failures. Running out of system RAM normally produces a different error (or the process is killed by the operating system) rather than a CUDA out-of-memory error, so it is worth checking which of the two is actually exhausted.

9. Outdated GPU Driver or CUDA Version: This is rarely the root cause, but it is still sensible to keep your GPU driver, CUDA version, and PyTorch build up to date and compatible with one another, since old or mismatched versions can occasionally produce confusing memory-related errors.
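To get a rough sense of cause 1, the memory taken by model state can be estimated with simple arithmetic. The sketch below is a back-of-the-envelope calculation, not a PyTorch API: the function name and the default of two optimizer states per parameter (as kept by Adam-style optimizers) are assumptions for illustration, and activations, which often dominate, are deliberately left out.

```python
def estimate_model_state_gib(num_params: int,
                             bytes_per_value: int = 4,
                             optimizer_states_per_param: int = 2) -> float:
    """Rough GiB needed for weights + gradients + optimizer state.

    Hypothetical helper for illustration. Activations are NOT included,
    so the real peak during training is higher than this figure.
    """
    # One copy for the weights, one for the gradients, plus optimizer state.
    copies = 1 + 1 + optimizer_states_per_param
    return num_params * bytes_per_value * copies / 2**30


if __name__ == "__main__":
    # A 1-billion-parameter model in float32 with an Adam-style optimizer.
    print(f"{estimate_model_state_gib(1_000_000_000):.1f} GiB")
```

Under these assumptions a 1-billion-parameter model needs roughly 14.9 GiB before a single activation is stored, which is why a model can fail to train on a GPU that loads it for inference without trouble.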

Solutions to torch.cuda.OutOfMemoryError

1. Reduce Batch Size: If the batch size is too large and causes the out-of-memory error, you can try reducing it. A smaller batch size requires less memory but may result in slower training.

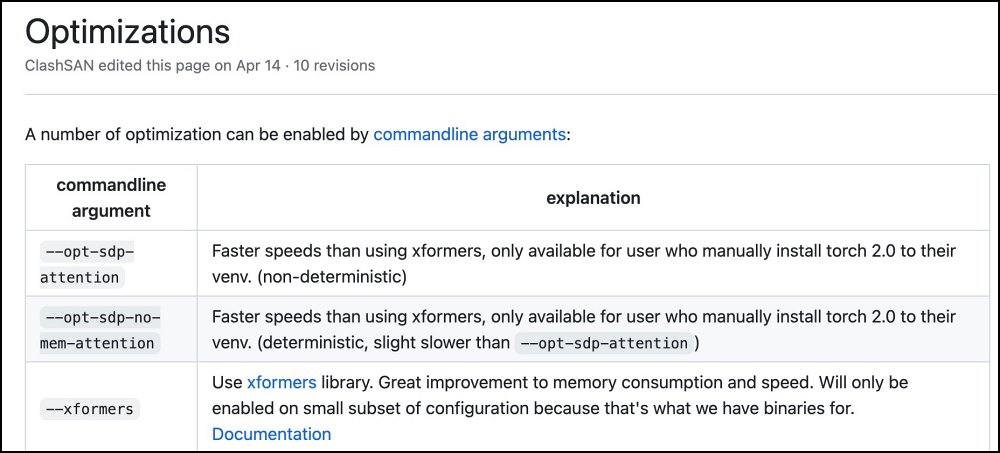

2. Increase Available GPU Memory: The physical memory on a GPU is fixed, but you can often make more of it available. Close other processes that are using the same GPU, restart notebook kernels that still hold old tensors, or move to a GPU (or cloud instance) with more memory.

3. Use Mixed Precision Training: Mixed precision training runs most operations in a lower precision (such as float16 or bfloat16), which reduces the memory used by activations. PyTorch supports this through torch.autocast; with float16, gradient scaling is normally used as well so that small gradients do not underflow to zero.

4. Optimize Model Architecture: If your model architecture is too complex, consider simplifying it without compromising performance. This can reduce memory requirements and alleviate the out-of-memory error.

5. Monitor and Fix Memory Leakage: Regularly check your code for memory leaks and fix them promptly. Ensure that you release memory properly after use and avoid unnecessary memory allocations.

6. Implement Error Handling: Proper error handling can help you identify memory-related issues early on. Implement error checks and log any memory-related errors to gain insights into the root causes.

7. Clear Unnecessary Variables or Tensors: Remove unused variables or tensors from memory when they are no longer needed. This can free up memory and prevent excessive memory consumption.

8. Manage CPU and GPU Memory Usage: Keep only the data that is currently needed on the GPU. Load batches onto the device one at a time, move outputs you want to keep back to the CPU, and run evaluation code under torch.no_grad() so that activations are not stored for a backward pass.

9. Upgrade System RAM: If your system RAM is limited, consider upgrading it to provide sufficient resources for both the CPU and GPU.

10. Update GPU Driver and CUDA Version: Regularly update your GPU driver and CUDA version to benefit from the latest memory management improvements and bug fixes.
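Solutions 1, 6 and 7 can be combined into a simple retry loop that halves the batch size whenever an out-of-memory error is caught. The following is a minimal sketch in plain Python, not part of PyTorch: run_with_batch_backoff and fake_step are hypothetical names, and the exception type is passed in as a parameter so that the example runs without a GPU. With PyTorch you would pass torch.cuda.OutOfMemoryError and could call torch.cuda.empty_cache() before retrying.

```python
from typing import Callable, Tuple, Type


def run_with_batch_backoff(
    step_fn: Callable[[int], object],
    batch_size: int,
    oom_exceptions: Tuple[Type[BaseException], ...] = (MemoryError,),
    min_batch_size: int = 1,
) -> Tuple[int, object]:
    """Call step_fn(batch_size), halving batch_size after each OOM.

    Returns (batch_size_that_worked, result). Hypothetical helper:
    with PyTorch, pass oom_exceptions=(torch.cuda.OutOfMemoryError,).
    """
    while True:
        try:
            return batch_size, step_fn(batch_size)
        except oom_exceptions:
            # Do not retry inside the except block: the exception's
            # traceback would keep the failed call's frame (and any
            # tensors in it) alive. Fall through and retry below.
            pass
        if batch_size <= min_batch_size:
            raise MemoryError(f"still out of memory at batch size {batch_size}")
        batch_size = max(min_batch_size, batch_size // 2)
        print(f"out of memory, retrying with batch size {batch_size}")


if __name__ == "__main__":
    def fake_step(n: int) -> str:
        # Stand-in for a training step: pretend anything above 8 is too big.
        if n > 8:
            raise MemoryError("simulated CUDA out of memory")
        return f"trained on {n} samples"

    print(run_with_batch_backoff(fake_step, batch_size=64))
```

A smaller batch changes the training dynamics, so this kind of back-off is best used to find a workable batch size once; accumulating gradients over several small batches is a common way to keep the effective batch size unchanged.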

FAQs:

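Q1: What does “CUDA out of memory” mean in Stable Diffusion?

A1: Stable Diffusion is a text-to-image model that is commonly run with PyTorch on an NVIDIA GPU. “CUDA out of memory” in this context is the same error described above: the model, together with the images being generated, needs more GPU memory than is available. It is covered in more detail later in this article.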

Q2: How to resolve “cuda out of memory” in Google Colab?

A2: To resolve “cuda out of memory” in Google Colab, you can try reducing the batch size or using techniques like mixed precision training. Additionally, clearing unnecessary variables and tensors can help free up memory.

Q3: Why do I get “cuda out of memory” error in PyTorch even when there is enough memory?

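A3: The error can occur even when monitoring tools show free memory. Common reasons are that the free memory is fragmented, so no single contiguous block is large enough for the request; that another process is using part of the GPU; or that PyTorch's caching allocator is holding memory it has reserved but is not currently using. The numbers in the error message itself (allocated, reserved, and free memory) usually show which of these applies.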

Q4: How to handle “cuda out of memory” in Amazon SageMaker?

A4: To handle “cuda out of memory” in Amazon SageMaker, you can follow similar approaches mentioned earlier, such as reducing batch size or optimizing memory usage. Additionally, upgrading the instance type to one with higher memory capacity can also help mitigate the issue.
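For the situation in Q3, the figures in the error message can be compared programmatically. The sketch below assumes the message layout used by older PyTorch releases (requested size, then “already allocated”, “free”, and “reserved in total by PyTorch”); newer releases word the message differently, so the regular expressions are an assumption to adapt. The function names and the sample message are made up for illustration.

```python
import re
from typing import Dict

_UNIT_BYTES = {"KiB": 2**10, "MiB": 2**20, "GiB": 2**30}

# Patterns for the older message layout (an assumption; adapt as needed).
_PATTERNS = {
    "requested": r"Tried to allocate ([\d.]+) (KiB|MiB|GiB)",
    "allocated": r"([\d.]+) (KiB|MiB|GiB) already allocated",
    "free": r"([\d.]+) (KiB|MiB|GiB) free",
    "reserved": r"([\d.]+) (KiB|MiB|GiB) reserved",
}


def parse_oom_message(message: str) -> Dict[str, float]:
    """Return the byte counts found in the message, keyed by field name."""
    stats = {}
    for key, pattern in _PATTERNS.items():
        match = re.search(pattern, message)
        if match:
            stats[key] = float(match.group(1)) * _UNIT_BYTES[match.group(2)]
    return stats


def looks_fragmented(stats: Dict[str, float]) -> bool:
    """True if reserved-but-unused memory alone could cover the request."""
    needed = ("requested", "allocated", "reserved")
    if not all(key in stats for key in needed):
        return False
    cached_unused = stats["reserved"] - stats["allocated"]
    return cached_unused >= stats["requested"]


if __name__ == "__main__":
    # Made-up sample in the older message layout.
    sample = (
        "CUDA out of memory. Tried to allocate 2.00 GiB (GPU 0; "
        "15.78 GiB total capacity; 9.00 GiB already allocated; "
        "1.50 GiB free; 13.00 GiB reserved in total by PyTorch)"
    )
    stats = parse_oom_message(sample)
    print({key: f"{value / 2**30:.2f} GiB" for key, value in stats.items()})
    print("possible fragmentation:", looks_fragmented(stats))
```

If the heuristic fires, the allocator option max_split_size_mb is the setting that the older error message itself suggests trying. It is set through the PYTORCH_CUDA_ALLOC_CONF environment variable before the program starts, for example PYTORCH_CUDA_ALLOC_CONF=max_split_size_mb:128, where 128 is only an illustrative value.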

In conclusion, torch.cuda.OutOfMemoryError is a common error encountered during deep learning model training. By understanding the common causes and implementing the suggested solutions, you can effectively overcome this error and ensure smooth execution of your GPU-accelerated computations.


CUDA Out Of Memory in Stable Diffusion

Introduction to CUDA Out of Memory Errors in Stable Diffusion

CUDA (Compute Unified Device Architecture) is a parallel computing platform and application programming interface (API) model created by NVIDIA. It provides developers with a way to harness the power of NVIDIA GPUs (Graphics Processing Units) for general-purpose computing tasks. Stable Diffusion is a text-to-image model that is usually run through PyTorch on a CUDA GPU, and one of the most common problems users hit is running out of GPU memory, especially when generating large images or several images at once. In this section, we will discuss why Stable Diffusion runs out of memory and how to keep it running within the memory that is available.

Understanding CUDA Memory Management

To understand out-of-memory errors in Stable Diffusion, it helps to have a basic understanding of CUDA memory management. CUDA GPUs expose several memory spaces, including global memory, shared memory, and local memory. Global memory is the largest and is where PyTorch stores its tensors, so it is the one that runs out when a “CUDA out of memory” error is raised. However, accessing global memory has higher latency than accessing on-chip shared memory.

To improve memory access efficiency, CUDA allows developers to utilize shared memory, which resides on a multiprocessor and can be accessed faster than global memory. Developers can also use local memory, private to each thread, for temporary data storage.

The Challenge: Out of Memory Errors

When an application tries to allocate more GPU memory than is available, the allocation fails and CUDA returns an error to the caller; the GPU does not silently produce wrong results. In PyTorch the failure surfaces as an exception, and unless the program catches it, execution stops at that point. This can be particularly problematic for long-running computations, which lose their progress unless they save intermediate results.

Reducing Memory Use in Stable Diffusion: An Overview

“CUDA out of memory” in Stable Diffusion is not a separate technique or a special version of CUDA; it is the ordinary out-of-memory error raised while the model is loading or generating an image. The usual remedies all reduce how much GPU memory is needed at one time: generate smaller images, generate fewer images per batch, load the model weights in half precision (float16), and compute attention in smaller slices rather than all at once.

When these are not enough, parts of the model can be kept in system RAM and moved to the GPU only while they are in use (often called CPU offloading). This lowers peak GPU memory use at the cost of speed, and makes it possible to generate images that would otherwise exceed the memory of the GPU.

FAQs about CUDA Out of Memory in Stable Diffusion

Q: Can Stable Diffusion spread its memory use across multiple GPUs?

A: Not automatically. A single image generation normally runs on one GPU, so adding a second GPU does not by itself prevent the error. Multiple GPUs are more commonly used to generate different images in parallel, with each GPU holding its own copy of the model.

Q: Are memory-saving options needed for every setup?

A: No. They are most useful on GPUs with limited memory, or when generating large images or large batches. If the model already fits comfortably, they are unnecessary and may only slow generation down.

Q: Are there any performance trade-offs when using memory-saving options?

A: Usually, yes. Attention slicing and CPU offloading trade speed for lower peak memory use, so images take longer to generate. Half precision is the main exception: on GPUs that support it well, it typically reduces memory use without slowing generation. The slowdown from the other options is generally acceptable when the alternative is not being able to generate the image at all.

Q: Can memory-saving options be combined?

A: Yes. Half precision, smaller batches, lower resolution, attention slicing, and CPU offloading address different parts of the memory budget and can be used together.

Conclusion

Out-of-memory errors in Stable Diffusion come from the same source as in any other PyTorch program: the model and its intermediate results need more GPU memory than is available. Reducing resolution and batch size, using half precision, and enabling the memory-saving options described above resolve most cases, although some of them come with a performance cost.

OutOfMemoryError: CUDA Out Of Memory in Stable Diffusion

Introduction

OutOfMemoryError is a common error encountered when running models on CUDA, the parallel computing platform and application programming interface model created by NVIDIA. This error occurs when the GPU is unable to allocate enough memory to perform the requested computation. One scenario where it is often encountered is image generation with Stable Diffusion. In this section, we will explore what drives GPU memory use in Stable Diffusion and discuss potential solutions to overcome this issue.

Causes of OutOfMemoryError in Stable Diffusion

CUDA provides a powerful platform for parallel computing on NVIDIA GPUs, enabling applications to run faster by offloading computations to the GPU. However, the limited memory on the GPU can sometimes pose a challenge, leading to OutOfMemoryError. Several factors contribute to this error when running Stable Diffusion.

1. Image Resolution: Stable Diffusion works on a grid of latent values whose size grows with the width and height of the output image. As the resolution increases, so does the memory requirement, and the memory used by standard attention layers grows faster than the image area itself, potentially pushing the GPU to its limits.

2. Batch Size: Generating several images in one batch multiplies the memory needed for intermediate results by the number of images. The number of sampling steps, by contrast, mainly affects how long generation takes, because each step can reuse the memory of the previous one.

3. Precision and Attention Implementation: Running the model in float32 uses about twice the memory of float16 for the same weights and activations. Computing attention over the whole image at once also needs more memory than memory-efficient or sliced attention.
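The effect of resolution on memory can be illustrated with a rough calculation. The sketch below assumes a Stable Diffusion v1-style model (latents at 1/8 of the image resolution, 8 attention heads) and a naive attention implementation that stores the full score matrix in float16. These figures and the function name are assumptions for illustration, and memory-efficient attention implementations avoid storing this matrix at all.

```python
def attention_scores_mib(width: int, height: int,
                         downsample: int = 8,
                         heads: int = 8,
                         bytes_per_value: int = 2) -> float:
    """MiB for one naive self-attention score matrix at full latent size.

    Illustrative estimate only: each latent position is one token, and
    the score matrix holds tokens x tokens values per head for one image.
    """
    tokens = (width // downsample) * (height // downsample)
    return tokens * tokens * heads * bytes_per_value / 2**20


if __name__ == "__main__":
    for side in (512, 768, 1024):
        print(f"{side}x{side}: {attention_scores_mib(side, side):.0f} MiB")
```

Under these assumptions, doubling the side length from 512 to 1024 pixels quadruples the number of latent positions but multiplies this one buffer by sixteen (from 256 MiB to 4096 MiB), which is why large images can fail even though the model weights have not changed.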

Strategies to mitigate OutOfMemoryError

When facing OutOfMemoryError while running Stable Diffusion, there are several strategies that can help:

1. Reduce Image Resolution: Lowering the output resolution is an effective way to reduce memory requirements. However, it also reduces the detail of the result. A common compromise is to generate at a lower resolution and upscale the image afterwards.

2. Reduce Batch Size: Generate one image at a time instead of several per batch. The same number of images can still be produced by running more batches in sequence, at the cost of some throughput.

3. Use Half Precision and Memory-Efficient Attention: Loading the model in float16 roughly halves the memory taken by weights and activations, and memory-efficient or sliced attention avoids holding the full attention matrix in memory. Many Stable Diffusion front ends expose these as settings or launch options.

4. Offload and Tile: Parts of the model that are not in use can be kept in system RAM and moved to the GPU only when needed, and the image decoder can process the image in tiles. Both approaches reduce peak memory consumption but make generation slower.

FAQs

1. What is the typical memory requirement for Stable Diffusion?

The memory requirement varies based on factors like the model version, image resolution, batch size, precision, and the attention implementation in use. It is challenging to provide an exact value as it depends on the specific configuration.

2. How can I check the GPU memory usage during computations?

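You can use the nvidia-smi command-line tool, which ships with the NVIDIA driver, to see how much memory each GPU and each process is using. From inside a PyTorch program, torch.cuda.memory_allocated() and torch.cuda.memory_reserved() report how much memory PyTorch itself has allocated and reserved, and torch.cuda.memory_summary() prints a more detailed breakdown.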

3. Are there any CUDA libraries available to aid with memory management?

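PyTorch already manages GPU memory through its own caching allocator, so most users never need to call CUDA memory functions directly. For developers writing their own CUDA C++ code, NVIDIA libraries such as Thrust and CUB provide containers and allocator utilities that simplify handling device memory.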

4. Can I increase the GPU memory to avoid OutOfMemoryError?

The GPU memory is fixed and cannot be increased. However, some GPUs offer different variants with larger memory capacities. Upgrading to a GPU with more memory can help mitigate OutOfMemoryError in certain cases.
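Regarding question 2 above, GPU memory use can also be polled from a script by calling nvidia-smi and parsing its CSV output. The sketch below is a minimal example under a few assumptions: the helper names are hypothetical, nvidia-smi is expected on the PATH (an empty list is returned otherwise), and every reported value is assumed to be a plain number of MiB.

```python
import shutil
import subprocess
from typing import List, Tuple


def parse_memory_csv(output: str) -> List[Tuple[int, int]]:
    """Parse 'used, total' lines (in MiB) into a list of integer pairs."""
    pairs = []
    for line in output.strip().splitlines():
        used, total = (int(field.strip()) for field in line.split(","))
        pairs.append((used, total))
    return pairs


def query_gpu_memory() -> List[Tuple[int, int]]:
    """Return (used_mib, total_mib) per GPU, or [] if nvidia-smi is absent."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi",
         "--query-gpu=memory.used,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_memory_csv(result.stdout)


if __name__ == "__main__":
    for index, (used, total) in enumerate(query_gpu_memory()):
        print(f"GPU {index}: {used} / {total} MiB used")
```

Note that nvidia-smi reports memory held by every process on the GPU, including memory that PyTorch has cached but is not using, so its figures are usually higher than what torch.cuda.memory_allocated() reports.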

Conclusion

OutOfMemoryError is a common challenge when running Stable Diffusion on NVIDIA GPUs. Understanding the factors that drive memory use, and applying the strategies described above to reduce peak memory consumption, can help mitigate this issue. It is crucial to strike a balance between memory constraints and the desired quality and speed of the results.

Whisper CUDA Out Of Memory

Introduction:

Whisper is an automatic speech recognition model released by OpenAI. It is typically run through PyTorch on an NVIDIA GPU using CUDA, NVIDIA's parallel computing platform, and it comes in several sizes, from small models that need little memory to large ones that need considerably more. Users sometimes encounter a “CUDA out of memory” error when loading or running Whisper. In this section, we will delve into this issue, exploring its causes and potential solutions, and provide some frequently asked questions to help users better understand and troubleshoot the problem.

Understanding Whisper CUDA Out of Memory:

1. What causes the Out of Memory error?

The Out of Memory error occurs when the GPU lacks the necessary memory to load the model or perform a computation. Whisper itself does not impose a memory limit; the limit is the memory physically available on the GPU, minus whatever other processes are using. The error most commonly happens when a large Whisper model is loaded on a GPU with limited memory, or when several audio segments are processed in one batch.

2. How does memory allocation work when running Whisper?

Whisper's weights and intermediate results are stored as PyTorch tensors in the GPU's global memory. PyTorch requests this memory from CUDA through a caching allocator, which keeps freed blocks for reuse instead of returning them to the driver immediately. As a result, tools such as nvidia-smi can show more memory in use than the model's tensors currently occupy.

3. Are there any other factors that can contribute to the error?

Apart from the size of the model, several other factors can affect whether an allocation succeeds. These include the memory requirements of other processes running on the same GPU, fragmentation of GPU memory, and models or tensors from earlier runs that are still referenced in the same Python session.

Solutions to Whisper CUDA Out of Memory:

1. Reduce memory usage:

Since memory is the primary concern, reducing the memory consumption can alleviate the Out of Memory error. Here are a few strategies to achieve this:

– Use a smaller Whisper model. Smaller models need substantially less memory, at some cost in transcription accuracy.

– Run the model in half precision (float16) where the GPU supports it, and use a smaller batch size if you are transcribing several segments at once.

– Wrap inference in torch.no_grad() if you call the model directly, so that no activations are kept for a backward pass.

2. Optimize memory transfers:

Efficient data transfers between the CPU and GPU mainly improve speed rather than reduce memory use, but they make it practical to keep only the data currently being processed on the GPU. Here are some techniques to consider:

– Utilize pinned memory, which enables faster transfers between the host (CPU) and device (GPU).

– Employ asynchronous memory copies to overlap data transfers with computations.

– Make use of CUDA streams so that transfers and computations can run concurrently.

3. Check for memory leaks:

Memory leaks occur when allocated memory is not properly deallocated after use, resulting in a gradual loss of memory resources. In Python code, the usual cause is a lingering reference to a model or tensor that is no longer needed. For custom CUDA code, tools like CUDA-MEMCHECK (superseded by NVIDIA Compute Sanitizer in recent CUDA releases) can help identify and rectify leaks promptly.

4. Adjust GPU memory allocation:

PyTorch allocates GPU memory dynamically as tensors are created. Some aspects of this behaviour can be adjusted:

– Call torch.cuda.empty_cache() after deleting large tensors or models to return cached, unused memory to the driver, so that other processes can use it.

– Tune the caching allocator through the PYTORCH_CUDA_ALLOC_CONF environment variable, for example to reduce fragmentation.
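As an illustration of the first strategy (reducing memory usage by choosing a smaller model), the sketch below picks the largest Whisper model whose approximate memory requirement fits in the memory currently free. The VRAM figures are approximate values of the kind listed in the openai-whisper README and should be treated as assumptions to verify for the version you use; the function name and the safety margin are hypothetical.

```python
from typing import Optional

# Approximate VRAM needs in GiB, ordered from smallest to largest model.
# Assumed figures: verify against the README of your installed version.
APPROX_VRAM_GIB = [
    ("tiny", 1.0),
    ("base", 1.0),
    ("small", 2.0),
    ("medium", 5.0),
    ("large", 10.0),
]


def pick_whisper_model(free_gib: float,
                       margin_gib: float = 0.5) -> Optional[str]:
    """Return the largest model that fits in free_gib, or None if none fit.

    margin_gib is a hypothetical safety margin left for audio features
    and decoding buffers.
    """
    usable = free_gib - margin_gib
    best = None
    for name, needed in APPROX_VRAM_GIB:
        if needed <= usable:
            best = name  # later entries are larger, so keep the last fit
    return best


if __name__ == "__main__":
    for free in (0.8, 4.0, 12.0):
        print(f"{free} GiB free -> {pick_whisper_model(free)}")
```

With PyTorch, the free memory could be obtained from torch.cuda.mem_get_info(), which returns the free and total bytes on the current device, and the chosen name passed to whisper.load_model().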

Frequently Asked Questions (FAQs):

Q1. Can I increase the GPU memory physically to resolve Out of Memory errors?

Unfortunately, it is not possible to increase the physical memory of a GPU, as it is fixed hardware. However, optimizing memory usage and employing memory management techniques can mitigate the Out of Memory issue.

Q2. Should I upgrade to a higher-end GPU to overcome Out of Memory errors?

While upgrading to a GPU with more memory can potentially alleviate some Out of Memory issues, it is not a foolproof solution. Optimizing memory consumption and utilizing memory management techniques remain crucial regardless of the GPU’s memory size.

Q3. Is there a way to estimate the memory consumption of a specific computation or model beforehand?

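A rough estimate can be made from the number of model parameters and the precision used, since each parameter takes 4 bytes in float32 and 2 bytes in float16. For a measured figure, run a short test and read torch.cuda.max_memory_allocated(), which reports the peak memory PyTorch has allocated. NVIDIA's profiling tools, such as Nsight Compute, give more detailed insight into individual GPU kernels for developers who need it.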

Q4. How can I check the available memory on my GPU during runtime?

The CUDA API provides the cudaMemGetInfo function to query the free and total memory on a GPU, and PyTorch exposes the same information through torch.cuda.mem_get_info(). This can help users monitor memory usage and identify potential bottlenecks.

Q5. Is there a way to manually free GPU memory without restarting the application?

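Yes. In CUDA C or C++ code, the “cudaFree” function in the CUDA API explicitly deallocates memory on the GPU. In PyTorch, memory is freed when the last reference to a tensor or model is removed (for example with del), and torch.cuda.empty_cache() then returns the cached memory to the driver. By ensuring proper memory deallocation within the program, developers can avoid memory leaks and optimize resource utilization.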

Conclusion:

CUDA out-of-memory errors when running Whisper can be mitigated by understanding how GPU memory is allocated, choosing a model size that fits the GPU, and employing memory management techniques. By reducing memory consumption, freeing memory that is no longer needed, and monitoring memory use, users can improve the stability and performance of their Whisper workloads. With these optimizations in place, overcoming Out of Memory issues with Whisper becomes a manageable task.

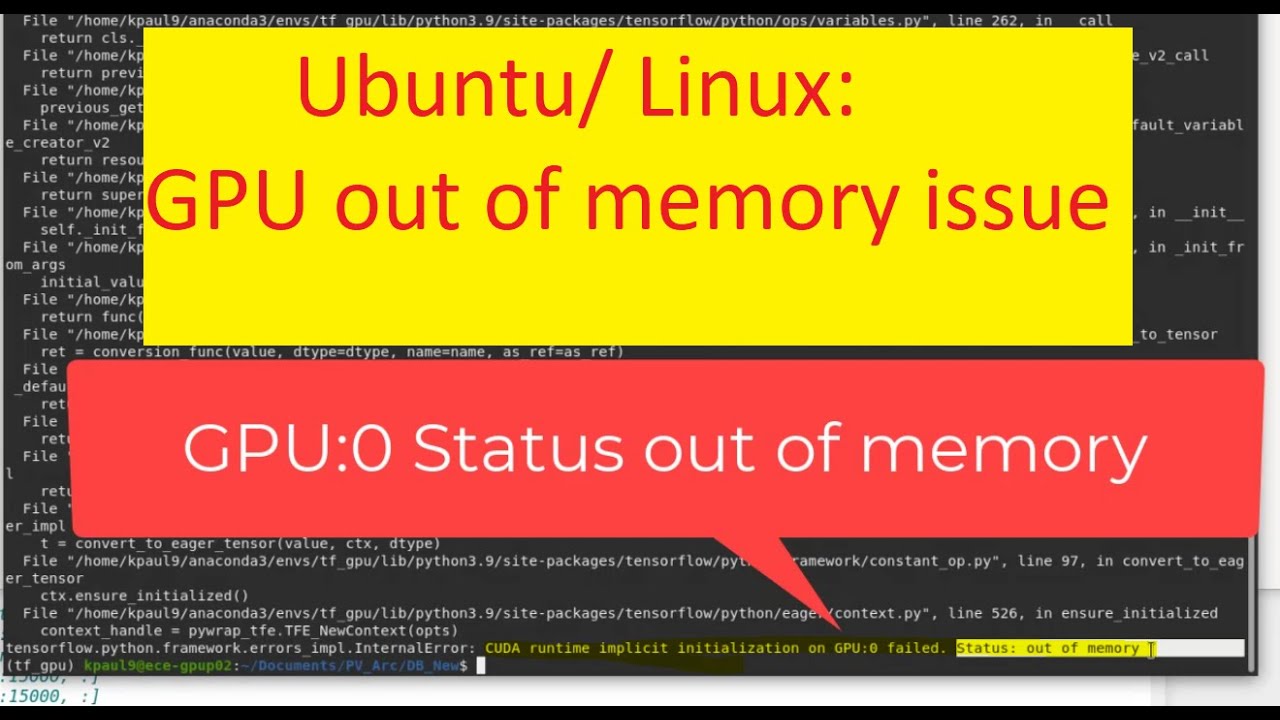

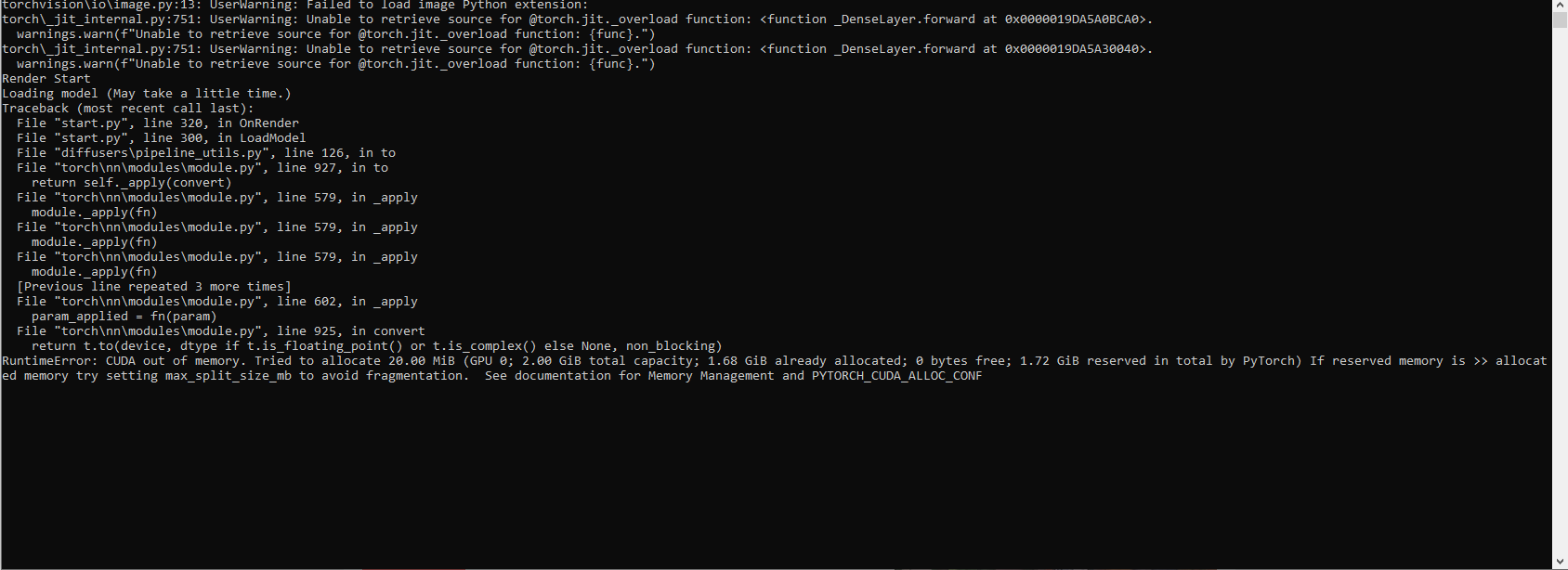
