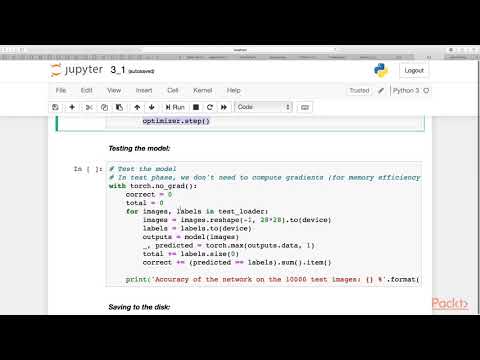

With Torch No Grad

What is Torch No Grad?

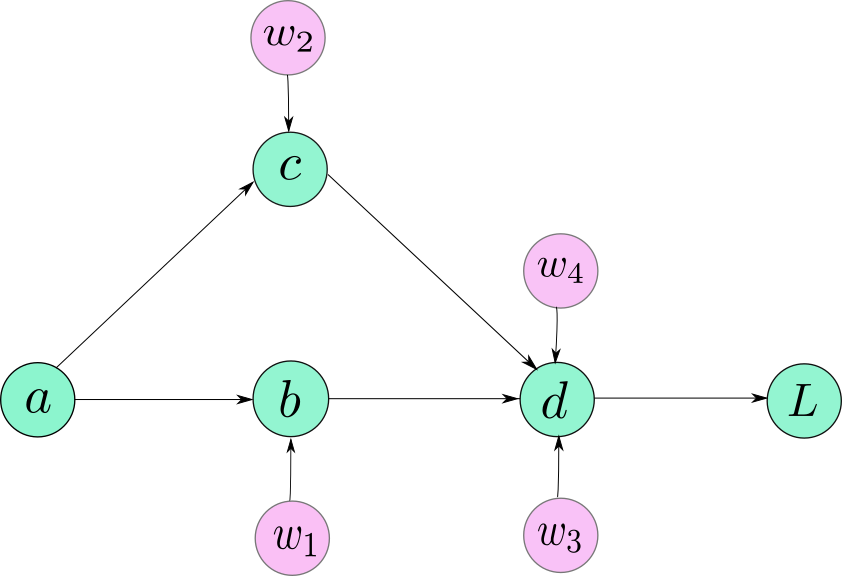

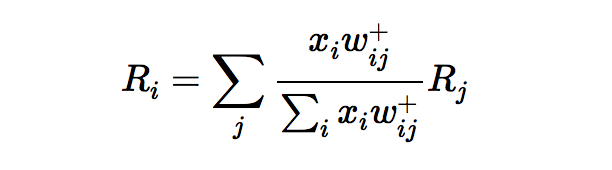

Torch No Grad is a PyTorch feature that temporarily disables gradient tracking. It is available as the torch.no_grad() context manager, or as the @torch.no_grad() decorator applied to a function. Inside the context, the autograd engine does not record operations into the computational graph, so results have requires_grad=False even when their inputs require gradients. This saves the memory that would otherwise hold intermediate values for the backward pass, along with some computation.
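As a minimal sketch of the two forms mentioned above, the following assumes a small tensor `x` and a helper function `double`, both made up for illustration:

```python
import torch

x = torch.ones(3, requires_grad=True)

# Context-manager form: results computed inside are not tracked by autograd.
with torch.no_grad():
    y = x * 2

# Decorator form: the whole function body runs with gradient tracking disabled.
@torch.no_grad()
def double(t):
    return t * 2

z = double(x)
w = x * 2  # outside the context, tracking is active again

print(y.requires_grad, z.requires_grad, w.requires_grad)  # False False True
```

Only `w` stays connected to `x` in the autograd graph, so only `w` could be used to compute gradients with respect to `x`.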

Why is Torch No Grad Important?

There are various reasons why Torch No Grad is important in deep learning workflows. Firstly, during model evaluation, gradients are not necessary since the model is not being trained. By disabling gradient computation, unnecessary computational overhead can be avoided, resulting in faster inference times.

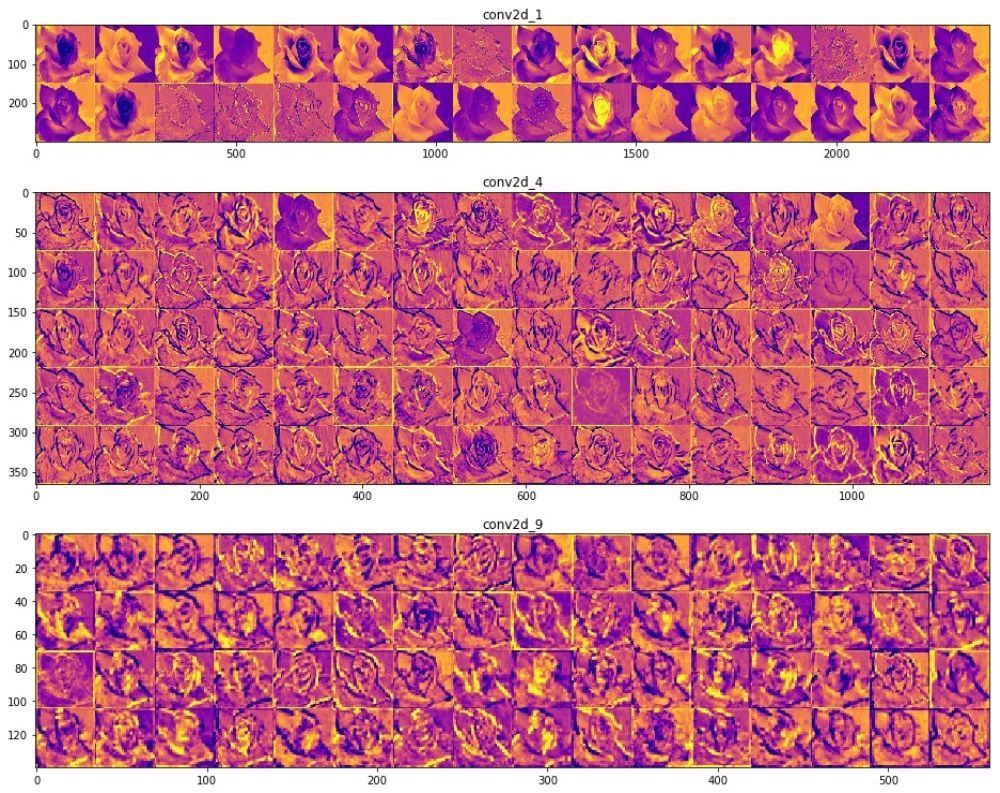

Additionally, when working with pre-trained models, it is common to freeze the weights of certain layers so that training does not modify them. Freezing itself is usually done by setting requires_grad=False on the parameters of those layers. Torch No Grad complements this: running the forward pass of the frozen layers inside the context means no graph is built through them, so no gradients reach them during backpropagation.
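One common way to freeze layers is to set `requires_grad` to `False` on their parameters and pass only the remaining parameters to the optimizer. The sketch below uses two small `nn.Linear` layers as hypothetical stand-ins for a pre-trained backbone and a new task head; the names, sizes, and loss are assumptions for illustration only.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical stand-ins for a pre-trained backbone and a new task head.
backbone = nn.Linear(8, 4)
head = nn.Linear(4, 2)

# Freeze the backbone: autograd will not compute gradients for its parameters.
for p in backbone.parameters():
    p.requires_grad_(False)

# Only the trainable parameters are handed to the optimizer.
optimizer = torch.optim.SGD(head.parameters(), lr=0.1)

backbone_before = backbone.weight.detach().clone()
head_before = head.weight.detach().clone()

x = torch.randn(16, 8)
loss = head(backbone(x)).pow(2).mean()
optimizer.zero_grad()
loss.backward()
optimizer.step()

backbone_unchanged = torch.equal(backbone.weight.detach(), backbone_before)
head_changed = not torch.equal(head.weight.detach(), head_before)
print(backbone_unchanged, head_changed)
```

After the step, the frozen backbone weights are identical to their starting values and `backbone.weight.grad` is still `None`, while the head has been updated.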

Moreover, Torch No Grad is also crucial in scenarios where memory consumption needs to be minimized. By disabling gradient calculations, memory resources can be efficiently managed, thereby allowing for larger models or batch sizes to be processed within the available memory constraints.

How Does Torch No Grad Work?

Torch No Grad works by creating a context in which gradient calculation and updates are disabled. Any operations performed within this context are not tracked by the autograd engine, and as a result, the computational graph is not built. This leads to reduced memory consumption and improved computational efficiency.
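The effect on the computational graph can be observed directly. This is a small sketch, assuming an arbitrary tensor `x`, that checks the gradient mode with `torch.is_grad_enabled()` and inspects the `grad_fn` attribute of the results:

```python
import torch

x = torch.randn(4, requires_grad=True)

with torch.no_grad():
    inside_enabled = torch.is_grad_enabled()  # gradient mode inside the context
    untracked = (x * 3).sum()

outside_enabled = torch.is_grad_enabled()     # gradient mode after leaving it
tracked = (x * 3).sum()

print(inside_enabled, outside_enabled)        # False True
print(untracked.grad_fn)                      # None: no graph was recorded
print(tracked.grad_fn is not None)            # True: this result can be backpropagated
```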

Benefits of Using Torch No Grad

Using Torch No Grad provides several benefits to deep learning practitioners. Firstly, it can improve inference speed and, more noticeably, memory use, because autograd does no bookkeeping for a backward pass that will never run.

Moreover, Torch No Grad supports fine-tuning and transfer learning with frozen layers: wrapping the forward pass of a frozen, pre-trained portion of the network in the context keeps its weights out of backpropagation entirely. This is particularly useful when working with models that have been trained on large datasets, saving both time and computational resources.

Additionally, Torch No Grad aids in reducing memory consumption, as it eliminates the need to store intermediate values required for gradient calculations. This can be particularly beneficial when dealing with limited memory resources or when running inference with large-scale models.

Drawbacks and Limitations of Using Torch No Grad

While Torch No Grad offers several advantages, it also has certain drawbacks and limitations. The main one is that results computed inside the context are not connected to a computational graph, so gradients cannot be computed through them. Any forward pass whose loss will be backpropagated must therefore run outside the Torch No Grad context. Parameter updates are a different matter: optimizer steps and hand-written in-place updates do not need gradient tracking and are commonly executed with it disabled.

Moreover, since gradient calculation is disabled, any forward pass executed within the Torch No Grad context loses the ability to backpropagate gradients. This can limit the application of Torch No Grad in scenarios where both forward and backward computations are required simultaneously, such as in certain adversarial training techniques.
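The loss of backpropagation can be demonstrated with a short sketch. It assumes a toy parameter `w` and a toy loss; calling `backward()` on a result computed inside the context raises a `RuntimeError`, while the same computation outside the context works:

```python
import torch

w = torch.randn(3, requires_grad=True)

with torch.no_grad():
    loss_untracked = (w ** 2).sum()

try:
    loss_untracked.backward()      # fails: the result has no grad_fn
    error_message = None
except RuntimeError as exc:
    error_message = str(exc)

print(error_message is not None)   # True

# Recomputing outside the context gives a result that can be backpropagated.
loss_tracked = (w ** 2).sum()
loss_tracked.backward()
print(torch.allclose(w.grad, 2 * w.detach()))  # True: d/dw of sum(w^2) is 2w
```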

Applications of Torch No Grad in Deep Learning

Torch No Grad finds applications in various deep learning scenarios. It is commonly used during model evaluation, where gradient computation is unnecessary. By leveraging Torch No Grad, model evaluation can be performed more efficiently.

Another prominent application of Torch No Grad is in transfer learning and fine-tuning. The frozen, pre-trained part of the network can be run inside Torch No Grad so that its original weights are retained, while only a limited portion of the network, run outside the context, is modified during training.
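A rough sketch of this transfer-learning pattern follows. The `backbone` and `head` modules, the data, and the loss are all hypothetical placeholders; the point is only that features computed inside `torch.no_grad()` carry no graph, so backpropagation stops at the head.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical pre-trained feature extractor and a new trainable head.
backbone = nn.Sequential(nn.Linear(8, 4), nn.ReLU())
head = nn.Linear(4, 2)
optimizer = torch.optim.SGD(head.parameters(), lr=0.1)

x = torch.randn(16, 8)
target = torch.randn(16, 2)

backbone.eval()
with torch.no_grad():
    features = backbone(x)          # no graph is built through the backbone

# The head runs outside the context, so its parameters receive gradients.
loss = nn.functional.mse_loss(head(features), target)
optimizer.zero_grad()
loss.backward()
optimizer.step()

print(features.requires_grad)           # False
print(backbone[0].weight.grad is None)  # True: nothing flowed into the backbone
print(head.weight.grad is not None)     # True
```

Compared with setting `requires_grad=False` on the backbone parameters, this variant also avoids storing the backbone activations for a backward pass, at the cost of requiring that the frozen part comes first in the network.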

Comparison of Torch No Grad with Other Gradient Tape Libraries

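Torch No Grad is sometimes compared with TensorFlow’s tf.GradientTape, but the two work in opposite directions. PyTorch records operations by default and torch.no_grad() switches recording off, whereas TensorFlow in eager mode records only inside a tf.GradientTape block. The closer TensorFlow counterparts are running code outside any tape, tape.stop_recording(), or tf.stop_gradient. In both frameworks, skipping the recording reduces computation and memory use during forward passes.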

However, Torch No Grad has the advantage of being more accessible and user-friendly due to the simplicity of the torch.no_grad() context manager. Additionally, PyTorch’s dynamic computational graph allows for greater flexibility in disabling gradient computation within specific sections of the model, whereas other frameworks may have stricter constraints.

Tips and Tricks for Efficiently Using Torch No Grad

To maximize the benefits and efficiency of using Torch No Grad, consider the following tips and tricks:

1. Prioritize using Torch No Grad during model evaluation to avoid unnecessary gradient calculations.

2. Freeze layers that are not being updated during training by setting requires_grad=False on their parameters, and run purely frozen sub-networks inside Torch No Grad, to prevent undesired weight modifications.

3. Place the minimum required code inside the Torch No Grad context to ensure that only the necessary computations are skipped.

4. Run the forward pass and loss computation used for training outside the Torch No Grad context. Hand-written in-place parameter updates, by contrast, belong inside it.
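For hand-written update rules (as opposed to calling `optimizer.step()`), a pattern often seen in PyTorch code is to compute the loss and gradients with tracking enabled and then apply the in-place parameter update inside `torch.no_grad()`, so that the update itself is not recorded by autograd. The following is a minimal sketch with a toy parameter `w`, a toy loss, and an assumed learning rate:

```python
import torch

torch.manual_seed(0)
w = torch.randn(3, requires_grad=True)
lr = 0.1  # assumed learning rate, for illustration only

# Forward and backward pass run with gradient tracking enabled.
loss = (w ** 2).sum()
loss.backward()

expected = w.detach() - lr * w.grad  # what one plain SGD step should produce

# The in-place update runs with tracking disabled.
with torch.no_grad():
    w -= lr * w.grad
    w.grad.zero_()

print(torch.allclose(w.detach(), expected))  # True
print(w.requires_grad)                       # True: w is still a trainable leaf
```

Without the context, the in-place subtraction on a leaf tensor that requires gradients would raise an error.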

Common Errors and Debugging Techniques in Torch No Grad

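Users sometimes encounter errors when using Torch No Grad. A common one is calling backward() on a loss that was computed inside the context, which raises a RuntimeError because the result has no grad_fn. Another is disabling gradients globally, for example by calling torch.set_grad_enabled(False) as a plain function, and forgetting to re-enable them, so that gradients stay disabled in subsequent computations. The with-statement form does not have this problem, since it restores the previous mode automatically on exit.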

Debugging techniques for such errors involve carefully reviewing the code and ensuring that the appropriate enter and exit points of the Torch No Grad context are correctly placed. Additionally, thorough testing and verification of the model’s behavior with enabled and disabled gradients can help identify any unexpected behaviors.
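When checking where gradients are enabled, `torch.is_grad_enabled()` is a convenient probe. The sketch below, built around a made-up `risky_inference` function, shows that the `with` form restores the previous mode even when an exception is raised inside it, and that `torch.set_grad_enabled` can toggle tracking from a boolean flag:

```python
import torch

def risky_inference():
    # Hypothetical function that fails while gradients are disabled.
    with torch.no_grad():
        raise ValueError("simulated failure inside the context")

try:
    risky_inference()
except ValueError:
    pass

restored = torch.is_grad_enabled()
print(restored)  # True: the with-block restored the previous mode

# set_grad_enabled takes a boolean, which helps when sharing code
# between training and evaluation paths.
x = torch.ones(2, requires_grad=True)
results = {}
for training in (True, False):
    with torch.set_grad_enabled(training):
        results[training] = (x * 2).sum().requires_grad

print(results)  # {True: True, False: False}
```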

Future Developments and Updates for Torch No Grad

As PyTorch continues to evolve, it is expected that Torch No Grad will also see further developments and updates. These may include improvements to memory management, performance optimizations, and increased flexibility in gradient manipulation within the Torch No Grad context. It is essential to stay updated with the PyTorch documentation and community discussions for upcoming features and enhancements related to Torch No Grad.

In conclusion, Torch No Grad plays a significant role in deep learning workflows by efficiently disabling gradient calculation and updates. Its importance lies in improving computational efficiency, aiding model evaluation, freezing specific layers during fine-tuning, and managing memory consumption. While it offers multiple benefits, it also has limitations and considerations when applying it. By adopting appropriate tips, addressing common errors, and staying informed about future developments, practitioners can effectively leverage Torch No Grad for their deep learning tasks.


Torch Grad

In the fast-paced world of technology, innovation is constantly driving us towards a more convenient and efficient lifestyle. Among the many advancements that have transformed our homes, smart lighting has emerged as a game-changer. With the evolution of smart homes, lighting systems have evolved too, providing us with a wide range of options to enhance our living spaces. One such innovation that has been creating buzz in the market is Torch Grad.

Torch Grad is a cutting-edge lighting solution that brings unique features and unparalleled efficiency to smart homes. With its sleek design, easy installation process, and advanced control capabilities, Torch Grad has quickly become a favorite choice for tech-savvy homeowners around the world.

Design:

Torch Grad boasts a minimalistic and elegant design, flawlessly blending into any living space. Its sleek cylindrical shape exudes a sense of modernity, making it a perfect fit for contemporary interior designs. The compact size of the Torch Grad allows for easy installation in any area of the house, be it the living room, bedroom, kitchen, or even the bathroom. Its versatility and aesthetic appeal make it a sought-after lighting solution for both homeowners and interior designers.

Installation:

Gone are the days of complex and time-consuming installation processes. Torch Grad has revolutionized the way we install lighting systems. With its user-friendly design, Torch Grad can be easily mounted on any surface, whether it be a wall, ceiling, or table. The installation process is as simple as the twist of a screw, making it suitable for both professional electricians and DIY enthusiasts. Additionally, Torch Grad comes with a comprehensive installation guide, ensuring a hassle-free installation experience.

Control Capabilities:

One of the key features that sets Torch Grad apart from other lighting systems is its advanced control capabilities. With the integration of smart technology, Torch Grad can be controlled remotely through smartphones or voice-controlled virtual assistants like Amazon Alexa and Google Assistant. This allows homeowners to adjust the intensity, color, and even create personalized ambiance settings with a simple command or through the Torch Grad mobile app. Whether you desire a cozy warm light for a relaxing evening or a vibrant atmosphere for a party, Torch Grad offers endless possibilities at your fingertips.

Efficiency and Energy Savings:

Torch Grad prides itself on its energy-efficient lighting technology, which not only contributes to a greener planet but also helps homeowners save on their electricity bills. Equipped with LED bulbs, Torch Grad consumes significantly less energy compared to traditional lighting systems. The adjustable intensity feature ensures that only the necessary amount of light is emitted, optimizing energy consumption while maintaining an ideal atmosphere. Additionally, Torch Grad also includes a motion-sensor feature, automatically switching on and off when someone enters or leaves a room. This smart automation eliminates the need to manually turn on and off lights, further conserving energy.

Frequently Asked Questions:

1. Can Torch Grad be connected with existing smart home systems?

Yes, Torch Grad is compatible with a wide range of smart home systems. It can seamlessly integrate with popular platforms such as Apple HomeKit, Samsung SmartThings, and more.

2. What is the lifespan of Torch Grad’s LED bulbs?

Torch Grad’s LED bulbs are designed to last up to 25,000 hours, guaranteeing a long-lasting lighting solution for your home.

3. Is Torch Grad suitable for outdoor installation?

No, Torch Grad is designed specifically for indoor use and is not recommended for outdoor installation.

4. Can multiple Torch Grad units be synced together?

Yes, multiple Torch Grad units can be synchronized to create a unified lighting experience. This allows homeowners to control multiple lights simultaneously through a single command or mobile app.

5. Can I schedule Torch Grad to turn on and off at specific times?

Yes, Torch Grad’s mobile app allows you to set schedules for your lights, ensuring they turn on and off automatically according to your desired timing.

As the demand for smart home technologies continues to rise, Torch Grad has positioned itself as a groundbreaking lighting solution. Its sleek design, easy installation process, advanced control capabilities, and energy efficiency make it a must-have for any modern household. With Torch Grad, transforming your home into a smart living space is just a flicker away.

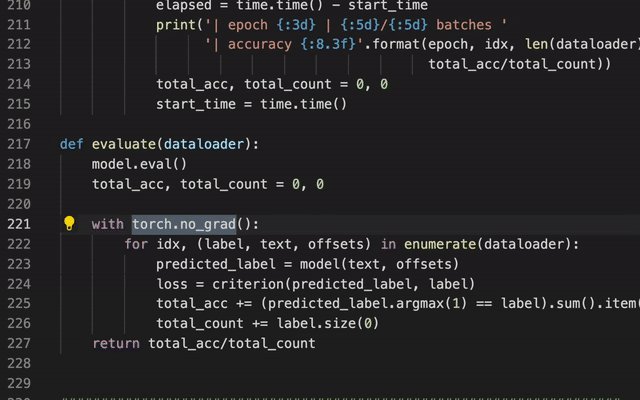

Model Eval And Torch No_Grad

Introduction:

In the rapidly evolving field of machine learning, model evaluation plays a pivotal role in determining the performance and effectiveness of a trained model. One of the popular frameworks that have gained significant attention is PyTorch, owing to its simplicity, flexibility, and extensive support for deep learning. In this article, we will delve into the methods and techniques used for model evaluation and explore the concept of torch.no_grad, an essential component in PyTorch to optimize memory usage during evaluation.

Model Evaluation:

Model evaluation refers to the process of assessing the performance of a trained machine learning model on unseen data. Evaluating a model involves understanding its abilities to generalize well to new instances and provide accurate predictions. The primary focus of model evaluation lies in quantitatively measuring metrics such as accuracy, precision, recall, and F1-score, among others, according to the nature of the problem at hand.
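For a binary classification problem, these metrics can be computed from the counts of true and false positives and negatives. The sketch below is plain Python with made-up labels and predictions; the function name `binary_metrics` is an assumption for illustration.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for labels in {0, 1}."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Made-up example: 3 true positives, 3 true negatives, 1 false positive, 1 false negative.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = binary_metrics(y_true, y_pred)
print(metrics)  # every metric is 0.75 for this example
```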

One of the standard practices in model evaluation is splitting the dataset into training and testing subsets. The model is trained on the training set and evaluated on the testing set. This separation ensures that the model is not biased towards the data it has already seen and provides a reliable estimation of its performance on unseen data.

Cross-validation is another technique widely used for model evaluation. It involves dividing the dataset into multiple subsets and iteratively using each subset for testing while the remaining subsets are used for training the model. Cross-validation helps in obtaining a clearer picture of the model’s performance and can be particularly useful when the dataset is limited.
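The splitting step can be sketched in a few lines of plain Python. The helper `k_fold_indices` below is a hypothetical illustration that uses contiguous folds without shuffling; in practice the indices are usually shuffled first, and library implementations are generally preferable.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, test_idx) pairs for k contiguous folds."""
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, test_idx
        start += size

folds = list(k_fold_indices(10, 3))
for train_idx, test_idx in folds:
    print(len(train_idx), test_idx)
```

Each sample appears in exactly one test fold, so every sample is used for testing once and for training in the remaining rounds.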

Furthermore, model evaluation can use variants such as stratified k-fold cross-validation, in which each fold preserves the class proportions of the full dataset. These techniques aim to improve the robustness of the performance evaluation process and assist in model selection and fine-tuning.

torch.no_grad in PyTorch:

PyTorch, a widely used deep learning framework, implements a handy feature called torch.no_grad, which temporarily disables gradient computations during model evaluation. By using this feature, unnecessary memory consumption and computation time associated with gradient computation are significantly reduced, resulting in faster evaluation without sacrificing accuracy.

Calling model.eval() sets the training flag of the model and all of its submodules to False, which changes the behavior of layers such as Dropout and Batch Normalization. It does not affect autograd: PyTorch still tracks the operations that are applied and builds the graph needed for backpropagation. Only when torch.no_grad is active is that tracking skipped, resulting in memory optimization and faster computation.
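The difference can be checked with a tiny model. This sketch assumes a single `nn.Linear` layer as the model; it shows that evaluation mode alone leaves autograd tracking on, and that tracking stops only inside `torch.no_grad()`:

```python
import torch
from torch import nn

model = nn.Linear(4, 2)
x = torch.randn(3, 4)

model.eval()
out_eval_only = model(x)           # still tracked: the parameters require grad

with torch.no_grad():
    out_no_grad = model(x)         # not tracked

print(model.training)               # False
print(out_eval_only.requires_grad)  # True
print(out_no_grad.requires_grad)    # False
```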

Using torch.no_grad effectively requires being cautious about its usage. It is best applied when performing inference on the trained model, i.e., when no more training or updates of the model parameters are required. By judiciously utilizing torch.no_grad, unnecessary overhead from memory, computation, and storage can be mitigated, leading to improved efficiency.

FAQs:

1. Why is model evaluation important?

Model evaluation helps to gauge the performance and effectiveness of a trained model, allowing researchers and practitioners to make informed decisions. It helps in assessing the generalization capabilities of the model and provides insights to refine and optimize the model further.

2. Can model evaluation be solely based on accuracy?

While accuracy is an essential metric, it might not always be sufficient to evaluate the performance of a model, especially in scenarios where the dataset is imbalanced or when different classes have varying importance. Depending on the problem at hand, metrics like precision, recall, F1-score, or area under the ROC curve (AUC-ROC) may be more appropriate for evaluation.

3. How does cross-validation improve model evaluation?

Cross-validation helps mitigate the biases that may arise from a single train-test split. By using multiple subsets of the dataset for training and testing, cross-validation provides a more representative estimate of the model’s performance and helps in choosing the best hyperparameters.

4. When should torch.no_grad be used?

torch.no_grad is best applied during model evaluation when no further updates to the model parameters are necessary. It helps in optimizing memory usage and computation time by disabling the storage and computation of gradients. However, it should not wrap the forward pass and loss computation during training, as the loss would then have no graph to backpropagate through and the model could not learn.

Conclusion:

Model evaluation is an essential component of the machine learning pipeline, facilitating the assessment of a trained model’s performance on unseen instances. PyTorch, with its torch.no_grad feature, brings memory optimization and faster computation during model evaluation. By understanding and utilizing these techniques effectively, researchers and practitioners can evaluate models more efficiently, making informed decisions and achieving better results in various deep learning applications.

Model Eval Vs Torch No_Grad

Introduction:

In the realm of deep learning and neural networks, the evaluation of models plays a crucial role in assessing their performance and effectiveness. In the framework of PyTorch, a popular deep learning library, there are two commonly used approaches for model evaluation: model.eval() and torch.no_grad(). While they may seem similar at first glance, there are fundamental differences between these two methods. In this article, we will explore these differences, understand their usage, and delve into scenarios where each approach is best suited.

Model Evaluation with model.eval():

The model.eval() method switches the model to evaluation mode. This affects layers such as Dropout and Batch Normalization, which behave differently during evaluation than during training: Dropout is turned off, and Batch Normalization switches from per-batch statistics to its stored running statistics.

During the training phase, Dropout layers randomly drop certain neuron activations, which helps in preventing overfitting. However, during evaluation, it is undesirable to drop any neuron activations randomly. By using model.eval(), these Dropout layers are deactivated, thus allowing the model to generate consistent output during evaluation.
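A small sketch with a standalone `nn.Dropout` layer illustrates the two modes. The input of ones and the drop probability of 0.5 are arbitrary choices for illustration:

```python
import torch
from torch import nn

torch.manual_seed(0)
drop = nn.Dropout(p=0.5)
x = torch.ones(1000)

drop.train()
y_train = drop(x)   # some entries zeroed, survivors scaled by 1 / (1 - p) = 2

drop.eval()
y_eval = drop(x)    # evaluation mode: the layer acts as the identity

print(sorted(set(y_train.tolist())))  # [0.0, 2.0]
print(torch.equal(y_eval, x))         # True
```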

Similarly, Batch Normalization layers behave differently during the training and evaluation phases. During training, they normalize each batch using the mean and variance of that batch, and update running estimates of these statistics. During evaluation, normalizing with per-batch statistics would make the output for one sample depend on the other samples in the batch. The model.eval() method makes Batch Normalization layers normalize with the stored running estimates instead.
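In PyTorch’s `nn.BatchNorm1d`, training mode normalizes with the statistics of the current batch and updates running estimates, while evaluation mode normalizes with those stored estimates and leaves them unchanged. The sketch below uses a made-up batch with mean around 10 to make the difference visible:

```python
import torch
from torch import nn

torch.manual_seed(0)
bn = nn.BatchNorm1d(3)
x = torch.randn(32, 3) * 5 + 10    # made-up batch, mean ~10 and std ~5

bn.train()
y_train = bn(x)                    # normalized with this batch's statistics
running_mean_after_train = bn.running_mean.clone()

bn.eval()
y_eval = bn(x)                     # normalized with the running estimates
running_mean_after_eval = bn.running_mean.clone()

print(y_train.mean(dim=0))         # close to zero for every feature
print(y_eval.mean(dim=0))          # not zero: running estimates differ from batch stats
print(torch.equal(running_mean_after_train, running_mean_after_eval))  # True
```

After a single training batch the running estimates are still far from the batch statistics, which is why the two outputs differ so clearly here; after many batches they would typically be much closer.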

Overall, the model.eval() method adjusts the behavior of certain layers within the model, ensuring that the evaluation output is deterministic and does not depend on which other samples share the batch.

Model Evaluation with torch.no_grad():

On the other hand, torch.no_grad() is a context manager that temporarily disables the gradient calculation mechanism in PyTorch. It is useful during the evaluation phase when gradients are not needed and calculating them would consume unnecessary computational resources.

By using torch.no_grad(), we remove the tracking of operations on tensors, including intermediate values, which conserves memory and speeds up the evaluation process. This is particularly advantageous when evaluating large models or when executing evaluations on memory-constrained devices.

Unlike model.eval(), torch.no_grad() does not influence the behavior of Dropout or Batch Normalization layers. Its primary purpose is to optimize the evaluation process by disabling gradient tracking, rather than altering the behavior of the model itself.
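This independence can be checked with a sketch in which a Dropout layer is deliberately left in training mode while gradients are disabled. The layer and input are arbitrary illustrations:

```python
import torch
from torch import nn

torch.manual_seed(0)
drop = nn.Dropout(p=0.5)
x = torch.ones(1000)

drop.train()                       # module left in training mode on purpose
with torch.no_grad():
    y = drop(x)

zeros_present = bool((y == 0).any())
print(zeros_present)               # True: dropout is still active under no_grad
print(drop.training)               # True: no_grad did not change the module mode
```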

Choosing the Right Approach:

Now that we understand the differences between model.eval() and torch.no_grad(), it’s important to identify the situations in which each approach is best suited.

Use model.eval() when you need to evaluate the model’s performance while adjusting Dropout and Batch Normalization layers. This is especially important when the model employs these layers for regularization during training. By using model.eval() during evaluation, you ensure that the model’s behavior is consistent, providing accurate evaluation metrics.

On the other hand, employ torch.no_grad() when you solely need to evaluate the model’s performance and gradients are not required for further processing. This method is particularly useful when computational resources are limited or when evaluating large models.

Finally, bear in mind that both approaches can be used together. In fact, it is common practice to wrap the evaluation code with both model.eval() and torch.no_grad() to ensure the model produces consistent output while also optimizing resource usage.
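A typical evaluation routine combining the two might look like the sketch below. The model architecture, the random data, and the `evaluate` helper are assumptions made for illustration, not a prescribed implementation.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical classifier and random data split into small batches.
model = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Dropout(0.5), nn.Linear(8, 3))
inputs = torch.randn(20, 4)
labels = torch.randint(0, 3, (20,))
batches = [(inputs[i:i + 5], labels[i:i + 5]) for i in range(0, 20, 5)]

def evaluate(model, batches):
    was_training = model.training
    model.eval()                   # consistent Dropout / BatchNorm behavior
    correct = total = 0
    with torch.no_grad():          # no graph, lower memory use
        for xb, yb in batches:
            preds = model(xb).argmax(dim=1)
            correct += (preds == yb).sum().item()
            total += yb.numel()
    model.train(was_training)      # restore the mode the caller had set
    return correct / total

accuracy = evaluate(model, batches)
print(accuracy)
```

Restoring the previous mode at the end is a design choice that keeps the helper safe to call in the middle of a training loop.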

FAQs:

Q: Can I use model.eval() during model training?

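A: Generally no. model.eval() turns off Dropout and stops Batch Normalization from using batch statistics, so training in this mode changes the regularization and normalization the model was designed around. Call model.train() before resuming training.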

Q: Should I always use torch.no_grad() during evaluation?

A: In most cases, yes. Whenever gradients are not needed, torch.no_grad() reduces resource usage. It does not replace model.eval(), however: if the model contains Dropout or Batch Normalization layers, model.eval() should be used in conjunction with torch.no_grad().

Q: Is disabling gradient calculation sufficient to speed up the evaluation process?

A: Disabling gradient calculation using torch.no_grad() helps speed up the evaluation process by reducing memory consumption. However, to achieve maximum efficiency, it is recommended to use both model.eval() and torch.no_grad() together.

Conclusion:

Model evaluation is a critical step in assessing the performance of deep learning models. PyTorch provides two approaches, model.eval() and torch.no_grad(), each serving different functions. While model.eval() adjusts the behavior of Dropout and Batch Normalization layers during evaluation, torch.no_grad() primarily focuses on optimizing resource consumption by disabling gradient calculation. By understanding the differences between these approaches, you can make informed decisions when evaluating your models and choose the most suitable approach based on your requirements.

Images related to the topic with torch no grad

Found 13 images related to with torch no grad theme

![PyTorch] How to check the model state is Pytorch] How To Check The Model State Is](https://i0.wp.com/clay-atlas.com/wp-content/uploads/2019/08/pytorch_logo.png?fit=611%2C428&ssl=1)

Article link: with torch no grad.

Learn more about the topic with torch no grad.

- no_grad — PyTorch 2.0 documentation

- What is the use of torch.no_grad in pytorch?

- What is the purpose of with torch.no_grad(): – Stack Overflow

- What does “with torch no_grad” do in PyTorch? – Tutorialspoint

- What is “with torch no_grad” in PyTorch? – GeeksforGeeks

- How to use the torch.no_grad function in torch – Snyk

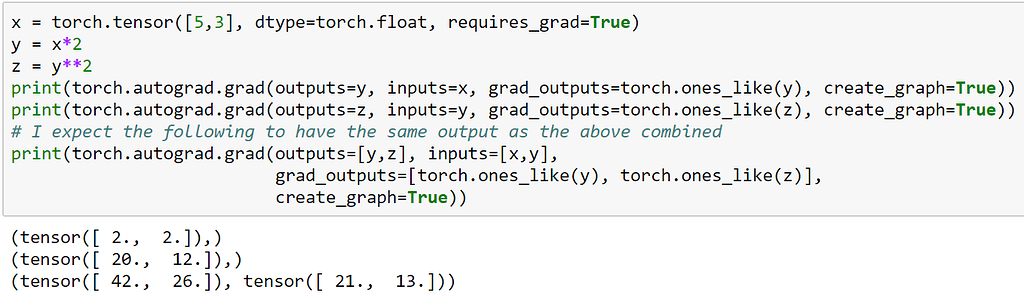

- Difference between “tensor.detach()” vs “with torch.no_grad()”

- What does torch no grad do – ProjectPro

See more: https://nhanvietluanvan.com/luat-hoc