SHAP Summary Plot Example

A SHAP summary plot is a visualization built on SHAP (SHapley Additive exPlanations) values, and it is a powerful tool in data analysis and storytelling. It provides a way to understand and interpret the impact of different features on the outcome of a model. By visualizing the relationship between features and model predictions, SHAP summary plots offer valuable insights into the patterns the model has learned from the data.

II. Understanding the Importance of Plot

Plot is an essential component of any narrative, whether it’s a novel, a movie, or even a data analysis report. It serves as the backbone of the story, guiding the reader or viewer through a sequence of events and revealing the motivations and conflicts faced by the characters. In the context of data analysis, plot helps to make sense of complex information and draw meaningful conclusions.

III. Elements of a Plot Summary

A plot summary typically includes key elements such as exposition, rising action, climax, falling action, and resolution. These elements provide a framework for presenting the sequence of events in a cohesive and engaging manner. In data analysis, the plot summary focuses on presenting the main features or variables that influence the outcome of a model.

IV. Step-by-Step Guide to Creating a SHAP Summary Plot

1. Identify the features: Start by identifying the relevant features or variables that you want to analyze and their corresponding values or categories.

2. Train the model: Use the selected features and the available data to train a predictive model. This model will be used to make predictions based on the given features.

3. Generate SHAP values: Calculate the SHAP values for each feature, which represent the contributions of the features to the model’s predictions. These values help to understand the individual and combined impacts of the features.

4. Sort the features: Sort the features by their mean absolute SHAP value across the dataset, in descending order, to identify the most influential features.

5. Create the SHAP summary plot: Plot the SHAP values on the x-axis and list the sorted features on the y-axis, drawing one point per instance for each feature. This will visualize the relationships between the features and the predicted outcome.
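The steps above can be sketched in plain Python. This is a minimal illustration, not the `shap` library's implementation: the data, the coefficients, and the names `predict`, `importance`, and `ranking` are hypothetical, and the SHAP values use the closed form that holds for a linear model with independent features, `coef * (x - mean)`. In practice, `shap.summary_plot` from the `shap` library draws the plot itself.

```python
# Step 1: hypothetical toy data, one row per house; columns match feature_names.
feature_names = ["area", "bedrooms", "location"]
X = [
    [50.0, 1.0, 3.0],
    [80.0, 2.0, 5.0],
    [120.0, 3.0, 4.0],
    [200.0, 4.0, 9.0],
]

# Step 2 stand-in: a fixed linear model (coefficients are made up, not fitted).
coefs = [2.0, 10.0, 15.0]
intercept = 20.0


def predict(row):
    """Linear prediction: intercept + sum(coef * value)."""
    return intercept + sum(c * v for c, v in zip(coefs, row))


# Step 3: closed-form SHAP values for a linear model with independent
# features: coef_j * (x_j - mean_j).
n_rows = len(X)
n_features = len(feature_names)
means = [sum(row[j] for row in X) / n_rows for j in range(n_features)]
base_value = predict(means)  # for a linear model this is the average prediction
shap_values = [[c * (v - m) for c, v, m in zip(coefs, row, means)] for row in X]

# Step 4: rank features by mean absolute SHAP value, largest first.
importance = {
    name: sum(abs(row[j]) for row in shap_values) / n_rows
    for j, name in enumerate(feature_names)
}
ranking = sorted(importance, key=importance.get, reverse=True)

# Step 5: a text stand-in for the plot, one line per feature in ranked order.
for name in ranking:
    j = feature_names.index(name)
    column = [row[j] for row in shap_values]
    print(f"{name:>9}  mean|SHAP|={importance[name]:7.3f}  SHAP values={column}")
```

Each printed line corresponds to one row of a summary plot: the listed SHAP values are the horizontal positions of that feature's points.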

V. Example of a SHAP Summary Plot

Let’s consider a hypothetical example of predicting house prices based on various features such as area, number of bedrooms, and location. After training a predictive model, we generate the SHAP values for each feature.

In the SHAP summary plot, we can see how strongly each feature affects the predicted house prices. For example, if houses with a large area consistently receive high positive SHAP values for the area feature, the model has learned that larger houses tend to have higher prices.

VI. Analyzing the Structure and Development of the Example Plot

By analyzing the SHAP summary plot, we can identify the key features that contribute the most to the predicted outcome. In our example, we may find that the area and location have the largest mean absolute SHAP values, indicating their significant impact on house prices. This analysis allows us to gain insights into the factors driving the predictions.

VII. Enhancing the Plot with Effective SHAP Summary Techniques

To enhance the effectiveness of SHAP summary plots, several techniques can be employed. These include color-coding the points by feature value, adding additional dimensions (such as time or categories), and incorporating interactive elements for better exploration.

VIII. Exploring Different Types of SHAP Plots

SHAP plots can take different forms depending on the nature of the data and the modeling approach. Some common types are the bar plot, the decision plot, and the summary plot, available in the `shap` Python library as `shap.plots.bar`, `shap.decision_plot`, and `shap.summary_plot`. Each type offers unique insights into the model’s behavior and the relationship between features and predictions.

IX. Benefits of Using SHAP Summary Plots in Storytelling and Analysis

SHAP summary plots offer several benefits in storytelling and data analysis:

1. Transparent interpretation: SHAP summary plots provide a clear, interpretable view of how different features contribute to the outcome. This transparency leads to more informed decision-making.

2. Model validation: By visualizing the impact of features, SHAP summary plots can help check that the model relies on sensible features and can expose potential biases or limitations. They do not measure predictive accuracy, which still requires evaluation on held-out data.

3. Insights and discovery: SHAP summary plots enable researchers and analysts to uncover patterns and relationships that the model has learned from the data, leading to new insights and discoveries.

4. Communication and presentation: SHAP summary plots offer a visually engaging way to communicate complex information to a non-technical audience, making presentations and reports more accessible and engaging.

In conclusion, SHAP summary plots provide a powerful tool for understanding, interpreting, and communicating the relationships between features and predictions in data analysis and storytelling. By incorporating these plots into our analysis, we can gain deeper insights and make more informed decisions.

What Does Shap Summary Plot Show?

SHAP (SHapley Additive exPlanations) is a popular interpretability tool in machine learning and AI models. It helps users gain insights into how different features contribute to the predictions made by a specific model. SHAP summary plots provide a comprehensive visual representation of these contributions, enabling researchers, data scientists, and domain experts to better understand and explain the model’s behavior. In this article, we will explore SHAP summary plots in detail and discuss their significance in model interpretability.

Understanding SHAP Values

Before diving into SHAP summary plots, let’s briefly discuss SHAP values. SHAP assigns a value to each feature in a given instance, representing that feature’s contribution to the model’s prediction relative to a baseline, usually the average prediction over a background dataset. Positive SHAP values indicate that the feature pushes the prediction above the baseline, while negative values push it below. For each instance, the SHAP values sum to the difference between the prediction and the baseline. These values allow us to comprehend the relative importance of different features and their contributions to the overall prediction.
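To make the definition concrete, here is a minimal sketch that computes exact Shapley values for one instance of a tiny model by enumerating every coalition of features. The model `toy_model`, the instance `x`, and the `baseline` are hypothetical, and treating an absent feature as "set to its baseline value" is one common convention assumed here, not the only one. Enumeration is only feasible for a handful of features; the `shap` library relies on faster model-specific or approximate algorithms.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values of model(x) relative to model(baseline).

    A feature outside the coalition takes its baseline value
    (an assumption made for this illustration).
    """
    n = len(x)

    def value(coalition):
        mixed = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(mixed)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size: |S|! (n-|S|-1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


def toy_model(row):
    """Hypothetical price model with one interaction term."""
    area, bedrooms, distance_km = row
    return 3.0 * area + 10.0 * bedrooms - 4.0 * distance_km + 0.2 * area * bedrooms


x = [150.0, 3.0, 10.0]        # the instance being explained
baseline = [100.0, 2.0, 5.0]  # reference instance standing in for "average"

phi = shapley_values(toy_model, x, baseline)
for name, contribution in zip(["area", "bedrooms", "distance_km"], phi):
    print(f"{name:>12}: {contribution:+.2f}")
print("sum of SHAP values:", round(sum(phi), 6))
print("prediction - baseline:", round(toy_model(x) - toy_model(baseline), 6))
```

The last two printed numbers agree, which is the additivity property: the contributions account for the whole gap between the prediction and the baseline. The negative value for `distance_km` shows a feature pushing the prediction down.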

What Are SHAP Summary Plots?

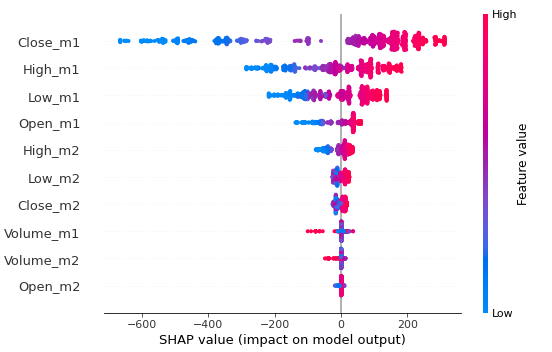

SHAP summary plots help us visualize the distribution of SHAP values for each feature across the entire dataset. They provide a summarized view of how different features influence the predictions made by the model. The SHAP values run along the x-axis, and the features are listed on the y-axis, sorted by importance. Each point in the plot represents a feature’s SHAP value for a specific instance in the dataset, and its color typically encodes the value of that feature, from low to high.

Interpreting SHAP Summary Plots

SHAP summary plots are extremely useful in understanding the global interpretability of the model. When examining these plots, we can identify the following key pieces of information:

1. Feature Importance: The features are sorted based on their importance, with the most important feature at the top. The importance is determined by the sum of absolute SHAP values across the entire dataset. This hierarchy provides a quick overview of which features have the most significant impact on the model’s predictions.

2. Range and Distribution of SHAP Values: The spread and distribution of the SHAP values for each feature indicate the range of contributions they make to the predictions. Features with a wide distribution of SHAP values suggest a varying impact on predictions, while those with a more concentrated distribution have a more consistent and predictable effect.

3. Impact Direction: The position of each feature’s SHAP value on the x-axis indicates whether it has a positive or negative impact on the prediction. Values on the right side of zero indicate positive contributions, while those on the left indicate negative contributions.

4. Interaction Effects: SHAP summary plots give only indirect hints of interactions between features. If instances with similar feature values (similar colors) are spread across a wide range of SHAP values, the feature’s effect depends on something else, possibly another feature. The vertical proximity of two features reflects similar importance, not interaction. To examine interactions directly, use SHAP dependence plots or SHAP interaction values.
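The importance, range, and direction readings described above can also be computed directly from a matrix of SHAP values. The sketch below uses made-up numbers and a hypothetical helper, `summarize`; the sign of the covariance between feature value and SHAP value serves as a rough stand-in for what the color gradient shows visually.

```python
def summarize(feature_column, shap_column):
    """Summarize one feature's row of a summary plot."""
    n = len(shap_column)
    mean_abs = sum(abs(v) for v in shap_column) / n
    mean_f = sum(feature_column) / n
    mean_s = sum(shap_column) / n
    # Positive covariance: higher feature values go with higher SHAP values.
    cov = sum((f - mean_f) * (s - mean_s)
              for f, s in zip(feature_column, shap_column)) / n
    direction = "+" if cov > 0 else "-" if cov < 0 else "0"
    return {
        "mean_abs": mean_abs,                               # importance
        "range": (min(shap_column), max(shap_column)),      # spread on the x-axis
        "direction": direction,                             # trend with feature value
    }


# Hypothetical feature values and SHAP values for five houses.
features = {
    "area": [50.0, 80.0, 120.0, 200.0, 90.0],
    "age": [5.0, 40.0, 10.0, 2.0, 60.0],
}
shap_by_feature = {
    "area": [-30.0, -10.0, 5.0, 40.0, -5.0],
    "age": [4.0, -6.0, 2.0, 5.0, -9.0],
}

summary = {name: summarize(features[name], shap_by_feature[name]) for name in features}
order = sorted(summary, key=lambda name: summary[name]["mean_abs"], reverse=True)
for name in order:
    print(name, summary[name])
```

A "+" direction corresponds to red points sitting to the right of zero, and a "-" direction to red points sitting to the left.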

FAQs about SHAP Summary Plots:

Q: How are features sorted on the y-axis in SHAP summary plots?

A: Features are typically sorted based on their importance, which is determined by the sum of absolute SHAP values across the dataset. The most important feature appears at the top of the plot.

Q: Can SHAP summary plots be used with any machine learning model?

A: Yes, SHAP summary plots can be applied to any model that has SHAP values calculated. They are particularly helpful for models with black-box architectures, such as gradient boosting machines and neural networks.

Q: What is the advantage of using SHAP summary plots over other interpretability techniques?

A: SHAP summary plots provide a global view that combines what a feature importance chart and a set of partial dependence plots show separately: the ranking of features, the direction of their effects, and the distribution of their contributions across the dataset.

Q: Can SHAP summary plots reveal feature interactions?

A: Only indirectly. A wide spread of SHAP values among instances with similar feature values hints that the feature’s effect depends on other features, but the plot does not show which ones. Vertical proximity of features in the plot reflects similar importance, not interaction. SHAP dependence plots and SHAP interaction values are better suited to this question.

Q: How can SHAP summary plots be used practically?

A: SHAP summary plots enable users to gain a deeper understanding of their AI models. They can help detect problematic features, identify influential factors, and provide insights for feature engineering or model improvements. Additionally, they allow domain experts to validate the model’s behavior against their knowledge.

In conclusion, SHAP summary plots are valuable tools that help unravel the inner workings of machine learning and AI models. Through these plots, we can comprehend the importance, range, and direction of feature contributions to predictions. These visual representations facilitate model interpretation, enable feature selection, and aid in the overall improvement of AI systems.

What Color Is The Shap Plot Summary?

SHAP (SHapley Additive exPlanations) is a framework for interpreting the output of any machine learning model, focusing on the contribution of each feature to the model’s prediction. It enables us to explore the importance of various features and their impact on the final prediction. While SHAP explanations are widely used, the role of color in SHAP plots is often misread.

In a SHAP summary plot, color signifies the value of the feature for each instance, not the sign of its contribution. By default, red indicates a high feature value and blue a low one, with intermediate values shaded in between. The direction and magnitude of the contribution are given by the point’s position on the x-axis. Reading color and position together shows, for example, whether high values of a feature raise or lower the prediction. In SHAP plots that explain a single prediction, such as force plots, color does encode direction: red for features that push the prediction higher and blue for features that push it lower.

Understanding the color mapping can greatly enhance interpretability, making SHAP plots a powerful tool for users to comprehend complex machine learning models. With colors assigned to SHAP values, analysts and domain experts can identify the dominant factors driving a particular prediction. By visually examining these colors, patterns and insights hidden within the vast number of features can be quickly extracted and understood.
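As a rough illustration of such a color mapping, the sketch below follows the convention of the `shap` library’s summary plot, where color tracks the feature value (blue for low, red for high). The function `feature_value_to_rgb` and the pure-blue and pure-red endpoints are assumptions for this example; the library uses its own palette.

```python
def feature_value_to_rgb(value, low, high,
                         low_rgb=(0, 0, 255), high_rgb=(255, 0, 0)):
    """Map a feature value to an RGB color between low_rgb and high_rgb."""
    if high == low:
        t = 0.5  # constant feature: use the midpoint color
    else:
        t = (value - low) / (high - low)
        t = min(1.0, max(0.0, t))  # clip values outside [low, high]
    # Linear interpolation per channel, rounded to an integer 0..255.
    return tuple(round(a + t * (b - a)) for a, b in zip(low_rgb, high_rgb))


# Hypothetical "area" values for four houses.
areas = [50.0, 80.0, 120.0, 200.0]
colors = [feature_value_to_rgb(a, min(areas), max(areas)) for a in areas]
for area, rgb in zip(areas, colors):
    print(area, rgb)
```

The smallest house maps to the blue end and the largest to the red end; on a real plot, a legend or color bar is still needed to make the scale explicit.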

FAQs:

Q: How does color help in interpreting SHAP plots?

A: Color adds a second dimension to the plot. Position on the x-axis gives the magnitude and direction of each contribution, and color shows the feature value behind it. Reading the two together reveals patterns, such as high values of a feature consistently raising the prediction, leading to a better understanding of the model’s behavior.

Q: How are positive and negative contributions represented through color?

A: In SHAP summary plots, the sign of a contribution is shown by position: points to the right of zero are positive contributions and points to the left are negative. Color shows the feature value, with red for high values and blue for low values. In plots that explain a single prediction, such as force plots, positive contributions are drawn in red and negative contributions in blue.

Q: What advantages does the color mapping bring to SHAP plots?

A: The color mapping in SHAP plots provides several advantages. First, it simplifies the interpretability process by allowing users to quickly identify the dominant factors affecting a prediction. Second, color helps to visualize complex relationships between features and predictions, making the analysis more intuitive. Finally, color provides a compact representation of feature contributions, conveying information at a glance. By leveraging the color mapping, domain experts can efficiently interpret and communicate the output of machine learning models.

Q: Are there any limitations to using color in SHAP plots?

A: While colors in SHAP plots serve as a helpful interpretability aid, it is essential to keep in mind that color alone does not provide complete context. Understanding the underlying algorithm, the dataset, and the domain knowledge is equally important. Moreover, individuals’ perception of colors may vary, highlighting the necessity of clear legends and annotations on the plots. When interpreting SHAP plots, it is crucial to consider color as one aspect of the comprehensive analysis.

Q: Can the color mapping be customized in SHAP plots?

A: Yes, the color mapping in SHAP plots can be customized according to specific requirements or personal preferences. The SHAP library provides users with flexibility in choosing their color palette. This customization option enables users to align the colors to their brand guidelines or to accommodate any color-related requirements.

In conclusion, the color mapping in SHAP plots plays a crucial role in interpreting the output of machine learning models. Mapping color to feature values lets users see at a glance how each feature’s value relates to its contribution, offering a simplified way to explore and interpret complex models. By leveraging color in SHAP plots, users can quickly identify dominant factors, extract patterns, and gain a deeper understanding of the machine learning model’s behavior. While colors should not be the sole basis of interpretation, they provide a valuable tool alongside other analytical techniques and domain knowledge.

SHAP Summary Plot Interpretation

Introduction:

Graphical representation is an essential tool for interpreting data and conveying information succinctly. In recent years, the SHAP summary plot has gained popularity as a powerful method for visualizing and comprehending the impact of feature variables on the predictions made by machine learning models. By employing an intuitive graphical depiction, the SHAP summary plot offers a deeper understanding of the factors influencing these predictions. In this article, we will explore SHAP summary plot interpretation, providing a comprehensive guide to understanding and harnessing its analytical potential.

Understanding SHAP Summary Plots:

SHAP values are based on Shapley values from cooperative game theory and quantify the contribution of each feature variable to the predicted outcome of a machine learning model. The SHAP summary plot combines these values with the feature variables’ values to create a compact visual summary of their effect on predictions.

The x-axis of the SHAP summary plot shows the SHAP value, that is, the impact on the model’s output. The vertical axis lists the feature variables, ordered by importance. Each data point or observation appears as one dot in each feature’s row, and the dot’s color indicates the feature value: warmer colors for higher values and cooler colors for lower values. The horizontal position of a dot denotes the impact of that feature on the model’s prediction for that observation, with the vertical line at zero corresponding to the baseline, the average prediction.

Furthermore, the horizontal spread of the dots in each row reflects the magnitude of the variable’s impact on the prediction. Dots far from zero indicate stronger influence, while dots clustered near zero imply reduced relevance. Where many observations share similar SHAP values, the dots pile up vertically and show the density. SHAP values are expressed in the units of the model’s output, so all features share one scale and can be compared directly within the plot; comparisons across plots for different models or outputs require more care.

Interpreting SHAP Summary Plots:

To interpret a SHAP summary plot, start by focusing on the features at the top of the plot. These have the greatest impact on the model’s predictions. Understanding the positive or negative direction of a dot’s shift from the baseline is equally important: a positive shift signifies a higher predicted value (for a classifier, an increase in likelihood), while a negative shift represents the opposite.

When a feature’s row extends far from zero, consider both the direction and the color of the dots. Red dots far to the right mean that high values of the feature strongly raise the prediction, while red dots far to the left mean that high values strongly lower it. Additionally, comparing rows across different features allows for insights into their relative importance in determining the predicted outcome.

SHAP summary plots also offer a limited view of feature interactions. If dots of similar color, and therefore similar feature value, are spread over a wide range of SHAP values, the feature’s effect depends on something else, often the value of another feature. The summary plot alone does not identify the interacting pairs; confirming them requires a SHAP dependence plot or SHAP interaction values.

The vertical ordering of features in the SHAP summary plot reflects importance only. If variables associated with a similar domain (e.g., demographics) cluster near the top, that domain as a whole drives the predictions, but adjacency by itself does not imply that the variables are related.

FAQs:

Q: Can SHAP summary plots be used with any machine learning model?

A: Yes, SHAP summary plots can be used with various machine learning models, including gradient boosting, random forests, linear regression, etc.

Q: Can SHAP summary plots handle categorical or text features?

A: Yes, as long as the model can. Categorical features are usually encoded with methods like one-hot encoding or target encoding before modeling, and text features can be transformed into numerical representations using techniques like bag-of-words or TF-IDF. The plot then shows SHAP values for the encoded columns.

Q: What are some limitations of interpreting SHAP summary plots?

A: SHAP summary plots do not display feature interactions explicitly; each row shows one feature’s contributions, so interactions must be examined with dependence plots or SHAP interaction values. Additionally, the interpretation heavily relies on the quality and representativeness of the dataset used.

Q: How can one use SHAP summary plots to enhance model performance?

A: SHAP summary plots pinpoint influential features, allowing data scientists to engineer those features more effectively. Identifying and eliminating redundant or irrelevant features can also improve model performance.

Q: Are there Python libraries that facilitate SHAP summary plot generation?

A: Yes. The `shap` Python library generates SHAP summary plots and works with models from popular machine learning frameworks such as scikit-learn and XGBoost. XGBoost can also compute per-feature SHAP contributions itself (`pred_contribs=True` in `Booster.predict`), which can then be plotted.

Conclusion:

The SHAP summary plot offers an intuitive and accessible means of understanding feature variable impacts on machine learning model predictions. By interpreting the position, spread, and color of the dots in the plot, insightful conclusions can be drawn regarding feature importance, direction of effect, and trends. From model debugging to feature selection optimization, the SHAP summary plot serves as a powerful tool for both data scientists and stakeholders, promoting transparency and trust in machine learning systems.

SHAP Bar Plot Example

Understanding SHAP Plots:

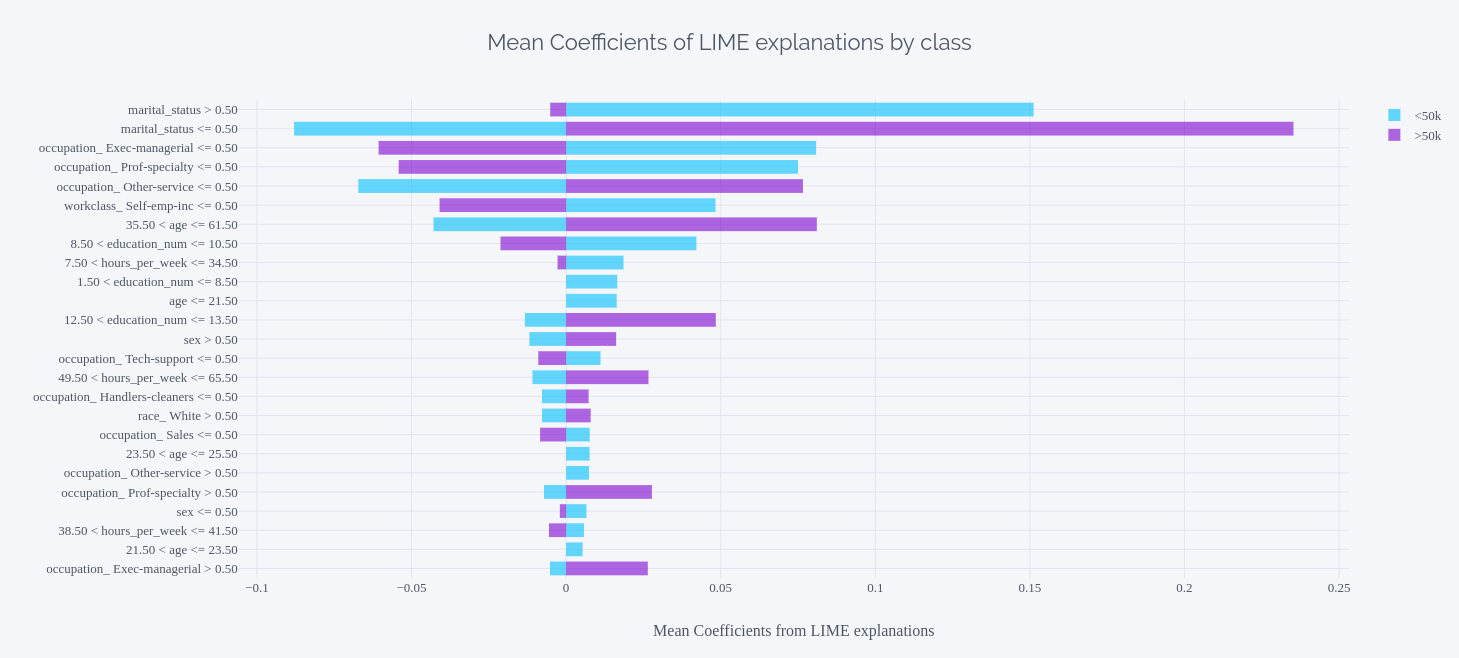

SHAP plots are built upon Shapley values, a concept borrowed from cooperative game theory. Shapley values assign a value to each feature depending on its contribution to the prediction, taking into account all possible combinations of features. These values are then used to determine the relative impact of each feature on the final outcome.

In a SHAP bar plot, the features of a model are listed on the y-axis, and the SHAP values run along the x-axis as horizontal bars. In the global version of the plot, each bar’s length is the mean absolute SHAP value of a feature across the dataset, so it shows the magnitude of the feature’s impact but not its direction. In the local version, which explains a single prediction, positive SHAP values are typically displayed in red tones, indicating a feature that pushes the prediction up, while negative values are typically shown in blue tones, indicating a feature that pushes it down.

Example: Bar Chart SHAP Plot

To illustrate the functionality of SHAP plots, let’s consider a hypothetical example involving a machine learning model trained to predict house prices. The model takes into account features such as the number of rooms, square footage, and location. A SHAP plot based on a bar chart visualization can provide valuable insights into how these specific features influence the price predictions.

In the resulting bar chart SHAP plot, we observe a substantial bar for the number of rooms feature, indicating its high impact on house prices. Because a global bar plot shows magnitude only, confirming that more rooms increase the predicted price requires a summary plot or a local bar plot. In contrast, the bar for square footage appears shorter, suggesting a relatively smaller influence on price predictions. Finally, if the location feature is one-hot encoded, it appears as multiple bars representing different categories or regions, each with varying lengths. By comparing these bars, we can identify which locations have a greater impact on house prices.
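The bar lengths in such a plot can be sketched as follows. The SHAP values, column names, and the helper `grouped_mean_abs` are hypothetical. The sketch also shows one way to collapse one-hot location columns into a single bar: because SHAP values are additive, the per-row values of the encoded columns can be summed before averaging.

```python
# Hypothetical SHAP values: one row per house, one column per model input.
columns = ["rooms", "square_footage", "location_north", "location_south"]
shap_values = [
    [12.0, 4.0, 6.0, 0.0],
    [-8.0, -2.0, 0.0, -5.0],
    [10.0, 3.0, 0.0, -4.0],
    [-14.0, -3.0, 7.0, 0.0],
]

# Which encoded columns belong to which original feature.
groups = {
    "rooms": ["rooms"],
    "square_footage": ["square_footage"],
    "location": ["location_north", "location_south"],
}


def grouped_mean_abs(shap_values, columns, groups):
    """Mean |SHAP| per original feature, summing encoded columns row by row."""
    index = {name: j for j, name in enumerate(columns)}
    result = {}
    for feature, members in groups.items():
        per_row = [sum(row[index[m]] for m in members) for row in shap_values]
        result[feature] = sum(abs(v) for v in per_row) / len(per_row)
    # Largest bar first, as in a bar plot.
    return dict(sorted(result.items(), key=lambda kv: kv[1], reverse=True))


bar_lengths = grouped_mean_abs(shap_values, columns, groups)
for feature, length in bar_lengths.items():
    print(f"{feature:>15} | {'#' * round(length * 2)} {length:.2f}")
```

Note that the bars carry no sign: the `rooms` column contains both positive and negative SHAP values, yet its bar only reports their average magnitude.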

FAQs about SHAP Plots:

Q: Can SHAP plots be used for any machine learning model?

A: Yes, SHAP plots can be utilized with any model for which SHAP values can be computed. They are particularly effective for complex models like ensemble methods or neural networks.

Q: Are SHAP plots useful only for understanding feature importance?

A: No. Bar plots summarize feature importance, but other SHAP plots, such as dependence plots, also reveal interactions between features. By analyzing the changes in SHAP values across multiple instances, we can uncover non-linear relationships and dependencies between features.

Q: Can SHAP plots handle missing or categorical data?

A: SHAP plots visualize whatever SHAP values were computed, so missing data must be handled before or by the model: either impute values (for example, with mean imputation) or use a model that supports missing values natively. For categorical variables that are one-hot encoded, SHAP plots display a separate bar for each category, and the SHAP values of these columns can be summed to obtain the contribution of the original variable.

Q: Can SHAP plots be used to explain individual predictions?

A: Absolutely. In addition to understanding feature contributions in general, SHAP plots can also be used to explain individual predictions. By examining the SHAP values corresponding to specific instances, we can unveil the factors that influenced the model’s decision for that particular prediction.

Q: Are there any limitations or considerations when using SHAP plots?

A: Like any tool, SHAP plots have certain limitations. They can become challenging to interpret and analyze with an excessive number of features. Moreover, the interpretation of SHAP values may vary depending on the model’s nature and complexity. It is important to strike a balance between the interpretability of the plot and the accuracy of the model’s predictions.

In conclusion, SHAP plots offer a powerful means of interpreting machine learning models, providing intuitive visualizations that facilitate the analysis of feature importance and its impact on predictions. With the ability to explain individual predictions and identify complex interactions, SHAP plots assist in building trust and understanding in the decision-making process of increasingly complex models. Whether it is for uncovering insights into a house price prediction model or any other application, SHAP plots are a valuable tool for data scientists and machine learning practitioners alike.

SHAP Decision Plot

In the era of machine learning and artificial intelligence, the accuracy of predictions has significantly improved. However, the complexity and “black box” nature of these models often makes it challenging to understand the reasoning behind their decisions. This lack of transparency not only limits our ability to trust the model’s predictions, but it also hampers our capability to detect biases and potential ethical issues. In recent years, the need for interpretable machine learning models has gained considerable attention, leading to the development of techniques like SHAP (SHapley Additive exPlanations) decision plots. In this article, we will explore the concept of SHAP decision plots, their benefits, and how they enhance transparency in machine learning models.

What is a SHAP Decision Plot?

A SHAP decision plot is a visualization tool that helps us understand how different features contribute to the predictions made by a machine learning model. SHAP values, which are the foundation of SHAP plots, provide a measure of the impact each feature has on the predictions. The plot draws one line per prediction, and by default a color gradient reflects the model’s output for each line, with red for higher predictions and blue for lower ones. By understanding the contributions of individual features, users can gain insights into the model’s behavior and decisions.

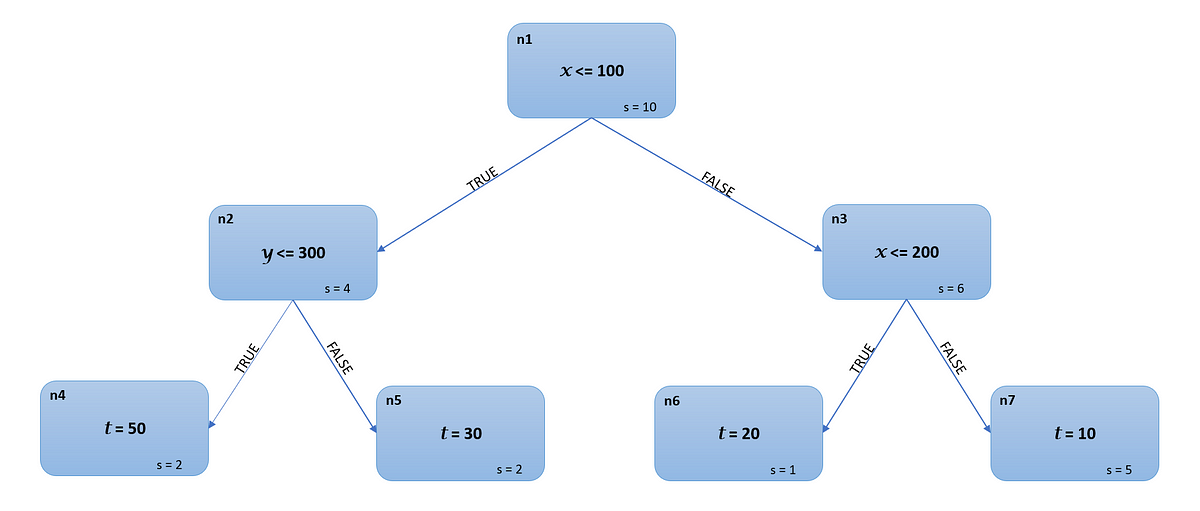

How Do SHAP Decision Plots Work?

To comprehend the workings of SHAP decision plots, we first need to understand the concept of Shapley values, which are rooted in cooperative game theory. Shapley values allocate the contributions of each feature to the overall prediction by calculating the average marginal contribution of every feature across all possible combinations.

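The plots display SHAP values for each feature in a way that allows us to interpret the impact of individual features on a prediction. The features are listed on the vertical axis, ordered by importance with the most important at the top, while the horizontal axis displays the model’s output. Each line represents one data point: it starts at the bottom at the base value (the average prediction) and moves upward, shifting left or right at each feature by that feature’s SHAP value, until it reaches the final prediction at the top. The combination of these lines presents a comprehensive illustration of the features’ impact on the model’s prediction.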
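The path that a single line traces through a decision plot is a running sum. The sketch below builds that path for one observation, starting at the base value and adding SHAP values from the least to the most important feature. The numbers and the helper `decision_path` are hypothetical, and ordering by absolute SHAP value of this one observation is a simplification of ordering by importance over all plotted observations.

```python
from itertools import accumulate


def decision_path(base_value, shap_row):
    """Cumulative model output after each feature, least important first.

    shap_row maps feature name -> SHAP value for one observation.
    """
    ordered = sorted(shap_row.items(), key=lambda item: abs(item[1]))
    running = list(accumulate((v for _, v in ordered), initial=base_value))
    # running[0] is the base value; running[k] is the output after k features.
    return [(name, total) for (name, _), total in zip(ordered, running[1:])]


# Hypothetical explanation of one house price prediction.
base_value = 340.0
shap_row = {"area": 175.0, "bedrooms": 35.0, "distance_km": -20.0}

path = decision_path(base_value, shap_row)
print("start (base value):", base_value)
for name, total in path:
    print(f"after {name:>12}: {total}")
```

Read from top to bottom, the printed lines correspond to the plot read from bottom to top; the final value is the model’s prediction for this observation.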

Benefits of SHAP Decision Plots

1. Interpretability: SHAP decision plots enhance the interpretability of complex models by providing clear insights into the contribution of each feature. This provides a deeper understanding of the model’s logic and promotes trust in its decisions.

2. Transparency: Traditional machine learning models often operate as “black boxes,” where it is difficult to comprehend how and why a particular prediction was made. SHAP decision plots offer a transparent methodology that allows us to visualize the decision-making process and identify potential biases or unfairness in the model.

3. Feature Importance: SHAP plots enable the identification of key features that drive a prediction. By understanding which features have the most substantial influence, data scientists can focus on improving those parts of the model further, leading to improved predictions.

4. Validation and Debugging: SHAP plots help validate the consistency of a machine learning model’s predictions. They allow for easy detection of potential errors or inconsistencies that may arise due to faulty feature engineering or data leakage.

5. Communicating Results: SHAP decision plots are excellent tools for communicating model results to stakeholders who may not possess technical expertise. The visual representation enables easy comprehension and fosters meaningful discussions.

FAQs about SHAP Decision Plots:

Q1. Can SHAP decision plots be applied to any machine learning model?

A1. Yes, SHAP decision plots can be used with any model that provides a prediction. They are not specific to a particular algorithm.

Q2. Do SHAP plots only provide insight into single predictions?

A2. SHAP plots can indeed provide insights into individual predictions, but they can also be aggregated to highlight general patterns within the model.

Q3. Is it possible to compare the importance of different features using SHAP decision plots?

A3. Absolutely! Features are ordered by importance along the vertical axis, and the horizontal shift of a line at each feature shows the size and direction of that feature’s contribution.

Q4. Are SHAP decision plots only beneficial for data scientists?

A4. The primary beneficiaries of SHAP decision plots are data scientists, but they can also aid stakeholders, including managers, policymakers, and even end-users in understanding and trusting the model’s predictions.

Q5. Are there any limitations or challenges when using SHAP decision plots?

A5. While SHAP decision plots are valuable, they do have their limitations. Interpreting plots can be challenging when dealing with high-dimensional data or complex models with numerous interactions between features. Additionally, they may not provide conclusive explanations for every prediction, as complex models sometimes produce results that are difficult to explain.

In conclusion, SHAP decision plots offer an innovative and transparent approach to understanding complex machine learning models. With their ability to visualize feature influences, they enhance interpretability, transparency, and trust in predictions. As the demand for explainable AI grows, SHAP decision plots are becoming indispensable tools in the toolbox of data scientists and ML practitioners alike.
