RuntimeError: CUDA error: device-side assert triggered

CUDA (Compute Unified Device Architecture) is a parallel computing platform and programming model created by NVIDIA. It lets developers use NVIDIA GPUs (Graphics Processing Units) to accelerate their applications significantly. However, when running CUDA-enabled programs, for example PyTorch training code, you may encounter the runtime error “RuntimeError: CUDA error: device-side assert triggered.” It means that an `assert` inside a GPU kernel failed, usually because an operation received invalid input such as an out-of-range index or class label. This article explains the error, its common causes, and how to fix it.

Common Causes of “RuntimeError: CUDA error: device-side assert triggered”:

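1. Memory Allocation Problems:

When using CUDA, every buffer a kernel reads or writes must be allocated on the GPU and be large enough for the indices the kernel uses. If a kernel tries to access an element past the end of an allocation, for example through an index larger than a tensor's dimension, an assertion inside the kernel can fire and trigger the device-side assert. Check that each allocation is as large as the range of indices used to access it. Simply running out of GPU memory, by contrast, produces a separate “CUDA out of memory” error, which is fixed by reducing memory usage (for example, with a smaller batch size).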

2. Invalid Kernel Arguments:

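Kernel functions are an essential part of CUDA programming, and passing them invalid arguments, such as a size or index outside the range a kernel expects, can trigger device-side assertions. Make sure that kernel arguments are correctly defined, match the expected types, and hold valid values. In PyTorch, the kernels are launched for you, so this usually means that a tensor passed to an operation contains invalid values.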

3. Errors in Memory Operations:

Improper memory operations, such as reading from or writing to an invalid memory location, can cause the device-side assert error. Be cautious about managing memory allocations, transfers, and deallocations correctly to avoid such errors.

4. Unsupported Compute Capability:

Different GPUs have different compute capabilities, and certain CUDA features are not supported on older GPUs. Make sure your GPU is compatible with the CUDA code and the library build you are running. Note that code that was not compiled for your GPU's architecture more commonly fails with a different message, such as “no kernel image is available for execution on the device”, than with a device-side assert.

5. Bug in CUDA Code:

As in any other programming language, bugs in CUDA code can lead to runtime errors. Thoroughly review your code and double-check it for logical or programming errors that might trigger the device-side assert.

6. Environmental Issues:

Sometimes, conflicts with the programming environment or incorrect configurations can also cause the device-side assert error. Ensure that you have the necessary CUDA libraries and dependencies installed, and consider recompiling your code to ensure proper integration.
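Several of the causes above come down to a kernel receiving an index outside the range it was written for. A cheap way to rule this out is to check the values on the CPU before they reach the GPU, where a failure produces a readable Python error instead of a device-side assert. The sketch below is a minimal, framework-agnostic helper; the names `find_out_of_range` and `check_indices` are hypothetical, and it assumes the values are plain Python integers (with PyTorch you could pass `tensor.flatten().tolist()`).

```python
from typing import Iterable, List, Tuple


def find_out_of_range(values: Iterable[int], size: int) -> List[Tuple[int, int]]:
    """Return (position, value) for every value outside [0, size).

    Kernels that index into a dimension of length `size` assume
    0 <= value < size; a violation is what fires the device-side assert.
    """
    return [(pos, v) for pos, v in enumerate(values) if not 0 <= v < size]


def check_indices(values: Iterable[int], size: int, what: str = "index") -> None:
    """Raise a readable ValueError on the CPU instead of an assert on the GPU."""
    bad = find_out_of_range(values, size)
    if bad:
        pos, v = bad[0]
        raise ValueError(
            f"{len(bad)} {what} value(s) outside [0, {size}); "
            f"first at position {pos}: {v}"
        )


# Token ids for an embedding table with 10 rows: valid ids are 0..9.
token_ids = [3, 7, 10, 2]
print(find_out_of_range(token_ids, 10))  # [(2, 10)]
check_indices(token_ids[:2], 10, "token id")  # passes silently
```

The same check covers class labels for a classification loss (`size` is the number of classes) and ids for an embedding layer (`size` is the number of rows). For large PyTorch tensors, a vectorized test such as `((t < 0) | (t >= size)).any()` avoids the Python loop.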

Troubleshooting and Fixing the Device-Side Assert Error:

1. Compile with `TORCH_USE_CUDA_DSA` to Enable Device-Side Assertions:

If you are using PyTorch, the error message may suggest compiling with `TORCH_USE_CUDA_DSA`. This is a build option for PyTorch itself, not for your own script: building PyTorch from source with it enabled makes the device-side assert report more detailed information, which can help you pinpoint which kernel and which assertion failed.

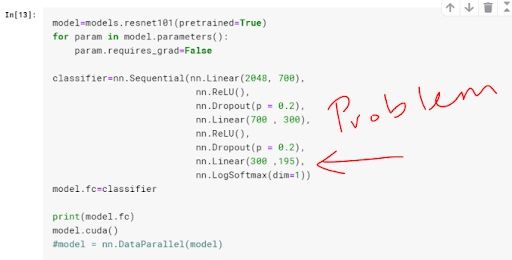

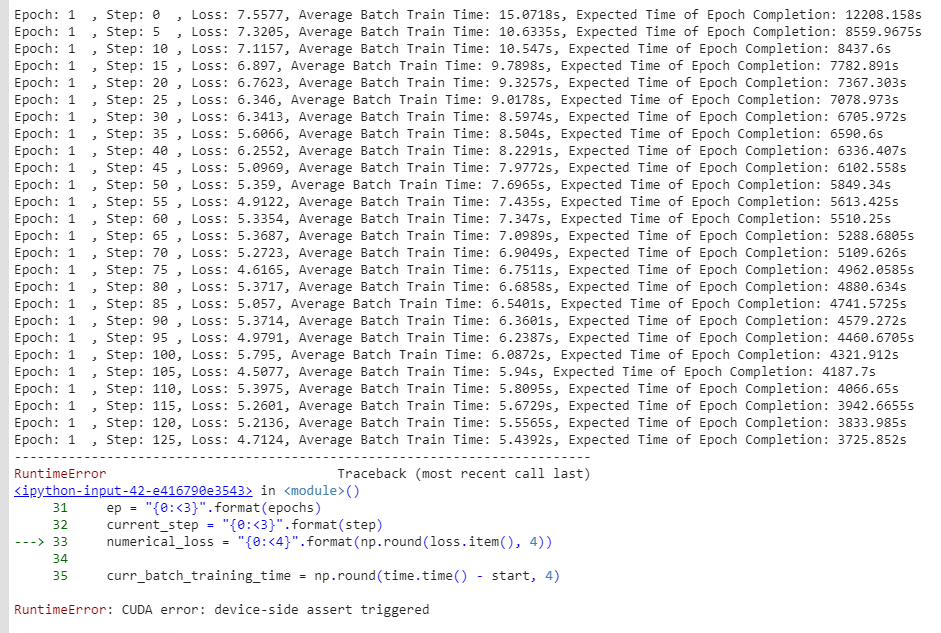

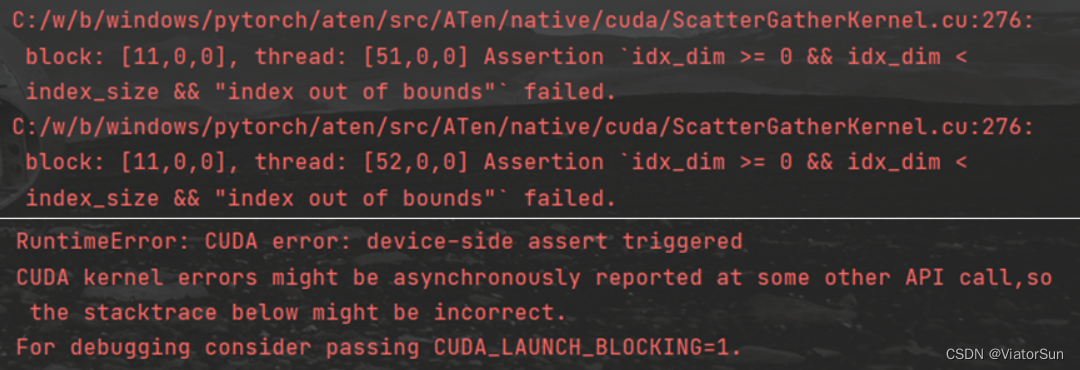

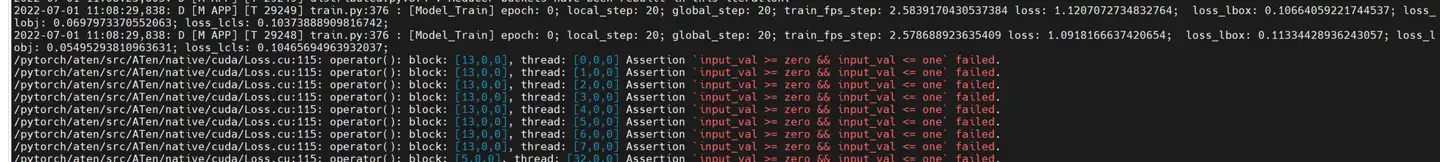

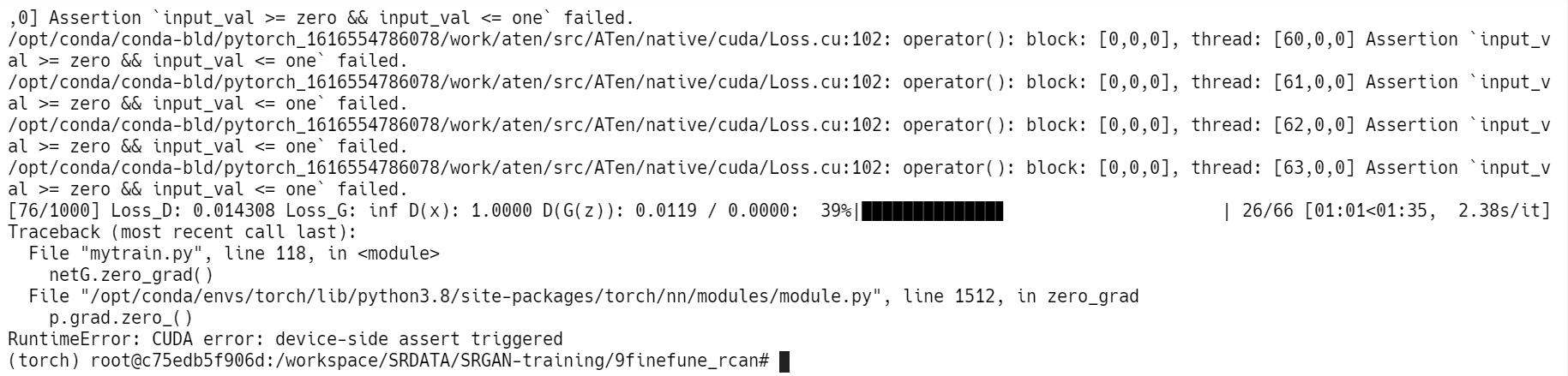

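2. Assertion `srcIndex < srcSelectDimSize` Failed:

This assertion comes from PyTorch's index-selection kernel (used, for example, by `index_select` and `nn.Embedding`) and means that an index is greater than or equal to the size of the dimension being indexed. A common example is a token id that is larger than the vocabulary size of an embedding layer. Perform proper bounds checking and confirm that every index lies between 0 and the dimension size minus one.

3. `CUDA_LAUNCH_BLOCKING=1` (Jupyter Notebook / Colab):

CUDA kernels are launched asynchronously, so the error is often raised by a later, unrelated call and the stack trace points at the wrong line. Setting the environment variable `CUDA_LAUNCH_BLOCKING` to 1 makes kernel launches synchronous, so the error is reported by the call that launched the failing kernel. The variable has to be set before CUDA is initialized: in Jupyter Notebook or Colab, set it in the first cell (for example with `%env CUDA_LAUNCH_BLOCKING=1`) and restart the runtime if CUDA was already used. Leave it unset for normal runs, because synchronous launches are slower.

4. Assertion `input_val >= zero && input_val <= one` Failed:

This assertion comes from the binary cross-entropy loss (`nn.BCELoss` / `F.binary_cross_entropy`), which requires its input to be probabilities between 0 and 1. It usually means that raw logits, or NaN values, were passed to the loss. Apply `torch.sigmoid` to the model output before `BCELoss`, or switch to `BCEWithLogitsLoss`, and check the data for NaNs or values outside the expected range.

5. Assertion `t >= 0 && t < n_classes` Failed:

This assertion comes from the negative log-likelihood loss used by `nn.CrossEntropyLoss` and `nn.NLLLoss`, and means that a target label is negative or not smaller than the number of classes. With C classes, labels must be integers from 0 to C - 1; labels numbered 1 to C, or a placeholder such as -1 that is not passed as `ignore_index`, trigger it. Also make sure the model's output layer produces as many values as there are classes.

6. Using `BCEWithLogitsLoss`:

`BCEWithLogitsLoss` expects raw logits and applies the sigmoid internally, so do not apply a sigmoid to the model output yourself before passing it in; doing so applies it twice and distorts the loss. The target must have the same shape as the input and hold values between 0 and 1 (typically 0.0 or 1.0 as floats).

7. RuntimeError: CUDA error: invalid device ordinal:

This is a related but different error: it occurs when code requests a GPU index that does not exist, for example `cuda:1` on a machine with a single GPU. Select a valid device (indices run from 0 to `torch.cuda.device_count() - 1`) and remember that `CUDA_VISIBLE_DEVICES` renumbers the visible GPUs starting from 0.

In conclusion, encountering the runtime error “RuntimeError: CUDA error: device-side assert triggered” can be frustrating, but understanding its common causes and applying the troubleshooting steps above can help you identify and resolve the issue efficiently.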
Remember to thoroughly review your code, check for out-of-range indices and labels, verify memory allocations and kernel arguments, and investigate any environmental or compatibility issues. With attention to detail, you can overcome this error and harness the power of CUDA for your parallel computing needs.

FAQs:

Q1. What does the error “RuntimeError: CUDA error: device-side assert triggered” mean?

A1. It means that an assertion inside a GPU kernel (a device-side assert) failed while a CUDA program was running.

Q2. How can I fix the “RuntimeError: CUDA error: device-side assert triggered” error?

A2. First rerun with `CUDA_LAUNCH_BLOCKING=1` to find the operation that fails, then check its inputs: out-of-range indices or class labels and invalid loss inputs are the most common causes. Beyond that, check memory allocations and kernel arguments, verify memory operations, confirm that your GPU's compute capability is supported, review the code for bugs or logical errors, and examine your environment and configuration.

Q3. What role does `TORCH_USE_CUDA_DSA` play in fixing the error?

A3. Building PyTorch from source with `TORCH_USE_CUDA_DSA` enables extra device-side assertion reporting, which gives more detailed information about the failure. It does not fix the error itself, but it helps you locate it.

Q4. What is the significance of setting `CUDA_LAUNCH_BLOCKING` to 1?

A4. It makes CUDA kernel launches synchronous, so errors are reported by the call that caused them, with an accurate stack trace. It works in any environment, including Jupyter Notebook and Colab, as long as it is set before CUDA is initialized.

Q5. How can I resolve assertion failures related to tensor indexing or selection (such as `srcIndex < srcSelectDimSize`)?

A5. Perform proper bounds checking and make sure every index is between 0 and the size of the indexed dimension minus one.

Q6. Why might I encounter an “Assertion `input_val >= zero && input_val <= one` failed” error?

A6. This is the input range check in binary cross-entropy (`BCELoss`). It fires when the loss receives values outside [0, 1] or NaNs, typically raw logits. Apply a sigmoid first, or use `BCEWithLogitsLoss` instead.

Q7. What does an “Assertion `t >= 0 && t < n_classes` failed” error indicate?

A7. A target label in a classification loss such as `CrossEntropyLoss` is outside the valid range. Make sure labels run from 0 to the number of classes minus one and that the model outputs the correct number of classes.

Q8. How should I handle the “RuntimeError: CUDA error: invalid device ordinal” error?

A8. It means your code is requesting a GPU index that does not exist. Select a valid device index, and check that the device IDs in your code (and any `CUDA_VISIBLE_DEVICES` setting) are configured correctly.
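The A6 answer above blames the `input_val` assertion on logits reaching `BCELoss`. The plain-Python sketch below writes out both losses for a single element to show why: `bce` mirrors the assertion's range check (and the clamping of its log terms at -100 that the PyTorch `BCELoss` documentation describes), while `bce_with_logits` uses the standard numerically stable form, which accepts any real number. The function names are hypothetical, and this illustrates the formulas rather than PyTorch's actual implementation.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic function, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def _clamped_log(p: float) -> float:
    # Clamp log outputs at -100 (as BCELoss does) so p == 0 stays finite.
    return -100.0 if p == 0.0 else max(math.log(p), -100.0)


def bce(prob: float, target: float) -> float:
    """Binary cross-entropy on a probability, like BCELoss for one element."""
    # Same condition as the CUDA assertion `input_val >= zero && input_val <= one`;
    # NaN fails it too, because every comparison with NaN is False.
    if not (0.0 <= prob <= 1.0):
        raise ValueError(f"BCE input must be a probability in [0, 1], got {prob}")
    return -(target * _clamped_log(prob) + (1.0 - target) * _clamped_log(1.0 - prob))


def bce_with_logits(logit: float, target: float) -> float:
    """BCE on a raw logit, like BCEWithLogitsLoss for one element.

    max(x, 0) - x*y + log(1 + exp(-|x|)) equals bce(sigmoid(x), y)
    but never overflows, so any real-valued logit is accepted.
    """
    return max(logit, 0.0) - logit * target + math.log1p(math.exp(-abs(logit)))


logit, target = 2.5, 0.0
print(round(bce_with_logits(logit, target), 4))  # 2.5789
print(round(bce(sigmoid(logit), target), 4))     # 2.5789
# bce(logit, target) would raise ValueError: 2.5 is a logit, not a probability.
```

Because `bce_with_logits(x, y)` equals `bce(sigmoid(x), y)`, switching to `BCEWithLogitsLoss` gives the same loss with better numerical stability; the mistake to avoid is applying the sigmoid twice.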


What Is a Device-Side Assert Error in CUDA?

When it comes to parallel computing platforms, CUDA is one of the most popular choices. Developed by NVIDIA, CUDA allows developers to harness the power of NVIDIA GPUs for general-purpose computing. However, like any programming framework, CUDA has its own set of errors and pitfalls. One such error is the device side assert error.

In CUDA programming, assertions check that assumptions made in the code actually hold. They are particularly helpful during debugging, because they catch problems early. Device-side asserts are assertions that run in device code, that is, inside GPU kernels: CUDA supports the standard C `assert()` macro in kernels on devices of compute capability 2.x and higher, and libraries such as PyTorch use assertions to check the inputs their kernels receive.

What causes a device side assert error?

A device side assert error occurs when a device side assert statement fails during program execution, that is, when an assertion condition evaluates to false, indicating that an assumption made in the code does not hold. Device side asserts are commonly used to validate inputs, memory accesses, and array bounds.

There are several common scenarios that can lead to a device side assert error:

1. Invalid or out-of-bounds memory access: This can occur when trying to access memory that is not allocated or exceeds the allocated bounds.

2. Uninitialized variables: If a variable is not properly initialized before its use, it can trigger a device side assert error.

3. Unexpected input values: When assumptions on the input values made by the code do not hold, it can lead to assertion failures.

4. Incorrect algorithm implementation: If the underlying algorithm of a CUDA program is flawed or contains logical errors, it can result in assertion failures.

5. Race conditions: If multiple threads access and modify the same memory location without proper synchronization, it can lead to assertion failures.

How to handle device side assert errors?

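Device side assert errors should not be taken lightly, as they indicate bugs or invalid data. When a device-side assert fails, kernel execution stops, the device prints a message with the source file and line, the block and thread indices, and the failed condition, and the host receives the error `cudaErrorAssert`. No further work can be sent to the device until it is reset, which in practice (for example in PyTorch) means restarting the process.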

Here are some steps to handle device side assert errors effectively:

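1. Debugging: Identify the location and cause of the assertion failure. The message printed by the device usually names the source file, line, and failed condition. Because kernel launches are asynchronous, the host-side stack trace may point to a later call; setting `CUDA_LAUNCH_BLOCKING=1` makes it point to the launch that failed. Tools such as NVIDIA Nsight, `cuda-gdb`, and `compute-sanitizer` can help you step through the code and find the root cause.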

2. Analyzing input values: Check the input values used in the failed assert condition. Verify if they match the assumptions made by the code. Inspecting invalid or unexpected input values could help discover the cause of the assertion failure.

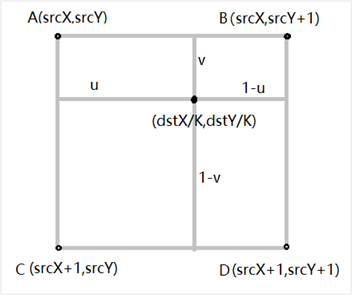

3. Memory access validation: Review the memory accesses in the code and ensure that they are within the allocated bounds. Check if the necessary memory allocations and copies are performed correctly.

4. Initialization: Make sure all variables are properly initialized before their use. Uninitialized variables can often lead to assertion failures.

5. Algorithm validation: Review the underlying algorithm implemented in the CUDA program. Ensure it is correctly implemented and does not contain any logical errors. Comparing the algorithm with a reference implementation or algorithm specification can be helpful.

6. Synchronization and thread safety: Inspect the code for potential race conditions where multiple threads access and modify the same memory location concurrently. Introduce proper synchronization, such as `__syncthreads()` barriers within a thread block or atomic operations, to avoid assertion failures due to race conditions.

7. Logging and error handling: Consider incorporating logging mechanisms within your CUDA program to record important events and errors. This can help in tracking down issues that occur during program execution.
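Steps 2 and 7 (analyzing input values and logging) can be combined: log a short summary of each input right before the GPU step, so the values that broke an assertion are on record even after the CUDA context becomes unusable. The sketch below is a minimal, standard-library version; the helper `summarize_values` and the logger name `gpu_debug` are hypothetical, and it assumes the values are already on the CPU as Python numbers (for a PyTorch tensor, pass `t.flatten().tolist()`).

```python
import logging
import math
from typing import Dict, Iterable

logger = logging.getLogger("gpu_debug")  # hypothetical logger name


def summarize_values(name: str, values: Iterable[float]) -> Dict[str, float]:
    """Log count, NaN/inf counts, and the finite min/max of one input."""
    vals = [float(v) for v in values]
    finite = [v for v in vals if math.isfinite(v)]
    stats: Dict[str, float] = {
        "count": len(vals),
        "nan": sum(math.isnan(v) for v in vals),
        "inf": sum(math.isinf(v) for v in vals),
        # min/max over finite values only; NaN if there are none
        "min": min(finite) if finite else math.nan,
        "max": max(finite) if finite else math.nan,
    }
    logger.info("%s: %s", name, stats)
    return stats


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    # A max above 1 or any NaN here would explain `input_val <= one` failing.
    summarize_values("bce_input", [0.2, 1.3, float("nan")])
```

Comparing the logged min and max against the failed condition (for example, `t < n_classes` against the largest label) usually identifies the bad input directly.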

FAQs about device side assert errors in CUDA programming:

Q: Can device side assert errors be disabled?

A: Yes. Device-side `assert()` calls are compiled out when the `NDEBUG` macro is defined before `assert.h` is included. This is generally not recommended, however, because the underlying bug remains and only becomes harder to detect. Prebuilt PyTorch binaries keep their kernel assertions enabled.

Q: Are device side assert errors specific to NVIDIA GPUs?

A: The message discussed in this article comes from CUDA on NVIDIA GPUs. Other GPU programming platforms, such as AMD's HIP, also support assertions in device code, but report failures with their own error messages.

Q: Can device side assert errors be caught and handled programmatically?

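A: Only partially. In PyTorch the failure surfaces as a Python `RuntimeError`, which can be caught, but the CUDA context is left in an unusable state: further CUDA calls in the same process keep failing. Catching the error is therefore useful only for logging or saving CPU-side state before restarting the process.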
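Given that answer, a reasonable pattern is to catch the error only to stop cleanly, never to retry. The sketch below wraps one unit of GPU work; `run_guarded` is a hypothetical helper, and it assumes the failure arrives as a `RuntimeError` whose message contains “device-side assert triggered”, as in the PyTorch error discussed here.

```python
from typing import Any, Callable


def run_guarded(step: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
    """Run one GPU step; turn a device-side assert into an explicit exit.

    After a device-side assert the CUDA context cannot be used again in this
    process, so retrying or continuing would only raise further CUDA errors.
    """
    try:
        return step(*args, **kwargs)
    except RuntimeError as err:
        if "device-side assert triggered" in str(err):
            # Only CPU-side state (logs, current batch indices, ...) can be
            # saved here; GPU tensors can no longer be read back.
            raise SystemExit(
                "Device-side assert: fix the offending input and restart the "
                "process (rerun with CUDA_LAUNCH_BLOCKING=1 to locate it)."
            ) from err
        raise  # any other RuntimeError is passed through unchanged
```

Here `step` stands for your own training or inference function, for example `run_guarded(train_one_epoch, model, loader)`, where `train_one_epoch` is hypothetical.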

Q: What is the difference between device side asserts and host side asserts in CUDA?

A: Device side asserts are used to check assumptions and validate potential errors on the device side, whereas host side asserts are used on the host side of CUDA programs. Host side asserts are evaluated on the host CPU and provide information about failures in host code.

Q: How can I improve the performance of my CUDA program while handling device side assert errors?

A: Handling device-side asserts is mainly about correctness rather than speed, so fix the root cause of each assert first. Once the code is correct, optimizing memory access patterns and ensuring thread safety both improve performance and help prevent new asserts.

In conclusion, device side assert errors in CUDA programming indicate failed assertions on the device side of CUDA programs. These errors can be caused by invalid memory accesses, uninitialized variables, unexpected input values, flawed algorithms, and race conditions. Handling device side assert errors requires careful debugging, analyzing input values, validating memory accesses, ensuring proper initialization, validating algorithms, and managing synchronization. It is important to understand and address device side assert errors effectively to create robust and reliable CUDA programs.

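What Is CUDA Error 59?

In older CUDA releases, error code 59 was the numeric value of `cudaErrorAssert`, the same “device-side assert triggered” error discussed above; older PyTorch versions reported it as “CUDA runtime error (59) : device-side assert triggered”. Newer CUDA releases use the value 710 for `cudaErrorAssert`. The most common cause is therefore an assertion failing inside a kernel, for example because of an out-of-range index, but the environment can also play a role. In this article, we explore possible causes of this error and provide solutions to troubleshoot and fix it.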

Possible Causes of CUDA Error 59:

1. Incompatible hardware: CUDA error 59 can occur if the installed GPU does not support the operations required by the CUDA application. Ensure that your GPU is compatible with the CUDA version and APIs being used.

2. Driver issues: GPU drivers play a crucial role in CUDA applications. Outdated, corrupt, or incompatible GPU drivers can trigger error 59. Make sure you have the latest GPU drivers installed that are compatible with your CUDA version.

3. Insufficient memory: If your application requires more GPU memory than is available, allocations fail. This is normally reported as a separate “out of memory” error rather than as error 59, but it is worth ruling out. Check the memory requirements of your application and ensure that your GPU has enough memory to handle the workload.

4. Insufficient power supply: GPUs require a steady and adequate power supply, and insufficient power can cause unpredictable GPU failures. It is an unlikely cause of a device-side assert, but worth ruling out if the error appears at random rather than reproducibly. Verify that your GPU is receiving sufficient power, and consider upgrading the power supply if needed.

5. Interference with other software: Software conflicts are a rare cause of CUDA errors. If you suspect one, temporarily disable possibly interfering software, such as antivirus or firewall programs, and run your CUDA application again.

How to Troubleshoot and Fix CUDA Error 59:

1. Update GPU Drivers: Visit the official NVIDIA website and download the latest drivers compatible with your GPU model and CUDA version. Install the drivers and restart your computer before running the CUDA application.

2. Check GPU Compatibility: Verify that your GPU supports the CUDA version and APIs used by your application. Refer to the official NVIDIA documentation or contact their support for GPU compatibility details.

3. Free Up GPU Memory: If your application has high memory requirements, consider optimizing your code or reducing the workload to fit within the available GPU memory. Alternatively, you can try using a GPU with higher memory capacity.

4. Verify Power Supply: Ensure that your GPU is adequately powered. Check the power connections and consider upgrading your power supply if it cannot handle the power demands of your GPU.

5. Disable Interfering Software: Temporarily disable any software that may interfere with CUDA operations, such as antivirus or firewall programs. If disabling them resolves the issue, add exceptions to allow your CUDA application to run without interference.

FAQs:

Q: Can CUDA error 59 occur on all operating systems?

A: Yes, CUDA error 59 can occur on any operating system that CUDA supports, such as Windows and Linux (NVIDIA ended macOS support after CUDA 10.2), if the underlying causes discussed above are present.

Q: Is CUDA error 59 specific to certain GPU models?

A: No, CUDA error 59 is not specific to any particular GPU model. It can potentially occur on any NVIDIA GPU if the mentioned causes are applicable.

Q: Are there tools available to diagnose and fix CUDA error 59?

A: Yes. The CUDA toolkit includes debugging tools such as `compute-sanitizer` and `cuda-gdb`, which can help locate the failing kernel and the invalid memory access or assertion behind error 59.

Q: Can outdated CUDA libraries cause error 59?

A: Yes, outdated CUDA libraries may cause compatibility issues and trigger CUDA error 59. Always ensure that your CUDA libraries are up to date and compatible with your CUDA application.

In conclusion, CUDA error 59 can be a frustrating obstacle for developers using NVIDIA’s CUDA framework for parallel computing tasks. By understanding the potential causes and following the troubleshooting steps mentioned in this article, developers can effectively diagnose and fix this error. Remember to keep your GPU drivers up to date, verify GPU compatibility, manage memory efficiently, ensure sufficient power supply, and address any software interferences. With these steps, you can mitigate CUDA error 59 and achieve optimal performance in your CUDA applications.


Compile With `TORCH_USE_CUDA_DSA` To Enable Device-Side Assertions

The PyTorch library is renowned for its efficient deep learning capabilities, making it a popular choice among researchers and developers. To make failures inside its GPU kernels easier to diagnose, PyTorch can be built with device-side assertion (DSA) support by enabling the `TORCH_USE_CUDA_DSA` build option. In this article, we look at what device-side assertions are, why they are useful, and answer some frequently asked questions.

## Understanding Device-Side Assertions

Assertions play a crucial role in software development, as they allow developers to identify and fix issues by testing assumptions about the program’s behavior. PyTorch takes this concept a step further by incorporating assertions on both the host (CPU) and device (GPU) sides. Device-side assertions enable immediate error detection at the source of execution, providing valuable insights into code correctness.

By default, CUDA kernels run asynchronously with respect to the CPU. When an assertion inside a PyTorch kernel fails, the error is only reported at a later synchronizing CUDA call, so the Python stack trace often points at an unrelated line. This delay can impede debugging, especially with large models and datasets. Building with `TORCH_USE_CUDA_DSA` makes PyTorch track information about the failed assertion and the kernel launch that caused it, so the error message can tell you where the failure originated.
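Whether or not PyTorch was built with `TORCH_USE_CUDA_DSA`, the delayed reporting described above can be avoided while debugging by setting `CUDA_LAUNCH_BLOCKING=1`. The sketch below sets it from Python; the helper name `enable_cuda_launch_blocking` is hypothetical, and it assumes, as is commonly recommended, that the variable must be set before CUDA is initialized in the process.

```python
import os
import sys
import warnings


def enable_cuda_launch_blocking() -> None:
    """Ask CUDA to run kernel launches synchronously (debugging only).

    Assumes the variable is read when CUDA initializes, so call this before
    the first CUDA operation in the process; safest is before importing torch.
    """
    if "torch" in sys.modules:
        # torch initializes CUDA lazily, so an earlier import is not necessarily
        # too late; but if CUDA was already used, the setting may be ignored.
        warnings.warn(
            "torch was imported before CUDA_LAUNCH_BLOCKING was set; if CUDA has "
            "already been used, restart the process or notebook kernel."
        )
    os.environ["CUDA_LAUNCH_BLOCKING"] = "1"


enable_cuda_launch_blocking()
# import torch  # import torch (and start using CUDA) only after this point
```

From a shell, the equivalent is `CUDA_LAUNCH_BLOCKING=1 python train.py` (with `train.py` standing in for your own script); in Jupyter Notebook or Colab, `%env CUDA_LAUNCH_BLOCKING=1` in the first cell does the same. Remove the setting for normal runs, since synchronous launches are slower.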

## Enabling Device-Side Assertions

To use device-side assertion tracking, the option must be enabled when building PyTorch from source; the prebuilt PyTorch packages are built without it. Once it is enabled, PyTorch's CUDA kernels record which assertion failed and where the failing kernel was launched, so the error report points at the actual source of the problem.

To compile PyTorch with device-side assertions, follow these steps:

1. Clone the PyTorch repository: `git clone https://github.com/pytorch/pytorch.git`.

2. Enter the cloned repository: `cd pytorch`.

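3. Enable device-side assertions for the build: `export USE_CUDA_DSA=1`. The error message refers to this option as `TORCH_USE_CUDA_DSA`; check the build instructions for your PyTorch version for the exact variable name it expects.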

4. Build PyTorch as per your desired configuration (e.g., `python setup.py install`).

Here, DSA stands for device-side assertions. As with any CUDA build of PyTorch, you need a CUDA toolkit that is compatible with your GPU and driver, so check this before you start the build.

## FAQs

Q1: What are the benefits of using device-side assertions?

Device-side assertions improve debugging by reporting which kernel assertion failed and where the kernel was launched. This lets developers identify issues at their source, leading to faster debugging turnaround times and more efficient development processes.

Q2: Can I use device-side assertions in any PyTorch-based application?

Device-side assertions can be utilized in any PyTorch-based application, including both research and production environments. However, to take advantage of this feature, you must compile PyTorch with the `torch_use_cuda_dsa` flag enabled.

Q3: Do I need to make any code modifications to use device-side assertions?

No, you do not need to change your Python code. The feature instruments the assertions inside PyTorch's own CUDA kernels; Python `assert` statements in your code are unaffected and still run on the CPU. Once you run a PyTorch build with device-side assertions enabled, failures inside those kernels are reported with the additional information.

Q4: Are there any performance implications when using device-side assertions?

Enabling device-side assertions comes with some performance overhead due to the additional checks performed on the GPU. However, the benefits of improved debugging and error detection usually outweigh this minor impact, especially in complex applications.

Q5: Can I use device-side assertions with distributed PyTorch frameworks such as Horovod?

In principle, yes. Device-side assertion tracking is part of the PyTorch build, so it applies wherever that build runs its CUDA kernels, including each GPU process in a distributed setup such as Horovod; each process reports assertion failures on its own device.

In conclusion, building PyTorch with the `TORCH_USE_CUDA_DSA` option is a valuable step toward improving the debugging experience with GPU-accelerated deep learning applications. With more informative reports of failed kernel assertions, developers can quickly identify and resolve issues, leading to more robust code. Remember to check that your CUDA toolkit and driver are compatible before building, and enjoy the enhanced debugging capabilities offered by device-side assertions!

