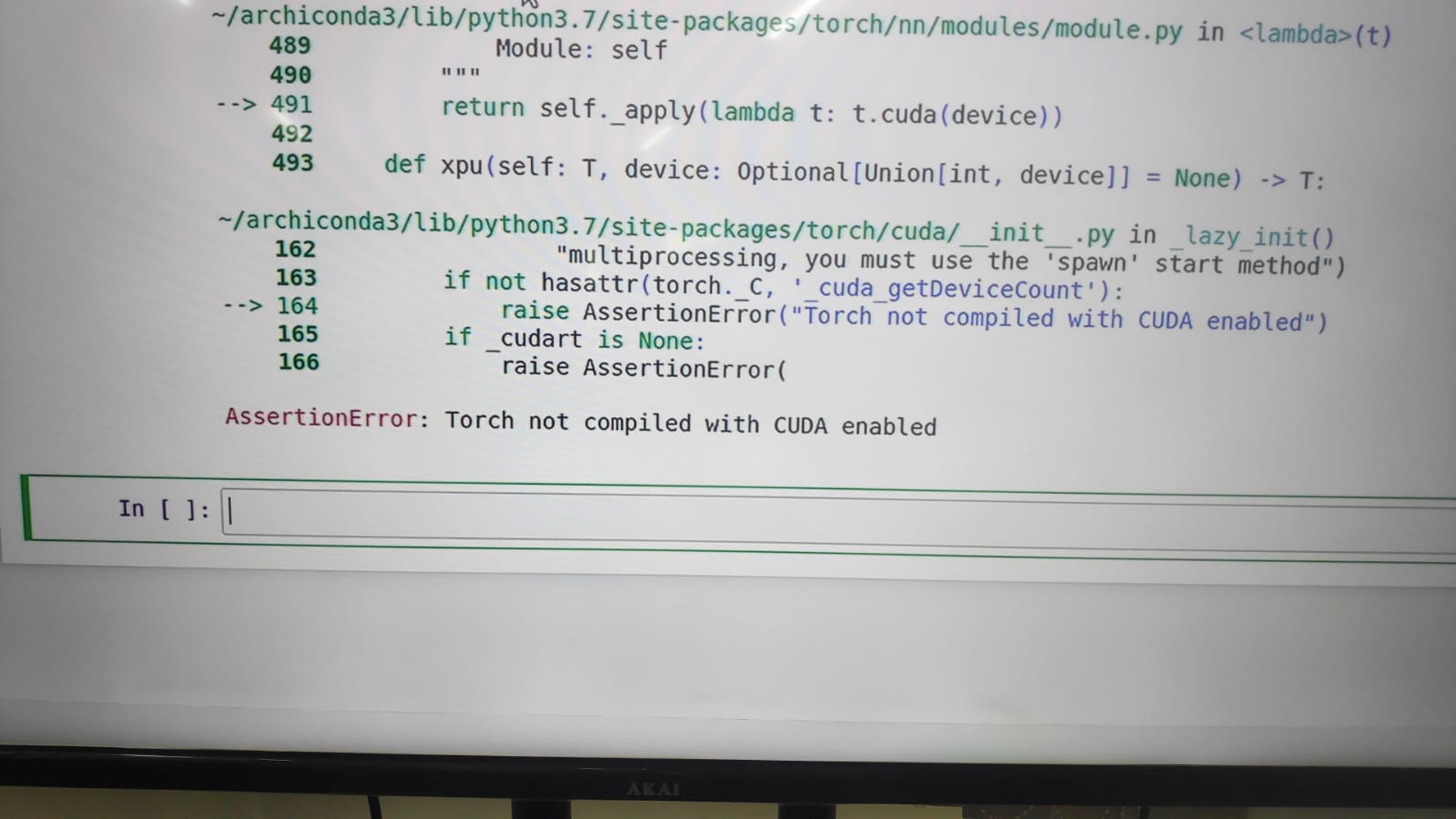

AssertionError: Torch Not Compiled With CUDA Enabled

If you’ve encountered the assertion error “Torch not compiled with CUDA enabled,” you may be experiencing difficulties utilizing CUDA, a parallel computing platform and API model developed by NVIDIA. CUDA enables developers to use GPUs for general-purpose computing tasks, including accelerating deep learning algorithms. This article will explore the potential causes of this error and provide possible solutions to address it.

Causes of the Assertion Error: Torch not compiled with CUDA enabled

1. Failure to install CUDA toolkit:

One possible cause of the “Torch not compiled with CUDA enabled” error is a missing CUDA toolkit. The toolkit is required to compile CUDA code, so it matters when you build PyTorch or CUDA extensions from source. The prebuilt PyTorch binaries from pip and conda bundle their own CUDA runtime libraries, so with those the toolkit is usually not the culprit; check the PyTorch build itself first (see cause 7).

2. Incompatible GPU:

Another cause could be an incompatible GPU. CUDA requires a compatible NVIDIA GPU to function properly. Check if your GPU meets the minimum requirements specified by CUDA. If your GPU is not supported, you may need to consider alternative methods for running PyTorch with CPU-only support.

3. Incorrect PyTorch installation:

Incorrect installation of PyTorch could also lead to the assertion error. Ensure that you have installed the correct version of PyTorch, compatible with CUDA. You can verify this by checking the PyTorch documentation or the official website for the recommended installation steps.

4. Missing or outdated CUDA drivers:

Outdated or missing NVIDIA GPU drivers can cause compatibility issues with PyTorch. The driver must be recent enough for the CUDA version your PyTorch build was compiled against, otherwise PyTorch cannot communicate with the GPU. Visit the NVIDIA website to download and install the latest driver suitable for your GPU and operating system.

5. Mismatched PyTorch and CUDA versions:

Mismatched versions of PyTorch and CUDA can lead to the assertion error. Check if the versions of PyTorch and CUDA you have installed are compatible. In some cases, you may need to downgrade or upgrade either PyTorch or CUDA to achieve compatibility.

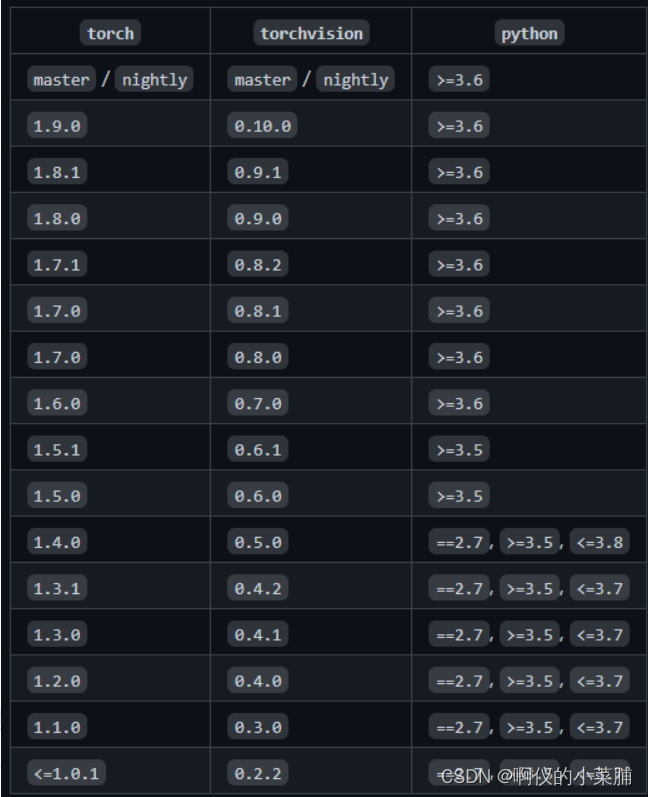

6. Python version incompatibility:

PyTorch and CUDA may have specific version requirements for Python. Ensure that you are using a compatible version of Python as specified in the PyTorch documentation. Incompatibility between Python versions can cause the “Torch not compiled with CUDA enabled” error.

7. Torch not built with CUDA support:

The specific PyTorch distribution you have installed may not have been compiled with CUDA support. Ensure that you have downloaded and installed the appropriate PyTorch version with CUDA support enabled. You can usually find this information on the PyTorch website or documentation.

8. Potential conflict with other libraries or packages:

Conflicts with other libraries or packages can sometimes interfere with PyTorch’s CUDA functionality. It is recommended to check for any potential conflicts with existing packages or libraries on your system. Compatibility issues can arise, particularly if you have multiple versions of PyTorch or CUDA installed simultaneously.
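
Most of the causes above can be narrowed down from inside Python. The following is a minimal diagnostic sketch, not an official tool: the function name `diagnose_torch_cuda` and the report keys are made up for illustration, while `torch.__version__`, `torch.version.cuda`, `torch.cuda.is_available()` and `torch.cuda.device_count()` are regular PyTorch attributes and calls.

```python
import importlib.util


def diagnose_torch_cuda():
    """Collect the facts that usually explain the error, without raising.

    The function name and the dictionary keys are illustrative only.
    """
    report = {"torch_installed": importlib.util.find_spec("torch") is not None}
    if not report["torch_installed"]:
        return report

    import torch

    report["torch_version"] = torch.__version__
    # torch.version.cuda is None when the build has no CUDA support.
    report["built_with_cuda"] = torch.version.cuda
    # False for a CPU-only build, or when no usable driver/GPU is found.
    report["cuda_available"] = torch.cuda.is_available()
    report["gpu_count"] = torch.cuda.device_count() if report["cuda_available"] else 0
    return report


if __name__ == "__main__":
    for key, value in diagnose_torch_cuda().items():
        print(f"{key}: {value}")
```

If `built_with_cuda` is `None`, the installed build is the problem (causes 3 and 7). If it shows a CUDA version but `cuda_available` is `False`, the driver or the GPU is the more likely suspect (causes 2 and 4).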

FAQs

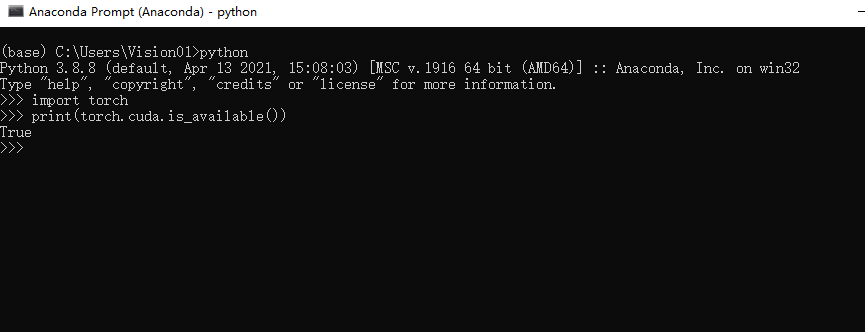

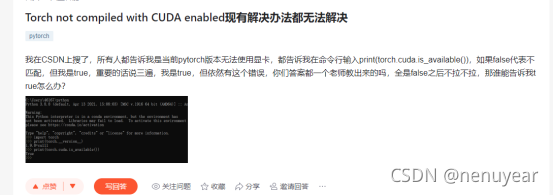

Q: How do I know if my PyTorch installation has CUDA support enabled?

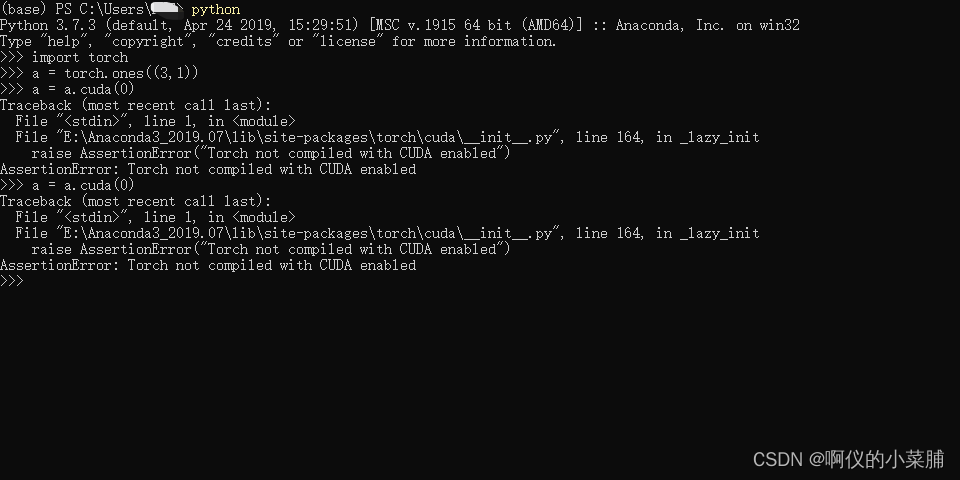

A: You can check if CUDA support is enabled in PyTorch by running the following line of code:

```python
import torch

print(torch.cuda.is_available())
```

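If the output is `True`, PyTorch can use CUDA. If it is `False`, either the installed PyTorch build has no CUDA support (in that case `torch.version.cuda` is `None`), or the build is fine but no usable NVIDIA driver or GPU was found.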

Q: I’m using PyCharm, and I encounter the “Torch not compiled with CUDA enabled” error. What should I do?

A: If you’re using PyCharm, ensure that you have set up the correct Python interpreter in your project settings. Verify that the interpreter used in the PyCharm environment matches the environment where you successfully installed PyTorch with CUDA support.
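
A quick way to confirm which interpreter PyCharm is actually using is to print it from inside the project. This is a small sketch; the helper name `interpreter_report` is hypothetical, and it only relies on the standard library (`sys.executable` and `importlib.util.find_spec`).

```python
import importlib.util
import sys


def interpreter_report():
    """Return the running interpreter and where 'torch' would be imported from."""
    spec = importlib.util.find_spec("torch")
    return {
        # Path of the Python executable running this script.
        "interpreter": sys.executable,
        # Path of the torch package, or None if it is not installed here.
        "torch_location": spec.origin if spec is not None else None,
    }


if __name__ == "__main__":
    for key, value in interpreter_report().items():
        print(f"{key}: {value}")
```

Run it once from PyCharm and once from the terminal where the CUDA-enabled installation works. If the two paths differ, PyCharm is pointing at a different environment.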

Q: I’m using a Mac, and I see the “Torch not compiled with CUDA enabled” error. What steps should I take?

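A: Macs generally do not have NVIDIA GPUs, so CUDA is not available on them. Install the standard macOS build of PyTorch and run on the CPU, or, on Apple silicon, use the MPS backend (`torch.device("mps")`) that recent PyTorch releases provide for GPU acceleration. Also make sure your code does not call `.cuda()` or request the `"cuda"` device unconditionally.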

Q: How can I enable CUDA in PyTorch?

A: CUDA support should be enabled by default if you have installed the correct CUDA-enabled version of PyTorch. However, if you encounter errors indicating that CUDA is not enabled, make sure you have followed all the installation steps correctly, including the CUDA toolkit installation.

Q: My system defaults to the CPU despite CUDA availability. Why?

A: If your system still defaults to the CPU even though CUDA is available, it may be due to improper installation, incorrect library versions, or other configuration issues. Go through the installation steps and documentation again, ensuring that all the necessary dependencies are in place.
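
One frequent reason is that the code never moves tensors or models to the GPU: PyTorch creates tensors on the CPU unless a device is requested. The sketch below shows one common pattern for choosing a device with a fallback instead of hard-coding `"cuda"`. The helper `pick_device` is a hypothetical name; `torch.cuda.is_available()`, `torch.backends.mps.is_available()` (present in recent releases) and the `device=` argument are existing PyTorch APIs.

```python
def pick_device(cuda_available, mps_available=False):
    """Pick the best device name, falling back to the CPU."""
    if cuda_available:
        return "cuda"
    if mps_available:
        return "mps"
    return "cpu"


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed in this interpreter")
    else:
        # torch.backends.mps is missing on older releases, hence getattr.
        mps = getattr(torch.backends, "mps", None)
        name = pick_device(
            torch.cuda.is_available(),
            mps is not None and mps.is_available(),
        )
        device = torch.device(name)
        # Tensors live on the CPU unless a device is requested explicitly.
        x = torch.ones(2, 2, device=device)
        print(x.device)
```

Code written this way runs on a CPU-only build without raising the assertion error, and uses the GPU when one is available.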

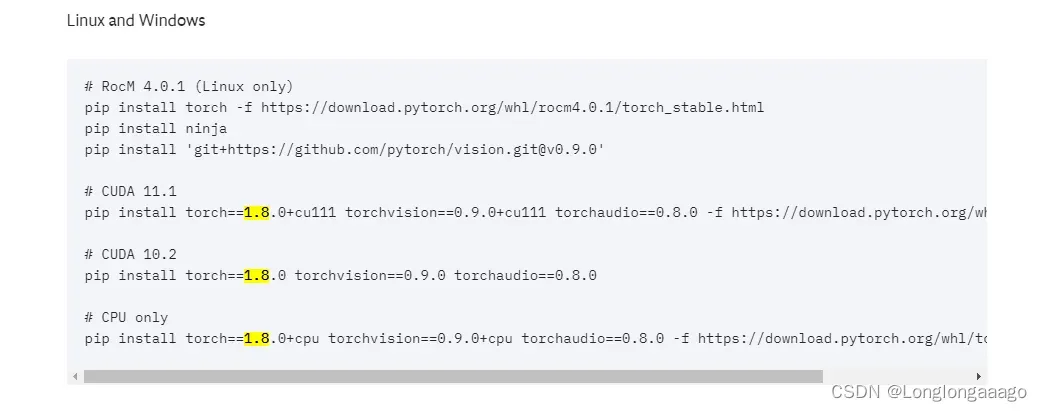

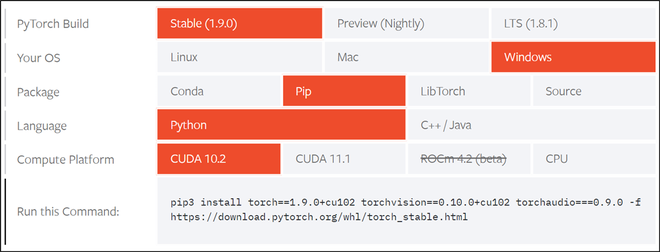

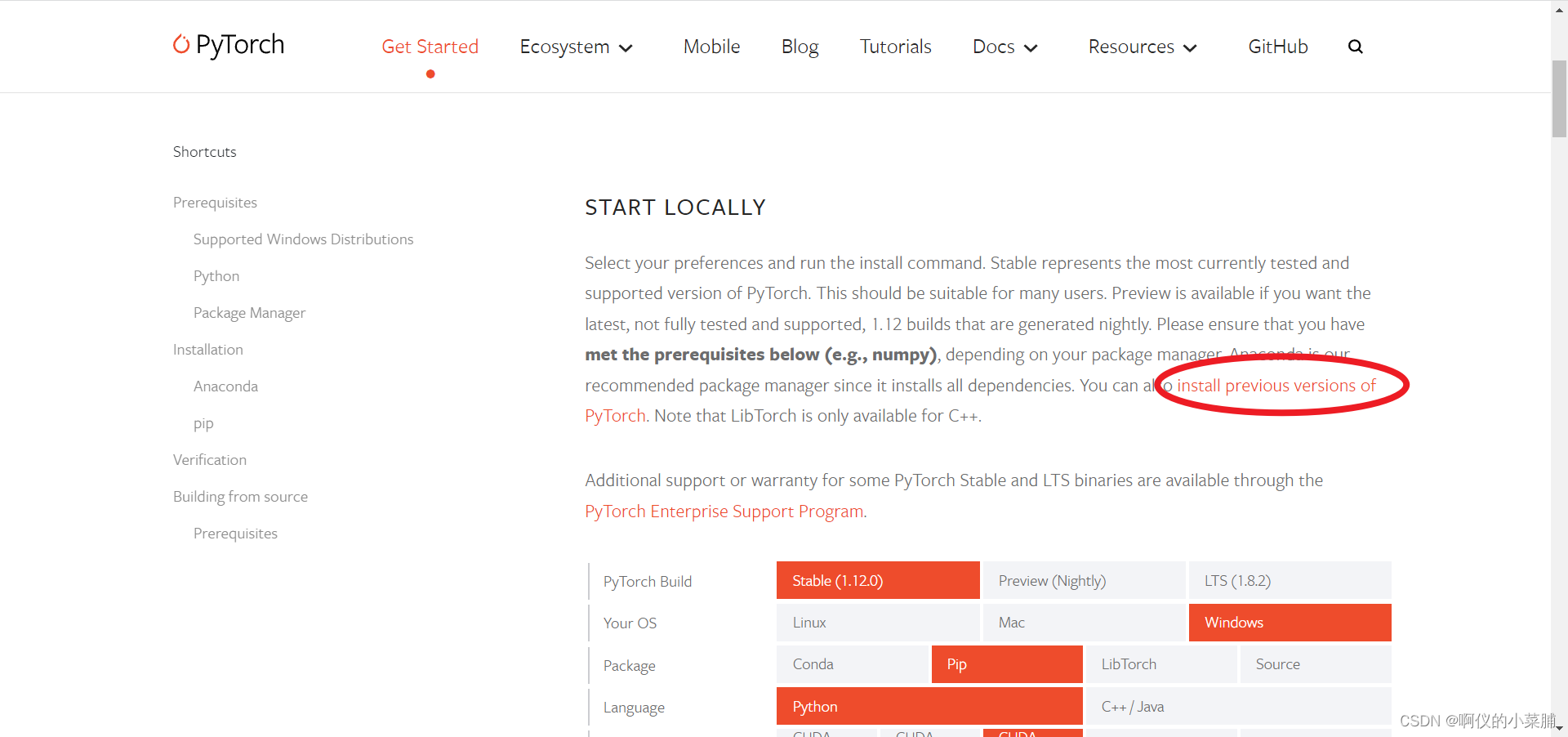

Q: How can I download PyTorch with CUDA support?

A: You can download the appropriate version of PyTorch with CUDA support from the official PyTorch website. Make sure to select the version compatible with your system and CUDA version.
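
The selector on the PyTorch website generates a pip command that points at a CUDA-specific package index. As a rough illustration, the sketch below assembles such a command. The helper name `pip_install_command` is hypothetical and the default tag `cu121` is only an assumed example; always take the exact tag and package list from the official selector for your system.

```python
def pip_install_command(cuda_tag="cu121",
                        packages=("torch", "torchvision", "torchaudio")):
    """Build a pip command for a CUDA-specific PyTorch wheel index.

    'cuda_tag' (for example 'cu118' or 'cu121') is an assumption here;
    the valid tags change between PyTorch releases.
    """
    index_url = f"https://download.pytorch.org/whl/{cuda_tag}"
    return ["pip", "install", *packages, "--index-url", index_url]


if __name__ == "__main__":
    # Print the command instead of running it, so nothing is installed by accident.
    print(" ".join(pip_install_command("cu121")))
```

Uninstalling any existing CPU-only build first (`pip uninstall torch`) avoids pip keeping the old package because the version number already matches.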

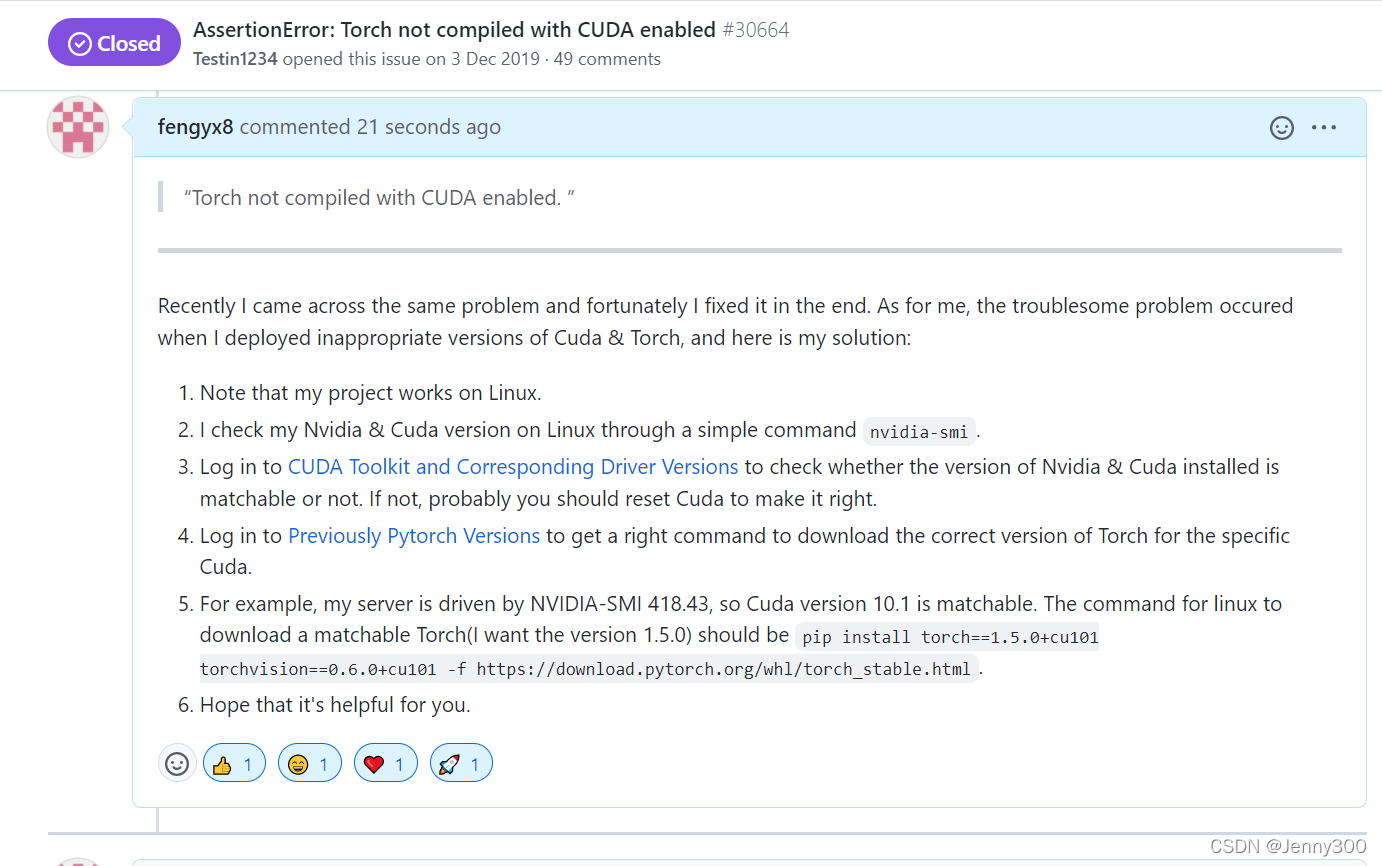

Q: I encounter the specific error “CUDAAssertionError: Torch not compiled with CUDA enabled.” How can I resolve it?

A: The specific error message indicates that the Torch library you are using was not built with CUDA support. Double-check your PyTorch installation and ensure that you have downloaded the correct version of PyTorch compiled with CUDA. If the problem persists, consider reinstalling PyTorch with the appropriate CUDA-enabled version.

In conclusion, encountering the assertion error “Torch not compiled with CUDA enabled” can be frustrating, but it is typically caused by issues relating to CUDA toolkit installation, GPU compatibility, incorrect PyTorch installation, outdated CUDA drivers, or version mismatches. By following the provided solutions and the related FAQs, you should be able to resolve the error and leverage the power of CUDA in PyTorch for efficient deep learning computations.


What Is “AssertionError: Torch Not Compiled With CUDA Enabled” on Windows?

If you are a deep learning enthusiast or practitioner who uses the PyTorch library for your projects, you may have encountered the error message “AssertionError: torch not compiled with CUDA enabled” on your Windows machine. This error typically arises when you run PyTorch code that requires CUDA acceleration, but your installation does not support it. In this article, we will explore the reasons for this error, its implications, and the steps you can take to resolve it.

Understanding CUDA and PyTorch

Before diving into the intricacies of the error, let’s understand the two key concepts at play: CUDA and PyTorch.

CUDA (Compute Unified Device Architecture) is a parallel computing platform and API developed by NVIDIA. It provides a framework for harnessing the computational power of NVIDIA GPUs (Graphics Processing Units) to accelerate various computations, particularly those involving parallel processing. CUDA enables you to leverage the immense power of GPUs to expedite tasks like deep learning training and inference.

PyTorch, on the other hand, is an open-source machine learning library widely used in the deep learning community. Developed by Facebook’s AI Research lab, PyTorch provides an intuitive and flexible interface to build and train neural networks. It seamlessly integrates with CUDA to take advantage of GPU acceleration, significantly reducing training time and increasing overall performance.

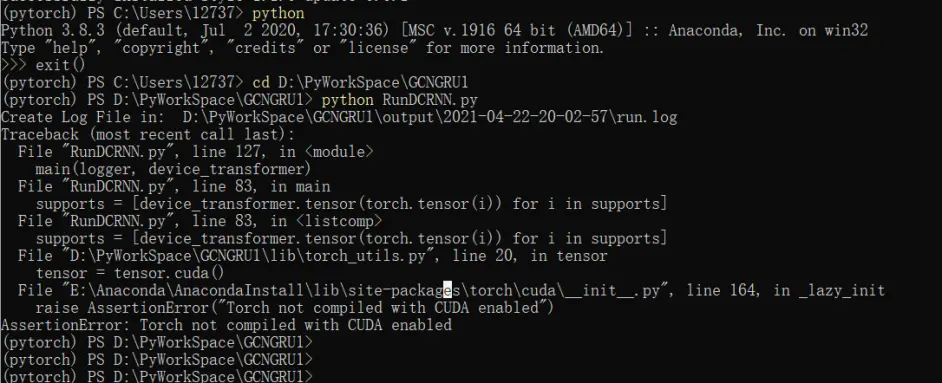

Why does the “AssertionError: torch not compiled with CUDA enabled” error occur?

The aforementioned error occurs when you attempt to execute PyTorch code that relies on CUDA support, but your installation of PyTorch lacks the necessary CUDA components. This error can be caused by several reasons:

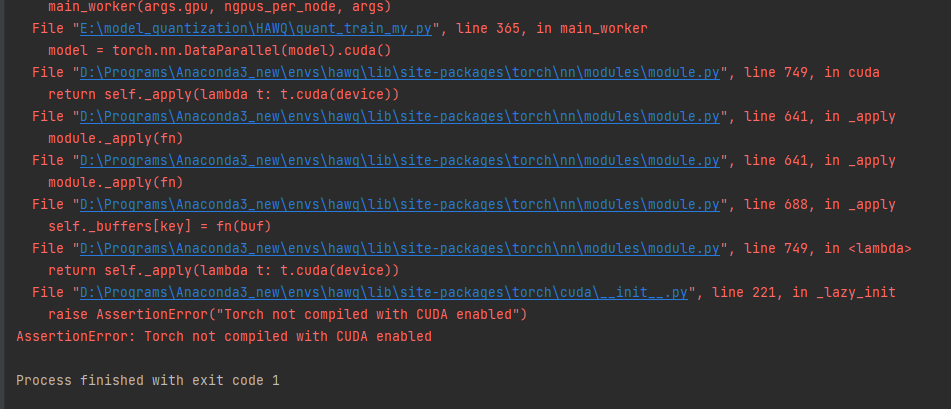

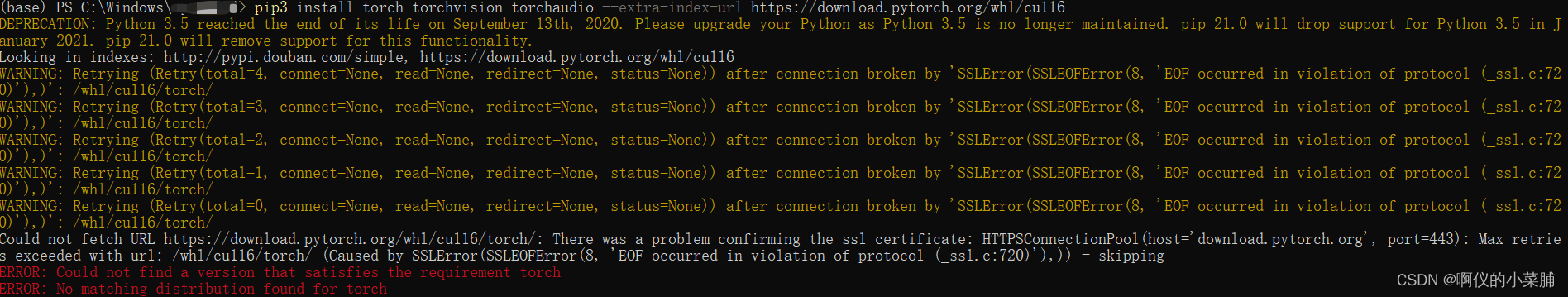

1. Incorrect PyTorch installation: If you did not install a CUDA-enabled version of PyTorch, this error is likely to occur. PyTorch provides builds with and without CUDA support, and it’s essential to choose the right version depending on your requirements.

2. Missing CUDA toolkit: The CUDA toolkit, which includes the necessary libraries, compilers, and tools, is required when you build PyTorch or CUDA extensions from source. The prebuilt pip and conda packages ship their own CUDA runtime libraries, so with those a missing toolkit is a less likely cause than a CPU-only build.

3. Outdated GPU drivers: Your GPU drivers must be up to date for your CUDA installation to function correctly. If you recently updated PyTorch or installed a new GPU, it is crucial to ensure that the GPU drivers are compatible with the installed CUDA version.
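
For the first cause, the version string itself often reveals which build is installed: wheels from the PyTorch package index commonly carry a suffix such as `+cpu` or `+cu121`. The sketch below extracts that suffix. The helper name `build_flavor` is hypothetical, and not every build has a suffix (conda and macOS builds often do not), so treat `torch.version.cuda` as the more reliable check.

```python
def build_flavor(version):
    """Return the tag after '+', e.g. 'cpu' or 'cu121', or None if absent."""
    _, separator, local_tag = version.partition("+")
    return local_tag if separator else None


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed in this interpreter")
    else:
        print("version:", torch.__version__)
        print("flavor from version string:", build_flavor(torch.__version__))
        print("torch.version.cuda:", torch.version.cuda)
```

A flavor of `cpu` means the installed package can never use the GPU, no matter which drivers or toolkits are present.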

Resolving the “AssertionError” error

Now that we understand the possible causes of the error, let’s explore the solutions to resolve it:

1. Verify CUDA installation: First, check if you have installed the CUDA toolkit correctly on your Windows machine. Visit the NVIDIA website to download the latest version of the CUDA toolkit. Ensure that you select the appropriate version compatible with your GPU and Windows operating system.

2. Update GPU drivers: CUDA only runs on NVIDIA GPUs, so download the latest driver for your specific GPU model from the NVIDIA website. Make sure to select the version that corresponds to your Windows operating system. After installing the updated driver, restart your system and try running the PyTorch code again.

3. Reinstall PyTorch: If the above steps did not resolve the issue, consider reinstalling PyTorch with the appropriate CUDA support. Uninstall the existing PyTorch version and install a CUDA-enabled build using the command generated by the installation selector on the official website, making sure that a CUDA compute platform (not CPU) is selected.

4. Check environment variables: Verify that the required environment variables for CUDA and PyTorch are properly set. In Windows, navigate to the “Environment Variables” section (accessible through the system’s settings), and ensure that the relevant variables (e.g., CUDA_HOME) are correctly configured. If not, add them manually by browsing to the appropriate locations where CUDA and PyTorch are installed.
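
The environment can also be inspected from Python rather than through the Windows settings dialog. The following sketch only reads values and changes nothing. The helper name `cuda_environment` is hypothetical; `CUDA_PATH` is the variable the Windows CUDA installer normally sets, and `nvcc` and `nvidia-smi` are the toolkit compiler and the driver utility.

```python
import os
import shutil


def cuda_environment():
    """Report CUDA-related environment variables and tools found on PATH."""
    return {
        "CUDA_HOME": os.environ.get("CUDA_HOME"),
        "CUDA_PATH": os.environ.get("CUDA_PATH"),
        # shutil.which returns the full path, or None if the tool is not on PATH.
        "nvcc": shutil.which("nvcc"),
        "nvidia-smi": shutil.which("nvidia-smi"),
    }


if __name__ == "__main__":
    for name, value in cuda_environment().items():
        print(f"{name}: {value if value is not None else 'not found'}")
```

A missing `nvidia-smi` usually points to a driver problem, while a missing `nvcc` only matters if you compile CUDA code yourself.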

FAQs:

1. Can I use PyTorch without CUDA?

Yes, you can use PyTorch without CUDA. PyTorch provides builds that work on CPUs alone. While GPU acceleration offers improved performance, particularly for deep learning workloads, PyTorch supports both CPU and GPU computations.

2. How can I check if my PyTorch installation supports CUDA?

To verify if your PyTorch installation supports CUDA, execute the following line of code in a Python environment:

```python
import torch

print(torch.cuda.is_available())
```

If the output is “True,” PyTorch has CUDA support. If it’s “False,” your current PyTorch installation lacks CUDA support.

In conclusion, the “AssertionError: torch not compiled with CUDA enabled” error often occurs when PyTorch is not compiled to support CUDA or when the CUDA toolkit is missing or incorrectly configured. By ensuring the correct installation of the CUDA toolkit, updating GPU drivers, and verifying the PyTorch installation, you can overcome this error and leverage the power of GPU acceleration for your deep learning projects.

How To Install Torch With CUDA Enabled?

Note that “Torch” in this section refers to the original Lua-based Torch framework (Torch7), the predecessor of PyTorch, not to PyTorch itself. Torch integrates with CUDA for GPU acceleration, and the steps below build it from source on Linux with CUDA enabled. To install PyTorch with CUDA support, use the pip or conda command from the official PyTorch website instead.

Step 1: Installing CUDA Toolkit

Before we proceed with the installation of Torch, it is crucial to have the CUDA Toolkit installed on your system. CUDA is a parallel computing platform and application programming interface (API) model created by Nvidia, which facilitates the use of NVIDIA GPUs for general-purpose processing. You can download the CUDA Toolkit from the Nvidia website by selecting the appropriate version for your operating system.

Step 2: Downloading Torch

Once the CUDA Toolkit is successfully installed on your system, you can move on to downloading the Torch deep learning framework. The official Torch website provides an easy-to-use installation script. Open a terminal window and use the following command to download the installation script:

```
$ git clone https://github.com/torch/distro.git ~/torch --recursive
```

This command will create a new directory named ‘torch’ in your home directory and clone the Torch repository.

Step 3: Installing Torch Dependencies

Before we can install Torch, we need to ensure that all necessary dependencies are in place. Run the following command to install the required packages:

```
$ cd ~/torch; bash install-deps
```

This command will install various dependencies such as cmake, curl, libjpeg, and more. It may prompt for your password if it requires administrative privileges.

Step 4: Building and Installing Torch

After successfully installing the dependencies, we can proceed with building and installing Torch. From the same `~/torch` directory, execute the following command:

```
$ ./install.sh
```

This command will initiate the Torch installation script. It may take some time to complete, as it will compile and configure the Torch framework according to your system specifications.

Step 5: Configuring CUDA Support

Now that Torch is installed, we need to configure it to enable CUDA support. Open the ‘.bashrc’ file located in your home directory using a text editor of your choice:

```
$ nano ~/.bashrc
```

Add the following lines to the end of the file:

```
export CUDA_HOME=/usr/local/cuda
export LD_LIBRARY_PATH="$CUDA_HOME/lib64:$LD_LIBRARY_PATH"
export PATH="$CUDA_HOME/bin:$PATH"
export TORCH_CUDA_ARCH_LIST="6.0" # Replace with your GPU architecture if different
```

Save the changes and exit the text editor. Make sure to replace ‘6.0’ in the last line with the architecture of your NVIDIA GPU. Refer to the official CUDA documentation for a list of supported architectures.
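
If a CUDA-enabled PyTorch happens to be installed on the same machine, it can report the GPU's compute capability, which is the value `TORCH_CUDA_ARCH_LIST` expects. This is only a convenience sketch under that assumption: the helper name `arch_list_entry` is hypothetical, while `torch.cuda.get_device_capability()` is an existing PyTorch call that returns a `(major, minor)` tuple.

```python
def arch_list_entry(capability):
    """Format a (major, minor) compute capability as 'major.minor'."""
    major, minor = capability
    return f"{major}.{minor}"


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed in this interpreter")
    else:
        if torch.cuda.is_available():
            # Device 0 is the first GPU visible to this process.
            print(arch_list_entry(torch.cuda.get_device_capability(0)))
        else:
            print("No CUDA device is visible to PyTorch")
```

Without PyTorch, the same number can be looked up for your GPU model in NVIDIA's CUDA documentation.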

Step 6: Verifying the Installation

To ensure that Torch with CUDA support is correctly installed, open a new terminal window and type the following command:

```
$ th
```

This command will open the Torch Lua interactive shell. Now, execute the following command to check if Torch is utilizing CUDA acceleration:

```
> require 'cutorch'
> x = torch.Tensor(3,3):uniform():cuda()
> print(x)
```

If you see a tensor object with random uniform values and a ‘CudaTensor’ label, congratulations! You have successfully installed Torch with CUDA support.

FAQs

Q: Can I install Torch with CUDA on Windows?

A: Yes, you can install Torch with CUDA on Windows. However, the process is more involved and requires additional steps. You will need to install MinGW and adjust some environment variables. Refer to the official Torch documentation for detailed instructions on Windows installation.

Q: What if I want to use a different version of CUDA?

A: The installation steps outlined here assume the use of the latest version of CUDA. If you wish to use an older version, make sure to download the corresponding CUDA Toolkit from the Nvidia website and update the ‘export CUDA_HOME’ line in the ‘.bashrc’ file accordingly.

Q: Can I utilize Torch with multiple GPUs?

A: Yes, Torch supports multi-GPU functionality. By default, all GPUs on the machine are visible to Torch. You can restrict which GPUs it may use by setting the ‘CUDA_VISIBLE_DEVICES’ environment variable before starting the process. For example, ‘CUDA_VISIBLE_DEVICES=1,2’ exposes only the GPUs with IDs 1 and 2.
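
The same environment variable works for PyTorch and can be set from Python, provided this happens before CUDA is initialized (in practice, before `import torch`). A minimal sketch follows; the helper name `restrict_gpus` is hypothetical.

```python
import os


def restrict_gpus(gpu_ids):
    """Expose only the given physical GPU IDs to CUDA for this process."""
    value = ",".join(str(gpu_id) for gpu_id in gpu_ids)
    os.environ["CUDA_VISIBLE_DEVICES"] = value
    return value


if __name__ == "__main__":
    # Call this before importing torch, otherwise it may have no effect.
    print("CUDA_VISIBLE_DEVICES =", restrict_gpus([1, 2]))
```

Inside the process, the visible GPUs are renumbered starting from 0, so physical GPU 1 becomes `cuda:0` in PyTorch.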

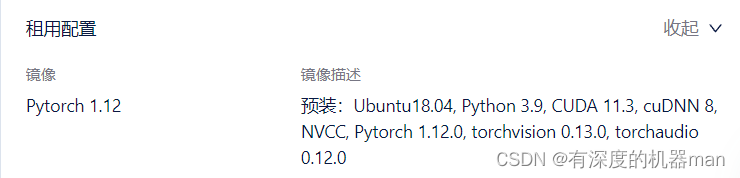

Q: Is it possible to install Torch with CUDA support using Anaconda?

A: Yes, Torch can be installed with CUDA support using Anaconda. You can use the Conda package manager to create a virtual environment and install Torch within it. Refer to the official Torch documentation for detailed instructions on installing with Anaconda.

Conclusion

Enabling CUDA support in Torch unleashes its full potential in terms of speed and performance, making it an ideal choice for deep learning tasks involving large datasets. By following the steps outlined in this article, you can ensure that Torch is properly configured to utilize your NVIDIA GPU and take advantage of the power CUDA provides. Now, you are ready to leverage the combined strength of Torch and CUDA for your deep learning assignments with confidence.


PyTorch

Deep learning has become an integral part of various fields, including computer vision, natural language processing, and robotics. With its ability to handle complex data, deep learning has led to groundbreaking advancements. One of the key frameworks for deep learning is PyTorch, an open-source machine learning library developed by Facebook’s AI Research lab. In this article, we will delve into the details of PyTorch, its features, and its contribution to the field of deep learning.

What is PyTorch?

PyTorch is a scientific computing framework that provides the building blocks for machine learning models and applications. It offers dynamic computational graphs, which allow models to be defined and modified on the go, enabling flexible and intuitive experimentation.

PyTorch’s Design Philosophy:

PyTorch has gained immense popularity due to its user-friendly design philosophy. Its major design goals include ease of use, modularity, and extensibility. These principles make it perfect for both researchers and developers who are constantly seeking new ways to innovate and enhance their models. By embracing Pythonic programming concepts, such as dynamic typing and eager execution, PyTorch minimizes the gap between research and production.

Key Features:

1. Dynamic Computational Graphs:

PyTorch’s unique feature is its dynamic computational graph. Unlike other frameworks, which rely on static graphs, PyTorch builds a computational graph on the fly as a model is being executed. This allows for greater flexibility and ease of debugging. Developers can define custom logic and modify models at runtime, making it ideal for tasks involving changing input shapes or non-trivial control flow.

2. Automatic Differentiation:

PyTorch boasts a powerful automatic differentiation engine called autograd. It allows developers to compute gradients effortlessly by keeping track of the operations performed on tensors. With autograd, PyTorch eliminates the need for manual gradient calculations, which can be error-prone. It simplifies the training process, especially when dealing with complex neural networks.
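
As a small illustration of autograd, the sketch below differentiates y = x² at a given point. The helper name `grad_of_square` is hypothetical, and it returns `None` when PyTorch is not installed; `requires_grad`, `backward()` and `.grad` are the standard autograd interface.

```python
def grad_of_square(value):
    """Return dy/dx for y = x**2 at x = value, or None without PyTorch."""
    try:
        import torch
    except ImportError:
        return None

    # requires_grad=True tells autograd to record operations on x.
    x = torch.tensor(float(value), requires_grad=True)
    y = x ** 2
    # backward() computes dy/dx and stores it in x.grad.
    y.backward()
    return x.grad.item()


if __name__ == "__main__":
    print(grad_of_square(3.0))
```

The analytical derivative of x² is 2x, so at x = 3 the gradient autograd reports should match 6. This example runs on the CPU, so it works on a build without CUDA support too.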

3. Neural Network Library:

PyTorch provides a rich neural network library with pre-built modules that simplify the creation of complex architectures. The nn package offers a wide range of layer types, such as convolutional, recurrent, and linear layers. These modules help in constructing neural networks with ease.

4. GPU Acceleration:

Deep learning often requires significant computational power, and PyTorch provides seamless integration with GPUs. It leverages CUDA, a parallel computing platform, to accelerate computations on NVIDIA GPUs. This GPU acceleration results in faster training and inference speeds, enabling researchers and developers to train models efficiently.

5. Distributed Training:

To handle the ever-increasing size of datasets and models, PyTorch offers distributed training capabilities. It supports parallel computations across multiple devices and machines, enabling researchers to scale their experiments. The torch.nn.DataParallel module splits each batch across multiple GPUs on a single machine, while torch.nn.parallel.DistributedDataParallel covers multi-process and multi-machine training.

6. Integration with NumPy:

PyTorch is well-integrated with NumPy, a powerful Python library for numerical operations. This allows developers to leverage their existing NumPy code easily. The seamless integration between the two libraries facilitates data preprocessing, post-processing, and visualization.

FAQs:

Q1. Is PyTorch suitable only for researchers or can developers also leverage its capabilities?

PyTorch caters to both researchers and developers. Its user-friendly design philosophy makes it easy for researchers to prototype and experiment with new ideas, while its extensibility and modularity make it suitable for building production-ready applications.

Q2. How does PyTorch compare to other deep learning frameworks like TensorFlow?

PyTorch and TensorFlow have their own strengths and weaknesses. PyTorch’s dynamic computational graph, along with its ease of use, make it a favorite among researchers. TensorFlow, on the other hand, provides static graphs and excels in deployment and production. Both frameworks have active communities and support a wide range of tasks.

Q3. Does PyTorch support deployment on mobile devices?

Yes, PyTorch supports deployment on mobile devices. With the introduction of TorchScript, a just-in-time (JIT) compiler, PyTorch models can be serialized and deployed on mobile platforms, opening up possibilities for running deep learning on edge devices.

Q4. Can PyTorch models be deployed in a production environment?

Absolutely, PyTorch models can be deployed in production. PyTorch provides tools like TorchServe and TorchScript, which help with model serving and deployment. These tools help researchers and developers bridge the gap between research and real-world applications.

Q5. Does PyTorch have a vast community and ecosystem?

PyTorch has a rapidly growing community. With active forums, documentation, and official tutorials, users can seek help or contribute to the community. Additionally, PyTorch boasts a wide ecosystem with various libraries, such as torchvision for computer vision and transformers for natural language processing, making it a versatile framework.

Conclusion:

PyTorch has revolutionized the field of deep learning with its dynamic computational graph, automatic differentiation, and ease of use. Its design philosophy bridges the gap between research and production, empowering researchers and developers to innovate and build state-of-the-art models. With its rich features and rapidly growing ecosystem, PyTorch remains one of the most popular frameworks for deep learning, shaping the future of AI.

Torch Not Compiled With CUDA Enabled in PyCharm

When it comes to deep learning and machine learning frameworks, PyTorch (imported in Python as `torch`) has gained immense popularity due to its flexibility and ease of use. It is an open-source machine learning library with extensive support for numerical computations with deep neural networks. However, users often see the “Torch not compiled with CUDA enabled” error when running their code from PyCharm, even when the same code works elsewhere.

In this section, we will look at why the error appears in PyCharm and how to work around it. But before we delve deeper, let’s first understand CUDA and PyCharm.

Understanding CUDA and PyCharm

CUDA, short for Compute Unified Device Architecture, is a parallel computing platform and application programming interface (API) model created by NVIDIA. It allows developers to harness the power of the GPU (Graphics Processing Unit) to accelerate computational tasks such as deep learning.

On the other hand, PyCharm is an integrated development environment (IDE) specifically designed for Python programming. It provides various tools and features that enhance productivity and code readability. PyCharm supports multiple frameworks, including Torch, enabling users to develop and debug machine learning models efficiently.

The Limitation: Torch Not Compiled With CUDA Enabled in PyCharm

PyCharm itself does not compile PyTorch and does not talk to CUDA. It runs your script with whichever Python interpreter is configured for the project. If that interpreter belongs to an environment that contains a CPU-only PyTorch build, any call that requires CUDA raises the error, even if a CUDA-enabled build is installed in another environment on the same machine.

Code that falls back gracefully will then execute on the CPU rather than the GPU, resulting in slower computations, especially for large-scale machine learning models. Consequently, users might not be able to leverage their GPU resources for deep learning tasks until the project interpreter is corrected.

Alternatives for Overcoming the Limitation

Besides pointing PyCharm at an interpreter that has a CUDA-enabled PyTorch build, there are alternative ways to run your code on the GPU. Let’s explore a few possible workarounds:

1. Execute the code from the command line: Run the script from a terminal in which the environment with the CUDA-enabled PyTorch build is activated. If it works there, configure PyCharm’s project interpreter to use the same environment.

2. Use Jupyter Notebooks: Another option is to use Jupyter Notebooks, which provide an interactive environment for data science and machine learning projects. A notebook runs code in its kernel’s environment, so models run on the GPU as long as that kernel uses a CUDA-enabled PyTorch build.

3. Utilize Google Colab or Kaggle: Platforms like Google Colab and Kaggle offer pre-configured environments that support PyTorch with CUDA. These platforms let you access GPUs and execute your code without configuring a local environment.

Frequently Asked Questions (FAQs)

Q1: Can I still use PyCharm with Torch even without CUDA support?

A: Absolutely, PyCharm is still an excellent choice for Torch development, as it offers many useful features for writing, debugging, and organizing your code. You can run your Torch code on the CPU within PyCharm and leverage its IDE capabilities.

Q2: Will running Torch code on the CPU impact the performance significantly?

A: Running Torch code on the CPU will generally result in slower computations, especially for complex models or large datasets. However, it is worth mentioning that you can still achieve satisfactory results in many scenarios without CUDA when working with smaller-scale problems.

Q3: Are there any plans to add CUDA support to PyCharm for Torch?

A: No separate CUDA support is needed in PyCharm. PyCharm is an IDE and delegates execution to the configured interpreter, so once the project uses an environment with a CUDA-enabled PyTorch build, GPU code runs from PyCharm just as it does from the command line.

In conclusion, the error in PyCharm comes from the interpreter the project uses, not from PyCharm itself. By selecting an interpreter with a CUDA-enabled PyTorch build, or by running the code from the command line, Jupyter Notebooks, or platforms like Google Colab and Kaggle, users can benefit from CUDA’s parallel processing capabilities and execute their deep learning models on the GPU.
