TF-TRT Warning: Could Not Find TensorRT

Introduction:

TensorRT is NVIDIA’s SDK for high-performance deep learning inference: an optimizer and runtime library that accelerates trained models on NVIDIA GPUs. (It is distinct from the TensorRT Inference Server, which has since been renamed Triton Inference Server.) TensorFlow integrates with it through TF-TRT, which lets developers keep working in TensorFlow while handing supported parts of a model to TensorRT for faster inference. When the warning “TF-TRT Warning: Could not find TensorRT” appears, it helps to understand what it means, why it occurs, and how to fix it. This article explains why TensorRT matters, what the warning means and what commonly causes it, how to troubleshoot it, which dependencies TensorRT needs, how to verify that it works with TensorFlow, which issues come up most often, and what the advantages and limitations of using TensorRT in machine learning projects are.

1. Explanation of TensorRT and its Importance in Machine Learning Applications:

TensorRT is NVIDIA’s inference optimizer and runtime library for deep learning models. Optimizing a model with TensorRT can significantly improve inference performance. TensorRT achieves this through techniques such as layer fusion, precision calibration (running layers in reduced precision such as FP16 or INT8), and kernel auto-tuning. These optimizations typically yield faster inference, lower latency, and reduced memory usage, which makes TensorRT a valuable tool for getting the most out of GPU hardware in machine learning applications.
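
Of these techniques, precision calibration is the easiest to illustrate in a few lines. The Python sketch below shows the simplest symmetric, max-abs form of INT8 quantization: pick a scale so that the largest absolute value maps to 127, then round every value to an integer code. It illustrates the idea only; TensorRT’s own INT8 calibrators choose scales with more sophisticated methods (such as entropy calibration), and the function names here are hypothetical.

```python
def int8_scale(values):
    """Return a symmetric scale so that the largest |value| maps to 127."""
    max_abs = max(abs(v) for v in values)
    if max_abs == 0:
        return 1.0  # all-zero tensor: any positive scale works
    return max_abs / 127.0


def quantize(values, scale):
    """Map floats to int8 codes in [-127, 127] using round-to-nearest."""
    return [max(-127, min(127, round(v / scale))) for v in values]


def dequantize(codes, scale):
    """Recover approximate floats from int8 codes."""
    return [c * scale for c in codes]


# Example: "calibrate" on a small batch of activations, then round-trip it.
activations = [0.5, -2.0, 1.0, 0.01]
scale = int8_scale(activations)
codes = quantize(activations, scale)
restored = dequantize(codes, scale)
```

For values inside the calibrated range, the round trip loses at most half a quantization step per value, which is the accuracy cost that a good calibration tries to keep small.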

2. Overview of the Warning Message: “TF-TRT Warning: Could not find TensorRT”:

The warning message “TF-TRT Warning: Could not find TensorRT” comes from TF-TRT, the TensorFlow-TensorRT integration. In recent TensorFlow releases it is commonly printed as soon as TensorFlow is imported on Linux, because TensorFlow tries to load the TensorRT runtime libraries at that point. It indicates that TensorRT cannot be found or is not installed correctly in the environment. TensorFlow itself still runs; what is lost is TF-TRT, and with it the performance optimizations that TensorRT offers. If you do not use TF-TRT, the warning can usually be ignored.
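
To see the condition behind the warning directly, you can ask the dynamic loader whether it can open the TensorRT runtime library (libnvinfer). The Python sketch below does this with ctypes; if none of the candidates loads, TensorFlow is very likely in the same situation. It is an approximation, not TensorFlow’s actual lookup code: the library names TensorFlow tries depend on the TensorFlow and TensorRT versions, so the candidate list is an assumption to adjust for your setup.

```python
import ctypes

# Assumed candidate names (Linux sonames for several TensorRT major versions).
# Adjust this list to match the TensorRT version your TensorFlow build expects.
DEFAULT_CANDIDATES = ("libnvinfer.so", "libnvinfer.so.10", "libnvinfer.so.8", "libnvinfer.so.7")


def try_load_tensorrt(candidates=DEFAULT_CANDIDATES):
    """Return the first library name the dynamic loader can open, or None."""
    for name in candidates:
        try:
            ctypes.CDLL(name)  # uses the platform's dynamic loader (dlopen on Linux)
        except OSError:
            continue  # not found, or one of its own dependencies is missing
        return name
    return None


if __name__ == "__main__":
    found = try_load_tensorrt()
    print(found or "TensorRT runtime library could not be loaded")
```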

3. Possible Reasons for the Warning Message and Troubleshooting Steps:

There are several potential reasons for the “TF-TRT Warning: Could not find TensorRT” message. A common one is a missing or incomplete TensorRT installation. To rule this out, make sure you followed NVIDIA’s installation instructions for your platform and that the installation directory actually contains the TensorRT libraries.

Another cause is a version mismatch. A TensorFlow release is typically built against specific CUDA, cuDNN, and TensorRT versions, and TF-TRT only works if the installed TensorRT matches what that build expects. Check the compatibility matrix provided by NVIDIA to confirm that the versions you are using are meant to work together.
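
Once you know which TensorRT version is installed (for example from the library file name) and which one your TensorFlow build expects (from the compatibility matrix), comparing them is straightforward. The sketch below compares major versions only, which is an assumption: check the matrix to see how strict the match must be for your versions. It deliberately contains no version data of its own, and the function names are hypothetical.

```python
def parse_version(text):
    """Turn '8.6.1' or a soname such as 'libnvinfer.so.8.6.1' into (8, 6, 1)."""
    if ".so." in text:
        text = text.split(".so.", 1)[1]  # keep only the version suffix
    return tuple(int(part) for part in text.split(".") if part.isdigit())


def major_matches(installed, expected_major):
    """True if the installed TensorRT major version equals expected_major.

    expected_major is whatever the compatibility matrix lists for your
    TensorFlow/CUDA combination; this sketch does not know those values.
    """
    version = parse_version(installed)
    return bool(version) and version[0] == expected_major
```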

Finally, the warning can stem from an incorrect path configuration: TensorRT is installed, but the dynamic loader cannot find it. Make sure the relevant environment variables and paths (on Linux, typically LD_LIBRARY_PATH) include the directory that contains the TensorRT libraries.

4. Understanding the Dependencies Required for TensorRT and their Installation:

TensorRT depends on other NVIDIA libraries that must be installed correctly for it to work, chiefly CUDA and cuDNN, and TF-TRT additionally requires a TensorFlow build whose expected versions match them. Making sure all of these dependencies are present, in compatible versions, helps avoid issues such as the “TF-TRT Warning: Could not find TensorRT” message.
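
A rough first check of these dependencies is to ask the system whether it can locate each library. The sketch below uses Python’s ctypes.util.find_library with the short names cudart (CUDA runtime), cudnn, and nvinfer (TensorRT). Those names are assumptions based on the usual Linux file names, and find_library’s search rules are platform-specific, so a None result is a strong hint rather than proof.

```python
import ctypes.util

# Short names as ctypes.util.find_library expects them (no "lib" prefix,
# no extension). These are assumed Linux names; adjust for your platform.
DEPENDENCIES = {"CUDA runtime": "cudart", "cuDNN": "cudnn", "TensorRT": "nvinfer"}


def check_dependencies(deps=DEPENDENCIES):
    """Map each dependency label to the library the system reports, or None."""
    return {label: ctypes.util.find_library(name) for label, name in deps.items()}


if __name__ == "__main__":
    for label, found in check_dependencies().items():
        print(f"{label:12} {found or 'NOT FOUND'}")
```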

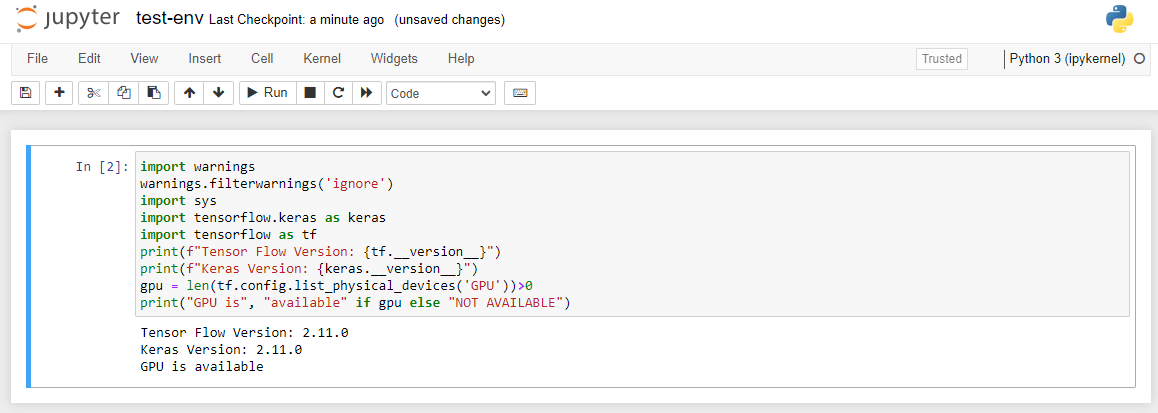

5. Verifying if TensorRT is Properly Installed and Compatible with TensorFlow:

Once TensorRT and all its dependencies are installed, verify both the installation itself and its compatibility with TensorFlow. This confirms that TensorFlow can actually load TensorRT and use it through TF-TRT, instead of running without it and printing the warning.
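
One way to run these checks from Python is sketched below. It records the TensorFlow version, whether the build has CUDA support, which GPUs TensorFlow sees, whether the TF-TRT converter module can be imported, and the version of the separate tensorrt Python package if one is installed. All imports are guarded, and the report keys are illustrative. A successful import of the TF-TRT module does not by itself prove that the TensorRT libraries can be loaded, so read it together with whether the warning still appears.

```python
def tf_trt_report():
    """Collect a few facts about the TensorFlow / TF-TRT setup.

    Every import is guarded, so the function also works where TensorFlow
    or the TensorRT Python package is not installed.
    """
    report = {"tensorflow": None, "built_with_cuda": None, "gpus": None,
              "tf_trt_module": False, "tensorrt_python": None}
    try:
        import tensorflow as tf
    except Exception:  # ImportError, or a broken install failing at import time
        return report
    report["tensorflow"] = tf.__version__
    report["built_with_cuda"] = tf.test.is_built_with_cuda()
    report["gpus"] = [d.name for d in tf.config.list_physical_devices("GPU")]
    try:
        # The module that provides TF-TRT's converter classes.
        from tensorflow.python.compiler.tensorrt import trt_convert  # noqa: F401
        report["tf_trt_module"] = True
    except Exception:
        pass
    try:
        import tensorrt  # NVIDIA's standalone Python bindings, if installed
        report["tensorrt_python"] = tensorrt.__version__
    except Exception:
        pass
    return report
```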

6. Common Issues and Errors Related to TensorRT Integration and their Solutions:

When integrating TensorRT with TensorFlow, users may run into various issues or errors. Common ones include incompatible TensorRT versions, missing environment variables, and conflicts between installed libraries. The remedies follow from the causes discussed above: install versions that the compatibility matrix lists as working together, point the environment at the correct library directories, and keep a single consistent set of CUDA, cuDNN, and TensorRT libraries.

7. Optimizing TensorFlow Models with TensorRT to Improve Inference Performance:

Combining TensorFlow and TensorRT lets developers optimize their models further. TensorRT applies graph transformations such as layer fusion, reduced-precision execution with precision calibration, and kernel auto-tuning to the parts of a model it supports, which can substantially improve inference performance. In practice, TF-TRT converts a TensorFlow SavedModel, replacing supported subgraphs with TensorRT-optimized operations and leaving the rest to TensorFlow.
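
A minimal sketch of such a conversion with TF-TRT’s TrtGraphConverterV2 follows. It assumes a TensorFlow 2.x build with TensorRT support, a release recent enough that precision_mode can be passed to the constructor directly (older releases used a conversion_params argument instead), and an existing SavedModel. The wrapper function name is hypothetical. INT8 is left out because it also requires a calibration input function.

```python
import os


def convert_saved_model(input_dir, output_dir, precision="FP16"):
    """Convert a TensorFlow SavedModel with TF-TRT and save the result.

    Assumes a TensorFlow 2.x build with TensorRT support in which
    TrtGraphConverterV2 accepts precision_mode directly.
    """
    if precision not in ("FP32", "FP16"):
        raise ValueError("this sketch only handles FP32 and FP16")
    if not os.path.isdir(input_dir):
        raise FileNotFoundError(f"SavedModel directory not found: {input_dir}")

    # Imported lazily so the checks above run even without TensorFlow.
    from tensorflow.python.compiler.tensorrt import trt_convert as trt

    converter = trt.TrtGraphConverterV2(
        input_saved_model_dir=input_dir,
        precision_mode=getattr(trt.TrtPrecisionMode, precision),
    )
    converter.convert()         # replace supported subgraphs with TRTEngineOp nodes
    converter.save(output_dir)  # write the converted SavedModel
    return output_dir
```

convert() only rewrites the graph; the TensorRT engines themselves are built when the converted model first runs, unless you build them ahead of time with the converter’s build() method and representative inputs.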

8. Advantages and Limitations of Using TensorRT with TensorFlow in Machine Learning Projects:

In the final section, we will discuss the advantages and limitations of integrating TensorRT with TensorFlow in machine learning projects. Understanding these pros and cons will help developers assess the potential benefits of using TensorRT in their projects and manage expectations accordingly.

FAQs:

Q1. What is TensorRT?

A1. TensorRT is NVIDIA’s inference optimizer and runtime library that accelerates deep learning models for faster inference performance on GPUs.

Q2. Why am I seeing the warning message “TF-TRT Warning: Could not find TensorRT”?

A2. This warning indicates that TensorRT cannot be located or is not installed correctly in your environment. TensorFlow still works, but TF-TRT, the TensorFlow-TensorRT integration, is unavailable.

Q3. How can I troubleshoot the “TF-TRT Warning: Could not find TensorRT” message?

A3. Troubleshooting steps include verifying the installation of TensorRT, ensuring compatibility with TensorFlow, and correctly configuring the relevant paths and environment variables.

Q4. What are the advantages of using TensorRT with TensorFlow?

A4. TensorRT offers optimization techniques that enhance inference performance, resulting in faster inference, reduced memory usage, and lower latency.

Q5. What are the limitations of using TensorRT with TensorFlow?

A5. Some limitations include compatibility issues between TensorFlow and certain TensorRT versions, potential conflicts with other installed libraries, and dependence on NVIDIA GPUs.

Conclusion:

TensorRT plays a critical role in accelerating machine learning models by optimizing inference performance. Understanding the warning “TF-TRT Warning: Could not find TensorRT” and its potential causes is essential for a successful integration with TensorFlow. By following the troubleshooting steps, verifying the installation and version compatibility, and addressing common issues, developers can make full use of TensorRT in their machine learning projects and improve the efficiency and performance of inference.

20 Installing And Using Tenssorrt For Nvidia Users

Keywords searched by users: tf-trt warning: could not find tensorrt

Categories: Top 87 Tf-Trt Warning: Could Not Find Tensorrt

See more here: nhanvietluanvan.com

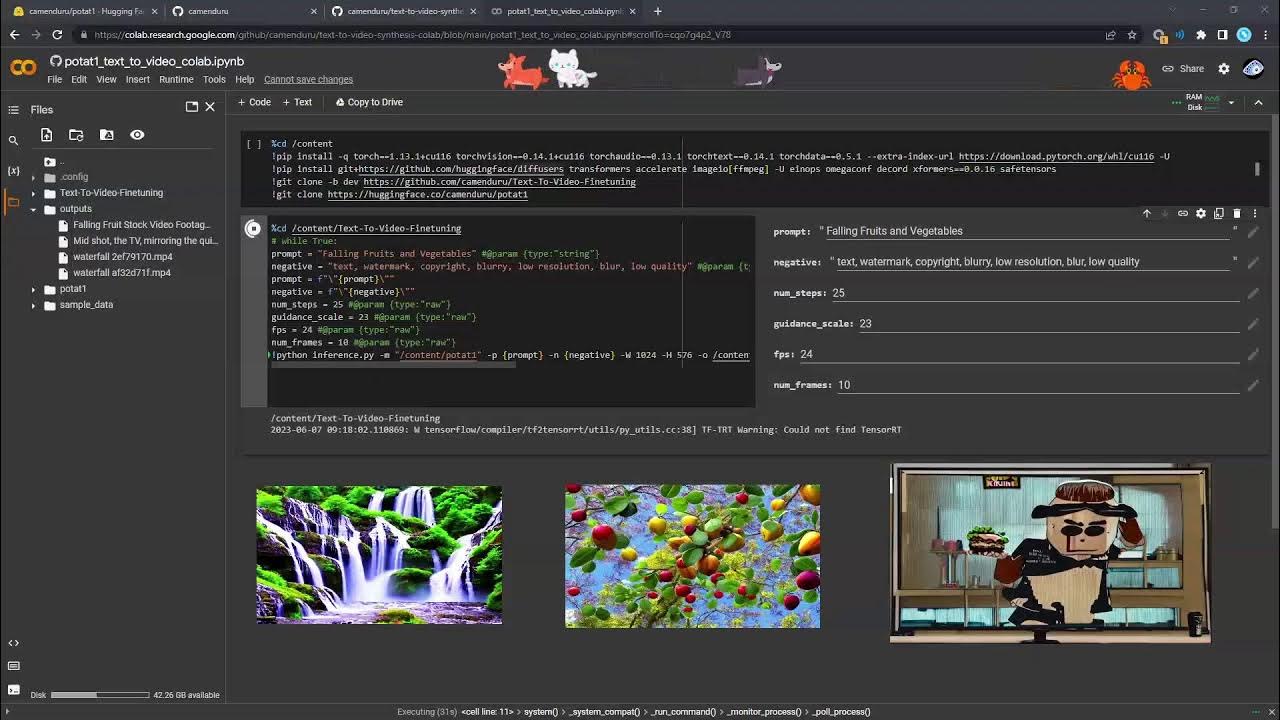

Images related to the topic tf-trt warning: could not find tensorrt

Found 37 images related to tf-trt warning: could not find tensorrt theme

Article link: tf-trt warning: could not find tensorrt.

Learn more about the topic tf-trt warning: could not find tensorrt.

- tensorflow object detection TF-TRT Warning: Could not find …

- TF-TRT Warning: Cannot dlopen some TensorRT libraries

- Getting started with TensorRT – IBM

- Φ Flow Cookbook

- deploy endpoint model machine learning

- Installing DLC, ran into problems with TensorFlow/libcudart.so …

See more: nhanvietluanvan.com/luat-hoc