glm.fit: Fitted Probabilities Numerically 0 or 1 Occurred

glm.fit is the function in the statistical programming language R that does the numerical work behind glm(). It fits generalized linear models by maximum likelihood, using iteratively reweighted least squares. It is one of the fundamental tools used in statistical modeling and is particularly useful for analyzing binary response data, where the outcome variable takes on one of two possible values.

The purpose of glm.fit is to estimate the parameters of a generalized linear model by maximizing the likelihood function. This involves finding the values of the model parameters that make the observed data most likely. Most users call glm(), which takes a model formula describing the relationship between the response variable and the predictors, together with the dataset containing the observed data. glm() builds the design matrix and response vector from these and passes them to glm.fit, which is why warnings from a glm() call are prefixed with "glm.fit:".
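To illustrate the relationship between the two functions, here is a minimal sketch on simulated data. The variable names and the simulated coefficients are assumptions made for the example.

```r
# Simulated data; the variable names are illustrative only.
set.seed(1)
x <- rnorm(100)
y <- rbinom(100, size = 1, prob = plogis(0.5 + x))

# Usual interface: a formula (and optionally a data frame).
fit_formula <- glm(y ~ x, family = binomial())

# Lower-level interface: an explicit design matrix and response vector.
X <- cbind(`(Intercept)` = 1, x = x)
fit_matrix <- glm.fit(x = X, y = y, family = binomial())

# Both routes should give the same coefficient estimates.
all.equal(unname(coef(fit_formula)), unname(fit_matrix$coefficients))
```

In everyday use there is rarely a reason to call glm.fit directly; the sketch only shows where the warning prefix comes from.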

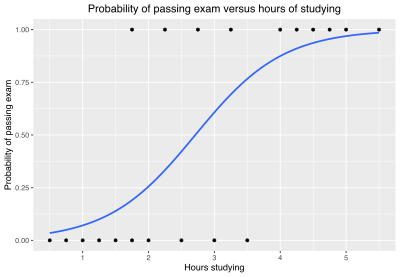

Exploring the concept of fitted probabilities in logistic regression

In logistic regression, glm.fit estimates the probabilities of a binary outcome variable, which can be interpreted as the likelihood of the outcome occurring given the predictor variables. These probabilities are referred to as fitted probabilities and are obtained by applying the logistic function to the linear predictor.

The logistic function, also known as the sigmoid function, maps any real number to a value between 0 and 1. In logistic regression, the linear predictor is calculated as a linear combination of the predictor variables, weighted by their corresponding estimated coefficients. The fitted probabilities are then obtained by applying the logistic function to the linear predictor.
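As a small sketch of this calculation, the code below applies the logistic function to a linear predictor. The coefficients and predictor values are hypothetical, chosen only for illustration.

```r
# Logistic (inverse-logit) function; base R provides it as plogis().
logistic <- function(eta) 1 / (1 + exp(-eta))

# Hypothetical coefficients and predictor values, for illustration only.
beta <- c(intercept = -1, x = 2)
x <- c(-2, 0, 0.5, 3)

eta <- unname(beta["intercept"] + beta["x"] * x)  # linear predictor
p <- logistic(eta)                                # fitted probabilities

# The hand-written function agrees with the built-in plogis().
all.equal(p, plogis(eta))
```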

Reasons behind the occurrence of numerically 0 or 1 fitted probabilities

In some cases, fitted probabilities that are numerically indistinguishable from 0 or 1 may occur in logistic regression. This can happen for different reasons, including complete (perfect) separation, quasi-complete separation, or observations with very large linear predictors.

Perfect separation and complete separation are two names for the same situation: some predictor, or some linear combination of predictors, divides the data so that every observation on one side has one outcome and every observation on the other side has the other. The likelihood then keeps increasing as the coefficients grow, so no finite maximum likelihood estimate exists, and the fitted probabilities are pushed to values numerically equal to 0 or 1.

Quasi-complete separation is the closely related case in which the outcomes are perfectly predicted except for observations that lie exactly on the boundary, for example when one level of a categorical predictor contains only one outcome. At least one coefficient again has no finite estimate, and glm.fit may return fitted probabilities numerically equal to 0 or 1 for the perfectly predicted observations.

Investigating the impact of perfect separation on fitted probabilities

Perfect separation can have a significant impact on the fitted probabilities in logistic regression. When perfect separation occurs, the maximum likelihood estimates do not exist as finite numbers: the reported coefficients are simply the very large values reached when the algorithm stopped, and the fitted probabilities are numerically equal to 0 or 1.

This can cause problems in both interpretation and inference. From an interpretation standpoint, the fit suggests that the predictor variables determine the outcome exactly in this sample, which is often a consequence of a small sample or sparse categories and may raise concerns about the reliability and generalizability of the model. From an inference standpoint, the diverging coefficient estimates come with extremely large standard errors, so Wald tests and confidence intervals based on them are not reliable.
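The situation can be reproduced with a small toy dataset. The sketch below uses made-up data in which a single predictor separates the outcomes, and collects the warnings so they can be inspected.

```r
# Toy data in which x separates the outcomes completely:
# every y = 0 has x <= 5 and every y = 1 has x >= 6.
d <- data.frame(x = 1:10, y = rep(c(0, 1), each = 5))

# Collect the warning messages instead of printing them.
msgs <- character(0)
fit <- withCallingHandlers(
  glm(y ~ x, family = binomial(), data = d),
  warning = function(w) {
    msgs <<- c(msgs, conditionMessage(w))
    invokeRestart("muffleWarning")
  }
)

msgs                 # warning text emitted during fitting
coef(fit)            # slope is very large in absolute value
range(fitted(fit))   # fitted values pushed to the edges of the unit interval
summary(fit)$coefficients[, "Std. Error"]  # standard errors are huge
```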

Addressing the issue of complete separation and its effect on logistic regression

Complete separation poses a challenge in logistic regression because the likelihood has no finite maximum. glm.fit keeps increasing the coefficients until it reaches its iteration limit or its deviance-based stopping rule, so it may warn that the algorithm did not converge, or it may stop with very large coefficients and standard errors. In both cases it reports fitted probabilities numerically equal to 0 or 1.

To address the issue of complete separation, various techniques can be employed. One approach is to modify the model specification, for example by dropping or recoding the predictor responsible for the separation, collapsing sparse categories, or collecting more data. Another approach is to use penalized estimation methods, such as ridge regression or lasso, which introduce a penalty term to prevent extreme coefficient estimates.

Techniques for handling fitted probabilities numerically equal to 0 or 1

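When glm.fit returns fitted probabilities numerically equal to 0 or 1, downstream calculations that take logarithms of them, such as log-loss, can return infinite or undefined values. A common workaround is to bound the probabilities away from 0 and 1 by a small constant, sometimes described as adding a “pseudocount,” before such calculations. This keeps the arithmetic stable, but it does not remove the underlying separation.

Another technique is to use a modified version of the logistic regression model, such as Firth’s penalized likelihood. It addresses the problem of separation and extreme coefficients by adding a penalty term to the log-likelihood, which reduces the bias of the estimates and keeps them finite even when the data are separated. In R, this approach is available in add-on packages such as logistf and brglm2.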
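One way to read this advice is as clipping the probabilities before taking logarithms. The sketch below assumes that interpretation; the helper names `clip_prob` and `log_loss` and the threshold `1e-15` are choices made for this example, not part of base R.

```r
# Hypothetical helper: bound probabilities away from exactly 0 and 1.
clip_prob <- function(p, eps = 1e-15) pmin(pmax(p, eps), 1 - eps)

# Average negative log-likelihood for 0/1 outcomes.
log_loss <- function(y, p) -mean(y * log(p) + (1 - y) * log(1 - p))

y <- c(0, 1, 1, 0)
p <- c(0, 1, 0.8, 0.3)   # includes probabilities of exactly 0 and 1

log_loss(y, p)             # not a number, because 0 * log(0) is NaN
log_loss(y, clip_prob(p))  # finite once the probabilities are clipped
```

Clipping only stabilises the arithmetic. It does not change the fitted model or cure separation.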
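To make the idea concrete, here is a minimal base-R sketch of a Firth-type fit. It is not the implementation used by any package: it assumes the penalized log-likelihood is the ordinary log-likelihood plus half the log-determinant of the Fisher information, and it maximizes that with optim(). The toy data and the centering of the predictor are choices made for the example.

```r
# Toy data with complete separation; the predictor is centered for stability.
d <- data.frame(x = 1:10, y = rep(c(0, 1), each = 5))
d$xc <- d$x - mean(d$x)
X <- model.matrix(~ xc, data = d)
y <- d$y

# Negative penalized log-likelihood:
#   -( loglik(beta) + 0.5 * log det(X' W X) ),  with W = diag(p * (1 - p))
neg_pen_loglik <- function(beta) {
  eta <- drop(X %*% beta)
  loglik <- sum(y * eta - log1p(exp(eta)))
  w <- plogis(eta) * (1 - plogis(eta))
  info <- crossprod(X, X * w)   # Fisher information X' W X
  logdet <- as.numeric(determinant(info, logarithm = TRUE)$modulus)
  -(loglik + 0.5 * logdet)
}

firth <- optim(c(0, 0), neg_pen_loglik, method = "BFGS")
firth$par          # finite estimates despite the separation
firth$convergence  # 0 means optim() reports successful convergence
```

For real analyses a maintained package is the safer choice, since it also provides appropriate standard errors and confidence intervals.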

Overcoming the challenges of convergence issues in glm.fit

Convergence issues in glm.fit can arise due to various reasons, such as perfect separation or numerical instability. When convergence fails, it is important to investigate the underlying causes and implement appropriate solutions.

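One common approach is to increase the maximum number of iterations allowed for the estimation process. This is done through the “maxit” setting of glm.control(), which is passed to glm() or glm.fit via the control argument; the default is 25. More iterations help when the algorithm is merely slow, but under separation they only let the coefficients grow further.

Another approach is to change how the model is fitted. glm.fit itself uses iteratively reweighted least squares, which for logistic regression coincides with both the Newton-Raphson method and the Fisher scoring method, so there is no alternative optimizer to select inside it. However, glm() accepts a method argument naming a different fitting function, and add-on packages supply such functions, for example bias-reduced fitting that remains finite under separation.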
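A minimal sketch of raising the iteration limit, assuming it is supplied through glm.control(). The simulated data are illustrative only.

```r
# Simulated, well-behaved data (no separation).
set.seed(10)
d <- data.frame(x = rnorm(150))
d$y <- rbinom(150, 1, plogis(0.2 + 0.9 * d$x))

# Raise the iteration limit from the default through glm.control().
fit <- glm(y ~ x, family = binomial(), data = d,
           control = glm.control(maxit = 100))

fit$converged   # did the fitting algorithm report convergence?
fit$iter        # number of iterations actually used

# The same control object can be given to glm.fit directly.
fit_low <- glm.fit(x = model.matrix(~ x, data = d), y = d$y,
                   family = binomial(),
                   control = glm.control(maxit = 100))
fit_low$converged
```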

Assessing the implications and limitations of fitted probabilities in logistic regression

While fitted probabilities provide valuable insights into the relationship between predictor variables and a binary outcome, it is essential to consider their implications and limitations.

Fitted probabilities are estimates based on the observed data and are subject to sampling variability. It is important to interpret them with caution and take into account the associated standard errors and confidence intervals.

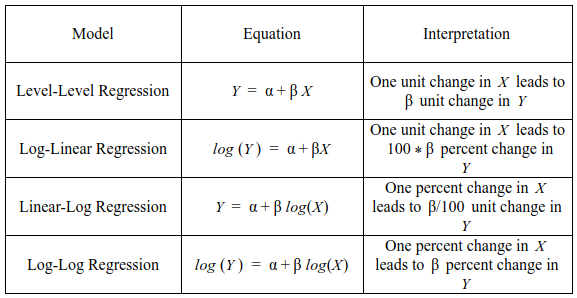

Furthermore, assuming linearity and additivity of the predictor variables may not always hold in practice. It is crucial to assess the model assumptions and consider other possible functional forms or interactions that may better capture the underlying relationship.

Practical considerations for interpreting and utilizing fitted probabilities in glm.fit results

Interpreting and utilizing fitted probabilities in glm.fit results require careful consideration of the specific research question and the context in which the model is applied.

For binary outcomes, the fitted probabilities can be interpreted as the probability of the event occurring, given the predictor variables. These probabilities can be used to make predictions and to assess the impact of different predictor variable values on the likelihood of the event.

It is also important to consider the confidence intervals associated with the fitted probabilities, as they provide information about the uncertainty in the estimates. Additionally, graphical tools, such as receiver operating characteristic (ROC) curves, can aid in assessing the discriminative ability of the model.
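One common way to obtain such intervals is to build them on the scale of the linear predictor and then transform the endpoints, which keeps them inside the unit interval. The sketch below assumes simulated data and a 95% Wald-type interval.

```r
# Simulated data for illustration.
set.seed(42)
d <- data.frame(x = rnorm(200))
d$y <- rbinom(200, 1, plogis(-0.5 + 1.2 * d$x))
fit <- glm(y ~ x, family = binomial(), data = d)

# Predict on the link (log-odds) scale with standard errors.
new <- data.frame(x = c(-1, 0, 1))
pr <- predict(fit, newdata = new, type = "link", se.fit = TRUE)

# Wald interval on the link scale, mapped back to probabilities.
z <- qnorm(0.975)
ci <- data.frame(
  x     = new$x,
  prob  = plogis(pr$fit),
  lower = plogis(pr$fit - z * pr$se.fit),
  upper = plogis(pr$fit + z * pr$se.fit)
)
ci
```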

Overall, fitted probabilities obtained from glm.fit play a vital role in understanding the relationships between predictor variables and binary outcomes. However, it is crucial to be aware of the potential issues and limitations associated with fitted probabilities that are numerically equal to 0 or 1, and to employ appropriate techniques to handle these situations.

What Does Fitted Probabilities Numerically 0 Or 1 Occurred Mean?

In statistical modeling and regression analysis, it is not uncommon to encounter a scenario where the fitted probabilities are numerically 0 or 1, resulting in the message “fitted probabilities numerically 0 or 1 occurred.” This occurrence carries important implications and understanding its significance is crucial for interpretation and decision-making based on statistical models.

To delve into this topic in depth, let us first explore the concept of fitted probabilities and their interpretation. Fitted probabilities are the calculated probabilities obtained from a statistical model, representing the likelihood of an event or outcome occurring. These probabilities are estimated using various mathematical techniques such as logistic regression, which is commonly employed in binary classification problems.

When we encounter the message “fitted probabilities numerically 0 or 1 occurred,” it means that, for one or more cases in the dataset, the fitted probability is so close to 0 or 1 that it cannot be distinguished from that value in floating-point arithmetic. In theory the logistic function never returns exactly 0 or 1, where 0 indicates an impossible event and 1 represents a certain event. In practice, a sufficiently large positive or negative linear predictor yields a value within rounding error of those limits, and glm.fit issues a warning, not an error, when that happens.

There are several reasons why such extreme probabilities may occur. The most common one is separation. Complete separation occurs when a predictor variable or combination of variables perfectly predicts the outcome variable, so that the two outcome groups do not overlap. This is more likely with small samples, rare outcomes, sparse categorical predictors, or many predictors relative to the number of observations. In these cases the decision boundary is too clear rather than unclear: the likelihood keeps improving as the coefficients grow, so the algorithm drives them toward infinity and assigns probabilities of 0 or 1 to the affected cases.
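The kind of tolerance check involved can be sketched as follows. The threshold of ten times the machine epsilon is an assumption based on the R source code and may differ between versions.

```r
# Assumed tolerance: ten times the machine epsilon (about 2.2e-15).
eps <- 10 * .Machine$double.eps

# Probabilities for a range of linear predictor values.
eta <- c(-40, -2, 0, 3, 40)
mu <- plogis(eta)

# Flag values that are numerically indistinguishable from 0 or 1.
flag <- mu < eps | mu > 1 - eps
data.frame(eta = eta, mu = mu, flagged = flag)
```

Only the two extreme linear predictors are flagged, which shows that the warning is about numerical precision and not about the probabilities being logically impossible.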

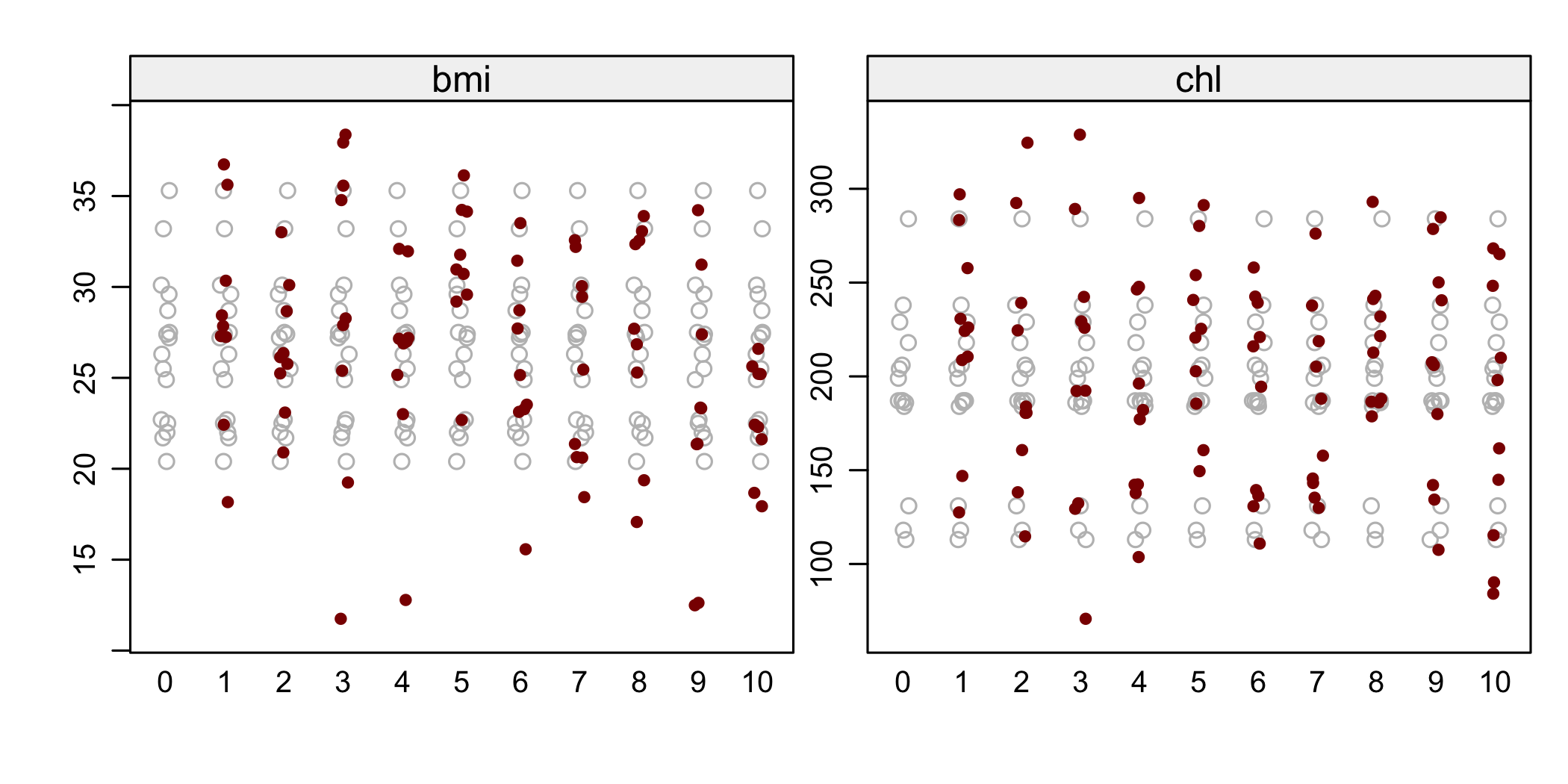

Another reason for extreme probabilities is the presence of observations with extreme predictor values. Even without separation, a very large linear predictor gives a probability within rounding error of 0 or 1, so a few such observations are enough to trigger the warning while the coefficient estimates remain finite and usable. Additionally, if the dataset is small or imbalanced, meaning that there are far fewer cases with one outcome than with the other, separation becomes more likely because few cases of the rarer outcome are available to overlap with the other group.

It is crucial to interpret and handle the occurrence of fitted probabilities numerically 0 or 1 appropriately. When the warning is caused by separation, it is not advisable to take the coefficients and their standard errors at face value. Instead, it is recommended to consider alternative approaches to obtain more reliable estimates. One common strategy is to use regularization techniques such as ridge regression or LASSO, which introduce a penalty term to prevent extreme coefficient estimates.

Now, let us address some frequently asked questions related to this topic:

Q: Can extreme probabilities of 0 or 1 impact the overall predictive performance of the model?

A: Yes, extreme probabilities can have a significant impact on the model’s performance. These extreme values may lead to incorrect predictions and classification errors. It is essential to identify and address the issue to improve the model’s accuracy.

Q: How can I determine whether the extreme probabilities are a result of separation or other issues?

A: Conducting a thorough analysis of your dataset and understanding the relationships between predictor and outcome variables can help identify whether the issue is related to separation or other factors. Consulting with a statistical expert or employing diagnostic techniques specific to your modeling approach can also assist in the identification process.

Q: Are there any specific diagnostic tools or techniques that can be used to identify the presence of extreme probabilities?

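A: Yes, although general goodness-of-fit summaries such as the Hosmer-Lemeshow test, AIC, and BIC do not identify separation. More direct checks are to look in the model summary for very large coefficient estimates with even larger standard errors, to cross-tabulate each categorical predictor against the outcome and look for empty cells, and to compare the range of each numeric predictor between the two outcome groups. Dedicated tools also exist, such as the detectseparation package. Additionally, visually analyzing the relationships between predictor variables and the outcome can help identify potential issues.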
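Two simple checks of this kind can be sketched in base R. The helper name `separates_completely` is hypothetical, and the check handles only a single numeric predictor, so it will miss separation that arises from a combination of variables.

```r
# Hypothetical helper: TRUE when one numeric predictor completely separates
# a 0/1 outcome, i.e. the ranges of the two outcome groups do not overlap.
separates_completely <- function(x, y) {
  r0 <- range(x[y == 0])
  r1 <- range(x[y == 1])
  r0[2] < r1[1] || r1[2] < r0[1]
}

y <- rep(c(0, 1), each = 5)
separates_completely(1:10, y)                             # TRUE
separates_completely(c(1, 2, 3, 7, 8, 4, 5, 6, 9, 10), y)  # FALSE

# For a categorical predictor, an empty cell in the cross-tabulation
# points to quasi-complete separation.
g <- factor(c("a", "a", "a", "b", "b", "b", "b", "b", "b", "b"))
tab <- table(group = g, outcome = y)
tab
any(tab == 0)
```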

Q: Should extreme probabilities always be treated as false and excluded from further analysis?

A: While extreme probabilities are usually unreliable, it is not advisable to exclude them from analysis without proper investigation. It is important to evaluate the reasons behind their occurrence and consider alternative modeling approaches. Ignoring extreme probabilities without a thorough understanding of their origin may result in biased or suboptimal decision-making.

In summary, encountering the message “fitted probabilities numerically 0 or 1 occurred” in statistical modeling indicates the presence of extreme probabilities that can significantly affect the model’s predictive performance. Understanding the underlying causes of these extreme values and employing appropriate strategies to address them are essential for accurate interpretation and decision-making based on statistical models.

What Is Fitted Probabilities?

In statistics and data analysis, fitted probabilities refer to the estimated probabilities of an event or outcome based on a statistical model. These probabilities are calculated using the observed data and the parameters estimated from the model. Fitted probabilities are commonly used in several statistical techniques such as logistic regression, probability models, and survival analysis.

When conducting a statistical analysis, researchers are often interested in understanding the likelihood or probability of a particular event occurring. For example, in logistic regression, the goal is to estimate and predict the probability of an event or outcome (e.g., success or failure) based on a set of predictor variables (e.g., age, gender, education level). Fitted probabilities provide a way to quantify and estimate these probabilities.

How are Fitted Probabilities Calculated?

To calculate fitted probabilities, statistical models are used to estimate the probability distribution of the response variable given the predictor variables. These models can be linear models, non-linear models, or more complex models depending on the nature of the data and the research question.

For example, in logistic regression, the response variable follows a binomial distribution (success or failure) and the model expresses the log odds of success as a linear function of the predictor variables. The log odds are then transformed into probabilities using the logistic function, which maps any real number onto the probability scale between 0 and 1.

The model parameters (e.g., the coefficients in the case of logistic regression) are estimated from the observed data. These estimated parameters are then plugged into the model equation to calculate the fitted probabilities for each observation in the dataset.
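The plug-in step can be checked directly against what R stores in a fitted model. The sketch below uses simulated data; the variable names `age` and `success` are assumptions for the example.

```r
# Simulated data for illustration.
set.seed(7)
d <- data.frame(age = round(runif(50, 20, 70)))
d$success <- rbinom(50, 1, plogis(-3 + 0.06 * d$age))

fit <- glm(success ~ age, family = binomial(), data = d)

# Plug the estimated coefficients into the model equation by hand:
# linear predictor first, then the logistic transformation.
eta <- drop(model.matrix(fit) %*% coef(fit))
p_manual <- plogis(eta)

# The result should match the fitted values stored in the model object.
all.equal(unname(p_manual), unname(fitted(fit)))
```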

What are the Applications of Fitted Probabilities?

Fitted probabilities have a wide range of applications in various fields, including:

1. Predictive Modeling: Fitted probabilities are used to estimate the probability of an event occurring, which is crucial for predictive modeling. For example, in credit scoring, fitted probabilities can be used to assess the likelihood of a borrower defaulting on a loan based on their credit history.

2. Risk Assessment: Fitted probabilities are often used in risk assessment models to estimate the probability of an adverse event happening. For instance, in medical research, these probabilities are used to assess the risk of developing certain diseases based on demographic characteristics and other risk factors.

3. Decision Making: Fitted probabilities provide valuable information for decision making. By understanding the likelihood of different outcomes, decision-makers can make more informed choices. For example, in marketing campaigns, fitted probabilities can be used to target customers who are more likely to respond positively to a promotional offer.

4. Survival Analysis: Fitted probabilities are used in survival analysis to estimate the probability of surviving or experiencing an event of interest over a specific time period. In medical research, these probabilities help estimate patients’ survival rates based on various factors such as treatment, age, and disease stage.

FAQs about Fitted Probabilities:

Q: Are fitted probabilities always accurate?

A: Fitted probabilities are estimates based on statistical models. While they provide useful insights, they are not always perfectly accurate. The accuracy of fitted probabilities depends on several factors, including the quality and representativeness of the data, the appropriateness of the model, and the assumptions made.

Q: Can fitted probabilities be greater than 1 or less than 0?

A: In a logistic regression, no. The logistic function always returns a value between 0 and 1, although rounding can make it numerically equal to either bound. Fitted values outside this range can occur in models that do not constrain the mean, such as a linear probability model fitted by ordinary least squares. In such cases, it is important to interpret them with caution and consider the limitations of the model.

Q: How can we assess the accuracy of fitted probabilities?

A: There are various methods to assess the accuracy of fitted probabilities, including goodness-of-fit tests, cross-validation, and diagnostic plots. These techniques help evaluate how well the model fits the data and whether the estimated probabilities are reliable.

Q: Can fitted probabilities be used for causal inference?

A: Fitted probabilities are mainly used for predictive and descriptive purposes rather than causal inference. While they can provide valuable insights into the likelihood of certain events, establishing causality often requires additional methods such as randomized controlled trials or carefully designed experiments.

Q: Are fitted probabilities sensitive to outliers?

A: Depending on the statistical model and the nature of the outliers, fitted probabilities can be sensitive to extreme observations. Outliers can have a significant impact on the model estimates, including the parameters and the probabilities calculated. Therefore, it is important to assess the influence of outliers and, if needed, consider robust modeling techniques to make the estimates more robust.

In conclusion, fitted probabilities play a crucial role in statistical modeling and data analysis. They provide valuable insights into the probabilities of specific events occurring based on observed data and estimated model parameters. Fitted probabilities have a wide range of applications in predictive modeling, risk assessment, decision making, and survival analysis. By understanding and interpreting fitted probabilities correctly, researchers and decision-makers can make informed choices and gain deeper insights into the underlying processes.

Error: Data And Reference Should Be Factors With The Same Levels

Introduction

In the world of data analysis, ensuring that your data is accurate and reliable is of utmost importance. However, there are times when errors can occur, leading to inaccurate results. One such error is when the data and reference should be factors with the same levels. This article aims to explore this error in detail, explaining what it means, why it is important to address, and how to fix it. Additionally, a FAQ section is provided to answer common questions about this error.

Understanding the Error

When working with data, it is common to use factors to represent categorical variables. Factors are data structures in R that define categorical variables with a fixed set of levels or categories. The error message discussed here is raised by the confusionMatrix() function in the caret package, whose data argument holds the predicted classes and whose reference argument holds the true classes. The error occurs when these two arguments are not both factors with the same levels.

To understand why this matters, consider what the function has to do. It cross-tabulates predictions against true values, so each predicted level must correspond to a level of the reference. If one of the inputs is a character or numeric vector, or if a level is missing from the predictions because the model never predicted it, the table cannot be built consistently. Therefore, it is vital to address this error to ensure accurate and reliable results.

Causes of the Error

There are several potential causes for the error of having data and reference factors with different levels:

1. Missing data: If there are missing values in the data, it could result in different levels compared to the reference. This typically occurs when data is collected from different sources or at different points in time.

2. Differing categories: If the categories in the data do not match exactly with the reference, such as having additional categories or missing ones, it can cause a mismatch in the levels.

3. Typographical errors: Simple typographical or spelling mistakes can create new levels in the data that do not align with the reference.

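Related level mismatches elsewhere in R appear under different messages, such as “factor has new levels” when predicting on new data or “invalid factor level, NA generated” when assigning a value that is not an existing level. All of these indicate that the levels in the data and the reference do not match.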

Resolving the Error

To address the error of having data and reference factors with different levels, the following steps can be taken:

1. Inspect the data: Examine the levels of both the data and reference factors thoroughly. Identify the differences, such as missing or extra levels.

2. Update the reference: If the error is due to missing levels or categories in the reference, update it accordingly. Make sure to include all necessary levels to ensure consistency with the data.

3. Recode the data: If the error is caused by differing categories or additional levels in the data, it may require re-coding the data. This process involves reclassifying or modifying the levels to match the reference.

4. Merge or subset the data: In some cases, it may be necessary to merge or subset the data to ensure the levels align correctly. This can be achieved using functions like `merge()` or `subset()` in R.

5. Correct typographical errors: If the error is due to typographical errors, correct them in the data to ensure consistent levels with the reference.
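The inspection and recoding steps above can be sketched in base R. The vectors `reference` and `predicted` are made-up examples; in practice the message comes from confusionMatrix() in the caret package, which is not needed to run this sketch.

```r
# True classes, stored as a factor with two levels.
reference <- factor(c("no", "yes", "yes", "no", "yes"),
                    levels = c("no", "yes"))

# Hypothetical predictions in which only one class was ever predicted.
predicted <- c("no", "no", "no", "no", "no")

# Converting without specifying levels keeps only the observed value.
pred_bad <- factor(predicted)
levels(pred_bad)
identical(levels(pred_bad), levels(reference))  # FALSE

# Converting with the reference levels makes the two factors compatible.
pred_ok <- factor(predicted, levels = levels(reference))
identical(levels(pred_ok), levels(reference))   # TRUE

# The cross-tabulation now has a row for every level.
tab <- table(predicted = pred_ok, reference = reference)
tab
```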

Frequently Asked Questions (FAQs)

Q1. What can happen if the error of having different levels in data and reference factors is not addressed?

A1. If this error is not resolved, it can lead to incorrect comparisons, misleading visualizations, or the exclusion of values, ultimately resulting in flawed analysis.

Q2. Can this error occur in any statistical software or only in R?

A2. This error can occur in any statistical software that uses factors to represent categorical variables. However, the specific error messages may differ across software.

Q3. How can I prevent this error from happening in the first place?

A3. To prevent this error, it is crucial to have a well-defined reference before collecting or analyzing the data. Ensuring consistency in the levels between the data and reference from the beginning can mitigate this issue.

Q4. Can automated tools help identify and resolve this error?

A4. Yes, there are automated tools available that can assist in identifying and resolving this error. These tools can compare levels, highlight differences, and suggest appropriate updates to ensure consistency.

Q5. Are there any best practices to follow when working with factors in R?

A5. Yes, some best practices include carefully defining factor levels, documenting any changes made, and ensuring constant communication between data collectors and analysts to maintain consistency.

Conclusion

Ensuring that the levels of data and reference factors align is crucial for accurate analysis and proper interpretation of results. This article has outlined the error of having different levels in data and reference factors, its causes, and steps to address and resolve it. By understanding this error and following the recommended practices, data analysts can minimize errors, improve the reliability of their findings, and make more informed decisions based on accurate data.

Dispersion Parameter For Binomial Family Taken To Be 1

“(Dispersion parameter for binomial family taken to be 1)” is a line printed by summary() for a binomial model fitted with glm(). The dispersion parameter scales the variance of the response relative to the variance implied by the chosen family. In this article, we will look at the dispersion parameter for the binomial family, where it is taken to be 1. We will discuss its meaning, interpretation, and implications, and address frequently asked questions surrounding this topic.

Understanding the Dispersion Parameter:

When working with binomial data, which involves yes-or-no outcomes or binary events, the dispersion parameter is a multiplier on the variance implied by the distribution. For a binomial count with n trials and success probability p, the variance is n p (1 - p) times the dispersion. Values above 1 correspond to overdispersion, meaning more variability than the binomial distribution predicts, and values below 1 correspond to underdispersion.

In the binomial family, the dispersion parameter is fixed at 1 by definition, because the binomial distribution has no free variance parameter: the mean determines the variance. The line in the summary output states this convention. It is not an estimate, and it is not evidence about how well the model fits. The same line appears whether the fit is good or poor.
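The difference between a fixed and an estimated dispersion can be seen by fitting the same model with the binomial and quasibinomial families. The sketch below simulates grouped data with deliberately extra variation between groups; all names and simulation settings are assumptions for the example.

```r
# Grouped data: 40 groups of 20 trials, with success probabilities that
# vary around the logistic curve (beta-binomial style overdispersion).
set.seed(3)
n <- rep(20, 40)
x <- seq(-2, 2, length.out = 40)
p <- rbeta(40, shape1 = 5 * plogis(x), shape2 = 5 * (1 - plogis(x)))
successes <- rbinom(40, size = n, prob = p)

fit_bin   <- glm(cbind(successes, n - successes) ~ x, family = binomial())
fit_quasi <- glm(cbind(successes, n - successes) ~ x, family = quasibinomial())

summary(fit_bin)$dispersion    # fixed at 1 by the binomial family
summary(fit_quasi)$dispersion  # estimated from the data

# The estimate is the Pearson chi-square statistic over the residual df.
pearson_disp <- sum(residuals(fit_bin, type = "pearson")^2) / df.residual(fit_bin)
pearson_disp
```

Both fits give the same coefficients. Only the standard errors differ, scaled by the square root of the estimated dispersion.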

Interpreting the Dispersion Parameter in the Binomial Family:

In the context of the binomial family, where data is often binary (such as success or failure), a dispersion parameter of 1 should not be read as an indication that the model explains the observed outcomes well. It only says that standard errors, z statistics, and p-values were computed under the binomial variance assumption.

Whether that assumption is reasonable has to be checked separately. With grouped data, where each row summarizes several trials, the Pearson chi-square statistic or the residual deviance divided by the residual degrees of freedom gives a rough estimate of the dispersion, and values well above 1 suggest overdispersion. With ungrouped 0/1 data this ratio is not informative about overdispersion.

Implications of a Dispersion Parameter of 1:

Fixing the dispersion parameter at 1 for the binomial family affects various aspects of statistical analysis and modeling.

1. Goodness-of-Fit Testing: With grouped data, goodness-of-fit tests compare the residual deviance or the Pearson statistic with a chi-square distribution, which is only appropriate if the dispersion really is 1. A statistic much larger than its degrees of freedom suggests either a misspecified model or overdispersion.

2. Model Comparison: Likelihood ratio (chi-square) tests between nested binomial models rely on the dispersion being 1. When the dispersion is estimated instead, as with the quasibinomial family, F tests are used for the comparison.

3. Standard Errors of Parameter Estimates: Standard errors are computed with the dispersion set to 1. If the data are in fact overdispersed, these standard errors are too small.

4. Predictive Accuracy: Fixing the dispersion at 1 says nothing about how closely the predicted probabilities match the observed data. Predictive accuracy has to be assessed separately, for example on held-out data.

Frequently Asked Questions:

Q1: Can the dispersion parameter in the binomial family be other than 1?

Yes. In R, the quasibinomial family estimates the dispersion from the data instead of fixing it at 1. Estimating the dispersion parameter accounts for potential overdispersion or underdispersion in the data, indicating whether the variability is higher or lower than what is expected by the model.

Q2: Is a dispersion parameter of 1 always desirable?

A dispersion parameter of 1 is an assumption of the binomial model, not a property to aim for, and it might not reflect the actual variation in the data. It is appropriate when the observed variation is consistent with binomial variation around the predicted probabilities. However, if there is evidence of overdispersion, which suggests additional variation beyond what the model accounts for, estimating the dispersion or using a model that allows for extra variation would be more appropriate.

Q3: How can one test for overdispersion?

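For grouped binomial data, overdispersion can be assessed by comparing the Pearson chi-square statistic or the residual deviance with a chi-square distribution on the residual degrees of freedom, or by a likelihood ratio test against a model that allows extra variation. These tests assess the goodness-of-fit between the model and the data, evaluating whether there is unexplained variation beyond what is expected. They are not informative for ungrouped 0/1 data.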
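A Pearson-based check can be sketched as follows, assuming grouped data and that the chi-square approximation is adequate for the group sizes. The data are simulated without extra variation, so no particular result is claimed.

```r
# Grouped data: 30 groups of 25 trials, simulated from a plain binomial model.
set.seed(11)
n <- rep(25, 30)
x <- seq(-1.5, 1.5, length.out = 30)
successes <- rbinom(30, size = n, prob = plogis(0.3 + 0.8 * x))

fit <- glm(cbind(successes, n - successes) ~ x, family = binomial())

# Pearson chi-square statistic and residual degrees of freedom.
pearson_x2 <- sum(residuals(fit, type = "pearson")^2)
df <- df.residual(fit)

ratio <- pearson_x2 / df    # rough dispersion estimate; near 1 if binomial
p_value <- pchisq(pearson_x2, df = df, lower.tail = FALSE)

c(statistic = pearson_x2, df = df, ratio = ratio, p_value = p_value)
```

A ratio well above 1 together with a small p-value would point to overdispersion or to a missing predictor; the two cannot be distinguished by this statistic alone.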

Q4: What are the consequences of ignoring overdispersion?

Ignoring overdispersion usually leaves the coefficient estimates largely unchanged but makes their standard errors too small. Confidence intervals are then too narrow and p-values too small, which can lead to incorrect conclusions. It underestimates the uncertainty associated with model predictions and can potentially mislead decision-making processes.

In conclusion, the dispersion parameter for the binomial family is taken to be 1 because the binomial distribution fixes the variance once the mean is known, not because the model has been shown to fit well. Understanding its implications is crucial for appropriate statistical analysis, model selection, and interpretation. While a dispersion parameter of 1 simplifies analysis, it is important to check for potential overdispersion and to estimate the dispersion when necessary.
