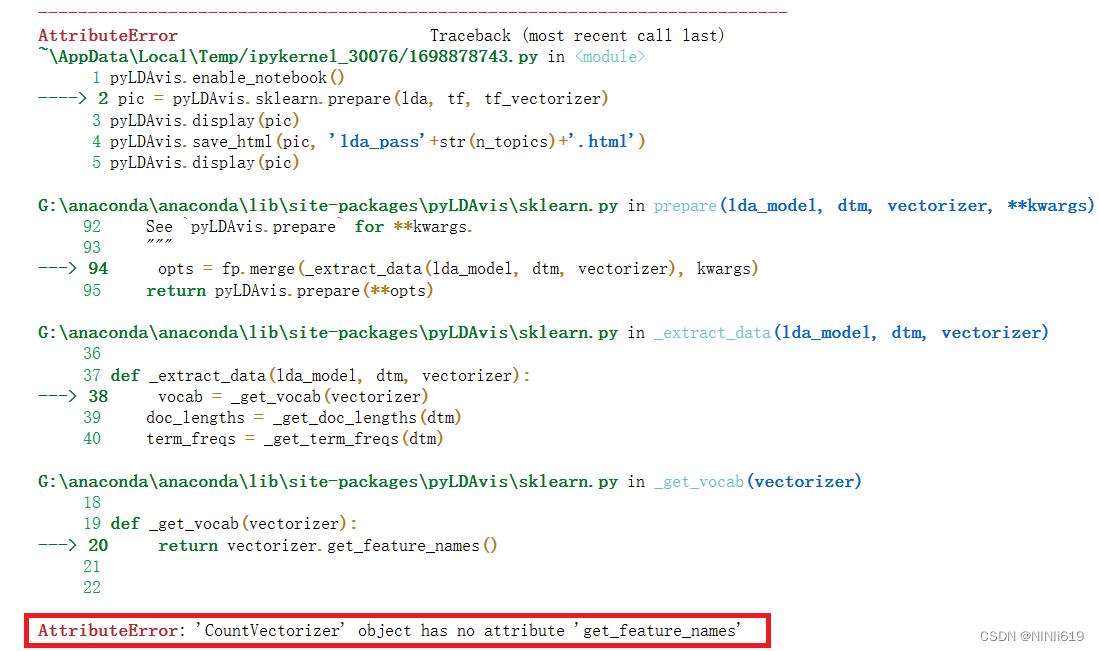

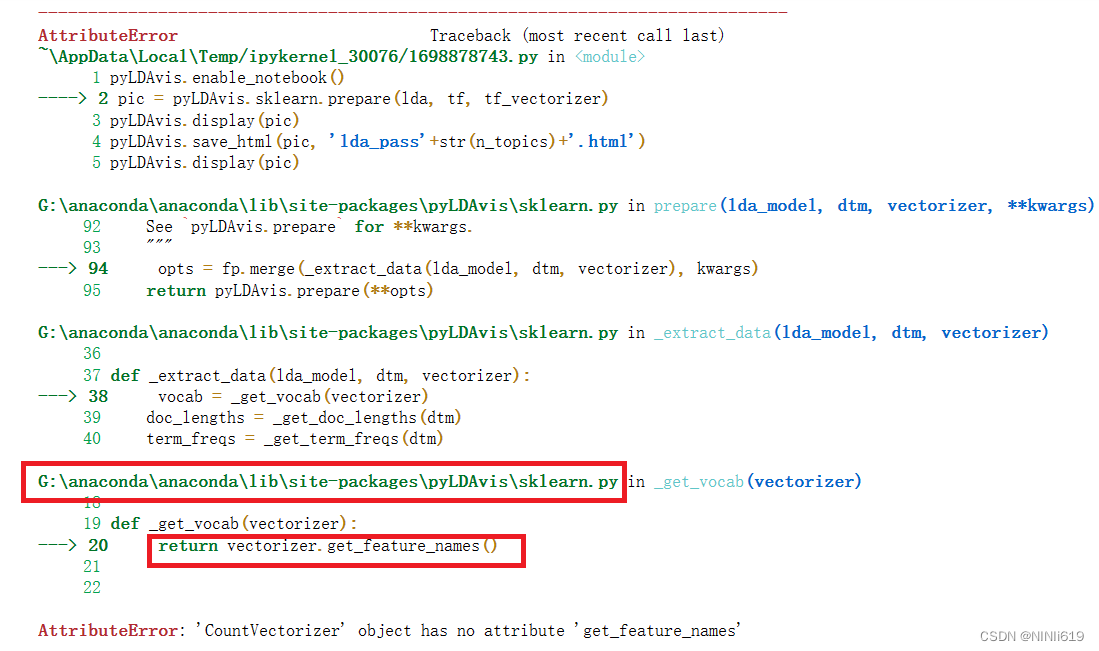

AttributeError: 'CountVectorizer' object has no attribute 'get_feature_names'

This error is raised when calling the ‘get_feature_names’ method on a ‘CountVectorizer’ object from scikit-learn (imported as ‘sklearn’). ‘CountVectorizer’ converts a collection of text documents into a matrix of token counts: each row is a document, and each column records how often one term (word) occurs in it.

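The ‘get_feature_names’ method returned the feature names, which are the unique terms in the learned vocabulary, as a list ordered by column index. This makes the count matrix easier to analyze and interpret. The method was deprecated in scikit-learn 1.0 in favour of ‘get_feature_names_out’ and removed in scikit-learn 1.2, which is why code written for older releases now fails.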

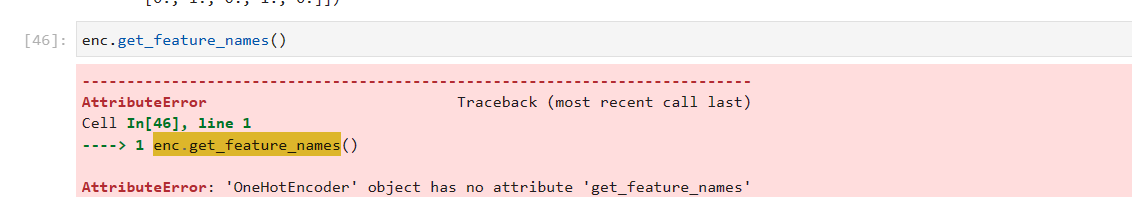

However, on scikit-learn 1.2 and later, calling ‘get_feature_names’ fails with:

`AttributeError: 'CountVectorizer' object has no attribute 'get_feature_names'`

The message is literal: the ‘CountVectorizer’ class in the installed version no longer defines a method with that name.

Several situations can lead to this ‘AttributeError’, or to an error that looks similar, when using ‘CountVectorizer’ and its feature-name methods:

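1. scikit-learn version mismatch: This is by far the most common cause. ‘get_feature_names’ only exists in releases before 1.2 (and is deprecated from 1.0 onward), so code, tutorials, or notebooks written for those releases break after an upgrade to 1.2 or later. The reverse also happens: releases older than 1.0 do not have the replacement, ‘get_feature_names_out’.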

2. Misspelling or wrong case: Python is case-sensitive, so a variant such as ‘Get_Feature_Names’ or ‘get_features_names’ also raises an ‘AttributeError’. scikit-learn method names are all lowercase.

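3. Calling the method before fitting: The vocabulary is learned by ‘fit’ or ‘fit_transform’. Calling ‘get_feature_names_out’ on an unfitted ‘CountVectorizer’ raises ‘NotFittedError’ with a message saying the vocabulary was not fitted, rather than the "has no attribute" message. Because ‘NotFittedError’ subclasses ‘AttributeError’, a broad ‘except AttributeError’ catches both, which can make the two causes look alike.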

4. Calling the method on the wrong object: ‘get_feature_names’ takes no input data, so the type of your documents cannot trigger this error. A related mistake is calling it on the sparse matrix returned by ‘fit_transform’ instead of on the vectorizer; the resulting ‘AttributeError’ then names the matrix class rather than ‘CountVectorizer’.

Troubleshooting the ‘AttributeError’ involves examining the above common causes and taking appropriate actions to resolve them. Here are some possible solutions to the AttributeError:

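1. Check the installed scikit-learn version: Run the following in the same environment that executes your code:

`python -c "import sklearn; print(sklearn.__version__)"`

If it prints 1.2 or later, replace ‘get_feature_names()’ with ‘get_feature_names_out()’. Upgrading with `pip install -U scikit-learn` will not bring the old method back, because the latest release no longer has it. If legacy code must run unchanged, pin an older release instead, for example `pip install "scikit-learn<1.2"`. Always install the package named ‘scikit-learn’; the PyPI name ‘sklearn’ is a deprecated placeholder, not the library.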

2. Verify the spelling and case: Method names are all lowercase with underscores: ‘get_feature_names_out’ is the current name, and ‘get_feature_names’ is the one removed in 1.2.

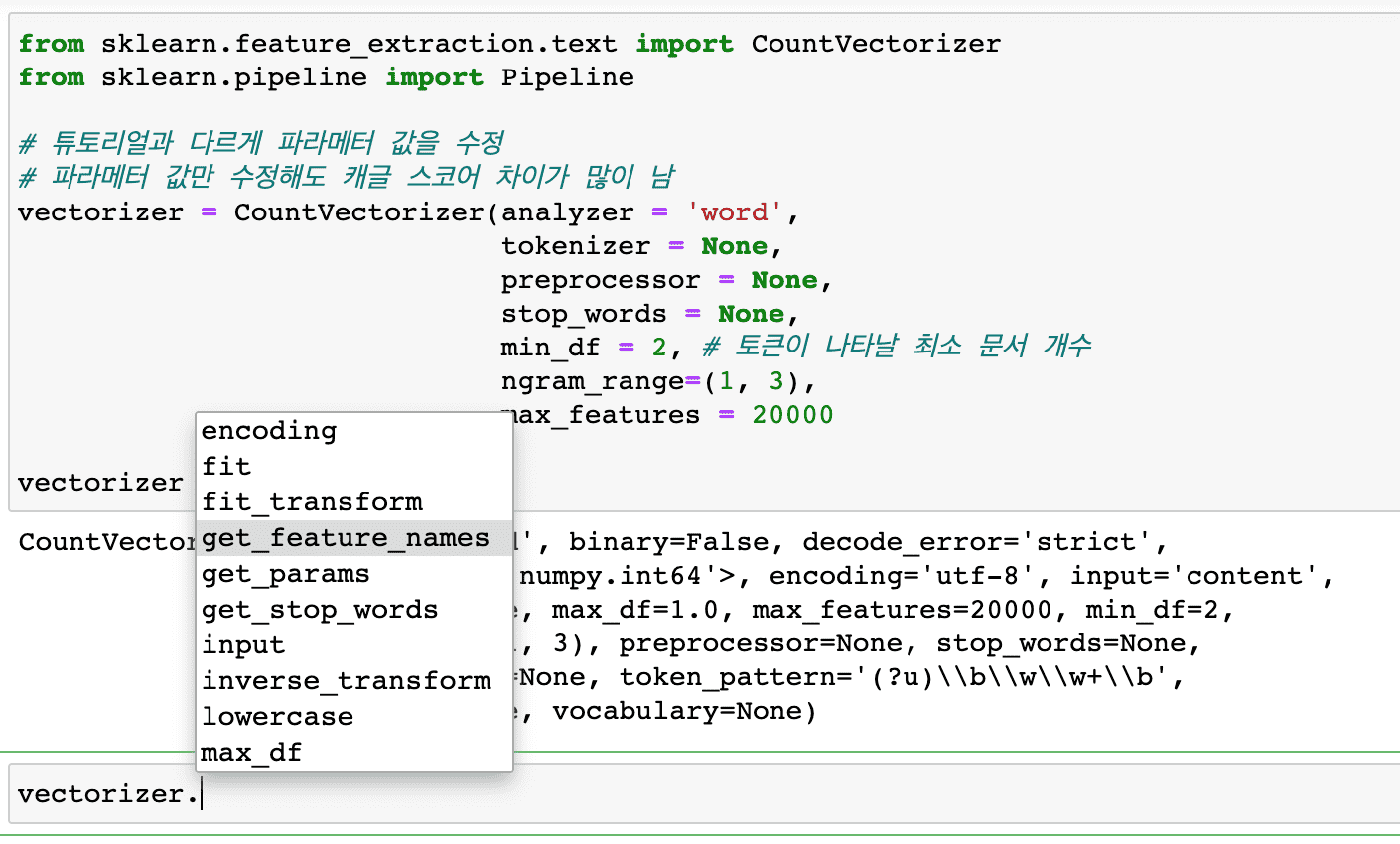

3. Fit the vectorizer before asking for names: Create the ‘CountVectorizer’ with whatever parameters you need (for example ‘token_pattern’, ‘ngram_range’, or ‘max_df’), then call ‘fit’ or ‘fit_transform’ on your documents. The vocabulary, and therefore the feature names, only exist after fitting.

4. Call the method on the vectorizer, not on its output: ‘fit_transform’ returns a SciPy sparse matrix of counts that carries no feature names, so keep a reference to the vectorizer and call the method on it. The input to ‘fit’ should be an iterable of strings (one per document); there is no need to convert it to a sparse matrix.

5. Review the release notes after upgrading: If the error first appeared right after updating scikit-learn, the removal of ‘get_feature_names’ in 1.2 is the most likely reason. The release notes list other deprecated and removed APIs that may need the same kind of update.
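If the same code has to run on both old and new scikit-learn releases, a small wrapper can prefer the new method and fall back to the old one only when the new one is missing. A sketch under that assumption; ‘feature_names’ is a hypothetical helper name and the corpus is made-up example data:

```python
from sklearn.feature_extraction.text import CountVectorizer


def feature_names(vectorizer) -> list:
    """Hypothetical helper: return feature names as a plain list on old and new releases.

    Prefers get_feature_names_out() (scikit-learn >= 1.0) and falls back to the
    legacy get_feature_names() only on releases that predate it.
    """
    if hasattr(vectorizer, "get_feature_names_out"):
        return vectorizer.get_feature_names_out().tolist()
    return list(vectorizer.get_feature_names())


corpus = ["the cat sat", "the dog sat down"]
vectorizer = CountVectorizer().fit(corpus)
print(feature_names(vectorizer))  # ['cat', 'dog', 'down', 'sat', 'the']
```

Returning a list keeps the wrapper's output identical to what the old method produced, so downstream code does not need to change.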

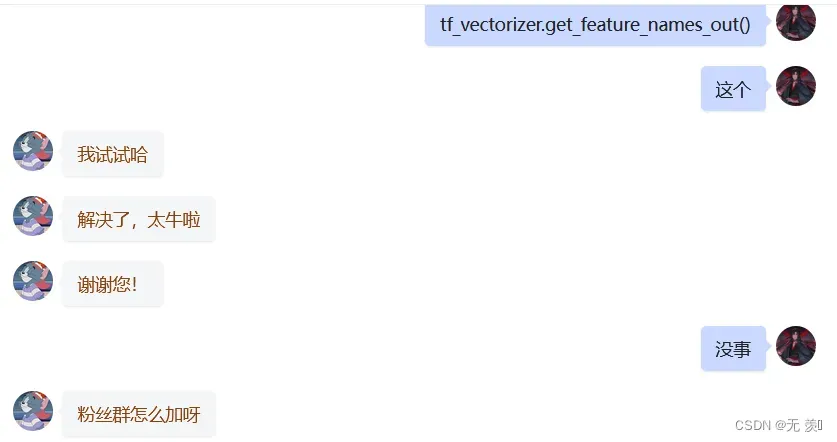

If you prefer not to depend on the old method at all, the following approaches return the same names. ‘get_feature_names_out’ is the direct replacement, while ‘vocabulary_’ works on every scikit-learn version:

1. Use the ‘vocabulary_’ attribute: After fitting, ‘CountVectorizer’ exposes ‘vocabulary_’, a dictionary mapping each term (feature) to its column index in the feature matrix. Sorting the terms by that index gives the names in column order:

```python
from sklearn.feature_extraction.text import CountVectorizer

corpus = ["the cat sat", "the dog sat down"]  # your documents

vectorizer = CountVectorizer()
X = vectorizer.fit_transform(corpus)

# vocabulary_ maps term -> column index; sort the terms by that index
vocab = vectorizer.vocabulary_
feature_names = sorted(vocab, key=vocab.get)
```

The result is a plain list in the same order the old ‘get_feature_names’ returned.

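2. Use ‘get_feature_names_out’: This is the method that replaced ‘get_feature_names’ (available since scikit-learn 1.0). The matrix ‘X’ returned by ‘fit_transform’ stores only counts, so the names always come from the fitted vectorizer:

```python
from sklearn.feature_extraction.text import CountVectorizer

corpus = ["the cat sat", "the dog sat down"]  # your documents

vectorizer = CountVectorizer()
X = vectorizer.fit_transform(corpus)

# Replacement for the removed get_feature_names()
feature_names = vectorizer.get_feature_names_out()
```

Here, ‘feature_names’ is a NumPy array of strings whose i-th entry names column i of ‘X’. Call ‘feature_names.tolist()’ if downstream code expects a list.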

When switching to these replacements, keep a few points in mind:

1. Return type: ‘get_feature_names’ returned a Python list, while ‘get_feature_names_out’ returns a NumPy array. Code that relies on list-only behaviour (for example concatenating with ‘+’ or calling ‘.index()’) needs ‘.tolist()’.

2. Order: Both replacements list names in column order, which matches what ‘get_feature_names’ returned. Iterating over ‘vocabulary_’ directly is different: its keys are not guaranteed to follow column order, so sort them by index first.

3. Downstream compatibility: If the names feed into other code (plots, reports, model inspection), check that it accepts the new return type. The same rename applies to ‘TfidfVectorizer’ and other scikit-learn transformers, so update those calls at the same time.

In conclusion, ‘AttributeError: 'CountVectorizer' object has no attribute 'get_feature_names'’ almost always means the code was written for a scikit-learn release older than 1.2. Replacing the call with ‘get_feature_names_out()’ (or reading ‘vocabulary_’) fixes it. Checking the installed version, the spelling, and that the method is called on a fitted vectorizer covers the remaining cases, so you can retrieve the feature names for your text analysis tasks.


CountVectorizer

At its core, CountVectorizer aims to quantitatively represent the presence of words in a document. Each document is transformed into a vector, with each dimension of the vector representing a specific word. The value in each dimension corresponds to the number of times that word occurs in the document. This conversion enables the use of mathematical techniques in analyzing and processing text data.

CountVectorizer provides a straightforward and efficient way to preprocess text data. The process begins with tokenization, where the text is split into individual terms. By default, the text is lowercased and tokens are extracted with the regular expression `token_pattern=r"(?u)\b\w\w+\b"`, which keeps runs of two or more word characters and treats punctuation as a separator. CountVectorizer does not stem or lemmatize by itself, but you can plug in your own tokenizer or analyzer to apply those techniques and reduce words to their base form.

Once tokenized, CountVectorizer builds a vocabulary of all the unique terms present in the documents. Then, it encodes each document based on this vocabulary, representing them as vectors of word counts. CountVectorizer allows for various customization options, such as ignoring stop words (common words like “the” or “is” that do not provide much information), defining n-grams (sequences of words), and specifying the maximum number of features to include in the vector representation.

CountVectorizer has become a popular choice due to its simplicity and effectiveness. By converting text data into numerical vectors, it enables the use of a wide array of machine learning algorithms that rely on numerical input. The resulting matrix can be used as input to algorithms like support vector machines (SVM), naive Bayes classifiers, logistic regression, or feed-forward neural networks. Sequence models such as recurrent neural networks (RNN) or convolutional neural networks (CNN) usually take token sequences or embeddings instead, since a count vector has already discarded word order.

One of the advantages of CountVectorizer is that it follows the bag-of-words model: it counts words and disregards the order or context in which they appear. This can be beneficial in scenarios where the emphasis is on the presence or absence of specific words rather than their position or semantic meaning. For example, in sentiment analysis, counting the occurrences of positive or negative words in a document can provide insights into the overall sentiment without modelling word order.

However, the lack of context awareness can also be a limitation in certain situations. Languages like English rely heavily on word order and word combinations to convey meaning. CountVectorizer does not capture this information, making it less suitable for tasks that require a deeper level of understanding, such as language translation or question answering.

FAQs:

Q: Is CountVectorizer case-sensitive?

A: Not by default. With `lowercase=True` (the default), CountVectorizer converts all text to lowercase before tokenizing, so "Apple" and "apple" map to the same feature. Pass `lowercase=False` when creating the vectorizer to keep them separate.

Q: Can CountVectorizer handle multiple languages?

A: Yes, with caveats. The default `token_pattern` matches Unicode word characters, so it works for many languages that separate words with spaces or punctuation. Languages written without spaces between words, such as Chinese or Japanese, need a custom tokenizer (a language-specific word segmenter) or character n-grams (`analyzer="char"`), and stop word lists must be supplied yourself for languages other than English.

Q: How does CountVectorizer handle unseen words?

A: When transforming new documents, words that were not in the vocabulary learned during fitting are ignored: they get no column and do not affect the counts. This is acceptable for many applications, but it means new terminology stays invisible to the model until the vectorizer is refit.

Q: Can CountVectorizer handle large datasets?

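A: It scales reasonably well: the output is a SciPy sparse matrix, which stores only non-zero counts and saves memory with high-dimensional vocabularies. The vocabulary itself, however, is an in-memory dictionary that grows with the corpus. For very large or streaming data, scikit-learn's ‘HashingVectorizer’ avoids storing a vocabulary, at the cost of not being able to map columns back to words.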
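A sketch of the hashing alternative, assuming a fixed width of 2**10 columns chosen only for illustration. ‘alternate_sign=False’ and ‘norm=None’ make the values plain counts (up to hash collisions):

```python
from sklearn.feature_extraction.text import HashingVectorizer

# n_features fixes the output width no matter how many distinct words appear
hasher = HashingVectorizer(n_features=2**10, alternate_sign=False, norm=None)

# Stateless: no fit is needed, so batches can be transformed independently
batch_1 = hasher.transform(["the cat sat", "the dog sat down"])
batch_2 = hasher.transform(["a completely new batch of words"])
print(batch_1.shape, batch_2.shape)  # (2, 1024) (1, 1024)
```

The trade-off is interpretability: since no vocabulary is stored, there is no reliable way to recover which word produced a given column.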

Q: Are there alternative methods to CountVectorizer?

A: Yes, there are alternative methods to CountVectorizer, such as TF-IDF (Term Frequency-Inverse Document Frequency) vectorization, available in scikit-learn as ‘TfidfVectorizer’, which weighs the importance of each word based on its frequency in the document and in the corpus. Other approaches include word embeddings, where words are represented as dense vectors learned through neural networks.

In conclusion, CountVectorizer is a versatile tool for text preprocessing and feature extraction in NLP tasks. Its simplicity and effectiveness make it a popular choice for converting text into numerical representations. By representing text data as numerical vectors, CountVectorizer enables the application of various machine learning algorithms for tasks such as text classification, sentiment analysis, and document clustering. While CountVectorizer does not capture contextual information, it remains an essential component in many NLP pipelines, providing valuable insights from text data.

get_feature_names Not Found

When working with natural language processing (NLP) tasks, a "get_feature_names not found" error is usually a version problem rather than a language problem: scikit-learn deprecated ‘get_feature_names’ in release 1.0 and removed it in 1.2, replacing it with ‘get_feature_names_out’. The method is not tied to English; it returns whatever terms the vectorizer's tokenizer produced, in any language. What does depend on the language is whether the default tokenization produces meaningful terms, and that is where English-centric defaults can cause trouble. In this section, we look at that issue and explore alternative approaches for extracting features from non-English text.

Understanding get_feature_names

‘get_feature_names’ was a method of scikit-learn's text vectorizers, such as ‘CountVectorizer’ and ‘TfidfVectorizer’. After fitting, it returned the learned vocabulary, i.e. the distinct terms that became columns of the feature matrix, so researchers and data scientists could map each column back to a term when investigating patterns, sentiment, or topics. Its successor, ‘get_feature_names_out’, returns the same terms as a NumPy array.

Why do the feature names look wrong for some languages?

NLP techniques, libraries, and tools are often developed and tested primarily on English, and their defaults reflect that. scikit-learn's default ‘token_pattern’ assumes that words are separated by spaces or punctuation. Languages such as Chinese, Japanese, or Thai are written without spaces between words, so the default tokenizer returns long runs of characters instead of words, and the resulting "feature names" are whole phrases or sentences. Languages with rich morphology raise a related issue: many inflected forms of the same word become separate features.

Alternative approaches for non-English languages

When the default tokenization does not suit a language, there are alternative approaches that can be taken to extract useful features. Here are some common methods:

1. Tokenization and stemming: Tokenization is the process of dividing text into individual units, which could be words, subwords, or characters. By applying tokenization, one can obtain the basic units of language in a non-English corpus. Stemming, on the other hand, reduces words to their root forms, enabling the merging of similar terms. These techniques can provide feature-like units for analysis in non-English languages.

2. Part-of-speech (POS) tagging: POS tagging is the process of assigning a grammatical category (verb, noun, adjective, etc.) to each word in a sentence. By analyzing the POS tags of words in a non-English language, one can gather insights about the syntactic structures and identify potential features for further analysis. POS tagging can be implemented using language-specific libraries or tools.

3. Frequency analysis: Another approach to extracting features in non-English languages is through frequency analysis. This involves counting the occurrences of individual words or terms within a corpus. After removing that language's stop words (articles, prepositions, and similar function words, which are always the most frequent), the words that occur most often are likely to be relevant features. This approach can help identify key terms for analysis with very little language-specific tooling.

FAQs

Q1. Does get_feature_names need to be adapted for non-English languages?

A1. No. The method (now ‘get_feature_names_out’) simply reports the vocabulary the vectorizer learned. What needs adapting is the tokenization step: supply a language-specific tokenizer, a custom ‘token_pattern’, or character n-grams, and the feature names follow automatically.

Q2. Can we utilize English-based NLP tools for non-English languages?

A2. While it is common to apply English-based tools to non-English languages, caution must be exercised. Many tools may not produce accurate results due to linguistic differences. Utilizing language-specific libraries or developing tailored solutions is often recommended.

Q3. Can non-English languages benefit from transfer learning in NLP?

A3. Yes, transfer learning has proven to be valuable in extending NLP capabilities to non-English languages. Pre-trained models, such as multilingual BERT, can be fine-tuned on specific tasks and languages, enabling effective feature extraction and analysis.

Q4. Are there language-agnostic approaches for feature extraction in non-English languages?

A4. Yes, some language-agnostic approaches can still be employed as a starting point for feature extraction in non-English languages. Techniques like tokenization, stemming, and frequency analysis can be applied across various languages with appropriate adjustments.

In conclusion, ‘get_feature_names’ is not missing for non-English languages: it was removed from scikit-learn in version 1.2 and replaced by ‘get_feature_names_out’, which works for text in any language. The real challenge with languages divergent from English is producing good features in the first place. Alternative methods such as tokenization, POS tagging, and frequency analysis can be adapted to extract meaningful feature-like units, and accurate results depend on considering each language's linguistic characteristics, using language-specific tools or adapting existing models through transfer learning.

Get_Feature_Names_Out

Introduction

In the world of machine learning, feature extraction is a crucial step in building effective models. scikit-learn's get_feature_names_out method supports this step by reporting the names of the features a fitted transformer outputs, whether it works on numerical, categorical, or text data. This article will explore the ins and outs of get_feature_names_out, shedding light on its functionality, implementation, and best practices. Additionally, we will address commonly asked questions to provide a holistic understanding of this tool.

Understanding get_feature_names_out

get_feature_names_out is a method of fitted transformers in scikit-learn, a popular machine learning library in Python. It was introduced in version 1.0 and extended to most transformers in later releases, including vectorizers, encoders, scalers, and ColumnTransformer. It returns the names of the transformer's output columns as a NumPy array, making it easier to interpret and analyze your models' results.

Implementation

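The names get_feature_names_out returns depend on the transformer. Transformers that produce one output column per input column, such as StandardScaler, pass the input names through: the column names seen during fitting (for example from a pandas DataFrame), names supplied through the input_features argument, or generic names x0, x1, ... otherwise. Encoders that expand a column generate new names; OneHotEncoder, for example, combines the feature name and the category into names like color_red. Text vectorizers return their learned vocabulary, and ColumnTransformer prefixes each name with the name of the transformer that produced it, separated by a double underscore.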

Best Practices

To effectively leverage get_feature_names_out, consider the following best practices:

1. Consistent preprocessing: Apply the same preprocessing steps every time, for example by wrapping them in a Pipeline, so that categorical variables are encoded the same way and the feature names stay accurate and meaningful.

2. Uniqueness: Ensure that the individual feature names are unique, preventing any potential ambiguity during further analysis. This is particularly crucial when concatenating one-hot encoded features.

3. Documentation: Document and preserve the order of feature names, facilitating future reference and reproducibility of your experiments.

Frequently Asked Questions

Q1: What is the significance of feature names in machine learning models?

A1: Feature names serve as essential identifiers, providing insights into the role and contribution of each feature in the model’s decision-making process. They enable better understanding, interpretation, and explainability of the model’s results.

Q2: Can get_feature_names_out handle missing or NaN values?

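A2: get_feature_names_out does not inspect data values at all; it reports the names of the columns the fitted transformer outputs. Missing values matter only when a transformer changes its output because of them. For example, SimpleImputer by default drops columns that were entirely missing during fitting, and its get_feature_names_out omits those names accordingly.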

Q3: Can I use get_feature_names_out for dimensionality reduction techniques like Principal Component Analysis (PCA)?

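A3: Yes. Dimensionality-reduction transformers such as PCA also implement get_feature_names_out in recent scikit-learn releases. Because each output column combines all input features, the names are generated (pca0, pca1, ...) rather than copied from the input. To relate a component back to the original features, inspect the fitted components_ attribute alongside the input feature names.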

Q4: Are there other libraries or alternatives to get_feature_names_out?

A4: get_feature_names_out is specific to scikit-learn. Deep learning frameworks such as TensorFlow and PyTorch operate on unnamed tensors and have no direct equivalent, so feature names are usually tracked alongside the data, for example as pandas column names or, for text, through a vocabulary such as the one returned by the Keras TextVectorization layer's get_vocabulary() method.

Conclusion

The get_feature_names_out method proves invaluable when it comes to extracting feature names in machine learning. Because it works across numerical, categorical, and text transformers, it enables better interpretability and analysis of models. By following best practices and considering the frequently asked questions, you can use get_feature_names_out to enhance your machine learning workflows, facilitate model explainability, and build more effective models.
