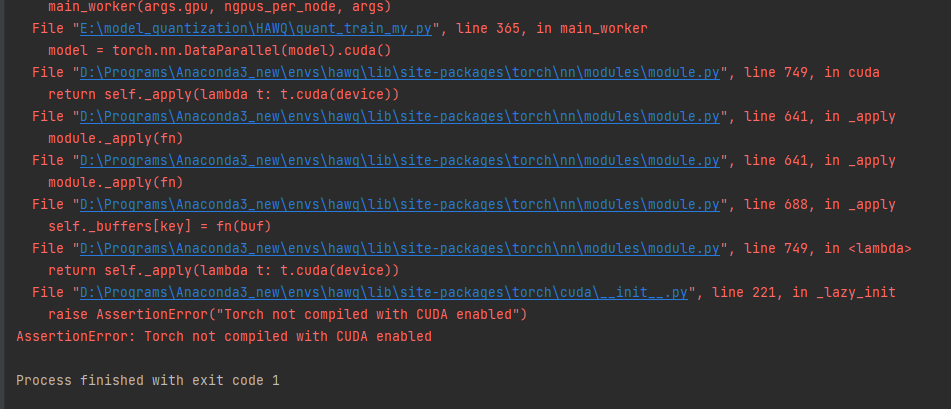

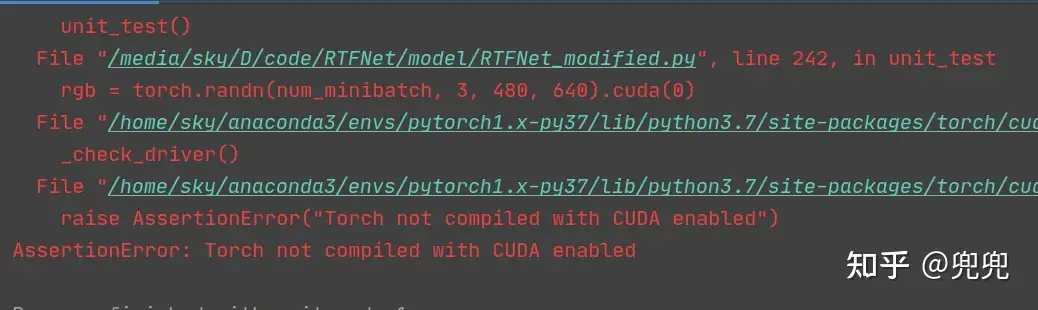

AssertionError: Torch Not Compiled With CUDA Enabled

Introduction:

PyTorch is a popular open-source machine learning library that provides a powerful framework for developing deep learning models. One of its key features is the ability to use GPUs for accelerated computation, which is achieved through the CUDA backend. However, users may encounter the error `AssertionError: Torch not compiled with CUDA enabled`. In this article, we explore the reasons behind this error, how to resolve it, and troubleshooting tips for common issues.

Overview of Assertion Error with Torch and CUDA:

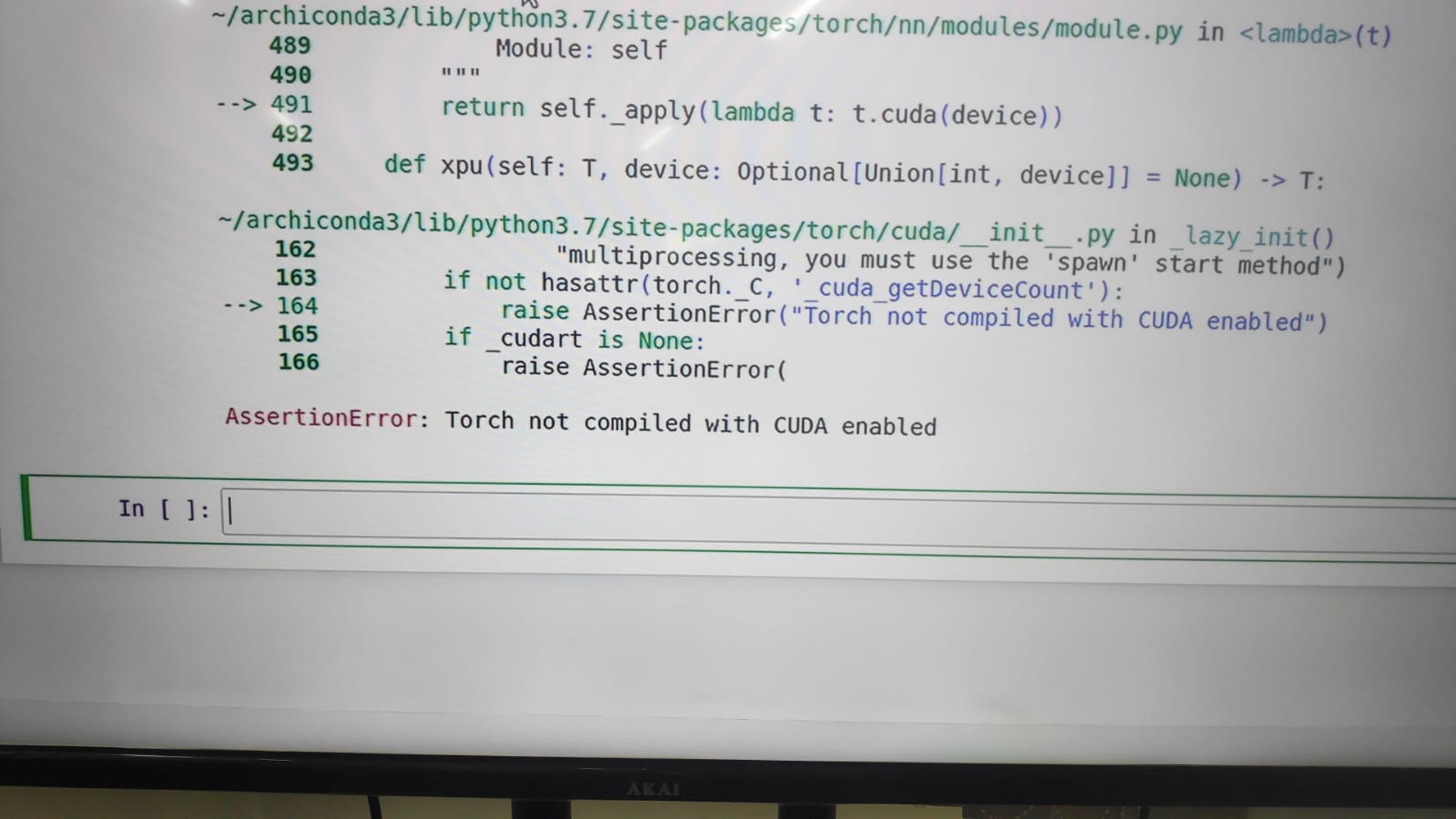

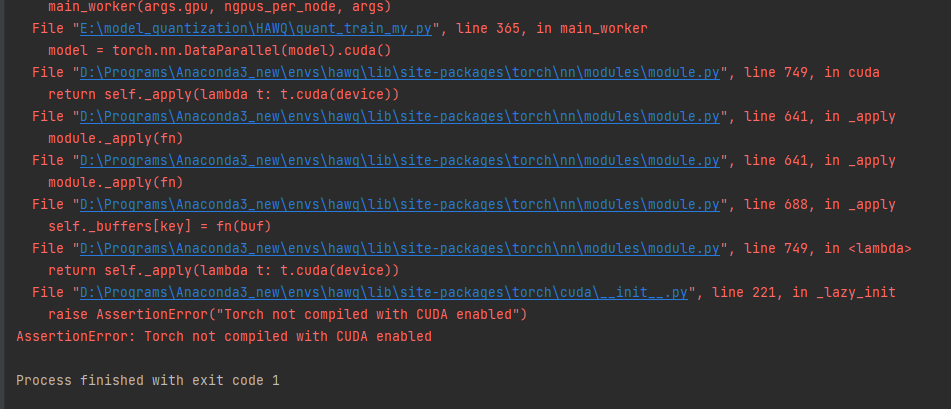

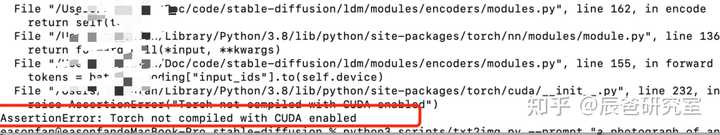

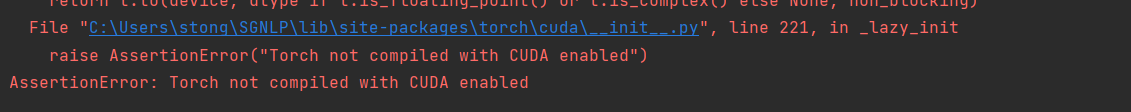

The `AssertionError: Torch not compiled with CUDA enabled` is raised when code tries to use the GPU (for example, by calling `.cuda()` on a tensor or model) but the installed PyTorch build was compiled without CUDA support. It can also show up on systems that have no NVIDIA GPU or CUDA setup at all, since those typically end up with a CPU-only build. Until the error is fixed, GPU acceleration is unavailable and the code must run on the slower CPU path.

Understanding Compilation with CUDA Enabled:

Compilation with CUDA enabled refers to the process of building PyTorch with CUDA support. CUDA is the parallel computing platform and API model created by NVIDIA, which allows developers to leverage the power of NVIDIA GPUs for deep learning computations. When PyTorch is compiled with CUDA enabled, it becomes capable of offloading computations to the GPU, resulting in significant speedups.

Possible Causes of Assertion Error:

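1. CPU-Only Build or CUDA Libraries Not Installed: The most common cause of the AssertionError is that the installed PyTorch package is a CPU-only build. The official prebuilt CUDA packages bundle the CUDA runtime libraries they need, so a separate CUDA toolkit is not normally required, but a compatible NVIDIA driver is. If a CPU-only build was installed (for example, because the default install command for your platform does not include CUDA), PyTorch runs in CPU-only mode and raises this error when GPU code is called.

2. Incompatible CUDA Version: If the NVIDIA driver or installed CUDA version does not match the version for which PyTorch was built, compatibility issues may arise. This can cause GPU code to fail even though a GPU is physically present.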

Resolving Assertion Error: Step-by-Step Guide:

1. Check CUDA Availability and Compatibility: First, ensure that CUDA is usable from PyTorch on your system. You can do this by checking whether `torch.cuda.is_available()` returns `True`. Additionally, inspect `torch.version.cuda` to see which CUDA version your PyTorch installation was built for (it is `None` on a CPU-only build), and verify that your NVIDIA driver supports that version.

2. Reinstalling or Rebuilding PyTorch with CUDA Support: If the installed build lacks CUDA support, the usual fix is to uninstall it and install a prebuilt CUDA-enabled package, using the install command generated on the PyTorch website for your platform. Rebuilding PyTorch from source with the appropriate CUDA configuration is only necessary if no prebuilt package fits your setup. Detailed instructions can be found in the official PyTorch documentation.
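The check in step 1 can be sketched as a small script. This is a minimal example rather than an official diagnostic: the function name `describe_torch_build` is made up for illustration, and the import is guarded so the script still runs in an interpreter where PyTorch is missing.

```python
def describe_torch_build():
    """Summarize whether the installed PyTorch can use CUDA."""
    try:
        import torch
    except ImportError:
        # PyTorch is not installed in this interpreter at all.
        return {"installed": False}

    return {
        "installed": True,
        "torch_version": torch.__version__,
        # None on builds without CUDA; a version string on CUDA builds.
        "built_with_cuda": torch.version.cuda,
        # False when the build lacks CUDA or no usable driver/GPU is found.
        "cuda_available": torch.cuda.is_available(),
    }


if __name__ == "__main__":
    for key, value in describe_torch_build().items():
        print(f"{key}: {value}")
```

If `built_with_cuda` prints `None`, the build itself is the problem and reinstalling a CUDA-enabled package is the likely fix. If it prints a version but `cuda_available` is `False`, the driver or GPU is the more likely culprit.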

Troubleshooting Common Issues:

1. PyTorch Not Compiled with CUDA Enabled in PyCharm: If you encounter the AssertionError in PyCharm, ensure that the project uses the Python environment that contains the CUDA-enabled PyTorch build. Verify the Python interpreter settings and make sure the selected interpreter corresponds to that environment.

2. torch.cuda.is_available() Returns False: If `torch.cuda.is_available()` consistently returns `False`, either the installed PyTorch is a CPU-only build or PyTorch cannot reach a working NVIDIA driver and GPU. Check `torch.version.cuda` first (it is `None` on CPU-only builds), then confirm that the NVIDIA driver is installed and up to date and that any relevant environment variables are set correctly.

3. Mac Torch Not Compiled with CUDA Enabled: CUDA support on Mac is very limited. Recent Macs do not ship with NVIDIA GPUs, NVIDIA no longer releases the CUDA toolkit for macOS, and the official PyTorch builds for macOS do not include CUDA. On a Mac, run on the CPU or, on Apple silicon, use PyTorch's `mps` device instead of `cuda`.

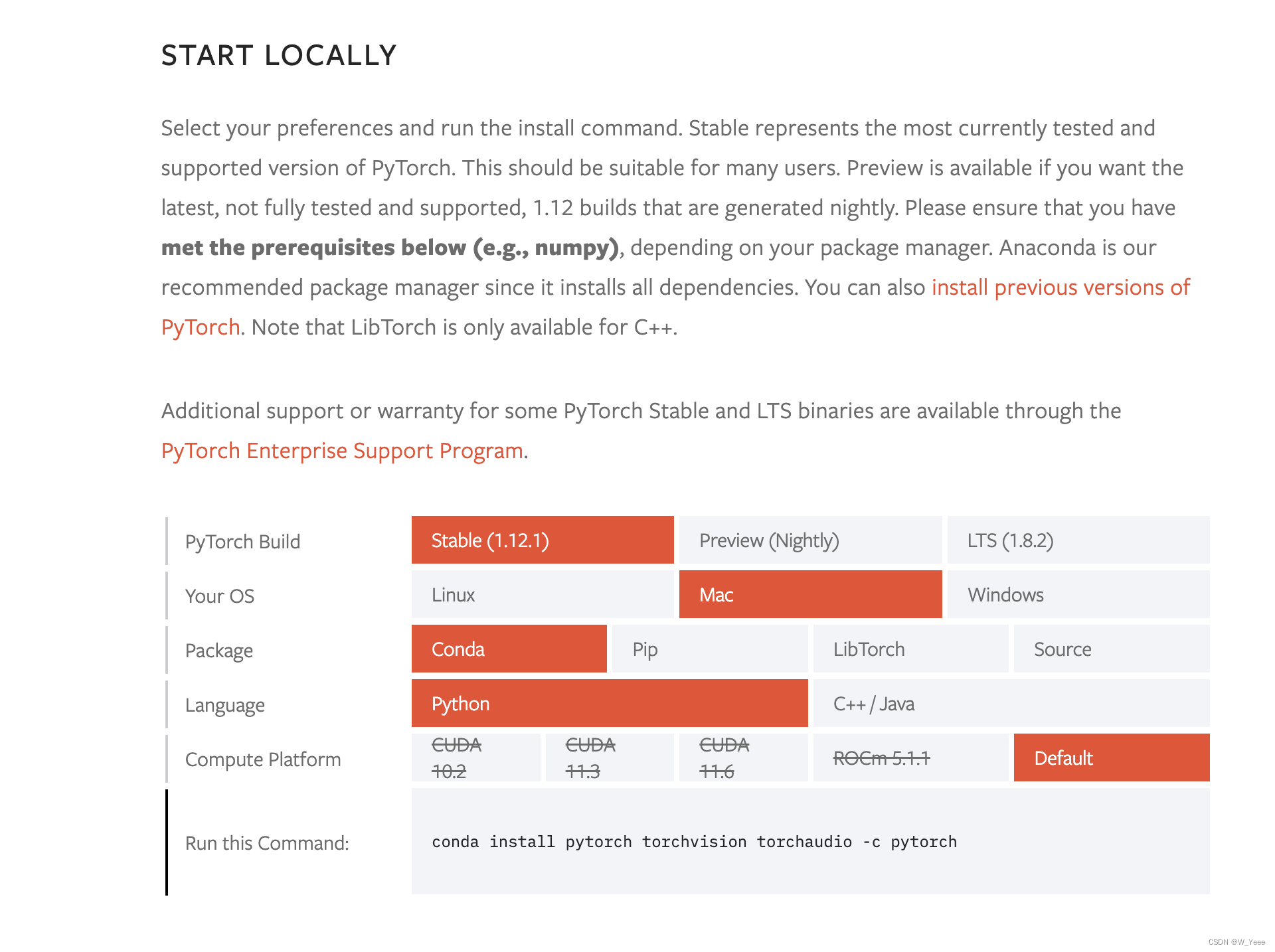

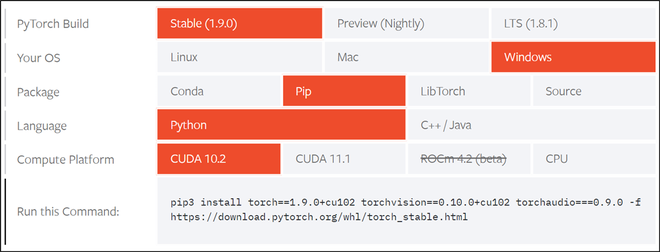

4. How to Enable CUDA in PyTorch: To enable CUDA support in PyTorch, install a build of the library that was compiled with CUDA. Use the install selector on the PyTorch website and follow the instructions specific to your operating system, package manager, and CUDA version.

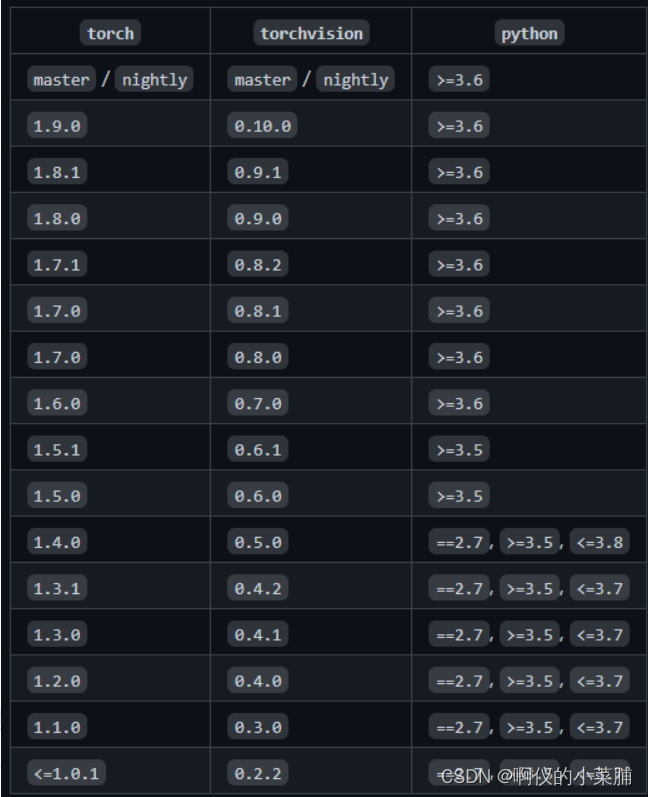

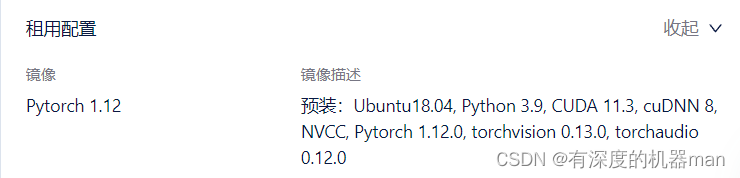

5. Downloading PyTorch with CUDA: If you are starting from scratch, ensure you download the correct PyTorch version pre-built with CUDA support for your system. This avoids the need for manual compilation and offers an easier setup process.

6. “CUDA Not Available – Defaulting to CPU” Note: If you see a message like “CUDA not available – defaulting to CPU” while running PyTorch-based code, the library has detected that no usable GPU is present and has fallen back to the CPU on its own, so execution continues, only more slowly. The message points to the same underlying cause as the AssertionError, so follow the steps above to address it.
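Several of the tips above come down to not hard-coding `.cuda()` in the first place. The sketch below picks a device at runtime instead. The helper names `choose_device_name` and `detect_device_name` are illustrative, not part of PyTorch, and returning `"cpu"` when PyTorch is not installed is just a convenience for this example.

```python
def choose_device_name(cuda_ok, mps_ok=False):
    """Pick a backend name, preferring CUDA, then MPS, then CPU."""
    if cuda_ok:
        return "cuda"
    if mps_ok:
        return "mps"
    return "cpu"


def detect_device_name():
    """Ask PyTorch (if installed) which device is safe to use."""
    try:
        import torch
    except ImportError:
        # Assumption for this sketch: report CPU when torch is absent.
        return "cpu"

    cuda_ok = torch.cuda.is_available()
    # torch.backends.mps only exists in newer releases, so probe defensively.
    mps = getattr(torch.backends, "mps", None)
    mps_ok = bool(mps is not None and mps.is_available())
    return choose_device_name(cuda_ok, mps_ok)


if __name__ == "__main__":
    print("Using device:", detect_device_name())
```

In real code, the returned name would typically be passed to `torch.device(...)` and then to `.to(device)` on models and tensors, so the same script runs on machines with and without a GPU.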

Conclusion:

The Assertion Error “Torch not compiled with CUDA enabled” indicates a lack of CUDA support in your PyTorch installation, leading to slower computations on the CPU instead of benefiting from GPU acceleration. By following the outlined steps and troubleshooting tips, you can resolve this error, ensure CUDA availability, and unleash the full potential of PyTorch’s deep learning capabilities.

Remember, proper installation and configuration of CUDA and PyTorch are crucial for harnessing the power of GPUs and achieving optimal performance in your machine learning workflows.


What Is AssertionError: Torch Not Compiled With CUDA Enabled in Windows?

If you are a data scientist or machine learning enthusiast working with deep learning frameworks, you might have come across the error message “AssertionError: torch not compiled with CUDA enabled” when using the Torch library in Windows. This article will delve into the details of this error and explore possible causes and solutions.

Understanding CUDA and Torch

Before diving into the error itself, it is crucial to grasp the concepts of CUDA and Torch. CUDA is a parallel computing platform and API model created by NVIDIA that allows developers to leverage the power of NVIDIA GPUs for general computing tasks. It enables significant acceleration in computations compared to traditional CPUs.

Torch, on the other hand, refers here to PyTorch (imported in Python as `torch`), a popular open-source deep learning framework that provides a wide range of tools and modules for building and training machine learning models. It is widely used due to its efficient implementation and ease of use. PyTorch relies on CUDA for GPU acceleration on NVIDIA hardware.

Understanding the Error Message

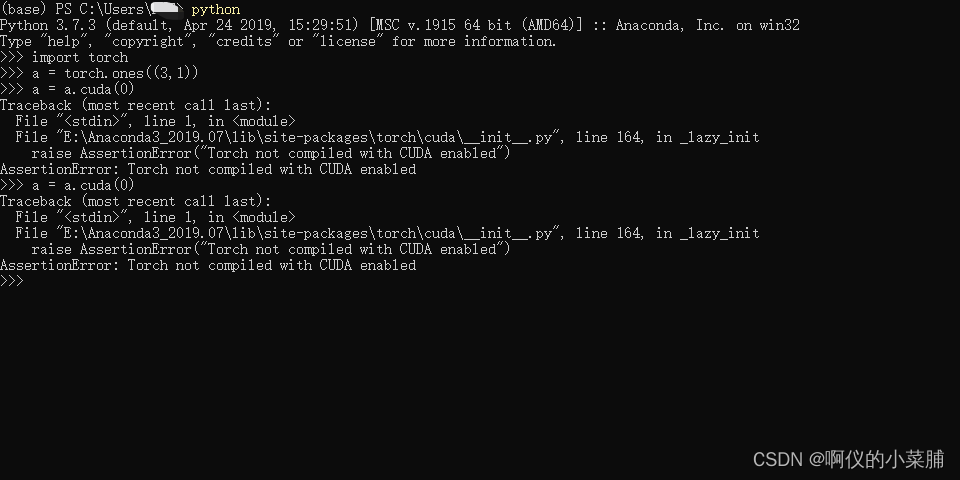

When encountering the error message “AssertionError: torch not compiled with CUDA enabled,” it indicates that Torch was not compiled with CUDA support during the installation process or that CUDA is not properly configured on your Windows system. As a result, Torch cannot utilize the GPU for computations, which can significantly impact performance, especially when working with large datasets and complex models.

Possible Causes and Solutions

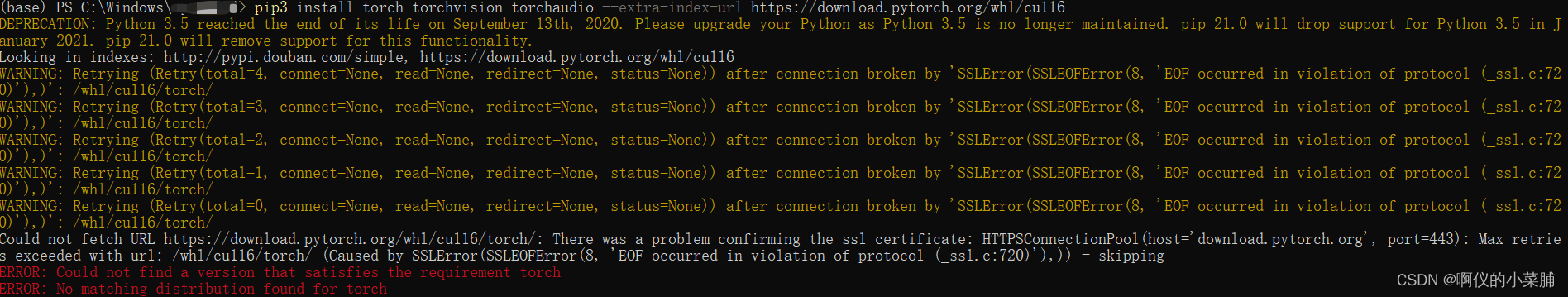

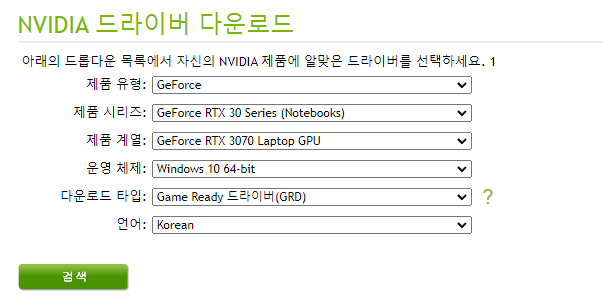

1. Missing or Incompatible CUDA Toolkit: One possible cause is that the CUDA Toolkit, which provides the necessary libraries and drivers for using CUDA, is either missing or incompatible with your system. To resolve this issue, ensure that you have installed the correct version of the CUDA Toolkit compatible with your GPU architecture. Visit the NVIDIA website to download the appropriate CUDA Toolkit.

2. Incorrect CUDA Environment Configuration: Even if the CUDA Toolkit is installed correctly, Torch may not be able to locate the necessary CUDA libraries if the environment variables are not set up properly. To fix this, ensure that the CUDA installation directory is added to the PATH environment variable. You can do this by searching for “Environment Variables” in the Windows search bar, selecting “Edit the system environment variables,” and adding the CUDA installation path (e.g., C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.0\bin).

3. Mismatched Torch and CUDA Versions: Another possible cause is a version mismatch between Torch and the CUDA Toolkit. Torch relies on specific CUDA libraries, so if you have recently updated Torch without updating the CUDA Toolkit or vice versa, compatibility issues may arise. Ensure that you have the compatible versions of Torch and CUDA installed on your system. Check the respective documentation and release notes for compatibility information.

4. Unsupported GPU Architecture: If you have an older GPU with an architecture that is not supported by the CUDA Toolkit or Torch, you may encounter this error. Older GPUs often lack the necessary computational capabilities required by Torch. In such cases, you may need to obtain a more recent GPU or consider using a CPU-based solution.
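A quick way to test causes 1 and 2 is to see whether the NVIDIA command-line tools are reachable through PATH. The sketch below uses only the Python standard library; `find_cuda_tools` is a hypothetical helper name. `nvidia-smi` is installed with the NVIDIA driver and `nvcc` with the CUDA Toolkit, so a missing entry hints at which piece is absent or not on PATH.

```python
import shutil


def find_cuda_tools(names=("nvidia-smi", "nvcc")):
    """Map each tool name to its resolved path, or None if it is not on PATH."""
    return {name: shutil.which(name) for name in names}


if __name__ == "__main__":
    for tool, path in find_cuda_tools().items():
        print(f"{tool}: {path or 'not found on PATH'}")
```

A tool that is installed but reported as not found usually means its directory still needs to be added to PATH, as described in cause 2.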

Frequently Asked Questions (FAQs)

Q: Can I use Torch without CUDA?

A: Yes, Torch can run on CPUs without CUDA support. However, utilizing CUDA and GPU acceleration significantly enhances performance in deep learning tasks.

Q: How can I check if CUDA is properly installed?

A: One way to confirm that the CUDA toolkit is installed correctly is to run `nvcc --version` in the Windows command prompt. It should display the installed CUDA version without any errors. To check the NVIDIA driver itself, run `nvidia-smi`.

Q: Can I use CUDA with Windows Subsystem for Linux (WSL)?

A: Yes, on WSL 2. NVIDIA supports CUDA inside WSL 2 when a recent NVIDIA driver is installed on the Windows host; the original WSL 1 does not support it. Check NVIDIA's CUDA on WSL documentation for the current requirements.

Q: I have checked all the possible solutions, but I still encounter the AssertionError. What should I do?

A: If none of the solutions mentioned above work for you, it is recommended to seek assistance from the Torch community or forums for specific guidance regarding your setup and configurations.

In conclusion, the “AssertionError: torch not compiled with CUDA enabled” error in Windows indicates an issue with the CUDA configuration for Torch. By understanding the causes and implementing the suggested solutions, you can ensure that Torch harnesses the power of CUDA and uses GPU acceleration properly, enabling efficient deep learning computations.

How To Install Torch With CUDA Enabled?

Torch is a popular deep learning framework that offers efficient GPU acceleration for training neural networks. CUDA, on the other hand, is a parallel computing platform and programming model that allows developers to drastically speed up their code by leveraging the power of NVIDIA GPUs. By combining Torch with CUDA, users can take advantage of the computational capabilities of GPUs for training complex machine learning models. Note that the steps below clone the `torch/distro` repository, which is the original Lua-based Torch with its `th` shell; PyTorch itself is installed as a Python package instead.

In this article, we will provide a step-by-step guide on how to install Torch with CUDA enabled. We will cover the installation process on both Linux and Windows platforms, ensuring that you have all the necessary tools and prerequisites to get started with deep learning using Torch and CUDA.

Note: Before proceeding with the installation, it is assumed that you have a compatible NVIDIA GPU and CUDA toolkit installed on your system.

Installation on Linux:

Step 1: Open the terminal and navigate to the desired installation directory.

Step 2: Clone the Torch repository from GitHub using the following command:

```
git clone https://github.com/torch/distro.git ~/torch --recursive
```

Step 3: Change into the torch directory:

```
cd ~/torch
```

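Step 4: Run the installation script. The `-s` flag tells the script to skip adding Torch to your shell profile, which is why the paths are set by hand in Steps 5 to 7. The flag does not itself switch CUDA on; the script appears to build the CUDA packages when it detects an NVIDIA toolchain on the system: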

```
./install.sh -s
```

Step 5: Once the installation completes, it is necessary to update the environment variables to recognize the Torch installation. Open the ‘.bashrc’ file:

```
nano ~/.bashrc
```

Step 6: Add the following lines at the end of the file:

```
export PATH=~/torch/install/bin:$PATH
export LD_LIBRARY_PATH=~/torch/install/lib:$LD_LIBRARY_PATH
```

Step 7: Save the changes and exit the file. Then update the changes in your current session:

```
source ~/.bashrc
```

Step 8: Verify the installation by running the following command:

```
th
```

If the installation was successful, you will see the Torch interactive shell.

Installation on Windows:

Step 1: Visit the Torch website at https://torch.ch and click on the “Windows” tab.

Step 2: Download the latest Torch installer for Windows.

Step 3: Launch the installer and follow the instructions provided in the setup process.

Step 4: During the installation, make sure to enable the option “Install using CUDA support” to enable GPU acceleration.

Step 5: Once the installation completes, open the command prompt and run the following command to update the environment variables:

```
path %path%;C:\path\to\torch\bin
setx /m PATH "%PATH%"
```

Step 6: Verify the installation by running the following command:

```
th
```

If the installation was successful, you will see the Torch interactive shell.

FAQs:

Q1: What are the prerequisites for installing Torch with CUDA enabled?

A: You need to have a compatible NVIDIA GPU and the CUDA toolkit installed on your system.

Q2: Can I install Torch with CUDA on macOS?

A: As of now, Torch with CUDA support is not officially available for macOS. However, you can use Torch without CUDA on macOS.

Q3: How can I check if my GPU is compatible with CUDA?

A: NVIDIA publishes a list of CUDA-capable GPUs on its website. You can check whether your GPU model is listed to confirm compatibility.

Q4: What advantages do I gain by using Torch with CUDA?

A: Torch with CUDA allows you to leverage the immense computational power of GPUs, resulting in faster training of deep learning models compared to using only CPUs.

Q5: Can I use Torch with CUDA for inference as well?

A: Yes, Torch with CUDA is also used for efficient GPU-accelerated inference, resulting in faster predictions and improved overall performance.

In conclusion, installing Torch with CUDA enabled provides an efficient and powerful environment for training deep learning models. By following the step-by-step instructions provided in this article, you can install Torch with CUDA on both Linux and Windows platforms. Make sure you meet the prerequisites so that you can take advantage of the computational capabilities of GPUs for your deep learning tasks.


PyTorch

In the ever-evolving field of artificial intelligence (AI) and deep learning, researchers and practitioners constantly seek efficient and flexible tools to push the boundaries of what computers can achieve. PyTorch, often hailed as one of the most popular and powerful deep learning frameworks, has emerged as a versatile solution that empowers developers and researchers alike. In this article, we will delve into the intricacies of PyTorch, exploring its core features, benefits, and its role in shaping the future of deep learning.

Understanding PyTorch:

Originally developed by Facebook’s AI research lab (now Meta AI) and today governed by the PyTorch Foundation, PyTorch is an open-source machine learning library for Python that primarily focuses on deep learning applications. Its foundation lies in Torch, an earlier Lua-based framework created by Ronan Collobert, Koray Kavukcuoglu, Clément Farabet, and colleagues. PyTorch differentiates itself by providing an intuitive and dynamic interface which enables users to efficiently build and train neural networks. It is widely appreciated for its simplicity, ease of use, and flexibility.

Benefits of PyTorch:

1. Dynamic Computational Graphs: PyTorch’s dynamic computational graph mechanism sets it apart from many other deep learning frameworks. While static graphs are commonly used in frameworks like TensorFlow, PyTorch’s dynamic graphs allow users to define and modify models on-the-fly. This flexibility facilitates experimentation, easier debugging, and quick prototyping of complex architectures.

2. Easy Debugging and Error Tracing: Debugging deep learning models can be a challenging task. However, PyTorch simplifies this process with its imperative nature. It enables users to easily examine variables, perform standard debugging tasks, and pinpoint errors in the code. This feature significantly reduces development time and increases efficiency.

3. Rich Ecosystem and Community Support: PyTorch’s popularity has grown rapidly in recent years, resulting in a vibrant community of contributors and users. The community actively shares knowledge and resources, making it easier for newcomers to learn and get started. Also, PyTorch provides extensive documentation, tutorials, and a range of pre-trained models, further enriching the user experience.

4. Seamless GPU Support: PyTorch deeply integrates with NVIDIA’s CUDA library, enabling GPU acceleration for computationally intensive deep learning tasks. The seamless GPU support allows users to leverage their hardware efficiently, resulting in significant speed improvements during training and inference.

Applications of PyTorch:

PyTorch has found wide application in various domains, ranging from computer vision to natural language processing (NLP). Its user-friendly, dynamic design makes it particularly suitable for research and development purposes. Some prominent applications include:

1. Image Classification: PyTorch has proven effective in solving image classification problems. With its extensive collection of pre-trained models and efficient training mechanisms, PyTorch allows researchers to tackle complex tasks such as object recognition, image segmentation, and scene understanding.

2. Natural Language Processing: PyTorch’s versatility extends to the field of NLP, where it is used for tasks such as sentiment analysis, language translation, and text generation. Its capabilities, combined with the availability of popular models like BERT and GPT, make PyTorch a preferred choice for NLP researchers.

3. Reinforcement Learning: The dynamic nature of PyTorch makes it an ideal framework for reinforcement learning tasks. Integrating seamlessly with popular reinforcement learning libraries such as OpenAI Gym, PyTorch enables researchers to build and train complex RL algorithms more efficiently.

4. Generative Models: PyTorch provides powerful tools for building and training generative models such as Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs). These models find applications in image synthesis, data augmentation, and anomaly detection.

FAQs about PyTorch:

Q: Is PyTorch suitable for beginners?

A: Yes, PyTorch is beginner-friendly due to its intuitive Pythonic syntax and extensive documentation. Its dynamic nature allows newcomers to experiment with models and understand them better.

Q: Does PyTorch work on both CPUs and GPUs?

A: Yes, PyTorch can utilize both CPUs and GPUs. It benefits from GPU acceleration through CUDA, which significantly improves the training and inference speeds.

Q: Is PyTorch only used for research or is it suitable for production-level applications?

A: While PyTorch gained popularity in research settings, it is increasingly being used in production environments due to its flexible nature and growing community support.

Q: Are there any disadvantages to using PyTorch?

A: Because PyTorch executes operations eagerly by default, it can carry more per-operation overhead than frameworks that compile a whole graph ahead of time. However, this difference is diminishing thanks to continuous optimizations in PyTorch’s backend and to compilation options such as TorchScript.

Q: Can PyTorch models be deployed on mobile devices?

A: Yes, PyTorch provides mechanisms like TorchScript and ONNX (Open Neural Network Exchange) to convert models into formats compatible with mobile devices and other frameworks like TensorFlow.

In conclusion, PyTorch has rapidly gained popularity in the deep learning community due to its simplicity, flexibility, and extensive support. Its dynamic computational graph mechanism, ease of debugging, and GPU acceleration capabilities make it a go-to choice for research and development. With its thriving community and wide range of applications, PyTorch is undeniably empowering the future of deep learning.

Torch Not Compiled With CUDA Enabled in PyCharm

What is Torch?

Torch is an open-source machine learning library that is widely used for tasks such as computer vision, natural language processing, and other machine learning tasks. It provides a high-level interface for building neural networks and other machine learning models, making it easy for both beginners and experts to utilize.

What does “Torch not compiled with CUDA enabled” mean in PyCharm?

PyCharm, a popular integrated development environment (IDE), provides developers with a powerful toolset for Python programming. PyCharm itself has no CUDA-enabled edition; GPU support comes from the PyTorch build installed in whichever Python interpreter the project is configured to use. CUDA (Compute Unified Device Architecture) is what lets that build use NVIDIA GPUs for accelerated machine learning computations.

When this error appears in PyCharm, it means that the PyTorch package installed in the project's interpreter does not have CUDA support. This prevents GPU acceleration with PyTorch, leading to slower performance in machine learning tasks that would otherwise run on the GPU.

Reasons behind the error in PyCharm:

1. PyTorch version: It is possible that the specific build of PyTorch you have installed does not come with CUDA support. A CPU-only build cannot use the GPU; a CUDA-enabled build is required for GPU acceleration.

2. Installation issues: Incorrect installation or setup of PyTorch and PyCharm can also lead to the error, for example when the CUDA-enabled build lives in one environment but the PyCharm project points at another. It is important to follow the installation instructions provided by the PyTorch and PyCharm documentation to ensure all necessary dependencies are correctly installed.
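One hedged way to tell which kind of build is installed is to look at the suffix of `torch.__version__`. Packages installed with pip from the PyTorch package index usually carry a local version tag such as `+cpu` or `+cu118`, while other distributions may have no tag at all, so treat a missing suffix as inconclusive. The helper `build_flavor` and the version strings in the usage lines are illustrative.

```python
def build_flavor(version):
    """Classify a torch.__version__ string by its local-version suffix."""
    # "2.1.0+cu118".partition("+") -> ("2.1.0", "+", "cu118")
    _, _, local = version.partition("+")
    if local == "cpu":
        return "cpu-only"
    if local.startswith("cu"):
        return "cuda"
    if local.startswith("rocm"):
        return "rocm"
    # No recognizable tag: the string alone does not settle the question.
    return "unknown"


if __name__ == "__main__":
    for example in ("2.1.0+cpu", "2.1.0+cu118", "2.1.0"):
        print(example, "->", build_flavor(example))
```

When the result is `unknown`, fall back to checking `torch.version.cuda` inside the interpreter that PyCharm uses.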

Workarounds when PyTorch lacks CUDA support in PyCharm:

1. Utilize CPU: When the installed PyTorch build has no CUDA support, you can still run your machine learning tasks on the CPU. While this might result in slower computations compared to GPU acceleration, it allows you to continue working on your projects without major disruptions.

2. Separate CUDA-enabled environment: Another workaround is to create a separate conda or virtual environment with a version of PyTorch that has CUDA support enabled. By installing the appropriate PyTorch version and ensuring CUDA is properly configured in this separate environment, you can continue to reap the benefits of GPU acceleration.
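Because PyCharm projects can point at any interpreter, it helps to confirm which one is actually running before changing anything. The sketch below uses only the standard library and can be run from inside PyCharm; `interpreter_report` is a hypothetical helper name.

```python
import importlib.util
import sys


def interpreter_report():
    """Report which Python is running and where (if anywhere) it finds torch."""
    spec = importlib.util.find_spec("torch")
    return {
        # Path of the Python executable PyCharm launched for this run.
        "interpreter": sys.executable,
        # File that `import torch` would load, or None if torch is absent.
        "torch_location": spec.origin if spec is not None else None,
    }


if __name__ == "__main__":
    for key, value in interpreter_report().items():
        print(f"{key}: {value}")
```

If the reported interpreter is not the environment where the CUDA-enabled PyTorch was installed, switching the project interpreter in PyCharm's settings is likely all that is needed.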

FAQs:

Q: How can I check if the PyTorch build used by PyCharm has CUDA support?

A: PyTorch’s website and documentation indicate which pre-compiled binaries come with CUDA support. Additionally, you can check the output of `torch.cuda.is_available()` in the interpreter your project uses. If it returns `True`, PyTorch is compiled with CUDA support and can see a GPU. If it returns `False`, check `torch.version.cuda`: a value of `None` means the build is CPU-only.

Q: Can I enable CUDA support in an existing PyCharm project?

A: Enabling CUDA support in an existing PyCharm project depends on the PyTorch build in the project's interpreter. If the current build does not have CUDA support, install a CUDA-enabled build into that environment or point the project at a different, CUDA-enabled interpreter. Creating a new project is not usually required.

Q: Does using CPU instead of a GPU impact performance significantly?

A: Yes, using CPU instead of GPU for machine learning computations can significantly impact performance. GPUs are specifically designed for parallel computations, making them more suitable for certain machine learning tasks. However, many machine learning tasks can still be done efficiently using the CPU, although it might take more time.

Q: Are there any alternatives to PyCharm for GPU-accelerated Torch?

A: Yes, other tools such as Jupyter Notebook, Visual Studio Code, and Spyder can run GPU-accelerated PyTorch code. As with PyCharm, CUDA support comes from the Python environment they use rather than from the editor, so the same CUDA-enabled PyTorch build is needed whichever one you choose.

In conclusion, although PyTorch is a powerful machine learning library, the build installed in a PyCharm project's interpreter does not always include CUDA support. This limitation can be worked around by running on the CPU or by using a separate environment with a CUDA-enabled build. Understanding the reasons behind the error, exploring workarounds, and checking the interpreter configuration can help developers keep using PyTorch in their machine learning projects.
